Making code fasterMicro optimizations and parallel work

I really wanted to leave this series of posts alone. Getting 135 times faster should be fast enough for everyone, just like 640KB was.

Unfortunately, performance optimization is addictive. Last time, we left it at 283 ms per run. But we still left some performance on the table. I mean, we had inefficient code like this:

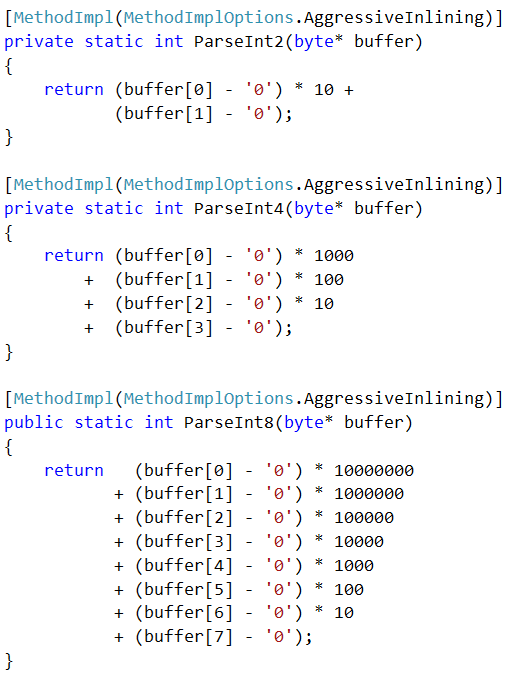

Just look at it. Analysis showed that it is always called with 2, 4 or 8 only. So we naturally simplified things:

Forcing the inlining of those methods also helped, and pushed us further toward 240 ms.

Another cost that we had was date diff calculation, we optimized for the case where the day is the same, but in our dataset, we have about 2 million records that cross the day line. So we further optimized for the scenario where the year & month are the same, and just the day is different. That pushed us further toward 220 ms.

At this point the profiler was basically laughing at us, and we had no real avenues to move forward, so I made the code use 4 threads, each processing the file at different locations.

That gave me: 73 ms and allocated 5,640 kb with peak working set of 300,580 kb

- 527 times faster than the original version.

- Allocate 1350 times less memory.

- 1/3 of the working set.

- Able to process 3.7 GB / sec.

Note that at this point, we are relying on this being in the file system cache, because if I was reading it from disk, I wouldn’t be able to do more than 100 – 200 MB / sec.

Here is the full code, write code like this at your peril.

More posts in "Making code faster" series:

- (24 Nov 2016) Micro optimizations and parallel work

- (23 Nov 2016) Specialization make it faster still

- (22 Nov 2016) That pesky dictionary

- (21 Nov 2016) Streamlining the output

- (18 Nov 2016) Pulling out the profiler

- (17 Nov 2016) I like my performance unsafely

- (16 Nov 2016) Going down the I/O chute

- (15 Nov 2016) Starting from scratch

- (14 Nov 2016) The obvious costs

- (11 Nov 2016) The interview question

Comments

Your code is buggy

stats.Length is 4.

Also, when you fix this bug, you will have to deal with another problem: there is no warranty stats arrays be the same size, so the following code can fail with IndexOutOfRangeException:

You forgot to mention one optimization you made in the final version.

The code now handles the case where the year and month are the same, but the days are different. There's no need to worry about leap year in this case, so the code just has to deal with different calculating the difference of days in seconds as well.

The bookkeeping code I added showed the following for the sample data: same day count = 5,454,657 same month, different day count = 333,066 other = 11,104 (calls the full, slow datetime parse code)

Jesus's bug report means that the summary.txt file is empty since there are no non-zero values in the first 4 slots.

Once you fix Jesus's bug, the file contents are wrong because of another bug.

As I commented in yesterday's article, there's a bug in the Parse() method.

the first line of Parse has the start and end positions swapped:

There's a third bug, this time in the WriteFormattedTime() method.

In the output, the timespan numbers are supposed to be separated by colons, but WriteFormattedTime() uses the wrong indices for writing the seconds value, thereby overwriting the second colon:

After fixing the above-mentioned 3 bugs, app runtime is ~135ms.

You can reduce the runtime further by not creating a Timespan object for each value output in the WriteOutput() method. Instead you calculate the seconds, minutes and hours directly. Along with inlining the WriteFormattedTime() function, this reduces the runtime by about 10-15ms in the parallel version.

With the above change, the runtime is around 120-125ms on my machine.

N.B. I re-read today's entry and found where you mentioned the optimization for the same month - I was distracted by the pretty pix of source code. :)

I found a fourth bug in the code.

In the Main() function, the code that calculates the start and end ranges for each Task to process is wrong.

The integer division used does an implicit floor() of the division result. That messes up the calculation for the end point since the bias is to round down to the nearest integer.

Here's debug output of the start, end, and total entries in the file for each Task.

The previous Task's range end should be the next Task's range start.

The problem is that the last Task's range end stops short of the actual entries end, so the code doesn't handle all the entries in the file.

Fixing the bug shouldn't affect the runtime significantly.

Thanks for writing all the blog entries. It's been an interesting read.

Jesús , Thanks for noticing, after fixing this issue, I'm seeing runs of about 130 ms.

Johnny, Thanks for noticing, I fixed the issue with start/end swap, but I'm not following on where I'm still using leap year if the year/month is the same. The only time this happens is on the full blown date parsing, and it seems that there isn't enough on the datafile to justify more complexity there.

Johnny, Good ideas, I also converted the writes to mmap as well, and write to it in parallel as well. Performance is now ~100 ms.

Some more optimizations which reduce the Parse runtime by about 20--25%

For DiffTimeInSeconds, time conversion code can be replaced with

For ParseInt8, replace with

Johnny, Can you explain what you are doing there?

See https://johnnylee-sde.github.io/Fast-numeric-string-to-int/

and https://johnnylee-sde.github.io/Fast-time-string-to-seconds/

Johnny, That is pretty awesome stuff, thanks

Comment preview