Making code fasterThat pesky dictionary

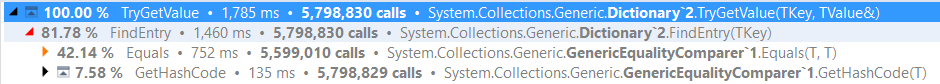

It is a fact of life, if you are looking at a high performance code, a dictionary is probably going to be one of those things that will be painful. Let us see how this look like in our current program:

Are you kidding me?! Here I am worrying about every little bit and byte to try to get the most performance out of the system, and Dictionary is eating almost 50% of my performance?

Let us look more deeply into this:

So that is about 3 μs, which is pretty fast. But it isn’t fast enough.

Now, know that there are about 200,000 unique values in the dictionary (look at the Insert calls in the profiler output), and we have some knowledge about the problem space.

The id that we use has 8 characters, so at most, we can have a hundred million ids. An array of that size would be roughly 762 MB in size, so that is doable. But we also can be fairly certain that the ids are generated in some sequential manner, so there is a strong likelihood that we don’t need all of this space.

So I wrote the following function:

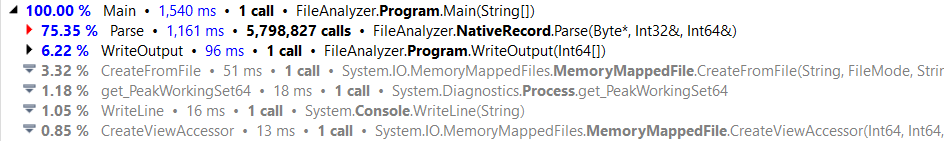

Changed the stats to start with an array of 256 longs, and run it, the results are nice.

587 ms and allocated 2,576 kb with peak working set of 296,484 kb

And our costs look like:

This is single threaded, of course, and it is faster than all the previous multi threaded versions we had before.

More posts in "Making code faster" series:

- (24 Nov 2016) Micro optimizations and parallel work

- (23 Nov 2016) Specialization make it faster still

- (22 Nov 2016) That pesky dictionary

- (21 Nov 2016) Streamlining the output

- (18 Nov 2016) Pulling out the profiler

- (17 Nov 2016) I like my performance unsafely

- (16 Nov 2016) Going down the I/O chute

- (15 Nov 2016) Starting from scratch

- (14 Nov 2016) The obvious costs

- (11 Nov 2016) The interview question

Comments

Often wondered about the exponential growth factor used in collections. If the collection grows from 2^18 to 2^18 +1 then it's just allocated a whole heap of memory for 1 extra entry. This is fine if it grows up to 2^19. The point being that the growth steps start to get significant at larger sizes. Thoughts on a more linear growth strategy? This also assumes that id's start from near zero.

Choices would all revolve around usage expectations. But an example of something that would have "unintended consequences" when some future dude says "sure, we can throw a 100x volume at it. It's always worked before" :)

Andy, That is a well known strategy to handle such cases, and it ensures that at most you'll waste half the space. This is important when the cost of copying the data is significant, so you need to optimize between times you grow and the size you grow with.

With RavenDB, we grow by half up to 1GB, then grow by 1GB every time, for example.

@Andy Pook, doubling in size is the only strategy that minimizes array copy in the general case (matematically proven). The amount of copied memory will be on average equal to 2x the size of collection you are using.

Fixed size grow will save more memory for large collections but will exponentially copy more data. Say if you start at 1000 size grow by 1000 items at a time by the time the colection reaches 1.000.000 items the amount of memory copied will be ~500.000.000 times the size of array item.

So this is not a tradeoff you can make for a generic purpose collection ,you you might waste half the memory when doubling size, but with the fixed sizing approach you can waste exponentially increasing memory bandwith.

@Pop Catalin I understand the theory. Keywords: general purpose and tradeoff. It's "the right thing to do" for the general purpose case when the collections are "small". The point I was failing to make is that it has consequences as the collection grows. It will work very well until the data set grows just enough such that the next doubling starts eating multi-GBs of memory or blows through the 32bit processes memory space. If you know that the data set will only ever be several 100K all is good. If it gets to the 100's or 1000's of millions different strategies must be explored. As Oren says, grow this way until a certain limit then grow this other way. Maybe it could use "buckets" instead of a contiguous array...

The point, I guess, is that you must define your expectations, know your domain, before you choose your strategies. Optimizing some aspects will mean the solution will behave badly if the conditions change.

@Pop Catalin, I have just been polishing my pedantry badge, so...

The data copied if you use fixed-size growth is only quadratic in the size of the data, not exponential.

@Andy Pook the rough intuition is that if you've used more than x GB then it's reasonable to assume you're going to need about the same again, absent more information about your allocation pattern. Or, put differently, if you are within a factor of 2 of exhausting your system's capacity, you're probably already in trouble, so why try harder?

There's a related heuristic that things tend to last about as long again as they've already existed, which I'm fairly sure is in one of Taleb's books although I can't find a reference after a quick Google.

Of course if you know more about your allocation patterns then go can do better.

Comment preview