Reviewing Lightning memory-mapped database libraryOn disk data

As mentioned, the data in LMDB is actually stored in page. And I am currently tracking through the process in which we add a new item to the database. The first thing that happen is that we allocate a leaf page. I think that I’ll have to go over how pages are allocated now, which should explain a lot about how LMDB reuses disk space.

A new page is allocated by first finding the oldest transaction that is still active. This is how LMDB implements MVCC. Basically, any free page that is older than any existing transaction is eligible for reuse. The first db (although a better term would be to call it btree, or maybe a table) contains the free pages, and at first we search there for an available page. If we can’t find such a page, we use the end of the file for that. This happens in mdb_page_alloc.

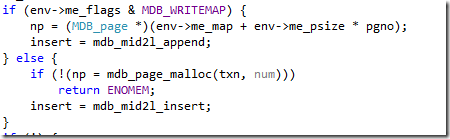

An very interesting aspect is the fact that LMDB allows direct memory writes (with the additional risk of corrupting the db if you messed the data), interestingly, this is done in the following code:

If the options allows directly memory writes, you get a point to the mmap file. Otherwise, LMDB will allocate a page (or re-use one that has already been allocated).

I am not really sure what is going on with the insert yet. This is a function pointer (delegate for C#). And it select which behavior will happen later on. I am just not sure what those two different function do yet.

I think I got it, the key is here:

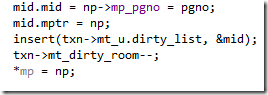

You can see that we use the insert method to add the mid variable to the dirty list. So if we give you a direct pointer, we append it to the list. But if we give you allocate a page, we need to add it in order.

The mdb_mid2l_insert will add an item to the list in the appropriate location to ensure sorting. I think that this is done so when we actually write the dirty page to disk, if we do that using file I/O, we will do that in ascending file order, so we can get good seek times from the disk. A new page has 4,084 bytes available for it (12 bytes are taken by header data).

A database is actually created the first time data is written to it. The root page is allocated and recorded. And then the data is added to the page.

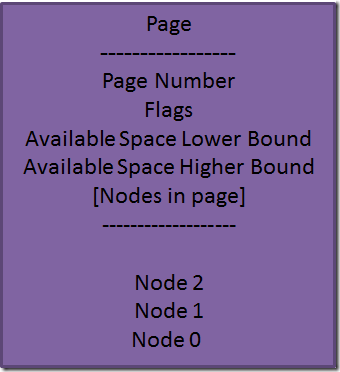

As you might recall, data inside a page is kept in a sorted list. But remember that the data is also stored on the page, and there is the whole notion of overflow pages, so I think that I am going to have to draw a picture to figure out what is going on.

This is more or less how it works. But I don’t think that I really get it. Note that there are two collections here. First is the positions of nodes in the page, and the second is the node themselves. The reason we have this complexity is that we need to maintain both of them in an efficient manner. And we need to do that without too much copying. Therefor, the list of nodes in the page is in the beginning, growing downward, and the actual nodes are in the end, growing upward. The page is full when you can’t fit anything between those two lists.

A node, by the way, is the LMDB internal name for a key (and maybe data). But I think that I might have an error here, because I don’t see the code here to actually add nodes to the page in a sorted fashion, so it might be doing linear search on the content of a node. I am not tracking through the code for adding a second value into a database. And I think that I have to be wrong about linear node search. The code I was looking at was invoked at the the new db creation section, so it might be taking shortcuts that aren’t generally available.

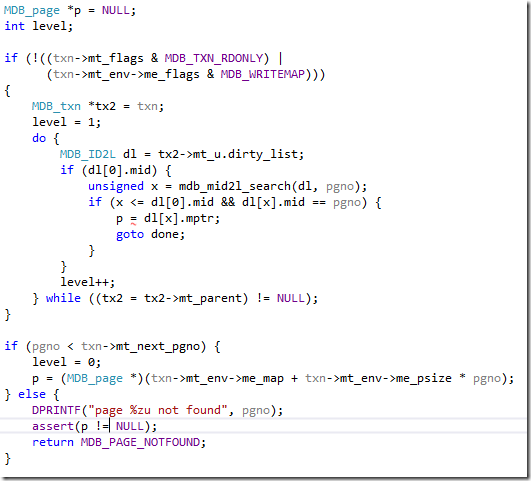

At any rate, the logic for adding an item goes like this… first we need to find the appropriate page. And we do this by starting from the root and asking for the actual page. Which gets us to mdb_page_get, where we have the following:

The really interesting part here is that each transaction have a dirty list of pages, so if you modified a page, you’ll get your own version, even before the data was committed. Otherwise, we will just hand you the current version. This is quite nice.

And then we get to the already familiar mdb_page_search_root function, and I can confirm that the nodes in a page are actually sorted, which only make sense. I am not sure where that happens yet, but I have got the feeling that I am going to see that in a bit.

Okay, I am going to go on a bit of a rant here, mostly against C, to be honest.

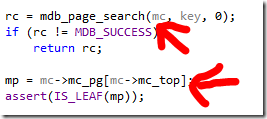

Take a look at this code. mdb_page_search does something, but what it does it mutate the state for the cursor (mc), and you are then going to access the mc->mc_pg[mc->mc_top] to get the actual result you wanted. To make things more interesting, the is also a mc->mc_ki, which is the index of the node inside the node. And it drove me crazy, because I just couldn’t see the association between those three values, especially because I am so not used to this type of code that I never even considered this as a possibility.

At any rate, now I know how it gets to insert things in the right order. When doing mdb_page_search, it calls to mdb_node_search, which does the appropriate setup to tell the next call where it need to actually do the insert to get things in a sorted fashion. I am currently in the mdb_cursor_put, which is trice cursed and really hard to follow. A 400+ lines method with gotos galore.

But I am in the section where we are actually going to be writing a new value. (new_sub: goto header, if you care). And the code is pretty straight forward from there.

Next up, I want to see how it handles a page split, but that is a subject to another post.

More posts in "Reviewing Lightning memory-mapped database library" series:

- (08 Aug 2013) MVCC

- (07 Aug 2013) What about free pages?

- (06 Aug 2013) Transactions & commits

- (05 Aug 2013) A thoughtful hiatus

- (02 Aug 2013) On page splits and other painful things

- (30 Jul 2013) On disk data

- (25 Jul 2013) Stepping through make everything easier

- (24 Jul 2013) going deeper

- (15 Jul 2013) Because, damn it!

- (12 Jul 2013) tries++

- (09 Jul 2013) Partial

Comments

Again I'm surprised at your confusion over mdb_page_search() since the function documentation says exactly what it does and how.

It would be illustrative for you to read Martin Hedenfalk's btree.c code, and see how he managed parent page linkage. His code is quite clumsy, requiring multiple meta-structures for pages to link things together. Most B-tree implementations have bi-directional links between parent and child pages in a tree. What most implementors fail to realize is that the upward links are only needed when you're actually performing a traversal. If you store those pointers in the pages themselves you're wasting 4 or 8 bytes per page, multiplied by however millions of pages are in the DB. In btree.c's case you're wasting space in alternate meta-structures and management overhead for those structures. (pageparent, dirtypage ptr, dirtypage head ptr, all kinds of garbage.)

Alternatively, look at commit 2e3bc39fa94f21d692d8e94183f57aef9122c487 which is where I ripped out the last remnants of the original page linkage code and replaced it with the streamlined cursor code.

Again, if you want to learn what the code does and why, you have to read not just the current version of the code but its actual change history and evolution to understand it. Read Martin's btree.c to see where we came from, read my papers to see where we went and why.

(forgot to mention - in a copy-on-write tree, like LMDB and pure append-only B-trees, you obviously cannot store parent links in the pages themselves, which is why Martin's code has all the extra scaffolding around the actual data pages. But as LMDB shows none of that extra cruft is needed.)

Howard, Your definition of how and mine differ. In particular, I find it hard to follow the number of states that you have to keep track of, in terms of the number of variables, the length of the function and the number of other data that is being manipulated. For example, the cursor stack is manipulated sometime on the page side and sometimes on the ki size. I wonder, was there a reason not create a struct of page pointer and indx_t, instead? That way both items would be in the same place. I think it would have made it easier to follow.

Also, note that I am not reading to code to understand how to use it. For that, I think that the docs do a pretty good job of explaining things. I am reading the code to understand how it _works_.

Howard, Regarding the copy-on-write & parent tracking. I agree, not having to track the parent pointer really simplify things. I like how this change means that a whole lot of complexity is just... gone.

So by now you should understand, your questions should not be "was there a reason" since obviously everything in LMDB was designed a specific way for a specific reason.

You're in an obvious mindset, one encouraged by contemporary object-oriented programming education, of thinking about data as abstract blobs instead of what it really is inside a computer - a sequence of bytes. You really need to spend some quality time writing in assembly language to break out of this mindset because it's incredibly harmful and will always hinder you from writing efficient code.

The reason why the cursor stack exists as a separate array of page pointers and cursor indices is simple - a page pointer is 4 or 8 bytes long (depending on 32 or 64 bit machine architecture) and a cursor index is only 2 bytes. If you define a single structure and declare an array of these structs you will be wasting 2 or 6 pad bytes per array element due to structure padding. The reason none of this organization bothers me is because I only see data as bytes, and I associate them together mentally when I need to, and disaggregate them as I need to.

Logically they are a unit, and in an abstract description of algorithms we would talk about them as such. But logical abstractions should only be used for descriptions between humans, they have no business existing in real code. They don't help the computer at all.

Howard, I think that you are seriously under estimating the mental cost of what you are doing. With regards to the padding, can't you use #pragma pack(1) to control that? The data is all going to be sitting in one big array anyway, so I don't see the issue there.

Logically it's all the same. I can describe this in English "A cursor is a stack of page pointers and page indices." Whether that's typedef struct celem { MDB_page *mp; indx_t ki }; celem stack[CURSOR_STACK]; or the current LMDB structure, the description is identical.

Using #pragmas is highly non-portable. Also, on the compilers where it works, you will get horribly inefficient code since the majority of accesses will be unaligned.

We write code for machines to execute. That's the purpose. I wrote LMDB to have code that runs well. In fact, it runs better than everything else out there. The mental cost is inconsequential compared to the amount of runtime saved. If you think that cost is too high for you, cool, not my problem. If you want to excel at something you have to pay attention to details. If you want to be free of the burden of paying attention to details you will never produce anything better than mediocre results. I expect better, from myself and from the things I work with.

You don't see the issue because you don't understand the machine architecture. Again, like I said, you need to spend some quality time writing in assembly language. That is, if you truly want to understand what works and why. You need to read some CPU architecture books as well, and work with the actual machine language.

After you've had that experience none of this will appear difficult or costly.

Just wanted to say, I love the banter going back and forth here. Both sides are brutally honest, and it's amusing to watch.

My 2 cents: C programmers often write code for machines first, humans second (or not at all). It's why C programs are very efficient while difficult to comprehend by anyone other than the author.

This is affirmed by Mr. Chu's statement,

"We write code for machines to execute. That's the purpose...it runs better than everything else out there. The mental cost is inconsequential compared to the amount of runtime saved."

It's pretty ironic to see Ayende on the receiving end of being scolded for not being low-level enough - THE guy who is known for preaching how to limit your abstractions: http://ayende.com/blog/154209/limit-your-abstractions-refactoring-toward-reduced-abstractions

Comment preview