Reviewing Lightning memory-mapped database libraryPartial

Continuing in my quest to learn more, I decided to go over the LMDB codebase.

LMDB is:

LMDB is an ultra-fast, ultra-compact key-value data store developed by Symas for the OpenLDAP Project. It uses memory-mapped files, so it has the read performance of a pure in-memory database while still offering the persistence of standard disk-based databases.

It has some interesting feature set, and a really small codebase. I am anxious to see how they managed to do so much.

Interestingly, the data model for LMDB is quite different from the usual append-only / transaction log. Instead, it allows only a single concurrent writer, and modify the data in place. There also appears to be a lot of dire warnings regarding usage of long transactions, since they would result in increased file size, presumably because the db couldn’t find the pages the scavenge because they are locked by ongoing transactions.

One thing that I should note already, the code (I am currently about 1/3 of the way of lmdb.h file) is very well commented, and it explains a lot about what is going on. If the rest of the code is like that, this is going to be really nice to read. Okay, I take it back. The main files seems to be lmdb.h and mdb.c. The first one is 1,300 lines and the second is over 7,500 lines. Admittedly, this is impressive in the sense that pretty much everything is done there, and there are a lot of docs. But damn, I wish this was better organized. Right now my head feels like I need to pop up and take a breath.

I read ~2,000 lines of code so far, and I haven’t found anything that does something. It is all headers or comments or macros up until now. I skipped over to the code, and it is really hard to understand what is going on.

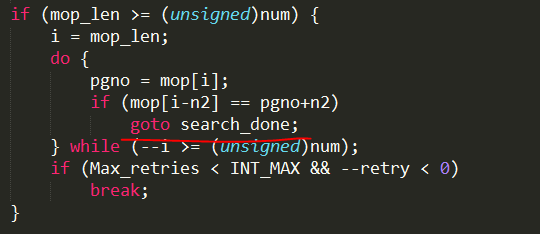

Take this code snippet:

It shows a lot of the things that make it hard to work with. effectively random naming convention (under_score, Pascal_naming, SHOUT_NAMING, etc), the goto trick is used WAY too much, lovely variable names such as n2. This is part of a method that goes on for something like 150 – 200 lines. And it include the following code:

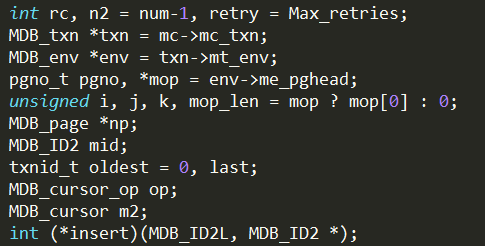

Quick, can you tell me how many variables are declared here? And note that they are all local variables. I counted it twice, once getting to 17 and once getting 18.

That is just too much, I am not going to go any deeper. The leveldb codebase was easy to follow, it had structure. This codebase is just a code dump. It might be a really good codebase, for what it needs to do, but I literally can’t follow it.

More posts in "Reviewing Lightning memory-mapped database library" series:

- (08 Aug 2013) MVCC

- (07 Aug 2013) What about free pages?

- (06 Aug 2013) Transactions & commits

- (05 Aug 2013) A thoughtful hiatus

- (02 Aug 2013) On page splits and other painful things

- (30 Jul 2013) On disk data

- (25 Jul 2013) Stepping through make everything easier

- (24 Jul 2013) going deeper

- (15 Jul 2013) Because, damn it!

- (12 Jul 2013) tries++

- (09 Jul 2013) Partial

Comments

If you need an idea for a future blog post: I would care to read your opinion on memory mapped files as a design technique. There are important pros and cons and I believe you've got some experience with that topic.

Tobi, Can you create a list of questions around this?

Ayende, my key question would be: when to use it and when not?

What are the most important advantages and disadvantages? Why are using well known products like SQL Server custom caches instead of memory mappings? Why are other products like LevelDB using mmap instead of custom caches? Are they well suited for managed code? How does performance compare with other techniques? Is it possible to use them with mutable data structures and retain crash recoverability? What guarantees does the OS make regarding ordering and durability?

Hope that helps.

Wow that is some crazy looking code.

I count 17 variables up to the line "int (*insert)(MDB_ID2L, MDB_ID2 *);". However, my c/c++ is a bit rusty so I'm not even sure how to read that line.

Some people are very clever and can create very fast running and full of amazing features code. But at the same time no one but them and computers understands this code. I wonder what you think is better. Mediocre/good programmer trying to write clean code (backed up by tests) or very clever programmer that creates code like this?

Jedak, That is the C function pointer. Similar to a C# delegate.

Jiggaboo, In most code bases, there are hotspots that deserve special attention. I get why you would go deep there. But with most of the codebase? I don't get that. I would rather see good code.

I can't understand why would anyone need so fast, custom and extremely optimized database engine for an LDAP project. All RDBMSes or well-known document databases seem to be perfectly capable of handling all LDAP queries you could imagine so why not use them instead of writing another one?

Rafal, I am not sure, probably you want to ask them.

All the RDBMSes you know of are at least 3 orders of magnitude slower than LMDB. If you want to build a complex system you need your core components to be perfectly lean, otherwise the bloat that accumulates as you go up higher levels of abstraction begins to outweigh your intended functional code.

More here http://lists.andrew.cmu.edu/pipermail/cyrus-devel/2013-July/002851.html

Also, you should look at the history of the changes. E.g., read a version of the code from 6 months ago, or a year ago. Things have gotten a lot more complex recently than originally intended.

Howard, RDBMS tend to do quite a bit more than what LMDB does. To start with, pretty much any RDBMS that I can name will allow me to do concurrent writes.

Howard, Do you have a recommended version that I can look at?

I can tell that from looking at the history, it appears that there are only 2 major contributors to LMDB (you and Hallvard Furuseth). There are a total of 6 contributors, period. And all the other 4 contributors added 6 commits overall. I would say that at least part of the reason for that is that the codebase (as I currently read it) is so hard to get into.

Howard, Based on your suggestion I checked the code from Nov 2012, but it still has a lot of the same issue. Case in point, you have mdb_cursor_put method. That is 400+ lines method, it has 10 gotos and is really really hard to follow.

While I agree with half of this, you need to learn more C before commenting on C code. Different naming styles are the C way of doing namespaces. INT_MAX is a macro constant from a system header, Max_retries = enum constant. leveldb uses C++, which offers better ways. And C++ doesn't require all declarations to be at the beginnig of a scope, unlike ISO C90 which mdb.c is written to be compatible with.

Unfortunately I'm hoping to avoid reorganizing the code too much for readability for a while. The less it changes, the easier bughunts get: E.g. 'git blame' on a wrong line will often show the commit which introduced the trouble - unless the code gets reorganized.

Hallvard, Good point about the naming stuff, thanks for informing me.

I still think that this is stupid, but I'll refer the comment to C style naming rather than this codebase.

(Sent this reply in private email before...)

"Howard, RDBMS tend to do quite a bit more than what LMDB does. To start with, pretty much any RDBMS that I can name will allow me to do concurrent writes."

But not necessarily with any good performance. It's all a shell game. At the end of the day somebody has to serialize all of the writes, whether into a write-ahead-logger or some other serial data structure. If you fall into the trap of ultra-fine-grained locking you spend more CPU time in lock code than in any other functional code. If you have deadlocks or conflict detection and recovery you spend more time repeating work.

Compare our throughput with BerkeleyDB to our throughput with LMDB. http://wiki.zimbra.com/wiki/OpenLDAP_MDB_vs_HDB_performance

The headache of fine grained high concurrency locking isn't worth anything.

Oren Eini (Ayende Rahien) wrote:

Not in particular. You could start at the initial commit into git, and compare it to Martin Hedenfalk's btree.c, which is where this code started from. Then browse forward in time thru the logs and see what got added and why.

I would say the main reason is that until a few days ago very few people had ever heard of it. And another reason is that C programmers have become quite rare these days, with most programmers using Java and other "easier" languages.

Another reason is that LMDB already does 99% of what it was designed to do. (The only features remaining to flesh out are for FIXEDMAP object relocations, and a couple other ancillary functions.) After that I expect the code to stop evolving while we plug it into more higher level systems.

(Another thought on contributor base - this is a very tiny piece of code. It doesn't need a lot of contributors. The entire BSD 4.2 release was written by only 4 people, after all. A large number of contributors is neither necessary nor a reliable indicator of anything.)

Howard, Good point about the committer size. I worked on a few successful projects where I was basically the lone committer for the entire time.

Howard, it is possible to get multiple writers with very fine-grained concurrency and low overhead. The trick is to use MVCC and an execution model that doesn't change any data structures until the commit so you don't need exclusive access during writing, only during committing.

I worked on a commercial SQL database (ANTs ADS) where we did locking at the cell level (that is, one value in one row) and we had good benchmarks for high-volume transactional work loads involving thousands of simultaneous readers/writers.

David: sure, but what you consider "low overhead" is very different from what I consider it to be. LMDB is already MVCC, and it has very high write throughput compared to other solutions. Look at our benchmark results...

In fact there's no other practical way besides MVCC to approach an mmap-based database. Since the OS can flush modified pages back to disk at arbitrary times, you cannot use a traditional update-in-place DB access method. (Or, you could, if you use mlock/munlock everywhere to force pages to be released only at specific times, but then you introduce so much overhead you lose the benefit of using mmap in the first place.)

And ultimately, any approach that uses locks of any kind cannot scale reasonably. LMDB's read performance scales perfectly linearly across arbitrarily many CPU cores, where "linear" means 99.9%, not just 60% or whatever some other "linear scaling" DBs achieve.

It's definitely a tradeoff. I know we could have slightly faster write performance in LMDB if we add a bit of finer-grained locking in certain areas. I've tested this in a separate branch of code already, but we had to give up read performance as a result of it. And anything that harms read performance is forbidden in my code.

I've seen the benchmarks. In fact, I found this blog post while looking for more information about LMDB. I've been thinking for some time about writing a key-value store that works with mmap and MVCC that uses copying rather than updating pages so you get streaming writes, minimal fragmentation, and compact data structures (rather than having to leave space for updates) so I was very interested in what you've done.

I was planning to take a look at the code some time, but frankly I'm a little discouraged by Ayende's code review so I may not.

David, LMDB is quite interesting. I don't like the way the code is structured, and I can't speak about how robust it is (no experience in judging that in C codebases), but from results perspective, I think that this is going to be a good choice.

I would say Ayende's code review is educational for higher level language programmers, but he's clearly not an experienced or fair judge of C code. I've been writing C code for the past 28 years, and my projects have consistently been compact, efficient, and crash-free. (Working on communications for the Space Shuttle, all of these are absolute requirements. In fact my software allowed communications to be maintained even when the dedicated ground receivers failed on a mission in 1994. You don't hear that stuff in the news, but NASA still uses my software to this day.)

LMDB's behavior is thoroughly characterized and documented. It has been rigorously crash-tested at companies like Verizon and T-Mobile and exceeded all of their requirements, unlike any of their previous systems. (In fact, if you're a customer of any of these companies, you've already been using OpenLDAP and LMDB for at least the past 6 months.)

It's not even feasible to fully characterize some of these other systems because they are so large and have such complex APIs and so many moving parts. The combinatorial explosion couldn't even be tested in multiple consecutive lifetimes. LMDB is provably better than carrier-grade, we have the test reports that show it.

On the topic of code complexity and comprehensiblity, I've got a number of reactions.

1) A lot of the fretting over this comes from the lore of misguided managers who believe programmers are interchangeable, and you can easily replace an experienced older engineer with a young cheap engineer without paying any consequences. Clearly this is BS, continuity and experience are invaluable. And the interesting thing about open source is that no one can fire you from a project and replace you with a novice. (I've been working with OpenLDAP since 1998...)

2) The LMDB code is heavily commented. Anything noteworthy or non-obvious has already been described in great detail. (Much of Ayende's difficulties are self-inflicted, he chose to skip over the comments and published documents rather than read them. If our roles were reversed I would have read every available description in addition to the code itself, to learn as much and as quickly as possible.)

3) Sometimes things are simply hard. Hard to formulate, hard to implement, and hard to understand. That doesn't mean they're not worth doing. Indeed, it was nearly 2 years between the time we first started openly discussing LMDB and the first line of code being written. See the email threads here: http://www.openldap.org/lists/openldap-devel/200905/msg00036.html http://www.openldap.org/lists/openldap-devel/200909/msg00016.html http://www.openldap.org/lists/openldap-devel/201103/msg00006.html http://www.openldap.org/lists/openldap-devel/201106/msg00009.html http://www.openldap.org/lists/openldap-devel/201108/msg00001.html http://www.openldap.org/lists/openldap-devel/201109/threads.html http://www.openldap.org/lists/openldap-devel/201109/msg00007.html

As you can see we were originally just thinking along the lines of a slightly revised BerkeleyDB. A lot of sustained thought went into it and the idea evolved a lot over time. We wandered down a few dead-ends too before arriving at the solution we have today.

4) The original code had a single author. A 2nd committer joined in a couple days after the code was made publicly available (2011-06-27 to -29). To me that's an existence proof that an experienced coder, previously unfamiliar with the codebase, can get up to speed on it without too much hassle. Opinions from a non-C-coder that this is hard to read are basically without merit.

(repasted for clarity)

http://www.openldap.org/lists/openldap-devel/200905/msg00036.html

http://www.openldap.org/lists/openldap-devel/200909/msg00016.html

http://www.openldap.org/lists/openldap-devel/201103/msg00006.html

http://www.openldap.org/lists/openldap-devel/201106/msg00009.html

http://www.openldap.org/lists/openldap-devel/201108/msg00001.html

http://www.openldap.org/lists/openldap-devel/201109/threads.html

http://www.openldap.org/lists/openldap-devel/201109/msg00007.html

Howard,

1) I whole heartedly concur with you regarding experience. In my projects and in my company, we try very hard to optimize for the exerpience developer. Especially if that dev is already familiar with the codebase.

That said, we are also targeting outside contributers, and that means that we want to create a codebase that encourage people to jump in and contribute. We generally do that by layering stuff. The lower levels can be fairly gnarly, since they deal with low level stuff (mem allocations, scheduling, etc).

But on top of that, we have a lot of stuff that we do that is really accessible, and we design upfront to make it easy for someone to join and contribute something.

Regarding being fired from OSS, that actually can happen. See: http://www.youtube.com/watch?v=ZSFDm3UYkeE

Although that is pretty rare.

However, having accessible code means that you don't rely on a small set of people for the project. That brings back the bus factor, the ability of outside people to debug & fix things, etc.

2) Oh, I absolutely agree that I bashed my head against the wall. On the other hand, I think that I have gained a better understanding about how LMDB works than I would have otherwise. That said, I have since gone over those docs & comments, and they explain stuff, sure, but the code is the source of truth, and I don't think that I would have known nearly as much by just reading them.

All of that said, the codebase does have issues. In particular, there is a lot of code duplication. I pointed out some of which in the posts. I am willing to concede that you're by far a more knowledgable person with regards to the low levels of CPUs, machine code & C. And I would even agree that in order to get the absolute best performance, you accept certain level of ugly code. Code duplication is by far the most serious one, but the sheer complexity of some things (especially VERY long methods) make it a very hard to approach codebase.

Now, I have spent a few weeks digging into it, and I can see how it is structured now. But it is still hard.

3) Thanks for the links, they provided useful background information.

re: layering - it should be quite evident that LMDB is low level. Indeed, you are apparently author of a database and yet this is the first time you've had a peek inside an actual database engine. If someone is not comfortable doing the detail work that it involves (e.g. http://www.openldap.org/lists/openldap-devel/201109/msg00008.html ) then it clearly is not meant for that person. There's plenty of higher level code in the OpenLDAP Project for new contributors to get into.

Howard, I have been reading actual database engines for quite some time. CouchDB, for example, http://ayende.com/blog/3627/reading-erlang-couchdb-from-rest-to-disk-in-a-few-function-calls That is circa 2008, IIRC

And even for something that is low level, there is a whole lot of chance for separating things outs. You already have something like that with mdb XXX op

Just to clarify, Howard, I didn't mean that Ayende had put me off of LMDB as a piece of software, but that he had discouraged me from reading the code for educational purposes. And I wasn't endorsing his comments about the code, just mentioning that his post had influenced me in a certain way.

But like you, I'm an old-school C programmer and your defense of the code is vigorous and heartfelt so maybe I'll take a look anyway. We old-timers have to stick together against these new-fangled languages and their programmers. Garbage collection!? Well, hell, son, that takes half the fun out of programming! :)

It seems LMDB has no way to increase size of db? Size it fixed at opening and if you want to increase at some point you have to throw everyone out then increase the size??

Anders2020, Yes.

Ayende what happened to Voron.

Andres, Nothing, we are working on it.

Voron; Is it possible to have a look at the code. I have to be honest I might not qualify for the requirements your specified for it. But you king of piqued my appetite

Comment preview