RavenDB indexing optimizations, Step II–Pre Fetching

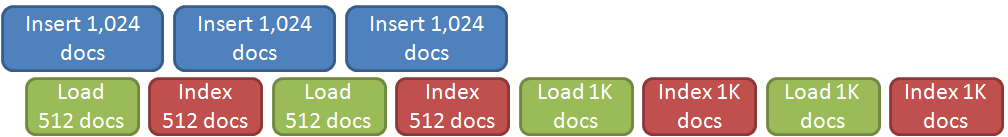

Going deeper into our indexing optimization routines: when we last left off, we had the following system:

This was good because it could predictively decide when to increase the batch size and easily smooth over spikes. But notice where the costs are.
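The batch-sizing behavior from the previous step (grow batches while a backlog builds up, shrink them once it drains) can be illustrated with a rough sketch. This is a minimal sketch assuming a simple doubling/halving rule. `BatchSizer`, `next_size`, and the size limits are hypothetical names and values, not RavenDB's code. The post describes RavenDB's version as predictive, and this purely reactive rule is a simplification.

```python
from dataclasses import dataclass


@dataclass
class BatchSizer:
    """Hypothetical adaptive batch sizer; a simplification, not RavenDB's algorithm."""
    min_size: int = 128
    max_size: int = 16 * 1024
    current: int = 128

    def next_size(self, pending: int) -> int:
        """Pick the size of the next indexing batch given how many docs are waiting."""
        if pending >= self.current * 2:
            # Backlog is large: double the batch (up to the cap) for better I/O throughput.
            self.current = min(self.current * 2, self.max_size)
        elif pending < self.current // 2:
            # Backlog has drained: halve the batch (down to the floor) so a past
            # spike does not keep us working with oversized batches.
            self.current = max(self.current // 2, self.min_size)
        # Never ask for more documents than are actually pending.
        return min(self.current, pending)
```

Growing only when the backlog is at least twice the current batch, and shrinking only when it falls below half, gives the sizer a dead band, so a short burst of writes does not make the batch size oscillate.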

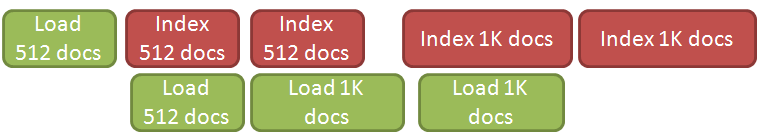

The next step was this:

Prefetching, basically. We noticed that we were spending a lot of time just loading data from disk, so we changed our behavior to load documents while we are still indexing. When the next indexing batch starts, we usually find all of the data we need already in memory and ready to rock.
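The core idea, overlapping disk loads for the next batch with indexing of the current one, can be shown as a producer/consumer pipeline. This is a minimal sketch, assuming a background thread and a bounded queue. `prefetching_batches` and the `load_batch(n)` callback are hypothetical names for illustration, not RavenDB APIs.

```python
import queue
import threading
from typing import Callable, Iterator, List, Optional

Batch = List[str]


def prefetching_batches(load_batch: Callable[[int], Optional[Batch]],
                        depth: int = 1) -> Iterator[Batch]:
    """Yield batches to the indexer while a background thread loads the next ones.

    load_batch(n) is a hypothetical loader that returns batch n, or None when
    nothing is left. depth bounds how many loaded batches may wait in memory.
    """
    ready: "queue.Queue[Optional[Batch]]" = queue.Queue(maxsize=depth)

    def producer() -> None:
        n = 0
        while True:
            batch = load_batch(n)  # disk I/O happens here, off the indexing thread
            ready.put(batch)       # blocks while `depth` batches are already waiting
            if batch is None:      # None is the end-of-data sentinel
                return
            n += 1

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = ready.get()        # usually already loaded by the time we ask
        if batch is None:
            return
        yield batch
```

A caller simply iterates, e.g. `for batch in prefetching_batches(load): index(batch)`. While `index` runs on one batch, the producer thread is already reading the next one. Keeping `depth` small caps how far ahead the prefetcher can run.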

This gave us a pretty big boost in how fast we can index things, but we aren't done yet. Making this feature viable took a lot of work. For starters, we had to make sure we wouldn't take too much memory, that we wouldn't impact other aspects of the database, etc. Interesting work all around, even if I am only focusing on the high-level optimizations here. There is still a fairly obvious optimization waiting for us, but I'll discuss that in the next post.
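One of the constraints mentioned above is not letting prefetching take too much memory. One simple way to express that is a prefetch buffer with a byte budget: the prefetcher stops reading ahead once the budget is full, and documents handed to the indexer release their share. This is a minimal sketch, assuming document size is approximated by the length of its raw bytes. `BoundedPrefetchBuffer`, `try_add`, and `take` are hypothetical names, and this is not a description of how RavenDB actually enforces its limits.

```python
from collections import deque
from typing import Deque, List, Tuple

Doc = Tuple[str, bytes]


class BoundedPrefetchBuffer:
    """Hypothetical prefetch buffer capped by an approximate byte budget."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._docs: Deque[Doc] = deque()

    def try_add(self, doc_id: str, data: bytes) -> bool:
        """Buffer a prefetched document unless it would exceed the budget.

        Returning False tells the prefetcher to stop reading ahead; the
        indexer can load the remaining documents itself later.
        """
        if self.used_bytes + len(data) > self.max_bytes:
            return False
        self._docs.append((doc_id, data))
        self.used_bytes += len(data)
        return True

    def take(self, count: int) -> List[Doc]:
        """Hand up to `count` buffered documents to the indexer, freeing their budget."""
        out: List[Doc] = []
        while self._docs and len(out) < count:
            doc_id, data = self._docs.popleft()
            self.used_bytes -= len(data)
            out.append((doc_id, data))
        return out
```

Refusing a document when the budget is full, rather than evicting older ones, keeps the buffer in load order, so whatever the indexer takes next is always the oldest prefetched data.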


Comments

I wonder why you have to load any data at all. If the docs have just been inserted or modified, they should already be in memory, so you can index them without any loading. Maybe you should index the most recently modified documents first and catch up with the remaining ones later? That way the 'hottest' documents would be indexed first, without any additional loading cost.

...and the cache wouldn't be polluted with older documents loaded just for indexing.

@Rafal

You would also have to be mindful of "starvation" of the older documents. If you have a steady stream of new documents coming in, eventually you have to just say "enough guys, I've got to go back and get these other documents in."

Oops, my response disappeared somehow, so let's try again:

1. If your indexing can't keep up with the rate of modifications and there's starvation, then it doesn't matter how you order documents for indexing: you won't be able to index them anyway, and some will always 'starve'.
2. But if you start with the wrong order and have to load documents because they are not in the cache, then you pay a double performance penalty: the cost of loading the data, and the even greater cost of throwing away already-cached documents.
3. IMHO, in normal operation you should never have to load documents to be indexed; they should always already be in the cache. So I'm not sure why Ayende is talking about the cost of loading documents. Maybe this applies to batch processing or the initial data load.

@Rafal

Take a look at the post in the queue titled "RavenDB indexing optimizations, Step III–Skipping the disk altogether"; I think it'll answer some of your questions.

Rafal, consider what happens when you have existing data in the database and you add an index. You don't have all of the previously created documents in memory. Also, indexing by most recently modified means that you run into a LOT of issues just tracking what you have and haven't indexed, especially once you add the notion of updates during indexing.

Rafal, docs loaded for indexing are not actually cached. And we have steps in place to avoid starvation: we move to higher and higher batch sizes, optimizing our I/O throughput along the way.

And I am talking about things like adding an index, or what happens after a restart, etc.

Thanks for the explanation, Ayende. In case anyone thought so, I'm not nitpicking, just curious about how Raven manages its resources during periods of high load.

And another question: how do you approach monitoring Raven's performance? I'm talking about automated, continuous collection of key performance data, such as updates/sec, docs indexed/sec, cache size and hit ratio, indexing lag, number of sessions, transactions, Esent performance, memory, etc. I've recently been quite busy monitoring application and server performance in the Windows ecosystem and was wondering how Raven handles these things compared to, for example, MS SQL. And btw, I've had some pretty nice results using NLog for collecting performance data, which might be useful for RavenDB too.

Rafal, we have several ways of doing that. We expose a number of performance counters, and we also provide the /admin/stats and /databases/DB_NAME/stats endpoints, which expose a lot of detail about how RavenDB works internally.
