RavenDB indexing optimizations, Step I–dynamic batches

One of the major features in RavenDB was a significant improvement in indexing speed. I thought it would be a good idea to discuss it in detail.

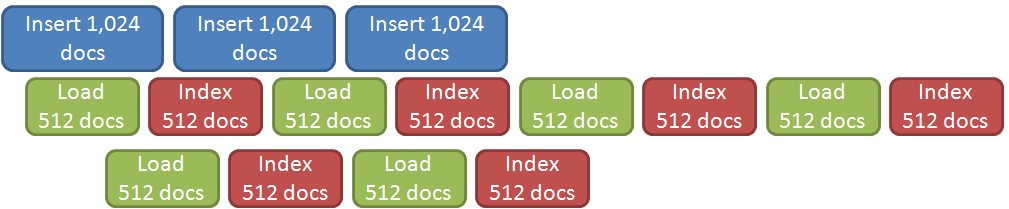

Here is a very simple example of how RavenDB used to handle indexing.

The blue boxes are inserts, and the green and red ones show the indexing work being done.

This is simplified, of course, but it is a good way to show exactly what is going on. In particular, you can see that it takes quite a long time for us to index everything.

The good thing here is that the actual cost we have is per batch, so we want to batch things properly. Note that this behavior is already somewhat optimized. Most of the time, you don’t have a few calls with thousands of documents; usually we are talking about many calls, each saving a small number of documents. This approach balances those out, because we can merge many save calls into a single indexing run.
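To make the merging concrete, here is a minimal Python sketch, assuming a single in-memory queue. The `IndexingQueue` class, its `save`/`next_batch` methods, the `index_all` helper and the 1024-document cap are all hypothetical names chosen for illustration, not RavenDB's actual API. Saves only enqueue documents, and each indexing run drains whatever has accumulated, so many small saves share one run's fixed cost.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class IndexingQueue:
    """Hypothetical queue that merges documents from many save calls."""
    max_batch: int = 1024
    pending: deque = field(default_factory=deque)

    def save(self, docs):
        # A save call with a handful of documents just appends them;
        # no indexing happens here.
        self.pending.extend(docs)

    def next_batch(self):
        # Drain up to max_batch documents, regardless of which save
        # call they came from.
        batch = []
        while self.pending and len(batch) < self.max_batch:
            batch.append(self.pending.popleft())
        return batch


def index_all(queue, index_batch):
    """Index until the queue is empty; return the number of indexing runs."""
    runs = 0
    while True:
        batch = queue.next_batch()
        if not batch:
            return runs
        # Any fixed per-run overhead is paid once per call here,
        # not once per save call.
        index_batch(batch)
        runs += 1
```

For example, 1,000 save calls of 3 documents each leave 3,000 pending documents, which `index_all` indexes in three runs of 1,024, 1,024 and 952 documents instead of 1,000 separate runs.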

However, that still took too much time, so we introduced the idea of dynamically changing the batch size (the reason we have batches at all is to limit the amount of RAM we use and to allow us to respond more quickly in general).

So we changed things to do this:

Note that we increase the batch size as we notice that there are more things to index. The batch size can automatically grow all the way to 128K docs, depending on a whole host of factors (load, speed, memory, number of indexes, etc.).
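A dynamic batch-size policy along these lines can be sketched as follows. This is a simplified model, not RavenDB's actual algorithm: `next_batch_size`, `simulate`, `INITIAL_BATCH_SIZE = 128` and the `memory_ok` flag are hypothetical, and of the factors listed above only the backlog and a memory signal are modeled. The cap comes from the 128K docs figure in the post, assumed here to mean 128 × 1024 documents.

```python
INITIAL_BATCH_SIZE = 128     # hypothetical starting size
MAX_BATCH_SIZE = 128 * 1024  # "128K docs", assumed to mean 131,072


def next_batch_size(current, docs_pending, memory_ok=True):
    """Pick the size of the next indexing batch (hypothetical policy)."""
    if not memory_ok:
        # Under memory pressure, back off, but never below the initial size.
        return max(INITIAL_BATCH_SIZE, current // 2)
    if docs_pending > current:
        # More work is waiting than the current batch holds: grow.
        return min(MAX_BATCH_SIZE, current * 2)
    if docs_pending == 0:
        # Backlog drained: go back to small, quick batches.
        return INITIAL_BATCH_SIZE
    # The remaining work fits in the current batch: keep the size.
    return current


def simulate(backlog):
    """Drain a backlog of documents and return the size of each batch."""
    size, sizes = INITIAL_BATCH_SIZE, []
    while backlog > 0:
        taken = min(size, backlog)
        sizes.append(taken)
        backlog -= taken
        size = next_batch_size(size, backlog)
    return sizes
```

Under these assumptions, `simulate(1_000_000)` drains the backlog in 17 batches (ten growing ones from 128 up to 65,536, six at the 131,072 cap, and a final partial batch of 82,624), whereas a fixed batch of 128 would need 7,813. Doubling keeps the number of small start-up batches logarithmic while still letting a lone new document be indexed quickly once the backlog is gone.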

Since the cost is mostly per batch, we actually got a not insignificant improvement from this approach. But we can do better, as we will see in our next post.
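The claim that the cost is mostly per batch can be written as a simple cost model. This is an illustrative assumption rather than a measured RavenDB formula: `C_batch` is a hypothetical fixed overhead paid once per indexing batch, `c_doc` a hypothetical per-document cost, `n` the number of documents and `b` the batch size. Growing `b` shrinks only the first term; the per-document work stays the same.

```latex
T(n, b) = \left\lceil \frac{n}{b} \right\rceil C_{\text{batch}} + n \, c_{\text{doc}}

\left\lceil \frac{10^6}{128} \right\rceil = 7813,
\qquad
\left\lceil \frac{10^6}{131072} \right\rceil = 8
```

So for a backlog of one million documents, a fixed batch of 128 (a hypothetical size) pays the batch overhead 7,813 times, while batches of 131,072 pay it only 8 times.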
