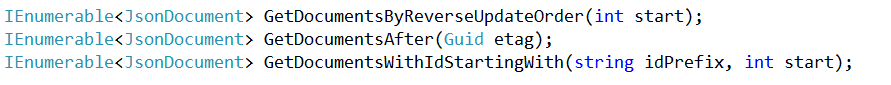

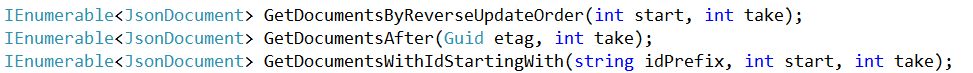

Compare and contrast: Performance implications of method signatures


More posts in "Compare and contrast" series:

- (02 Apr 2012) Performance implications of method signatures

- (20 May 2008) Rhino Mocks vs. Hand Rolled Stubs

Comments

Not sure I get the question completely, but the second version limits the result set (a thing we almost always need), while the first doesn't.

The second option can inherently be more performant: "take" is a hint for the server.

They both return IEnumerable, so I assume they both stream. The only difference I can imagine is that the second will stop returning results once the "take" limit is reached, something a caller could probably simulate by calling the first and not iterating past a certain number of results.

The first always returns all items, even when we don't need them. Filtering is done on the client.

The second returns only the items we need. Filtering is done on the server, thus saving bandwidth.

The latter method signatures encourage the developer to give more insight into how many documents are expected. This then allows the server to perform better.

After returning "take" documents, the server doesn't need to scan for other candidates any more. It also would not return extra data over the network.

I suspect the API doesn't stream, but retrieves the whole result set and then presents it as IEnumerable. Otherwise every "fetch" from the IEnumerable could be a network round trip.

They both have the same performance implications for the client, as they all return IEnumerable<>, so it's up to the implementation to decide how to feed the enumerator.

Even without a "take" parameter in the first set of APIs, it's understandable that it may have internal paging mechanisms. The APIs themselves just don't promise anything.

I'd make the API return IQueryable<> to imply better performance characteristics, as IQueryable<> suggests the query will be deferred until a complete query spec is available (e.g. with all filters, projections and paging ready). This way it's up to the client to decide how to achieve the best performance for its own specific requirements.

A lot of people have talked about 'on the server' - where in the post does it mention anything about a server?

If we take the methods as stated, we cannot say for certain what the implications are. If the implementations rely on streaming of objects (i.e. IEnumerables 'all the way down'), then the second set is possibly slightly less performant, as we have an extra argument to pass and check (e.g. > 0).

If the implementations do involve batch processing of objects (i.e. loading from an external service in groups rather than streaming), or they rely on a service that can perform better knowing the 'take', then it's possible they will perform better knowing how many the consuming code will require.

Without the implementation, it's impossible to say. I would have the most appropriate one and only add the other if testing proves it advantageous.

The latter one allows the server to read only 'take' records from the data source, while the former does not impose any restrictions and can result in an unbounded result set.

The second version is safe-by-default against unbounded result lists.

Xing Yang and Lee Atkinson are right. Plus, nothing prevents a consumer of this API from passing int.MaxValue to get as many records as he/she can.

The only fact we can take from the code sample without making any assumptions is that the second set of methods has to copy an extra value type parameter, which will degrade performance, albeit in a negligible way. Any other conclusion we draw is based on assumptions which may not hold true.

Making the page size parameter explicit means less overhead. For a UI, you might want a page size of 20, since you're only displaying 20 records to the user; if it's hard-coded to 100, you retrieve a lot more each time than you need. For batch processing, you want a much higher page size, and end up making more calls to the server than you would otherwise.

Since this is using IEnumerable, the pagination might be implicit rather than requiring additional calls, but the principle is the same.

It's funny that so far everyone sees the second as "safe by default". You're just thinking about one case, but you're giving the user the chance to specify (and abuse) how much they want, whereas with the first one you can decide what "safe by default" means. The second one just gives more responsibility to the user.

One phrase: bounded result sets. Oren has tried to hammer this concept home for a long time.

The second one allows the user to specify a really LARGE number, say 1,000,000,000, which has its own problems.

The second set makes paging an explicit concept, whereas the first does not. If these methods are retrieving data from a server, the first set either a) gets ALL results in a single pass, b) implements some paging transparently, or c) makes a server call for each successive result (which is just paging with a page size of 1). In case (a), it's probably more results than the user really wants. In case (b), it's very unlikely (unless configurable via some other call) that the internal page size is optimal for the caller's purposes. And (c) is almost certain to be suboptimal.
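Case (b) can be sketched in a few lines of C#. This is only an illustration under stated assumptions: `TransparentPaging`, `FetchPage`, the 250-item data set, and the `RoundTrips` counter are all hypothetical stand-ins for a real server call, not anything from the original post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TransparentPaging
{
    // Counts simulated server calls (hypothetical instrumentation).
    public static int RoundTrips;

    // Hypothetical "server": holds 250 items and returns one page per call.
    private static List<int> FetchPage(int start, int pageSize)
    {
        RoundTrips++;
        return Enumerable.Range(0, 250).Skip(start).Take(pageSize).ToList();
    }

    // No 'take' parameter for the result size; pages are fetched
    // behind the scenes as the caller iterates.
    public static IEnumerable<int> GetAll(int pageSize)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException(nameof(pageSize));

        var start = 0;
        while (true)
        {
            var page = FetchPage(start, pageSize);
            foreach (var item in page)
                yield return item;

            // A short page means the data set is exhausted.
            if (page.Count < pageSize)
                yield break;

            start += pageSize;
        }
    }
}
```

With an internal page size of 100, a caller who only wants 20 items (`GetAll(100).Take(20)`) still causes a full 100-item page to be fetched, while a caller who wants everything pays for three round trips. Neither caller gets to pick the page size that suits them.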

There's no guarantee that the second set of methods performs filtering server side (if there IS a server involved). But they make server-side filtering a possibility, whereas the first set does not (or at least, doesn't encourage it).

By forcing the caller to think about paging, we're more likely to encourage correct use. e.g., the developer might think, "Do I really need more than one page?" or "I should display one page, and then only load an additional page if necessary," while the first set does nothing to actively encourage that sort of thinking.

The caller is more likely to use an optimal or nearly-optimal page size in the second version, which (again, IF server-side filtering is enabled) will mean more efficient use of resources.

And @Eber, there's no reason you have to respect a call with take=Int32.MaxValue. You can impose a maximum result set size in the second method set as easily as in the first. Perhaps more easily (from a usage point of view), since the caller is already expecting to only get a certain number of results per call when using the second set, whereas s/he could reasonably expect to be able to iterate over all results in the first set.
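A minimal sketch of imposing such a maximum, assuming a hypothetical `BoundedQuery` helper and an arbitrary cap of 1024 chosen only for illustration (a real API could equally throw instead of clamping):

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BoundedQuery
{
    // Assumed cap, picked for illustration only.
    public const int MaxPageSize = 1024;

    // Clamps the requested size so that no call can exceed the cap.
    public static int EffectiveTake(int take)
    {
        if (take < 0) throw new ArgumentOutOfRangeException(nameof(take));
        return Math.Min(take, MaxPageSize);
    }

    // Hypothetical query method: 'source' stands in for whatever the
    // real implementation reads from.
    public static IEnumerable<T> Query<T>(IEnumerable<T> source, int take)
    {
        return source.Take(EffectiveTake(take));
    }
}
```

Under these assumptions, `BoundedQuery.Query(items, int.MaxValue)` yields at most 1024 items, while `BoundedQuery.Query(items, 20)` behaves exactly as requested.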

Since the methods have fewer than 2 params, can't they be used with x86 Fastcall?

Maybe I'm overthinking it, but I've had a lot of experience with x86.

If we're talking about RavenDB, you recently taught me about the result set being limited to 128 results unless you specify a Take, in which case it can go to 1024 results. By requiring Take as a parameter, you enforce it being entered by the caller, and you can choose to validate that it's not larger than 1024, throwing an exception if it is.

If we're just talking about C# method signatures, I would guess that an extra parameter means more IL, but I don't know that I'd consider that a perf issue.

The second option is much better, especially if the database holds lots of data. I use it in quite a different way: Method(int id, int page, int perpage=10).

Wouldn't it be the same as calling FirstSignature.Take(2) using LINQ? I mean, the execution will be deferred and you will only take what you need (assuming you use yield return inside both methods).
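For the purely in-process case, this can be checked with a small sketch. The names here (`DeferredDemo`, `GetAll`, `GetSome`, the `Produced` counter) are hypothetical and only stand in for the two signatures under discussion. It assumes both methods are built on `yield return`, and it says nothing about what happens once a server sits behind the enumerator.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredDemo
{
    // Counts how many items the producer actually generated.
    public static int Produced;

    // Stand-in for the first signature: no 'take' parameter,
    // items are produced lazily via yield return.
    public static IEnumerable<int> GetAll()
    {
        for (var i = 0; i < 1000; i++)
        {
            Produced++;
            yield return i;
        }
    }

    // Stand-in for the second signature: the limit is explicit.
    public static IEnumerable<int> GetSome(int take)
    {
        return GetAll().Take(take);
    }
}
```

Enumerating `DeferredDemo.GetAll().Take(2)` pulls only two items from the producer, the same as `DeferredDemo.GetSome(2)`. The two signatures differ only when the implementation can do something smarter with the number up front, such as passing it to a remote query.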
