The final monitoring feature in RavenDB 3.5 is SNMP support. For those of you who aren’t aware, SNMP stands for Simple Network Management Protocol. It is used primarily for monitoring network services. And with RavenDB 3.5, we have full support for it. We even registered our own root OID for all RavenDB work (1.3.6.1.4.1.45751, if anyone cares at this stage). We have also set up a test server where you can look at the results of SNMP support in RavenDB 3.5 (log in as guest to see details).
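To make the OID a bit more concrete, here is a minimal sketch of what a monitoring tool puts on the wire when it starts walking everything under the RavenDB root. It BER-encodes an SNMPv2c GetNextRequest for 1.3.6.1.4.1.45751. The community string `"public"`, UDP port 161, and the `send` helper are assumptions for illustration; the community and port your RavenDB server actually uses depend on its configuration. In practice your monitoring tool (or `snmpwalk`) does all of this for you.

```python
"""Sketch: BER-encode an SNMPv2c GetNextRequest for the RavenDB root OID.

Assumptions (not from RavenDB docs): community "public", agent on UDP 161.
"""
import socket

RAVENDB_ROOT_OID = "1.3.6.1.4.1.45751"


def encode_length(n: int) -> bytes:
    # BER definite length: short form below 128, long form otherwise.
    if n < 0x80:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def tlv(tag: int, value: bytes) -> bytes:
    # A BER Tag-Length-Value triplet.
    return bytes([tag]) + encode_length(len(value)) + value


def encode_integer(n: int) -> bytes:
    # INTEGER (tag 0x02) for non-negative values; a leading zero byte
    # is added when needed so the top bit stays clear.
    assert n >= 0
    return tlv(0x02, n.to_bytes(n.bit_length() // 8 + 1, "big"))


def encode_oid(dotted: str) -> bytes:
    arcs = [int(part) for part in dotted.split(".")]
    # The first two arcs are packed into one sub-identifier: 40 * X + Y.
    subids = [40 * arcs[0] + arcs[1]] + arcs[2:]
    body = bytearray()
    for subid in subids:
        # Base-128 digits, most significant first; every byte except
        # the last one carries the 0x80 continuation bit.
        chunk = [subid & 0x7F]
        subid >>= 7
        while subid:
            chunk.append(0x80 | (subid & 0x7F))
            subid >>= 7
        body.extend(reversed(chunk))
    return tlv(0x06, bytes(body))


def get_next_request(oid: str, community: str = "public", request_id: int = 1) -> bytes:
    # One variable binding: the OID paired with NULL, as requests require.
    varbind = tlv(0x30, encode_oid(oid) + tlv(0x05, b""))
    varbind_list = tlv(0x30, varbind)
    # GetNextRequest-PDU: context-specific constructed tag [1] = 0xA1,
    # followed by request-id, error-status (0) and error-index (0).
    pdu = tlv(0xA1, encode_integer(request_id) + encode_integer(0)
              + encode_integer(0) + varbind_list)
    # Message: version (1 means SNMPv2c), community string, PDU.
    return tlv(0x30, encode_integer(1)
               + tlv(0x04, community.encode("ascii")) + pdu)


def send(packet: bytes, host: str, port: int = 161, timeout: float = 2.0) -> bytes:
    # Hypothetical helper: fire the request over UDP and return the raw reply.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (host, port))
        data, _ = sock.recvfrom(65535)
        return data


if __name__ == "__main__":
    print(encode_oid(RAVENDB_ROOT_OID).hex())  # 06082b0601040182e537
    print(get_next_request(RAVENDB_ROOT_OID).hex())
```

The interesting part is the last arc: 45751 does not fit in seven bits, so it is split into the base-128 digits 2, 101, 55 and emitted as `82 e5 37`. The agent's reply to a GetNext on the root carries the first metric OID under it, and a walk simply repeats GetNext with each returned OID.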

But what is this about?

Basically, a lot of the monitoring features that we looked at boiled down to re-implementing enterprise monitoring tools that are already out there. Using SNMP gives all those tools direct access to the internal details of RavenDB, and allows you to plot and manage them using your favorite monitoring tools, from Zabbix to OpenView to MS MOM.

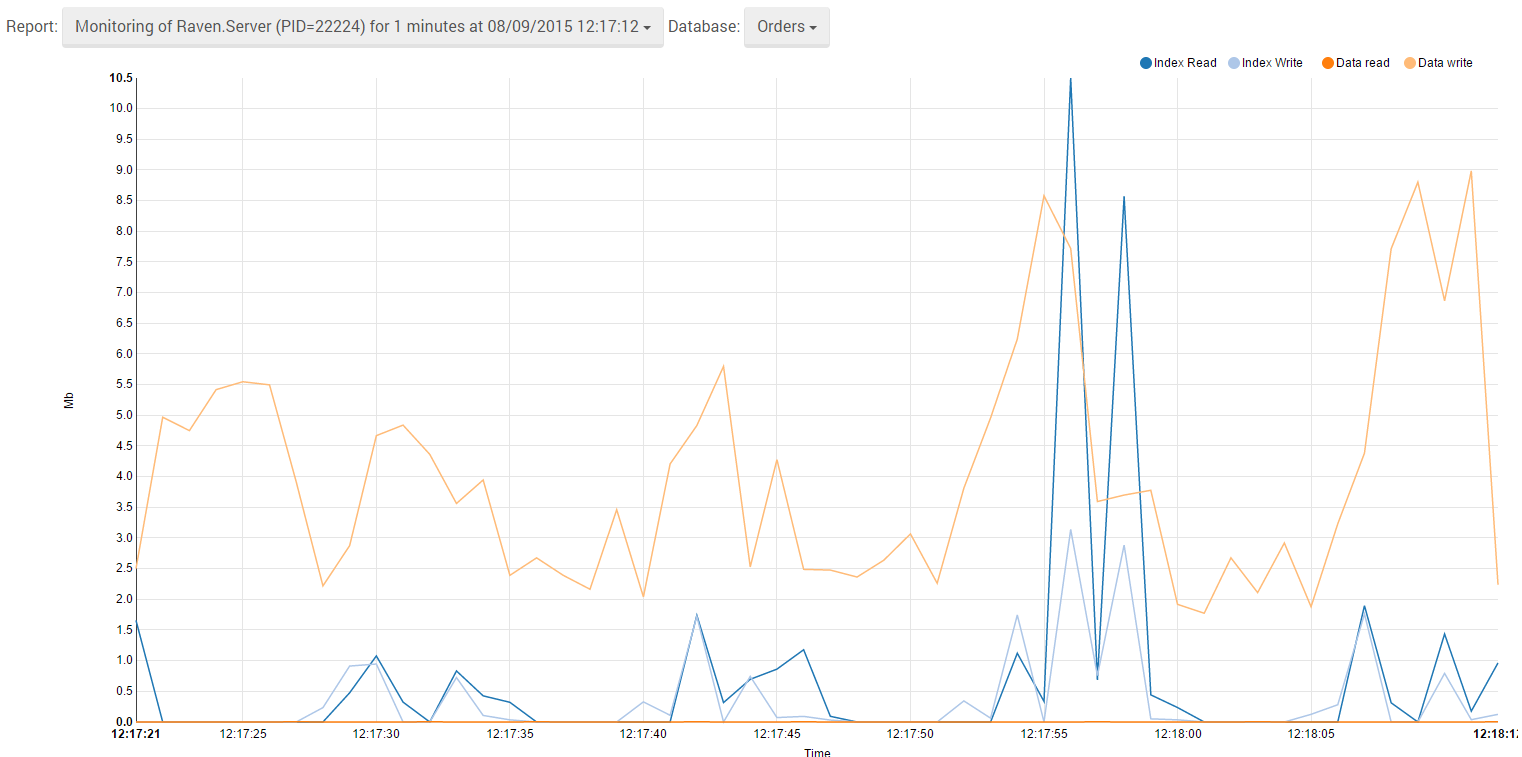

We expose a long list of metrics, from the loaded databases to the number of indexed items per second to the ingest rate to the number of queries to how much storage space each database takes to…

Well, you can just go ahead and read the whole list.

We are still going to put effort into making it easy to figure out what is going on with RavenDB directly from the Studio, but as customers start running large numbers of RavenDB instances, it becomes impractical to deal with each of them individually. That is why a monitoring system that can watch many servers is preferable. You can also set it up to send alerts when certain thresholds are reached, and… those are now not RavenDB features, those are your monitoring system's features.

Being able to offload all of those features is great, because we can just expose the values to the monitoring tools and go on to focus on other stuff, rather than having to do the full monitoring work ourselves: UI, configuration, alerts, etc.