RavenDB indexes are one of the things that make it more than a simple key/value store. They are incredibly important for us. And like many other pieces in 3.0, they have had a lot of work done, and now they are sparkling & shiny. In this post I’m going to talk about a lot of the backend changes that were made to indexing, making it faster, more reliable and better all around. In the next post, I’ll discuss the actual user-visible features we added.

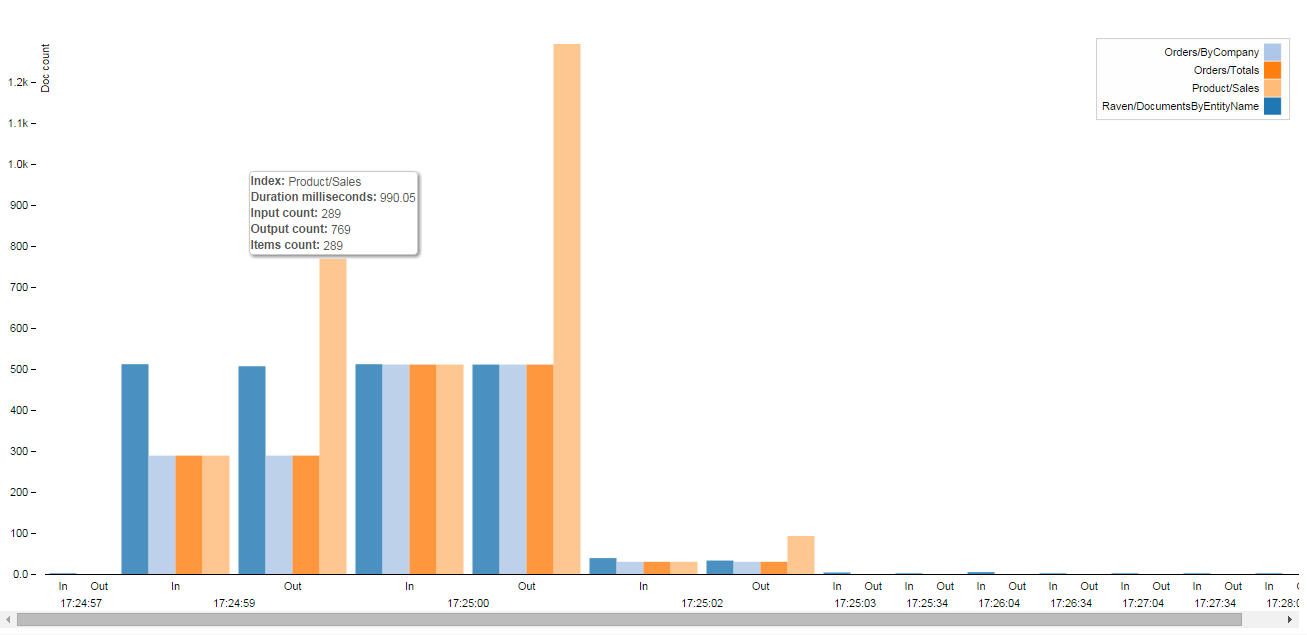

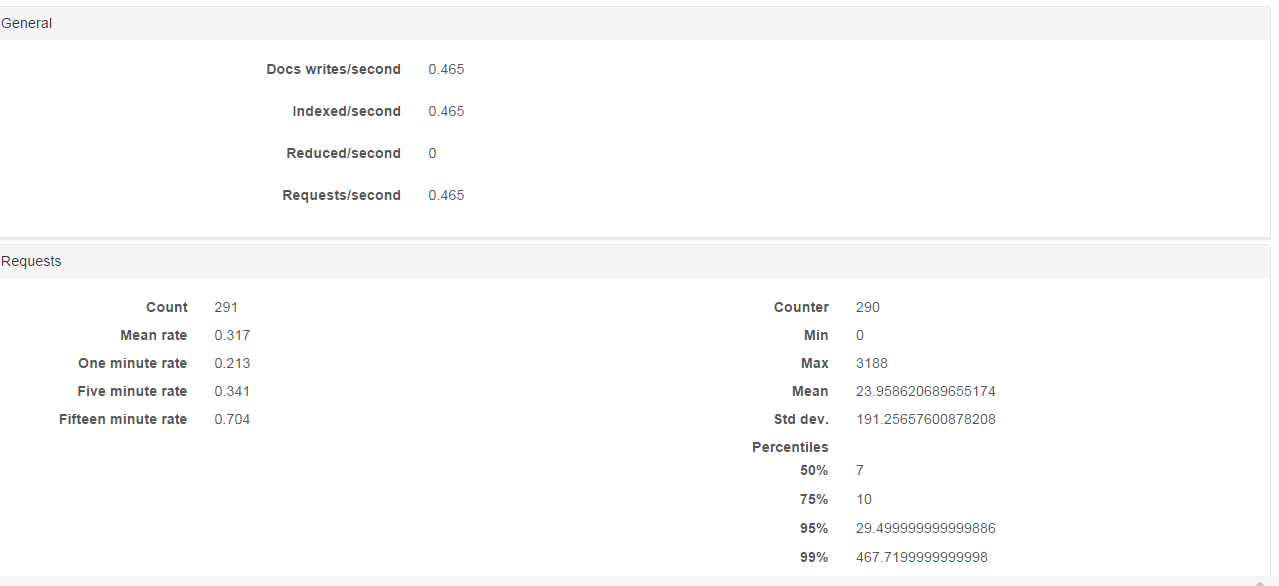

Indexing to memory. Time and time again we have seen that actually hitting the disk is a good way of saying “Sayonara, perf”. In 2.5, we introduced the notion of building new indexes in RAM only, to cut down the I/O for new index creation. With 3.0, we have taken this further and indexes no longer go to disk as often. Instead, we index to an in-memory buffer, and only write to disk once we hit a size/time/work limit.

At that point, we’ll flush those indexes to disk, and continue to buffer in memory. The idea is to reduce the amount of disk I/O that we do, as well as batch it to reduce the amount of time we spend hitting the disk. Under high load, this can dramatically improve performance, because indexing is so often I/O bound. In fact, for the common load spike scenario, we never have to hit the disk for indexing at all.
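To make the buffering idea concrete, here is a minimal sketch in Python. It is not RavenDB's actual code (RavenDB is written in C#); the class name `BufferedIndexWriter`, its parameters and the default limits are all hypothetical, chosen only to show the size/time/work checks described above.

```python
import time


class BufferedIndexWriter:
    """Hypothetical illustration only, not RavenDB's implementation."""

    def __init__(self, flush_to_disk, max_bytes=1024, max_age_seconds=5.0,
                 max_entries=100, clock=time.monotonic):
        self._flush_to_disk = flush_to_disk  # callable that receives a list of entries
        self._max_bytes = max_bytes          # size limit
        self._max_age = max_age_seconds      # time limit
        self._max_entries = max_entries      # work limit
        self._clock = clock
        self._entries = []
        self._bytes = 0
        self._first_write_at = None

    def add(self, entry: bytes) -> None:
        """Buffer an entry in memory; flush only when a limit is hit."""
        if self._first_write_at is None:
            self._first_write_at = self._clock()
        self._entries.append(entry)
        self._bytes += len(entry)
        if self._should_flush():
            self.flush()

    def _should_flush(self) -> bool:
        # The age check runs only when add() is called; a real system
        # would presumably also use a timer.
        age = self._clock() - self._first_write_at
        return (self._bytes >= self._max_bytes
                or len(self._entries) >= self._max_entries
                or age >= self._max_age)

    def flush(self) -> None:
        """Write everything buffered so far in one batch, then keep buffering."""
        if not self._entries:
            return
        self._flush_to_disk(self._entries)
        self._entries = []
        self._bytes = 0
        self._first_write_at = None
```

A short load spike that stays under all three limits never calls `flush_to_disk` at all, which is the "never have to hit the disk" case.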

Async index deletion. Indexes in RavenDB are composed of the actual indexed data, as well as the relevant metadata about the index. Usually, the metadata is much smaller than the actual data. But the metadata of a map/reduce index includes all of the intermediary steps in the process, so we can do incremental map/reduce. And if you use LoadDocument, we need to maintain the document references, and on large databases, that can take a lot of space. As a result, index deletion could take a long time in RavenDB 2.5.

With RavenDB 3.0, we are now doing async index deletions: deleting an index does a very short operation to free up the index name, and the actual work of cleaning up after the index happens in the background. And yes, you can restart the database midway through, and it will resume the async index deletion. The immediate benefit is that it is much easier to manage indexes, because you can delete a big index and not have to wait for the deletion to complete. That wait most commonly showed up when updating an index definition.

Index ids instead of names. Because we had to break the 1:1 association between an index and its name, we moved to an internal representation of indexes using numeric ids. That was pretty much forced on us because we had to distinguish between the old Users/Search index (which is now in the process of being deleted) and the new Users/Search index (which is now in the process of being indexed).

A happy side effect of that was that we actually have a more efficient internal structure for working with indexes in general. That speeds up writes and reads and reduces the overall disk space that is used.
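Here is a rough sketch of how a name can be freed immediately while the old data is cleaned up later by id. This is a Python illustration under my own assumptions, not RavenDB's internals: `IndexRegistry`, `run_cleanup_step` and the in-memory `storage` dict are hypothetical, and a real implementation would persist the pending list so that cleanup can resume after a restart.

```python
import itertools


class IndexRegistry:
    """Hypothetical illustration only, not RavenDB's implementation."""

    def __init__(self):
        self._next_id = itertools.count(1)
        self._name_to_id = {}        # live indexes: name -> numeric id
        self._pending_deletion = []  # ids whose data still needs cleanup
        self.storage = {}            # id -> index data (stand-in for disk)

    def create(self, name: str) -> int:
        if name in self._name_to_id:
            raise ValueError(f"index {name!r} already exists")
        index_id = next(self._next_id)
        self._name_to_id[name] = index_id
        self.storage[index_id] = []
        return index_id

    def delete(self, name: str) -> int:
        """Short operation: free the name now, defer the heavy cleanup."""
        index_id = self._name_to_id.pop(name)
        self._pending_deletion.append(index_id)
        return index_id

    def run_cleanup_step(self) -> bool:
        """Background work: remove one deleted index's data. Returns False when idle."""
        if not self._pending_deletion:
            return False
        index_id = self._pending_deletion.pop(0)
        del self.storage[index_id]
        return True


registry = IndexRegistry()
old_id = registry.create("Users/Search")
registry.delete("Users/Search")           # name is free right away
new_id = registry.create("Users/Search")  # same name, different id
```

Because everything internal is keyed by id, the old and the new Users/Search can coexist until the background cleanup catches up.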

Interleaved indexing & task execution. RavenDB uses the term task to refer to cleanup jobs (mostly) that run on the indexes. For example, removing deleted index entries, or re-indexing referencing documents when the referenced document has changed. In 2.5, it was possible to get a lot of tasks in the queue, which would stall indexing. For example, mass deletes were one common scenario where the task queue would fill up and take a while to drain. In RavenDB 3.0 we have changed things so a big task queue won’t impact indexing to that extent. Instead, we can interleave indexing and task execution so we won’t stall the indexing process.
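One simple way to picture the interleaving is a loop that alternates between an indexing batch and a bounded slice of the task queue. The sketch below is Python and purely illustrative; `run_interleaved` and `max_tasks_per_round` are hypothetical names, and the actual scheduling policy in RavenDB may well differ.

```python
from collections import deque


def run_interleaved(index_batches, tasks, max_tasks_per_round=2):
    """Alternate indexing with a bounded number of tasks per round.

    Returns the order in which work was executed, so that a large task
    queue visibly does not starve indexing.
    """
    log = []
    batches = deque(index_batches)
    pending = deque(tasks)
    while batches or pending:
        if batches:
            log.append(("index", batches.popleft()))
        # Run only a slice of the task queue before going back to indexing.
        for _ in range(max_tasks_per_round):
            if not pending:
                break
            log.append(("task", pending.popleft()))
    return log
```

With a full task queue, indexing still gets a turn every round instead of waiting for the queue to drain.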

Large document indexing. RavenDB doesn’t place arbitrary limits on the size of documents. That is great as a user, but it poses a whole set of challenges for RavenDB when we need to index. Assume that we want to introduce a new index on a relatively large collection of documents. We will start indexing the collection, realize that there is a lot to index, so we’ll grow the number of items to be indexed in each batch. The problem is that it is pretty common that as the system grows, so do the documents. So a lot of the documents that were updated later on would be bigger than those we had initially. That can get to a point where we are trying to fetch a batch of 128K documents, but each of them is 250 KB in size. That requires us to load 31 GB of documents to index in a single batch.

That might take a while, even if we are talking just about reading them from disk, leaving aside the cost in memory. This was worse because in many cases, when having so much data, the users would usually go for the compression bundle. So when RavenDB tried to figure out the size of the documents (by checking their on-disk size), it would get the compressed size. Hilarity did not ensue as a result, I tell you that. In 3.0, we are better prepared to handle such scenarios, and we know to count the size of the document in memory, as well as being more proactive about limiting the amount of memory we’ll use per batch.
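The arithmetic behind the 31 GB figure, and one way of capping a batch by memory rather than by document count alone, can be sketched like this. It is a Python illustration with hypothetical names (`take_batch`, `max_bytes`); documents are modelled as `bytes`, so `len(doc)` stands in for the in-memory size.

```python
# 128K documents of 250 KB each, using 1K = 1024:
batch_docs = 128 * 1024
doc_bytes = 250 * 1024
total_gb = batch_docs * doc_bytes / 1024 ** 3  # 31.25


def take_batch(docs, max_docs, max_bytes):
    """Take documents until either the count or the memory budget is reached.

    Sizes are measured on the in-memory representation, not on the
    (possibly compressed) on-disk size.
    """
    batch, used = [], 0
    for doc in docs:
        size = len(doc)
        # Always accept at least one document so that a single oversized
        # document cannot block progress.
        if batch and (len(batch) >= max_docs or used + size > max_bytes):
            break
        batch.append(doc)
        used += size
    return batch
```

The point of the budget is that growing the batch size by count is only safe while documents stay small; once they grow, the byte limit takes over.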

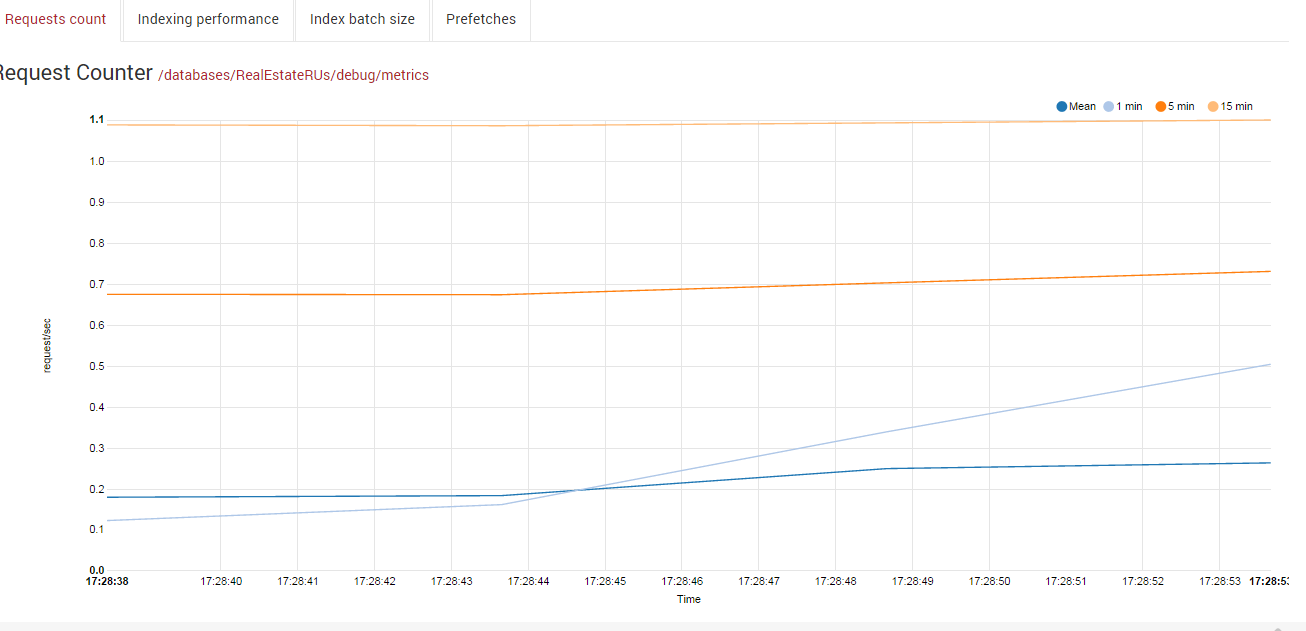

I/O bounded batching. A core scenario for RavenDB is running on cloud platforms. We have customers running us on anything from i2.8xlarge EC2 instances (32 cores, 244 GB RAM, 8 x 800 GB SSD drives) to A0 Azure instances (shared CPU, 768 MB RAM, let us not talk about the disks, lest I cry). We actually got customer complaints about “Why isn’t RavenDB using all of the resources available to it”, because we could handle the load in about 1/4 of the resources that the machine in question had available. Their capacity calculations were based on another database product, and RavenDB works very differently, so while they had no performance complaints, they were kinda upset that we weren’t using more CPU/RAM. They paid for those, so we should use them.

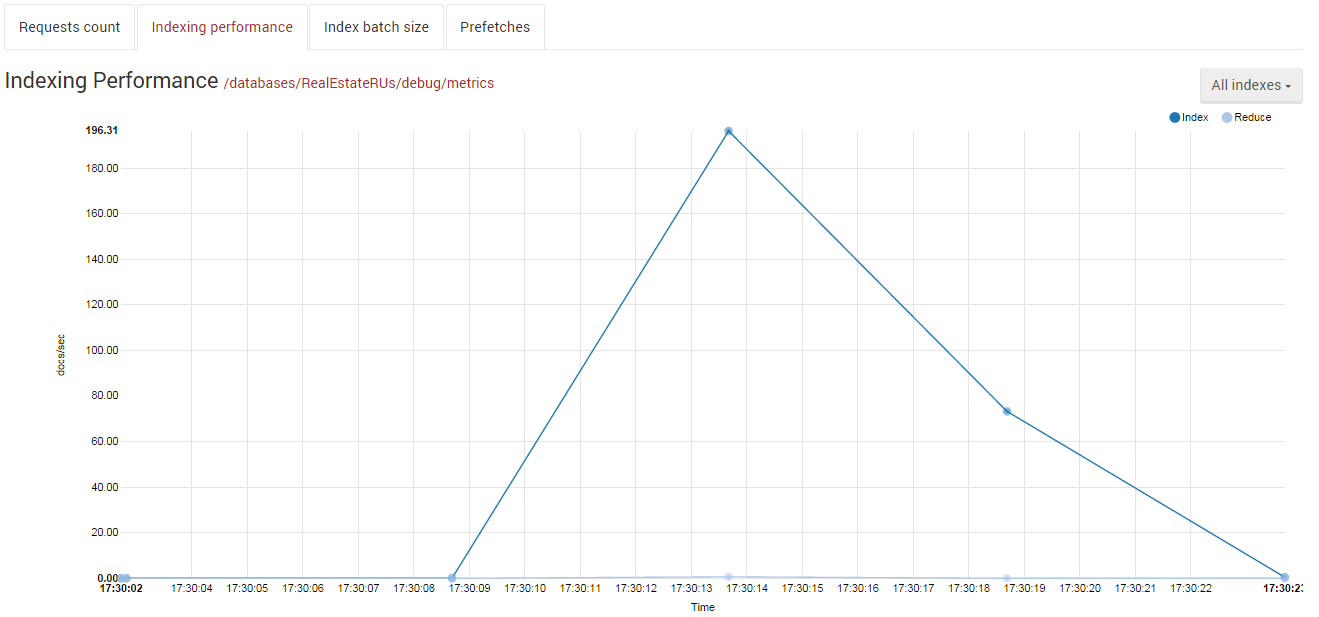

Funny as that might seem, the other side is not much better. The low end cloud machines are slow, and starved of just about any resource that you can name. In particular, I/O rates for the low end cloud machines are pretty much abysmal. If you created a new index on an existing database on one of those machines, it would turn out that a lot of what you did was just wait for I/O. The problem would actually grow worse over time. We were loading a small batch (took half a second to load from disk) and indexing it. Then we loaded another batch, and another, etc. As we realized that we had a lot to go through, we increased the batch size. But the time we would spend waiting for disk would grow higher and higher. From the admin perspective, it would appear as if we were hanging, and not actually doing any indexing.

In RavenDB 3.0, we are now bounding the I/O costs, so we’ll try to load a batch for a while, but if we can’t get enough in a reasonable time frame, we’ll just give you what we already have so far, let you index that, and continue the I/O operation in a background thread. The idea is that by the time you are done indexing, you’ve another batch of documents available for you. Combined with the batched index writes, that gave us a hell of a perceived performance boost (oh, it is indexing all the time, and I can see that it is doing a lot of I/O and CPU, so everything is good) and real actual gains (we were able to better parallelize the load process this way).
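As a rough illustration of time-bounded loading with a background reader, here is a small Python sketch. None of these names come from RavenDB: `start_background_loader`, `next_batch` and `max_wait_seconds` are hypothetical, and a plain `queue.Queue` fed by a thread stands in for the real storage layer.

```python
import queue
import threading
import time

_DONE = object()  # marker the loader puts on the queue when it runs out of documents


def start_background_loader(read_documents):
    """Keep reading documents on a background thread, independent of indexing."""
    q = queue.Queue()

    def worker():
        for doc in read_documents():
            q.put(doc)
        q.put(_DONE)

    threading.Thread(target=worker, daemon=True).start()
    return q


def next_batch(q, max_docs, max_wait_seconds):
    """Return (batch, finished): whatever arrived before the deadline or count limit."""
    batch = []
    deadline = time.monotonic() + max_wait_seconds
    while len(batch) < max_docs:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break  # out of time: index what we have, loading continues meanwhile
        try:
            item = q.get(timeout=remaining)
        except queue.Empty:
            break
        if item is _DONE:
            q.put(_DONE)  # leave the marker so later calls also see the end
            return batch, True
        batch.append(item)
    return batch, False
```

The indexer calls `next_batch` in a loop and indexes each partial batch as it arrives, while the loader thread keeps filling the queue, so a slow disk shrinks the batches instead of stalling indexing.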

Summary: pretty much all of those changes are not something that you’ll really see. These are all engine changes that happen behind the scenes. But all of them are going to work together to end up giving you a smoother, faster and overall better experience.