When race conditions & unbounded result sets are actually the solution – the resolution

Originally posted at 4/13/2011

We discussed the problem in detail in my last post. As a reminder:

The requirement

Starting at a given time, charge as many accounts as fast as possible. Charging each account is a separate action, we don’t have the ability to do batching.

The first question to ask, however, isn’t how we can get the data out of the database as fast as possible. The first question to ask is actually:

What are the limiting factors.

In this case, we have to make a separate request for each account. That means that we have to access a remote service, and that means we have to figure out whatever we can actually parallelize the work in any way.

Most production systems would limit the number of concurrent calls that a single customer can make (to prevent a customer with a lot of servers from crashing their own systems).

Let us say that we have 100,000 accounts to charge. And let us further say that the total time for a charge operation is in the order of 1 sec. What it means is that whatever we want, we are actually going to be limited by the processing capabilities on the accounts, not our own code.

A silly way of doing this would be something like:

var subscriptions = session.Query<Subscription>().Where(s=>s.NextCharge < DateTime.Now); foreach(var sub in subscriptions) { sub.ChargeAccount(); // 1 sec }

This would be silly for several reasons:

- It would take over a day to complete.

- It would load a lot of data to memory, probably only to be used in several hours.

- It puts a lot of strain on our own database and network backbone.

A better alternative would be to try to parallelize this. Assuming that we can perform 5 concurrent requests, we can drop the processing time to just below six hours.

Note where the major savings are, not in how we are actually doing stuff with the code, but rather in how much concurrency we can agree on with the accounts provider. Just to note, let us say that they allow us 25 concurrent requests per second, we are still much better doing chunks of the data all the way rather than trying to process it all at once.

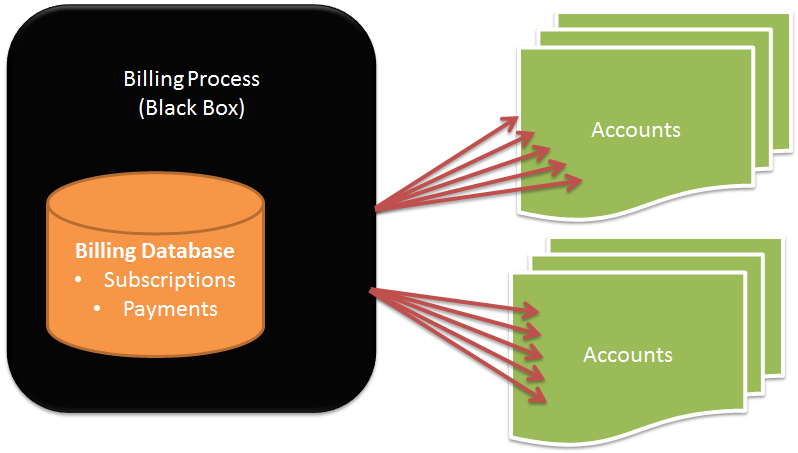

What I would actually do in this scenario is just load the ids of the subscriptions that we need to update into a queue and let a bunch of workers (may be on separate machines) feed off the queue and try to handle as many account charges as they can possibly manage.

Comments

Queues are nice. We use MSMQ for similar application (just not dealing with money :). I like the way you can always pause processing and restart it later (fine granularity).

With introducing of error queues you also get an opportunity to push failed requests to it and get back to them later.

Sometimes, when the error is e.g. network-related, fixing it is as simple as movine the message from error queue back to the regular one.

Not really a tech thing, but I'd consider prioritizing billing operations. For example going for larger outstanding bills first and every x weeks going for small amounts (because, if the business runs well, they may never be charged).

Also it could be considered whether it is feasible to split the bill. That way, if you don't get the full amount you take what is available, and call the transaction xyz pt.1

Additionally it may be worth to throw more money at the intelligence you deploy with regards to when the accounts are filled. Outwitting others in that respect should reduce the need to actually race for the money.

Maybe I'm underthinking this. Why not just use the TPL?

int numConcurentRequests = 25;

Subscription[] subscriptions = session.Query <subscription().Where(s => s.NextCharge < DateTime.Now).ToArray();

numConcurentRequests = numConcurentRequests > subscriptions.Count() ? subscriptions.Count() : numConcurentRequests;

Task[] tasks = new Task[numConcurentRequests];

for (int i = 0; i < subscriptions.Count(); i++)

{

}

Having the numbers: 10^5 requests to perform, 1s - time of a single request, the solution simply begs for using some kind of a dispatcher moving the applications from "I am a single instance of Windows serwice" rather to "I use some bus (MassTransit, NServiceBus) to dispatch all of asynchronous work".

Comment preview