What were you doing with last year logs?

Originally posted at 4/11/2011

I was trying to take a backup of my blog to see if I can do some fancy stuff there, when I realized that the blog backup was 2 GB (!) in size. Now, I know that I post a lot, but I don’t think that I post that much.

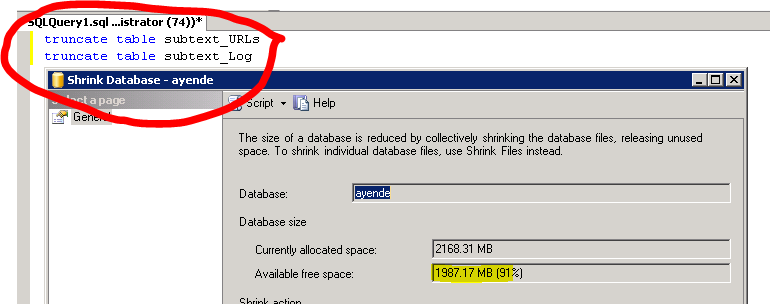

As it turns out, the problem was with unbounded logs that started taking up most of the space in the database:

In general, when building applications that are meant to run over long periods of time without attention, it is better to have some way of getting rid of unimportant information automatically.

Comments

Do note that shrinking the database is one of the worst things you can do for performance, unless you ensure to defragment all of your indexes afterwards. Granted, with a 200MB database, you'll probably be fine as the dataset is so small.

Mark,

When you remove 90% of the data, you probably need to do that anyway, since you already fragmented everything by removing the data.

Also, this makes the size of backups much more managable

I hate deleting data. Who knows what information you could derive from those stats in the future? I recomment moving that table to an archive DB (delete from tab output into ArchiveDB.ArchiveTab) or a bcp file.

You're using sql server? pfft

;)

Wayne, find me a host that hosts RavenDB at a price competitive to winhost, and I'll start writing a blog engine running off RavenDB :)

Frank,

Just host RavenDB embedded.

As for the rest, give me some time :-)

LOL, Ayende, you which tool eats up 4 Gig for its logs in a breeze on my box and does not offer rolling logs (yet)?

NHProf :-)

See it as a feature suggestion.

RickRock,

We actually do handle log trimming

With Sql server usually it is best keeping logs in another filegroup stored on different physical file, you can avoid backing up logs each day, and the main file, with important data suffer less for fragmentation.

I agree the default settings for a new MS SQL Server DB leave a lot to be desired, although I think the reason they leave it unbounded by default is because they are trying to provide a situation that works in all environments. Personally I would put the max size at 10MB and force implementers to define a max size and growth strategy, with some easy cookie cutter options (like blogging) for users that don't need to spend the time learning a whole DB system just to turn on something basic.

It isn't difficult to add bounds (database properties, files, change autogrowth settings) or if you want to limit the amount of growth you can switch the database's recovery model to Simple mode (somewhere else in properties - in Simple it only uses the transaction log for in process transactions so it won't grow the database log file unless you execute a transaction that is enormous enough). This should also solve the fragmentation issue as long as the log file size is large enough to handle your current transaction sizes (as it won't need to autogrow and won't need to be shrunk).

Shrinking database might affect performance since the sql engine requires empty space to work efficiently

So a maintenance over data and log files when server load is minimum or minimized to leave enough space for database engine not to extend datafiles within day time is important.

SQLAdmin,

I am actually talking about the logs table for Subtext, not the DB logs

Comment preview