Maintainability, Code Size & Code Complexity

The most maintainable codebase that I worked with grew at a rate of about ~10 KLoC per month, every month. There wasn’t a large team there, it ranged fro 3 – 6 people. This is the project that I think about whenever I had to talk about good code bases.

It is a WebForms project (under protest, but it is).

What make it maintainable? Not the WebForms part, which wouldn’t come as a surprise. What make it maintainable is that it is the first project where I had applied the notion that large swaths of simple code is better than smaller code base with higher complexity. You could see example of this sort of architecture in the Alexandria and Effectus applications.

Note: I am doing things like measure KLOC here mostly because it is a number that I can measure. As I am saying in this post, KLOC has very little to do with maintainability.

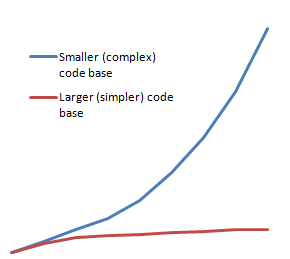

The problem can be expressed well using the graphs. This is a representation of the complexity of the application as it grows, lower maintainability cost is better.

The problem with the smaller and more complex code base is that the complexity tends to explode very quickly. With the larger code base, you end up more or less on the same plane.

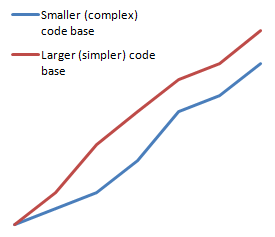

But the above chart is somewhat misleading, because it make the hidden assumption that in both code styles, you’ll have the same amount of code, which is obviously false. Here is what is probably a more accurate representation of how much code is written for style per # of features.

This doesn’t look right. Not when we compare it to the chart above. Of the top of my head, I would expect the second chart to be the mirror image of the first one. But it isn’t. And the reason that it isn’t is that each feature you write still cost you some amount of code. And the rate of growth per features added is pretty constant either way.

Putting the two charts together, you can see that it means that even code styles with focus on less code in favor of more complex solutions grow, and that as they grow, they become less maintainable.

So far I have been very vague when I was talking about “complex” and “simple”. In part, this is because those are somewhat hard to define. There would be people who would claim that ORM leads to complex codebases, but I would obviously disagree.

For my part, I also strongly advocate of having a strong core of infrastructure that gives services for the rest of the application, and that tend to be complex piece of coding. But that is also something that is fixed, once the infrastructure is written, it tend to be static, so that saves you from the fate outlined above.

When I look at a piece of code, I do the usual evals (nesting, conditionals, cyclomatic complexity, etc), but I also look at how often the developers reached for a hammer and beat everything around to submission. There is a lot of gut feeling here. But I do have one objective metric to use to tell you whatever a piece of code fit either style.

In the fight between Don’t Repeat Yourself an Single Responsibility Principle, SRP wins, every single time.

Any time that I have seem code that did double (or triple or dozenile) duty, it led to the sort of “we have to write less code” style of coding that ended up in a quagmire.

Comments

cool! graphs without axis definitions!

Completely agree. This is my experience as well. Don't try to be too smart.

Higher complexity adds a lot of cost to a project for relatively minimal gain. Still there is a lot of drive out there to add complexity, and I believe the key driver there is challenge.

Writing simple code is not gratifying until down the road when you realize that bringing those extra 4 green developers is a whole lot simpler; Going off and doing something and then coming back to look at it is a whole lot simpler; Adding that new feature is a whole lot simpler; Fixing that bug that got missed is a whole lot simpler.

Writing complex code is the challenge. The goal is to make future change even more simple. I thnk to some, complex code is like a badge of honour. With simple code, a "bright star" developer would come in, understand it easily, and probably comment that it's too simplistic. Or the fear that simple to maintain code means you're easier to replace with another developer. Complex = job security.

Only the brightest stars come in and look at complex code and "get it" right off the bat, those that don't get it fear being considered inferior.

I get into lots of "discussions" about simple vs. complex code. Simple code is easier to enhance, debug, and fix. Complex projects eat hours more time, and greatly increase bug/regression risk when that nifty base infrastructure doesn't meet a current requirement.

"In the fight between Don’t Repeat Yourself an Single Responsibility Principle, SRP wins, every single time."

So true, and have to fight the reuse mantra every day. Sure, I've been there myself, spending waaay too much time trying to build frameworks when I could have spent a fraction of the time finishing the application instead. Not to mention the burden of complexity that often is the result.

Also, actually working reuse is often the result of SRP.

I'm curious - did you use WCF in this project?

@gunteman

Admit, writing framework is so much more satisfying than writing just yet one more order entry application.

Steve,

No.

Alex,

ayende.com/.../stealing-from-your-client.aspx

@Alex

I used to think so, but no. Building complex frameworks is not how I want to show that I'm an experienced developer. Delivering quality and delivering fast is.

I am not sure to understand, when you write:

Are you fostering code duplication? something that, if I understood well, you wrote a while back ago when you described your preferred architecture style where basically, there is a 'strong core infrastructure' and N independent components that all sit on top of the core infrastructure.

Modulo 'I understood well' I strongly disagree. Given that:

-> every single line of code is a potential enemy

-> the number of lines of code necessarily grows when the feature set grows

developers duty is to factorize code whenever they can. I really don't see why you seem to advocate that factorizing add complexity? Factorizing is an exercise that lead developers to find the right abstractions at the right level.

The relation between [complexity - maintenability -code size] is driven by the diseconomy of scale phenomenon. Applied to software dev, Diseconomy of scale means that if the cost to maintain a cohesive piece of N lines of code is X, then the cost to maintain a cohesive piece of 2N lines of code is (much) greater than 2X.

This is why the idea of numerous small + well bounded + cohesive components, low coupled, is important. In this condition the maintenance cost of each small individual component is mastered while low coupled global structure avoids the nuisance of the diseconomy of scale.

The least maintainable application I've worked on had a complex infrastructure which allowed for super simple CRUD operations with little to no custom code required. The problem is, whenever anything beyond the simplest scenarios had to be implemented there was no good place to put the code for it. The custom code in general wasn't very complicated, but it just didn't fit into the app very well.

On the converse the most maintainable app I've worked on was based on a cqrs architecture. Again the infrastructure code was pretty complex. On this project we had to write quite a bit of code regardless of whether the operations were simple or complex. The architecture was very structured and it was always well understood where code should be placed.

In these cases maintainability was more the result of the application's structure than code size or code complexity.

I think there's a lot of assumptions made in the post and in the comments here. While I agree with the post, most of it assumes that the small, complex codebase was written that way intentionally. My experience has been that when you see a particularly large codebase, it is because it is vastly too complicated for the requirements, and that's because the developers didn't realize there were easier ways to do things. Further, I don't think I've ever seen a very small, very complex framework: They always tend to themselves be particularly large, suffering from needless, senseless code and anti-patterns abounding.

I've worked on a project before where some view-models easily had over 3,000 lines of code, much of which was far, far more complicated than any code I'd ever seen. Is the reason for that that they did it on purpose to try to keep the view-models concise? I highly doubt it; it is unlikely that conciseness ever showed up in their thought processes. Their thought processes were probably more along the lines, "Well, I'll just add this in here and refactor it later." Or even more likely is, "I'll add this here because there's nowhere else to put it (and then they forget about it and never refactor it later)."

I think a huge reason for this is the typical "architect" person who comes in, pronounces X, Y, and Z to be the patterns to be used on the project, and then leaves. The subordinates then try to implement it based on a glance at articles on Google, fail miserably but do not realize that they've failed, and complain about the fact that they couldn't figure out exactly how to fit the code into the patterns/architecture laid out by more knowledgeable people. The underlying patterns and architecture may be ridiculously complicated, but at the same time, it was shoddy understanding of basic code quality analysis that made the remainder of the application grow into a stupidly complicated piece of garbage.

Sorry, one other thing. Most of the complexity and needless code bloat that I've seen comes from those very same "architects" identifying places that should be "abstracted" - places that do not represent anything that will ever be replaced/reused. I hate that with a passion so bright it would burn to look at without sunglasses on. I don't mind having well-defined places that are abstract and make sense to be replaceable via the Liskov Substitution Principle, but when the abstraction is totally unwarranted, I hunt down that person and let them know about it.

Obviously the motto 'fool me once not twice' should be always followed. It means never create an abstraction for one occurrence, but when a second occurrence appears, go straight, abstract, don't wait for a third occurrence and more! This is adaptative design, don't try to anticipate, but refactor when needed.

My point, Patrick, was that the place was not supposed to be abstracted because the underlying functionality does not represent the same functionality between the two items abstracted (although that wouldn't be obvious from what I wrote - so thanks for pointing it out).

However, this isn't a situation where it's a "one occurrence causing abstraction" - there are in fact "two occurrences" (there's more than two, really - hundreds), but nothing actually uses the abstraction, and so it's pointless. There's not even a chance that anything in the framework could use these abstractions: They are that abstract!

Patrick,

To some extent, yes.

Simple example, let us say that we have a constraint that Name must be smaller than 50 chars.

This needs to be checked at the client side and server side.

There are two ways of doing this.

One is to actually write the code twice, which is the simplest approach from the point of view of the app developer at the current point in time.

The other is to write some sort of infrastructure code that allows you to specify the constraint only once, but apply it twice. That is significantly more complex than adding an additional if statement or two.

My problem is that given a single scenario like this, I have seen people develop their own validation framework to answer that need.

At that point, the code may be "correct" in the sense that it avoided duplication, but it has become significantly harder to work with.

To make things even more interesting, when a new constraint come up (Name must be unique), which is something that can only be done on the server side, those sort of generic solution usually run into a wall.

Nitpicker corner:

No, I am not advocating not using a validation library. I am saying that given a single scenario, you shouldn't roll your own in the name of saving a few lines of code.

Yes, there are issues with just "adding an if statement or two" if taken to extremes.

Patrick,

ayende.com/.../...ng-everything-the-smart-way.aspx

Even in the simple Name length checking, I'd vote for a factorized helper that does the check. Certainly not a validation Fx. Just a one line public static pure 'function' IsNameValid(string):bool. This function would be declared in a public static and stateless helper class dedicated to simple arguments validation. This static class would be declared in an assembly referenced from both server and client code. Compare to the 'if duplication' proposed I can see 3 major benefits:

If the validation has to change somehow (like 70 char max + first char is a letter uppercase) there are only one place to change for all. - with code duplication there is always the (high) risk to forget a place to refactor.

this simple public static function style method are particularly easy to unit test. (and for a fact, the NDepend code base contains thousands of them). - with code duplication, tests should be also duplicated.

last but not least, every call to the function IsNameValid(string):bool constitute an important piece of data. The day someone wants to maintain the code base, a single search for calls to this function can reveal where in the code base the code is concerned about data validation, -> higher maintenability - with code duplication, an important information about original intentions is definitely lost -> lower maintenability.

Having saved a few lines of code cannot be, indeed, considered as a fourth significant advantage of factorization here.

Finally, if the validation has to change for something more complex and eventually asymmetrical (i.e Name must be unique), I just keep the function and refine the server side. - no more, no less work than the code duplication solution.

I agree with you that there are dangerous 'architect astronauts' lurking around. But I cannot imagine a situation where code duplication is preferable to code factorization & abstraction. Keep it simple, factorization doesn't necessarily mean developing a complete framework. Abstraction doesn't necessarily mean interfaces, abstract classes and IoC. For me, the simple function IsNameValid(string):bool is a form of abstraction. For the rest of the code, the validation logic is now something abstract.

Concerning hardcoding constants, I see your point but I prefer to store constants in XXXConstants classes (with the small risk that constant value change are not propagated because of asm not recompiled, I can live with that sort of risk). The reasons for XXXConstants are exactly the same than for IsNameValid(string):bool.

Code factorization, easier testing, higher maintainability.

Today, I've published (internally, in my company) the infrastructure. Setting up the whole pretty complex app with it takes no more than 150 lines of code. I do agree with the part about extracting the infrastructure!

@Patrick

That example of a validation is what most people would consider a pretty simplistic approach to the problem, and in a way it is also going to lead to more code than other approaches. Each validation is a separate method, many doing one or two lines of duplicate work.

Where this goes overboard in terms of complexity is when developers get it in their mind to build a rules engine around the validation, then tie in custom delegates, etc, etc, to make one validator that can validate every possible control and purposed control that does exist, and will ever exist within this application and all others that come afterward.

@scooletz

Let me know how that goes 12 months from now, or after you've used that framework on the 5th unique complex app, whichever comes first.

I've been waiting YEARS for you to see it Mr Simple's way. Nice to see you come around.

You know what's awesome? Having to use a framework where the simple Validation Example Ayende introduced earlier in these comments is solved by having no fewer than 25 separate SQL Statements inserted into a Database...and if one of those SQL Statements is even a tiny bit off, your entire application crashes out. Pure win.

I really doubt there was a point where someone said to themselves that they wanted to create a system like that. It was probably something more along the lines of "Let's make Validation really configurable for a non-technical person, and let's not require a recompile whenever they want to change it." which is a noble goal I guess. Of course, you know what they say about good intentions.

PS, I also have a theory that the reason people design complex code is they want to appear smarter than they are. A simple solution is too, well, simple. I can't prove that one of course, just a gut feeling.

Anyways, here's another resounding vote for simple code.

Steve Py, Steve, I am a strong advocate of simple code too. Concerning validation, a one line pure static function is the simplest answer I can imagine.

I would go even further, I am an advocate of simple code even when it comes to implement complex requirements.

The problem I have with this discussion is that I feel that in the name of code simplicity, some of you are ready to promote code duplication. Code duplication is a code flaw, as much as 'too' complex code can be a code flaw as well.

That's what confuses me a bit. I would think that if you get your Responsibilities right, DRY isn't very far away.

Patrick,

The problem is that most often the choice isn't between RYO validation framework, an if statement or a method encapsulating that logic.

As Steve points out, the method approach doesn't work in many cases either, but the real problem is that the choice in most cases isn't between multiple options. What I see in the real world that it is a binary choice, RYO framework or write an if statement.

Where? What are flaws of the simple method factorization approach?

Once again I cannot imagine a situation where code duplication brings any advantage over code factorization. This is a fundamental point on which we disagree.

There are

A very bad way (too complex factorization, RYO Fx)

A bad way (simple code duplication)

A clean way (simple factorization, with a single pure static function style, method)

What you said is that because enterprises are full of 'architect astronauts' often the very bad way is chosen. This is indeed a sad reality and you are right to denounce this fact. But then, the answer proposed is that the bad way should be prefered instead of the very bad way!

My position is: Why not promoting the clean way instead of the very bad way?

Patrick,

Consider the case where there are 15 such methods, or 30...

You Helpers.IsNameValid method is just another instance of the if statement, after all.

Frank: "That's what confuses me a bit. I would think that if you get your Responsibilities right, DRY isn't very far away. "

Exactly. But it doesn't work the other way around. If you get SRP right, DRY will follow, but SRP is not the (immediate) result of DRY.

If I have 15 or 30 validation methods like IsValidAddress() /IsValidPhoneNumber() / IsValidPostalCode() / IsValidName() / IsValidCountry() ... I sincerely don't see the problem in packing all of them in a static, stateless, helper, low-level, public class. Code concern locality is preserved (everything relative to record data validation is in the same class), the code is highly testable, highly maintanable and highly learnable.

Ironically, if I have 15 or 30 validator methods, I think it is maybe time to thing of a more serious architecture than a helper class to handle validation, don't you think?

When I read in your post...

..I really have a problem. You advocate that simpler code necessarily means more lines of code, which is the direct consequence of code duplication. I completely disagree, code can (and MUST) be boh super simple/maintainable and also highly factorized with zero redundancy.

@Kyle / Patrick

Yes, but how about the aspect of testability? For example, in a controller, it may take an ICustomerRepository as a dependency. There will only ever be one true implementation, but it's in there because C# is statically typed and we want testability. Surely you make an exception in that case, correct? Quite frankly, that applies to any service you need to call, even though there is almost always 1 implementation.

I find 90% percent of my interfaces only have 1 implementation, and are there for "loose coupling", but mainly testability. More green developers have a hard time with this. I don't see it as complex, but others do.

@Roco - 9 times out of 10, I don't even have one implementation. My tests, in particular, are used to verify that the API I've created is clean, and nothing more. I find that my approach is a happy medium between brittle tests and ensuring a clean, understandable API.

That's a good point you bring up, though: TDD often makes us abstract things that shouldn't be abstracted. For example, one of my coworkers claims that you can't really do TDD without using TypeMock, because with other mocking frameworks, you cannot mock something that isn't an interface/abstract class, so you can't effectively test your dependencies. I laugh at him every time he says it, because he's under the impression that every class must be testable. But tell me this: For your ICustomerRepository, what classes does it return when calling its methods? I presume some kind of Customer class. Does that class have anything but properties? If it has only properties, does it really need to be testable at all? (I realize that I'm making assumptions about this class, and I could be totally wrong here, so apologies if I am.)

The case of the ICustomerRepository is one that it should be obvious that it needs an abstraction and tests to ensure the functions look the way you want them to; but for a Customer class with only various properties on it, I see absolutely no reason to test that object: When will you ever change how the properties are implemented? Fact is, that will almost never happen, but so many people think that it's necessary to put an abstraction to that class, and that is where I begin to get physically ill.

Rocco, I don't understand your point, it seems off-topic. Sure interfaces can have only one implementation because of testatbility 100% agree.

But if I mentioned the 'fool me once, don't fool me twice' principle, it was in the context of the Ayende post, where when 'Keep It Simple' is not respected, this can lead to overly-complex code.

@Patrick

OK I think we agree then. I was just referring to your comment "It means never create an abstraction for one occurrence".

@Kyle,

I think we agree for the most part. However, I'm not even talking about testing the concrete implementation of ICustomerRepository, I'm talking about testing a controller or other class that depends on it. In that case, I want to isolate the dependency and return canned results. That (forget TypeMock for now) involves creating an interface so that I can use a mocking framework to control how that dependency behaves. So we need interfaces for things like this, and a larger codebase as a result. I don't find that complex, annoying yes, but not complex.

As far as testing properties on a class (i.e. a set and get assertion), I agree with you 100%. That's just silly ;)

@Patrick

The problem starts cropping up when your simple helpers simply grows and gets more complex, especially with specification changes.

For example, tomorrow your client ask to be able to set the max length value in a configuration file. Now, you have one helper, but probably need to duplicate the configuration in 2 config files (presentation and business) if they are 2 processes. So, you saved yourself 1/4 of the work, but still is stuck with duplication. Will you implement complex config management to reduce your duplicate config line with mergeable config files?

Next, your client want to customize the max length in an admin screen, which is persisted to the DB. Now, does the helper executes data access? You'd end up with direct DB hits from the presentation, and thus would need to pull up all configs and the whole DAL there?

Or you could even add an if statement in the helper that checks if you are in the presentation or business layer. You need to keep state then. In the presentation layer, it would call a business service to validate it... which is pretty much the same as letting it being handled by the business itself.

Since you have an helper, you are reluctant to look at really simple solution, like pulling up validation parameters inside your DTOs when you get them from your services and then simply put maxlength in your textbox with that parameter, or do some nice interactive visual validation thingy; your not restricting your presentation layer at all. Only the business accesses the DB and each layers validate the data. That would be faster and simpler than having a super complex and intelligent helper classes with optimizations... wouldn't you agree?

I think that's Ayende's point. When you make complex code to handle complex problems, it usually follows that it gets more complex for each new situations, and sometimes that process shadows the real simple and easy solution that you might have otherwise seen.

Some of these posts point out a problem with TDD - the dilution of any architectural significance of an interface.

Typically an interface should represent some meaningful architectural concept. Like IUnitOfWork. It means something - in this case a dependency injection, a representation of functionality that may be needed in, for example, a domain model but that functionality is in fact part of and implemented by another set of assemblies on the other side of a contextual boundary, the persistence layer. In other cases an interface means ICanAlsoBeSomethingElse, i.e. common functionality that is typically implemented by multiple objects.

The structural significance of an interface is very important because whether it is used for common functionality, or used to pass functionality across a contextual boundary, an interface has always represented a contract between various parts of code that needed to always be honored.

But in a typical TDD application, interfaces become too meaningless because they no longer serve any real structural purpose within the application outside of testing, and they no longer represent a real contract. (Sorry, a contract between application code and the tests for that code does not represent a meaningful contract. Tests are not an "equal" - If you change the code, you write (change) tests. A true contract would imply you have an agreement with the tests that the code will continue to support the interface they expect. That is not a meaningful concept.)

People are too reluctant to admit that things like testing does often appear as an artifact in the final code. But it does anyway, and when you compile an assembly that includes a bunch of interfaces that are used for nothing more than tests, the test themselves are stripped out but your code is still full of artifacts in the form of all those interfaces that mean nothing to the resulting compiled functionality.

There needs to be a more declarative approach. ISomeObject should always mean there is a true contract implied somewhere, If an interface is only for testing, declare it that way. Make it ITestSomeObject so that it advertises what it is for, and it is clear there is in fact no real contract implied.

@Karhgath

Really I don't see any problem. I have a simple helper static class stateless that answer simple needs. Tomorrow specifications change for something more complex. Then I eventually refactor my helper class and move to something more elaborated, with data access, interfaces, IoC and whatever is needed.

Absolutely not. I am not reluctant to anything because I have a helper class. If there is a need to refactor, let's refactor!

@Patrick

Yes not you, and not most of us. We'll refactor to different solutions. But unless you are working alone on a project, other (greener) programmers will mess things up. It might even go through code review since it might be seen as clever and complex. But that's not a good argument at all as I think of it.

Mostly, you didn't really gain much at all with a IsUserValid helper shared between business and presentation. Also, you might (and usually will for large software) have 2+ teams, one doing the presentation logic, another doing business logic. So unless the helper is an architectural choice made early, I doubt it would come to life by itself. Also specs might not change, but infrastructure requirements might and force you to put the helper in an assembly deployed to 2+ places, which makes things a tiny bit more complex in some situations. You're adding coupling to reduce code repeat.

The main point is that validation in business is rarely the same as validation in the UI, except for really simple and obvious things. For example, it's hard to validate Email addresses fully in the UI (not impossible tho), you probably will just check the format with a regex, and do a more complicated validation in the business(email confirmation maybe). That's still validation, it just became a business process.

That's my experience anyway. Maybe it's just that the validation example isn't such a great way to represent Ayende's reasoning tho.

I do believe there is a good middle ground between DRY and SRP, and that most of the time, if not all, they come together beautifully with the correct implementation. Like anything else, don't do DRY just to do DRY. Same with SRP actually.

I believe good code is mostly simple, mostly factorized, and mostly decoupled. Sometimes you need to couple something to be able to factorize it and make it simple, sometimes you need to add complexity to something to be able to factorize it and decouple it, other you must repeat yourself to be simple and decoupled.

Like all things in life: choose 2 of 3... but not always the same two. Good choices at the right places are what makes good codebases.

Comment preview