Normalization is from the devil

The title of this post is a translation of an Arabic saying that my father quoted me throughout my childhood.

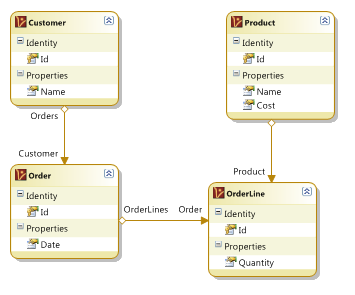

I have been teaching my NHibernate course these past few days, and I had come to realize that my approach for designing RDBMS based applications has gone a drastic change recently. I think that the difference in my view was brought home when I started getting angry about this model:

I mean, it is pretty much a classic, isn’t it? But what really annoyed me was that all I had to do was look at this and know just how badly this is going to end up as when someone is going to try to show an order with its details. We are going to have, at least initially, 3 + N(order lines) queries. And even though this is a classic model, loading it efficiently is actually not that trivial. I actually used this model to show several different ways of eager loading. And remember, this model is actually a highly simplified representation of what you’ll need in real projects.

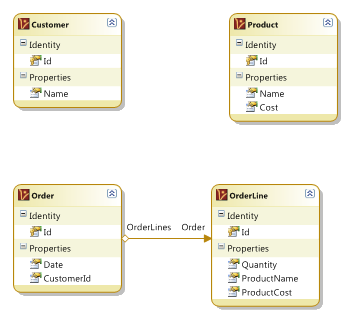

I then came up with a model that I felt was much more palatable to me:

And looking at it, I had an interesting thought. My problem with the model started because I got annoyed by how many tables were involved in dealing with “Show Order”, but the end result also reminded me of something, Root Aggregates in DDDs. Now, since my newfound sensitivity about this has been based on my experiences with RavenDB, I found it amusing that I explicitly modeled documents in RavenDB after Root Aggregates in DDD, then went the other way (reducing queries –> Root Aggregates) with modeling in RDBMS).

The interesting part is that once you start thinking like this, you end up with a lot of additional reasons why you actually want that. (If the product price changed, it doesn’t affect the order, for example).

If you think about it, normalization in RDBMS had such a major role because storage was expensive. It made sense to try to optimize this with normalization. In essence, normalization is compressing the data, by taking the repeated patterns and substituting them with a marker. There is also another issue, when normalization came out, the applications being being were far different than the type of applications we build today. In terms of number of users, time that you had to process a single request, concurrent requests, amount of data that you had to deal with, etc.

Under those circumstances, it actually made sense to trade off read speed for storage. In today’s world? I don’t think that it hold as much.

The other major benefit of normalization, which took extra emphasis when the reduction in storage became less important as HD sizes grew, is that when you state a fact only once, you can modify it only once.

Except… there is a large set of scenarios where you don’t want to do that. Take invoices as a good example. In the case of the order model above, if you changed the product name from “Thingamajig” to “Foomiester”, that is going to be mighty confusing for me when I look at that order and have no idea what that thing was. What about the name of the customer? Think about the scenarios in which someone changes their name (marriage is most common one, probably). If a woman orders a book under her maiden name, then changes her name after she married, what is supposed to show on the order when it is displayed? If it is the new name, that person didn’t exist at the time of the order.

Obviously, there are counter examples, which I am sure the comments will be quick to point out.

But it does bear thinking about, and my default instinct to apply 3rd normal form has been muted once I realized this. I now have a whole set of additional questions that i ask about every piece of information that I deal with.

Comments

Not sure I agree.

Ideally your OrderLine entity should be replicating most of the fields from the Product entity for historical reasons, eg. the Product price, name, description etc. might change, but you want your OrderLine to show how that data was at the time of the order. However, I'd still keep the OrderLine -> Product relationship intact just in case you might find it useful, but make it Nullable for when the Product gets deleted.

Now it seems you only have 3 look-ups for the Order screen, the Customer, Order and collection of OrderLines. The current state of a Product linked to an OrderLine isn't critical IMHO, so maybe that data should be loaded up on demand (via Ajax perhaps).

You can still keep your foreign key relationships intact, and keep things normalised, while reducing query look-ups and helping performance. This is how I'd approach it.

Normalization is not a form of data compression, it's rather a means for avoiding data duplication. And I think it's used mainly because it helps maintain data consistency, not because hard disks are small and slow.

s/was of eager loading/ways of eager loading

Thanks, fixed.

Very interesting post. What I find particularly interesting is that although I don't generally associate the aggregate root concept in DDD with the "query" side of things (rather, I tend to arrange my aggregate boundaries around ensuring invarients are maintained), you make some good observations on scenarios where thinking about the problem in this way really makes sense - after all, aggregate roots should be responsible for maintaining consistency boundaries, and this seems to meet that description quite well.

Don't see the problem as normalization, I see the problem as ORM mappers.

Normalization is only one step in database design. As I learnt it, the main intention isn't to reduce storage cost, but logical cost - ie, getting a clean data model.

Denormalization is an optimization step, and like all optimizations should be done in tandem with performance metrics to measure their impact. It can be very useful and powerful, but "with great power ..." etc etc.

It's still important to go through the original normalization stage, and ask the explicit questions you mentioned, like "What happens when a product definition changes?", etc.

I think it's better to answer to these questions explicitly in your model, rather than throw out normalization and happily notice that it's solved a problem you didn't think to ask originally.

Also remember that storage space is important in 2 ways, 1 cost of storage, 2 - cost of IO.

Unless you're assuming that your database will always run in RAM (admittedly possible these days), IO is going to be massively important. Common data in a common table can be cached, denormalized data that's only used in explicit queries can't be.

Even if your database is fully RAMable, network IO might still be important, and writes will need to go to some sort of slower permanent storage at some point before a transaction can be considered complete.

And you don't have to throw out the concept of views, or assume that your orm mapping must be 1->1 when it comes to queries for viewing things. If you have a view that joins the orderlines to their corresponding products, a query that retrieves the orderlines through an appropriate view will get the associated product details in a single query, no O(N) network round trips required.

Views can also be writeable, meaning that you can still change quantities, add things to it, etc.

Yes, Joins can be expensive, which is why dba's prefere to be in control of the queries that get run against a database (ie, views and stored procs), but if your optimization is to prevent a query for each orderline, you're so far away from worrying about the internal cost of joins it's laughable.

ORM annoys me in general. They appear to encourage a very naive and shoddy way of handling data. The very idea of running an individual query for each line of an order makes me feel extremely uneasy. An object in a program != line in a database, they're temptingly close but what your complaining about are the symptoms of this abstraction leaking very badly.

Mangling the database design probably isn't the best idea. These are not new problems, these are solved problems brought back to life by dumb tools.

@JimT

Be careful about hating on the tools. Nothing in an ORM such as NHibernate forces you to build and execute poorly performing queries. Instead I think it is developer ignorance. You just can't use an ORM and expect it to magically solve your database access problems. You still have to do the database work such as schema and query optimization. The ORM just makes it easier to translate that work into a code model, thus making it easier for developers higher in the stack to get their work done consistently.

The developer using the ORM to create mappings and queries needs to be aware of the performance implications of certain queries (IO, CPU, and memory costs) at the database level.

Seems to me like your problem isn't normalization, but dealing with temporal data. Essentially, adding the dimension of time to the data model.

Notice that your questions (changing “Thingamajig” to “Foomiester”; a book ordered with a woman's maiden name) have everything to do with the date/time an entity had a certain value, and having to connect between versions of the same entity.

Temporal databases are a whole other can of worms, but I'm pretty sure normalization is not the devil here.

Normalization isn't for preventing storage, it's for preventing redundant data, so you don't have to update many rows to change e.g. someone's name.

An ORM with a normal eager loading system can deal with your requirement in 3 queries and not having to surrender to denormalization, so updates and inserts are efficient. Think back at your set-based updates in ravendb which were a pain to add (if they ever made it)

Of course, you can also decide to fetch a simple flat list, a projection for the visualization. (Effectively doing denormalization in the projection). Without having to give up normalization.

@JimT: I think you should do a little bit more research about how modern ORMs solve this, e.g. prefetch paths are easy to use and can give you easy fetching of graph like data trees like the one of the article.

Interesting. So if someone uses flawed business model, then denormalization will fix it for him.

The problems come when you have to update the same data in 10 different places because data tends to be interconnected in real businesses. This is hard to test for when you make changes because you have to remember which places to test. There is a tradeoff in dev time involved because it seems to be that the nice examples where time was saved by denormalizing are much fewer than the ones where time and quality were lost.

The maiden name example goes any way, and that is completely up to a customer requirement. As has been said before, normalization is against data duplication and pro data quality. You must have migrated some database from the times where there was no referential integrity? Sometimes it's absolute garbage what comes out, the same customer appearing multiple times, etc. - If you're lucky to recognize it.

I have used a fair deal of denormalization myself, but if you lose the information of your normalization, how can you undo data garbage?

If you take your 2nd model, how is the ordered product correlated with the product if the product name changes? The first monthly sales report on "Foomiester" will really suck if you change the name on the 29th!

Mostly agree with post!

Let's go step by step how I come to realize that Denormalization is more required, than Normalization in current Web and Enterprise development (maybe will repeat somebody, but anyway):

a) In Web 2.0 sites ALL data in memory anyway (i.e. you use or ala memcached or MySQL Cluster or ... ) - ALL your data IN memory and it's requirement, it's not just "recommendation" :) Memory is CHEAP, so you can put as much instances of cache as you want and it's cheaper than persistent storage! 16-64Gb in servers is "default" now. In Amazon Cloud you can get it very cheap!

From another hand, like Ayende expain - persistence storage (SCSI HDDs really + RAID or network storage) now also VERY cheap and with terabytes capacity... What this means?

YOU CAN STORE DATA AS YOU WANT IN STORAGE :)

No Normalization required now! Store data the way you WANT! :)

b) But what happens when cache fails for some reason or when you bootstrap system after restart? You want to be able to read data as quickly as possible - and Denormalization is big ++++ for this (like people tell - less joins - more quickly you can load data etc)

c) Last time it seems like most implementations of high performance / scalable databases become more like distributed hashtables with build-in replication, fail-over etc, not SQL! Look at Membase, Azure Table Storage, SimpleDb for examples... Such storage's naturally "relations less"! So you maintain relations only in your domain model, but not in storage itself! And because you can't create relations (or it's just not effective) - again, Denormalization is your way to go...

d) If you read say MySQL Cluster DB references or ask somebody who use it - you can found that JOINs really KILL you :D

Store ALL data in entity! Do not "split" entity between tables, unless you know why you do this (and 100% not to save storage space ;-) agree). It's more easy to get something from one shelf than from 10 :) Your logic to extract data become more simple, you can now grow without grow of your stored procedures and amount of joins in them! :)

ah and last :)

e) To kill arguments like "so because of a) - d) you will need now to do 10 inserts / updates / deletes instead of 10 joins" :D

Yes, I will :) But in most cases I can do it "asynchronously"!

And even if I can't (say it's transaction), I can wait to insert data to 10 tables, because all this tables NOT in active usage actually! Remember, all data in cache right? ;-) So while I update persistence storage, another 1000s requests get data from cache and do not create "locks" in database (even with row level locks like in InnoDB for example, it's still a problem to handle big load if your SPs with 10s, if not 20s, of "Joins"!)

evereq, how do you deal with the dev time needed to implement all of those updates?

I don't know...sometimes i ask myself, what kind of applications you're working on in the real world. We build software for hospitals from small healthcare centers to big hospitals. Let's stick to the above example of products...one product is used for many patients...the price can be different for every patient, depending on the benefactor, the date and the duration of the inhabitation. If a patient stays three weeks in the hospital, the price maybe x per day for the first week and y per day for the remaining 2 weeks If he stays one week longer the price z per day comes into play for all four weeks etc. After all the price may change afterwards because of legal regulations or alterations of contracts with the benefactor.

All of this must be calculatable for billings and exactly traceable in statistics. I don't know how this should be done using a denormalized model...but maybe i'm to blind to see it. At this point i have to agree with Jim T. Most ORM tools are leaning towards technical and architectural satisfaction of their developers but leave out some real world needs...

What you are doing here has nothing to do with denormalization. You are not storing the same information twice by keeping the product price on the order line--it's a completely separate fact. One is "the currrent price of the product" and the other is "the price of the product at the time of purchase". This is a pretty common misunderstanding about (de)normalization, and what you are doing is extremely common in normalized models. In fact, I use this type of thing as an interview question about (de)normalization (using a shipping address example).

Another approach that can be taken is to use slowly changing dimensions for things like name and price lookups. This would not address your issue with multiple queries, though (it would in fact require more queries). I personally don't see this as a problem.

I think not storing the product Id in the order line is more likely to cause problems--what if someone wants to know how many times a certain product has been ordered in the past year? Unless you're writing the same info to a reporting database at the same time, you won't be able to find this kind of information.

@Tobi Yeah, updating denormalized data sucks, and you have to remember, which data has to be updated and where. It's pretty annoying and should be handled in convention over configuration matter, probably by database itself.

I think Ayende is mixing busines model with business reports.

"If the product price changed, it doesn’t affect the order, for example"

If your business rule is "the product price in the order is constant", then you have to create a new order object, probably for reporting reasons, anyway.

"The problems come when you have to update the same data in 10 different places because data tends to be interconnected in real businesses."

exactly. once you get past these trivial shopping cart or blog apps, you actually really start caring about data consistency.

and that top problem could be solved in a single view if you're rolling your own SQL. with any kind of sensible indexing it'll be plenty fast enough.

As I see document databases now (after using couchdb and ravendb), they purpose is to be intelligent persistence storage for reports, in the other words, _immutable data_, like twitter or facebook stream is. Trying to implement living business model, or event bend your business model to work with denormalized data, is something like shoot yourself in the balls.

OrderLine is Price, Quantity, ProductName and ALSO ProductId (horror FK)

Because there might be an additional 10 attributes of product I do not want to repeat in every order line, and helps answer business questions like how many ravendb enterprise licenses did we sell when we had that discount of 20% going for the early adopters.

Normalization is not there in a RDBMS to reduce storage, it also exists to report on important metrics that keeps a business alive!

And I do not buy the "let them eat cake/do ETL" argument - a good normalized data model has it's place in the world.

Whether in your CODE you want to have a back ref from Order to Customer, or would rather prefer a CustomerId is your choice.

If the person changed her name in your system, why not store that this person actually CHANGED her name, instead of wiping her of your system by replacing her name?

Having just attended some courses about CQRS and Event Sourcing, It seems that it would solve lots of the problems you are raising here.

Storing the EVENT in a simple document database on the Write side , and create a Read Model that fits as well as you can what ou need to show on the screen.

As you say, nowadays system have very different requirements than the ones from 10 years ago. CQRS and event sourcing provides both fast write (in the event store) and fast read (in a highly denormalized Read Store. The price you pay is to accept Eventual Consistency, but it seems worth it with nowadays requirements.

What do you think of this kind of architecture?

I'll chose to support and work with a normalized model in nearly every situation; you can de-normalize what you want to fit any model you chose via views.

Going from de-normalized to normalized is the devil you speak of...and you'll only know you need until late in maintenance life-cycle of the system. More likely, since your a consultant, you'll have short term gains and inflict long-term pain on those who need that data after you are gone. This is an anti-pattern to the nth degree.

Geez, the limitations of your data access layer has permeated your db design.

In other words, you have tightly coupled your interface to your data store, which is almost always bad in the long term. The schema should be generic in terms of the interfaces that access it.

@tobi:

"evereq, how do you deal with the dev time needed to implement all of those updates?"

Short Answer: I anyway need to implement such logic, at least for cache in very rare situations, when I DO need to support edit of data! So I will just do it :) and time it will take to code this, will be 100% not bigger than my save on hardware / cloud costs later ;-)

More complete answer:

a) In most cases, I do not implement it at all :) It's really rare now that some data can be changed after you store it! I.e. 99% of cases, data 'readonly' anyway. :) Let's say Countries, Cities, States, Product Information (images, description, ..., except maybe price) - all this data really changed very rarely! And in many systems like Ayende point, you DO need to change this data only in one place, not in all existed records! I give another example: say bookings for hotels! Somebody made order for Hotel, and hotel was say 5 stars at that moment :) Then, say in 3 month, this man really check-in and see that hotel not 5 stars anymore, but only 3 ;-) . He open his order in online reservation system and see that "f..ck" hotel only 3 stars! But he remember that it was 5!!! :) So? So you should COPY such data to every order from hotels table (hotel check-in time, say up to 15:00, hotel stars, 5 in our example etc)! Yes, you really should! At least a lot of use cases like this! Sure some data you Do NOT need to copy to all your orders! :) But this is another story :) For data that frequently changed, you should probably create dedicated entities so you can effectively update such data, probably with one to one relations between entities.

b) When I DO need to change all records that store same data, I usually anyway implement such logic in caching infrastructure some way! I.e. somebody change say Hotel name in hotels table, and I should change this name in all hotel reservations (actually who tell that I should? This is up to business to decide what to do in such cases... but let's said it was requirement...).. So in cache system I will have anyway some code that should change all in memory instances of "hotel reservations" with new hotel name :) It can be done effectivelly, while sure agree that current ORMs do not very well support such features (or at least I do not familiar with them too much) - i.e. updates / deletes (actually better not to delete, but just mark as "not active") of data in many cases you really should do manually to get maximum efficient implementation! So yep, in such cases, I do code something, but it not increase development time significantly :)

P.S. just read another interesting story that show just a bit different to what Ayende point, i.e. that probably you need sometimes to implement "normalization" DIFFERENT way :)

Read it here: codebetter.com/.../...-effort-into-being-lazy.aspx (as you see published in nearly same time :D)

@Stéphane

I do agree with you. CQRS and Event Sourcing and finally, Read Store is the end of this path. I mean, a good end :) I you wanted to implement your Read Store with NHibernate, you could use events, especially post-updates and post-inserts where you can easily denormalize your data and save in ReadStore.

I agree too.

In some cases I don't normalize knowingly. For example it could be the solutions for problems with low performance queries.

Poor Codd... :-/

Sell price is different than public price for example...

It all depends of the requirements etc.

shameless copy and past...

First normal form: A table is in the first normal form if it contains no repeating columns.

Second normal form: A table is in the second normal form if it is in the first normal form and contains only columns that are dependent on the whole (primary) key.

Third normal form: A table is in the third normal form if it is in the second normal form and all the non-key columns are dependent only on the primary key. If the value of a non-key column is dependent on the value of another non-key column we have a situation known as transitive dependency. This can be resolved by removing the columns dependent on non-key items to another table.

1) First of all, the last app I worked on was a financial case managment system for 1000s of users in 10 states. Definitely not shopping cart material and definitely real world. My current project is for a multi-discipline, multi-location health care organization. Ditto.

I can honestly say having to update the "same" data in multiple places "10 times" or for that matter 2 times has absolutely nothing to do with either real world or real business data. Sorry, that is just a poorly designed implementation. Period. The only data in either these systems that even required what could marginally be considered as "2 updates" was when there is a data warehouse present.

2) there are some problems but it is not inherent to ORM. Too many developers never even bother to look at a DB schema after it is generated and the ones that do are not willing to change anything because they adhere to "best practices" they often do not understand with the same kind of blind dogmatic fervor like the many DBAS who without understanding just say about ORMs are bad.

I regularly map and set explicit Ids across families of objects to ensure that the database has a set of consistent foreign keys across all tables. There is nothing wrong with mapping Ids for database persistence reasons - the real best practice is you don't do anything with them in your domain model. And if an object knows a little about persistence in the process, big f'ing whoop.

3) But even if I use little or no denormalization, and come with consistently mapped tables, DBAS are still not gonna like it because it uses an ORM and yes it is not the relational only design you would prefer and often could enforce in the past.

Newsflash. Time to move into the 21st century. ORM is a very powerful technology for applications development when properly used, and without applications development, that precious database is not worth even close to enough to merit your paycheck.

Both sides need to cooperate.

Re @Robert: "I can honestly say having to update the "same" data in multiple places "10 times" or for that matter 2 times has absolutely nothing to do with either real world or real business data. Sorry, that is just a poorly designed implementation. Period."

I don't see any problem to do updates for "same" data even in 100 places! :) In case if you do it with consistency and if it can IMPROVE total performance of your system! For 1000s of users you probably do not really need such technology / denormalization at all - agree, for millions you probably do need :) Especially this matter in cases if you have 1000s times more reads than edits in your storage! In many systems we build today, you have this relation much more! Say in MySQL database I monitor exists say 50K reads and only 100 edits per minute!

So why you should limit yourself and care so much about "update" time if it slow down your "reads"?

Denormalization is ONE of technologies that CAN help you :) Sure as always it's depends ;-)

P.S. DBAs have different vision for databases than we (developers / architects) - it's same difference like between developers and sys admins! I will let sys admin to do they job and in MANY cases restrict developers to dial with some production environments! Same way may DBAs really "reject" ORM like data access! They simply "lost" control :) And in some cases it's maybe even make sense :D

I think you are talking about a totally different kind of system. In a complex business system, for example capturing real time data in a healthcare clinical setting, an ORM is used because the entire system tends to be heavily that is very heavy on the O part, I agree with the dbas in some respects and really don't advise much denormalization in this sort of case either. I never do it strictly from a performance view. Sometimes in a really complex inheritance scheme mapping subclass per table causes too much proliferation of tables that have a handful of columns and I may occasionally not pursue a normal form because a table per hierarchy feels like a better approach.

There are some legitimate problems that can result from using ORMs without regard to the database. For example if you have an object that can be both a many to one and a list depending on the associations, the result is inconsistent mapping of foreign keys. That can be problematic if you then have to move the data to a data warehouse using conventional SQL, but it is also an issue that is easily resolved by simply adding a mapping so that the many-to-one entries also get a valid foreign key in the same manner as a list entry.

Developers do need to be concerned about the database structure, and DBAs need to recognize that particularly for complex heavily object oriented applications an ORM is what I consider an essential modern tool, and both sides need to cooperate to achieve the best results.

@Robert: sorry, don't get why you talking so much about ORM, if post actually was more about normalization / denormalization and how we design database today :) You can use ORM and it's cool etc, or you can do raw SQL or .... - the question Ayende point is more about database design itself, than about ORM :) I.e. more about "how better design your schema" in case of RDBMS / document databases / etc... At least this is how I get this post :D Maybe I am wrong? ;-)

@Ayende, it will be cool if you will create another post about "how to dial with denormalized data using NHibernate" so guys like me and maybe others get better picture :) Because it seems like most of comments contain "ORM" word and seems not all developers know how to handle denormalized data using ORM efficiently :) Especially updates ;-)

Few more bits: I think here more like the way functional programming think about immutable / mutable types: I am even ready to go into such extreme like to make a FULL copy of entities (i.e. made entities ala "immutable"), instead of do updates in place! In many cases its actually do make sense to create "immutable" types, so maybe we finally get to such situation in data storages designs ??? ;-) Because it seems like current RDBMS support more "mutable" types (table is mutable!), and as we know true way to scale sometimes really require completely (or partially) functional (persistent) approach! What you think? ;-)

@evereq: actually, as many have pointed out, his post has little to do with denormalization. He is identifying an extremely well-known principle of storing a snapshot of data whose source may change.

This post actually made me cringe a bit, as this concept is something anyone familiar with even the basics of relational modeling would understand, and I think Ayende is way smarter than to present this as denormalization, or to imply (by posting about it) that it is some groundbreaking new way of thinking.

Regarding updating data in 2, 10 or 100 places: yes, it can work, but it should be done with great care and only when absolutely necessary. it should not be done as a sort of premature optimization, it should be done only as a 100% necessary optimization.

The problem with enforcing data rules in application code is that it is prone to failure--developers can miss something, and even if you enforce the data rules correctly when building v1.0, when new devs come on to build v1.1, they may not be as meticulous.

If you need to update data in 100 places, but somehow miss something and only update 99, you are going to have a bug that may not present itself for years, and then have the potential for a data problem that can never be fixed.

There are exceptions of course, but an opening position for any architecture should be:

Enforce data rules in the database.

Enforce business rules in code (BLL or Domain or whatever).

In 99%+ of cases, data is 10000000 times more important than any other aspect of your application, so treating data with the utmost care should always be the first priority of every developer(not just DBAs!).

"Those who cannot remember the past are condemned to repeat it." - George Santayana

@slappy: I've come to a middle in terms of DB design lately, and your post made me think a lot.

A database usually serves 3 purposes:

Store facts

Store states

Store history

These are 3 different things. First, why bunch them all together in one solution? However, I took it further...

Holding fact is exponentially hard the more complex it gets because, facts aren't actually facts, they are facts only facts in a specific context (or dimension, or business view or whatever).

If you really need complex business views, you can use OLAP/star-schema and have dimensions. I've worked in banks with huge OLAP, and still they have to do a lot of work on the data itself because of inaccuracies, incompleteness, errors and such. These are not facts.

Facts aren't the critical part anymore. We could have 2 systems with the same customer, but with different addresses. What's the truth here? We don't know. However, what is crucial is KNOWNING that both customers are the same, in business terms.

So, instead of storing facts, we store knowledge instead. "At the best of our knowledge, this customer lives there." Knowledge also changes over time. Knowledge is then all about state and history. So, in the end, a database stores one thing and one thing only: Knowledge. The data is knowledge, and knowledge is inherently incomplete.

Have you ever worked in a complex system that is 100% consistent, with all data rules enforced in the database with 100% clean data? How much effort did you put in data quality and data validation? How much data is rejected because of quality? Have you ever lost data or had incomplete data? Even after crashes? Incomplete transactions? Backups and restores? How often did you share you data with others? How many systems connect to your database directly? How often did the rules changed? Di you ever forget a data rule and realized it too late?

When you start centralizing facts with global enforcement rules, you end up with a datawarehouse of facts... and if you've ever built one for a complex enterprise domain, I can tell you with utmost confidence that you don't want to do any of that anymore. Even enterprise DBAs and architects are realizing this.

This leaves us with 2 conclusions:

Knowledge can be lost.

Knowledge can be incomplete.

"Can be" ultimately means "will be". Lets acknowledge it and build systems to deal with that. Let's try an example:

A common scenario is having a customer database. With a Customer Service application. This application should worry about it's own context. What do they need to do as a customer service application, how to do data entry, validation rules (which are business), etc.

Then, they share that knowledge with other systems, let's call em consumers. However, 100% of times the data isn't complete for a consumer (for example shipping), they need to aggregate data from other sources, lets say the Order data used by the Shopping Cart application, who itself uses the Customer data.

Which customer information to use? Order or Customer? You might say Customer, because this is the "fact" and entry point, but if you do shipping, you'll probably trust the Order data more in terms of customer information, and the information might be more complete for you. So you enforce Order->Shipping rules for this data. However, you'll probably have to store that customer information in a certain way specific to your needs, that could be then shared to others...

Database is just storage after all, and it does not really store facts. The important part is being consistent with your own data and have explicit data rules. That way, when you need to share it, it is clear what you share and which data transformation to make. Yes you can implement them in the database if you want, if that works for you. But sometimes if you share explicitly data with others(services, etc.), it might be easier for the data rules to be in the business.

Someone is smoking dope. As others have said, the "address at time of order" and "product name at time of order" are discrete facts separate from "current address" and "current product name." All you've done is normalized one thing and denormalized something else completely different that is going to hurt you down the road.

+1 to @Karhgath: 100% agree - it's sometimes one way work and sometimes another way (related to where place validation / consistency rules). And dogma like "have all your data in consistence in DB level" (or "Enforce data rules in the database.") sometimes really CAN be "pushed" to "devil" :D

@Slappy:

a) let's not argument about how to call this technology that Ayende apply :) He describe it right way - he just don't tell anywhere about "denormalization" at all - instead he told "my default instinct to apply 3rd normal form has been muted..." :D :D :D So you can call it as you like - for me if you do NOT apply 3rd normal form - it's "denormalization" (at least probably and hope to 2nd normal form :D)

for you maybe something different :)

b) Re to "If you need to update data in 100 places, but somehow miss something and only update 99, you are going to have a bug that may not present itself for years, and then have the potential for a data problem that can never be fixed." ... yep, you or me can miss whole a lot :D We developers made bugs, but I not sure it's will be really "a problem" to fix such a bug ones you found it ;-) You can always create some additional logic to do checks for consistency in storage, more so in some storage's like Azure Table Storage even MSFT engineers DO implement some "retry" logic to simulate transactions etc etc :D

c) Re to "In 99%+ of cases, data is 10000000 times more important than any other aspect of your application, so treating data with the utmost care should always be the first priority of every developer" :) OK, NOT agree :) If you application store data correctly and even enforce all data rules in Database, but does not fit some non-functional requirements like Performance, Scalability, Efficiency or even Security who will use it!??? Yep, you should NOT lost your data in any case, but sorry I did not see how fact that you store data in 100 places can give you some "issues" with possible data lost ;-)

@Karhgath:

This is the very definition of a fact in the database. "Fact", in terms of data, does not mean the same as "fact" in the sense of an absolutely certainty (like a mathmatical fact).

From the database's perspective, these ARE facts. If my name is spelled incorrectly in the database, it's not a fact? Something being incorrect or incomplete does not mean it's not a fact (in the database definition).

I don't understand much of the rest of your post--it seems that most of it is predicated on not understanding the two different definitions of the word "fact". You also have a whole paragraph of questions that are meant to be rhetorical? I say that because I don't see the point of these questions. Because data can get into an inconsistent state in some situations (which can all be mitigated btw), we should abandon trying to achieve consistency?

Finally, the customer/order example is not a good one, as no one would argue against this type of storing snapshots of information. As others have pointed out, it's not a question of normalization or denormalization, it seems more of a misunderstanding of what those terms mean.

@evereq

I do agree with this, of course. You should indeed consider all tools when building something. However, for most data-dependent applications, a properly normalized model is the correct choice (or at least a good choice) a very high percentage of the time. There are a LOT of benefits to enforcing data rules in the database, but I'm sure I don't need to retread those arguments.

for you maybe something different :)

No, it's not different. Like many others here, I am saying that it does not violate TNF, and is therefore not denormalization.

The difference is that a good RDBMS will enforce the rules for you out of the box, whereas if you have to write the code yourself to enforce the rules, it is much more prone to creating these bugs. Everyone loves the expression "if all you have is a hammer, everything starts looking like a nail". This is a case of "If you have a hammer and a nail, stop looking all over the place for a goddamn sledgehammer".

False premise in here somewhere. Databases can't perform well, be scalable and efficient and provide security? If you are writing the next MMORPG or something that requires that kind of throughput, you might start making these kinds of tradeoffs from the start, but there are plenty of enterprise applications in the world that rely on RDBMSs and meet all of these requirements.

@Slappy:

Re "False premise in here somewhere. Databases can't perform well, be scalable and efficient and provide security? ":

Yes, they can! Because why we use them so long?? Actually why we use RDBMS so long is good name for dedicated blog post :D as seems only last time community massively start see ANOTHER ways, including but not limited to document / object databases etc!

BUT sometimes we SHOULD help database engine to meet all requirements together, not only data consistency requirements! And denormalization / other technologies just help us in such cases :D

Reading most of comments, I do understand that whole a lot of developers just don't come to situations where they must search for another solutions or tweak existed... RDBMS works really well up to some extend :) just make sure you know how to help them to do they job better :)

From what I recall, WOW backend is oracle...

Any financial application is built over oracle/ms sql/sybase.

Happily that rdbms can't scale...

I think Rafal said the one thing that really needed to be said: normalization is not a form of compression.

To elaborate a bit, I doubt that you'd find much in the original relational literature (Codd et al) to support the claim that normalization has any relationship to storage cost. It's a mathematical, not economic, construct. Its goal is to reduce duplication of data to avoid anomalies - i.e. inconsistency. Sometimes you do want to copy the data and allow the copy to change independently, as in the "current price" vs. "price at time of purchase" example. That's fine, because those are conceptually different values and it makes sense to represent or store them separately. On the other hand, normalization has to do with the same value being used in different contexts, in which case it should be represented and stored once - not to reduce storage cost, but so that an update to the value affects all uses of it.

Since the original claim about the purpose of normalization was false, the remainder based on that claim is meaningless.

Hmm, makes me feel awkward to see that even an educated guy like Ayende knows only a little about real world enterprise work. I agree with all those who identified his misconception what he wants to store in the tables in the first place.

Some more thoughts:

Updating 100 places means more lock. If you don't know this problem or not what a DB lock is, you surely lack real world experience in large scale applications.

If you pump billions of transactions per week you begin to care about storage even today.

Indeed you will denormalize for performance. Indexed / materialized views come in mind. It is sometimes needed to have a real table, but that requires triggers or similar which should be avoided as long as possible.

If you need historic data, consider implementing one of the known history patterns for sql databases.

I have the same discussion about the NoSQL in huge enterprises. It might work for some cases, but who has real world experience with it in the weird real work DBs with one gazillion processes you are not allowed to change, craftfully mapped to 200+ tables?

1) Re @Jeff Darcy: "Since the original claim about the purpose of normalization was false, the remainder based on that claim is meaningless."...

Very strange - we read SAME text, but for some reason READ it differently! :) WHERE EXACTLY you see that Ayende say that ONLY ONE "purpose of normalization" IS for example "compression" ???

Here is quotes from blog post:

"normalization in RDBMS had such a major role because storage was expensive. It made sense to try to optimize this with normalization." Yes, it's TRUE! Not only because of this sure thing (and we all know this), but ALSO because of this!

"In essence, normalization is compressing the data" - you do not agree with this??? Take 2 database and compare size of normalized and not normalized! :)

2) Re #Rocky Rocketeer:

"Updating 100 places means more lock. If you don't know this problem or not what a DB lock is, you surely lack real world experience in large scale applications.". Sure agree - more locks :) But maybe in whole a LOT of applications, MORE locks actually LESS problem than for example 100s of JOINs! :D :D :D Exactly in such situations (and a lot of others) denormalization work ;-)

Ah, and with Row level locks, it's actually become much less issue than for example some time ago, when say MyISAM support only whole table locks :)

@evereq

But you DO agree that it WAS one of his points, and that point is COMPLETELY INVALID, right?

No one is arguing that. But the easily inferred conclusion, that one of the major justifications for normalization is/was to save space, is false.

@Slappy

You're right, I should have defined the terms I used. I used the definition of a fact from a datawarehouse perspective, which need to be more scientific: it is a verifiable and provable statement. If your user is misspelled in the DB as "Swappy", it is not the truth, but is used as a fact. The problem in datawarehouses is that facts are useless since they aren't truth. s/fact/truth/ in my post. That might be clearer?

Facts are indeed local to specific applications (local truths). My customer/order/shipping example is indeed very straightforward and no one will probably argue. This highlight the facts that no one single database holds the "truth", just knowledge (or the layman definition of fact, which is just a statement: this user is called "Swappy".)

Today DBAs and architects in large enterprises wants to normalize ALL data and enforce data rules in ONE place. It doesn't mean they are right.

Let's take a DDD principle of boundaries (I'm not a DDD), Order and Customer are 2 different things. You should absolutely normalize what a customer is, 3rd normal form and all, and store it that way. Same for order. But individually and separately.

You'd probably end up with the same model as if you'd took everything together, normalized it to 3rd form and then denormalized it based on needs throughout the project. Most of denormalization cases are because you cross data/object/aggregate root boundaries (Orders history crosses the boundaries of Products and Customers for example).

In Ayende's example, I would have 3 boundaries:

Customer

Order

Product

I'd have a Customer, which is a nicely normalized customer (with multiple addresses with history, and such).

I'd have a Product with related normalized tables.

I'd have the Order, but linked to OrderCustomer(and not Customer) who probably have just a single Shipping Address and a single Billing Address, with only useful information needed for the order, no multiple tables with history and such. We can identified that a Customer and OrderCustomer are the same, even have some type of constraints, but they aren't normalized together, since a Customer is not an OrderCustomer.

Then OrderLine, with OrderLineProduct, and so on.

In my experience, unless you really have simple simple rules and no scalling and extensibility needs, this is a great way to normalize the DB, and reduces denormalization to a minimum. This principle served me well.

The awesome thing is... no, you DO NOT NEED to update 1000+ tables when you update the address of a Customer. Why? Because an OrderCustomer does not follow the same rule and do not need to be updated the same way, they aren't really linked. This is because an address in the context of a customer and in the context of an order, does not mean the same thing! Even if they are the same addresses, they are different because of context.

@Slappy, @evereq

Slappy is right in saying that normalization's main focus is NOT to save space, which makes Ayende reasoning invalid. It was a nice result, and probably a really appreciated one tho, that it saved space.

It was however done for integrity purposes mostly. However, integrity was important back then since, you have one central DB and many applications or processes accessing them. And often you didn't have applications, just a bunch of people accessing it directly. Putting rules and trusting each of them for integrity was not a good approach.

However today, in some fields at least, people are starting to view data as "embedded" in a single business process (and vendor lockins are contributing to this a lot). This data is served by a specific application. If you want to manage customer, use the Customer Management plateform. No you cannot come and play with the Customer data without going thru the app; we'll share data with out with an external process (services, data replication, batch import, etc.). In that kind of environment, normalization is important but have a much lesser scope and "raison d'etre".

This is why I believe Ayende had a valid point, but he missed the target with his reasoning.

@Karhgath: well, I don't want to argue back and forth about it but I still disagree with your definition of fact/truth/knowledge. You make it sound like they are all different concepts when (in terms of data) they are pretty much all the same. What gets stored in the database is (presumably) the best-known information at the time the data was written. If your fact tables have incorrect information in them, they are still fact tables, their contents are just not factual. If my name is in the database as Swappy, then the system thinks my name is Swappy. It's a "fact" as far as the system is concerned.

It doesn't even sound like we disagree on this necessarily, but I don't see how this information sheds any light on the larger discussion.

I don't think anyone is arguing with the method you describe with your example, except it's not denormalization at all (as MANY have pointed out--I'm not going to rehash that) . So if the DBAs and architects you work with would argue for NOT storing a snapshot address (or product price, or whatever) on the order/orderline, you are working with DBAs and architects who don't understand TNF.

Jim,

It isn't ORMs that I am talking about here, it is the relational model.

Admittedly, ORM can mask the cost of traversing the graph, and small objects in the OO world is an anti pattern in the relational world if you have 1:1 mapping.

That is why NHibernate allows you to split a single table into multiple objects, maintaining the best view in both worlds (using the component tag).

Jonathan,

snort, I worked extensively with temporal databases, and to be frank, you can't really get a better example of why RDBMS are painful than using them in that scenario.

Just to give you an idea, we had a system where every single property had to be temporal. And we had to track the change on each of them independently.

That was a PITA, to say the least. And query performance when you had to access those temporal data sucked (show me the employee's salary for Nov 2009, where you had to show the employee name as it was at that time, for example)

Frank,

You keep a reference to the id, obviously.

My point is that "let us make everything a reference" is usually something that requires consideration.

I usually like your posts but this is the first post in a long time where I had a wtf ayende what are you thinking :-).

As a db expert you don't really know about BNF? By the way, in your tiny model I would expect something like a product SKU to use instead of a name as it would be silly to use a name.

But your posts clearly shows to first look at your data. Then normalize it and possibly denormalize it for performance reasons. Which is quite logical in most environments.

@Frans,

a) except that normalization has been drilled so hard into people's head that it has became not only the default, but the "you must do so". That is something that requires thinking about, for scenarios such as the one that I mentioned in the post.

b) even with good ORM support, load data from a large number of table is going to be costly.

For example, consider:

Order.Payments

Order.OrderLines

Order.Discounts

Order.Payments.Transactions

Order.SupportCalls

Loading all of that is a costly thing, no matter how you do is.

Also, not having the foreignkey references explicit is quite normale. They are three seperate models with possible three different databases or even services.

Also very normal design pattern for apps being developed nowadays with concepts like composite views in f.e. the browser.

Stephane,

That works in some systems well, but that requires that you'll have two models.

And it doesn't help when you need to look at previous data (what was the name on Nov 2009 ?)

And I am actually more concerned with the read perf, not so much with how you store the data

Evereq,

I deal with denormalized data with NHibernate all the time, that is what the component tag is for.

Ramon,

BNF?? Backus–Naur Form ?

That is a way to define a syntax for a language, I think that you are talking about something else.

@Ayende,

Sorry :) sometimes I type faster then I think. I just ment NF. Like 1NF, 2NF, 3NF, etc.

Re @Ayende "I deal with denormalized data with NHibernate all the time, that is what the component tag is for."

Sure you deal :)

What I see from other comments that NOT everybody know how to dial with it and especially how to dial efficiently (especially with Updates in DB) :)

Maybe that's a reason why a lot of people refuse denormalization like strategies (or at least afraid to use it with ORMs?)

That is why I see sense to have more information (via your posts for example) about Denormalization in ORMs (at least NHibernate!).

Sure up to you :)

I'm not sure if someone has mentioned this already, but this is where separating the transactional db from the reporting db comes in. And this is why CQRS is gaining traction. The problem you bring up (regarding querying highly normalized data) can be solved by exporting the transactional data to a reporting DB.

I think that is the solution to the read/write compromise. DON'T! Write to a normalized transactional database (or even better an event store), export to and read from a de-normalized query database. It makes everything simpler. The transactional DB remains you're ONE TRUTH while the query DB provides quick reads.

I am definitely with Mr. Brown here.

There should definitely be a separation in data access strategies in analytical vs transactional approaches.

It would be much more common to require de-normalized historical information in a reporting context. That can be easily achieved with an analytical extract that is completely denormalized and THEN indexed.

For high volume transactional processing data integrity is a much bigger concern than space and even performance. Data integrity is the cornerstone of the normalized relational model advantage, not space considerations.

I understand Ayende too though. Any developer above average is usually obsessively concerned with performance, sometimes at expense of usability and maintainability, especially if he is part of a larger team that consists of a mix of different kinds of developers that are not necessarily above average, but do know how to copy and paste well. What works for Ayende because of his exceptional design skills will not work for the copyandpasters. They need simple rules that can be engraved on their skulls, not erotic DB arts and crafts that are featured here.

The problem with OrderLine and Product isn't caused by too much normalization. The problem is caused by taking an entity that should be a snapshot in time (OrderLine) and associating it with an entity that is not designed as a snapshot in time (Product). To make this correct, you would need to use something like a ProductVersion entity in place of Product that has a start and end date. Any time a change to the product is accepted by the people responsible for products, a new ProductVersion is created, and new OrderLine entities would use this new version of the product.

I agree to some extent regarding the fact this isn't normalisation, the price of an item on an order is not duplicate data of the "stock" price in the product table, because it can change.

However I wouldn't break the link between order line and product, you still need it there. Why ? simple - the boss says "tell me our top 10 products" - you can't do it because all you have to rely on to identify a product in the OrderLine table is the name - which could change.

If you kept the Product ID in OrderLine you'd be able to reliably and safely identify each product sold.

Taking it one step further, imagine you want to track purchases to offer other products (like amazon do for example).

In summary I'd say this was an over simplification, not de-normalisation.

It may be convenient for an ORM but I don't think its very useful in the real world.

The "ProductCost" (on the OrderLine table) would only need to be "normalized away" (to the Product table) iff there is a business rule stating that the ProductCost is uniquely determined by something less smaller than a candidate key of OrderLine (ProductId, in this case).

[And similarly for ProductName, of course]

In the absence of this business rule (e.g. where there is the contrary business rule that the ProductCost on an order line must stay constant even if the ProductCost in the Product changes later), removing ProductCost from OrderLine to Product in this way isn't normalizing -- its failing to implement the requirements properly.

The temporal aspects tend to bite people because the business users who are writing requirements will often think in terms of the rules that apply at a point-in-time -- so may well state that OrderLine.ProductCost is uniquely determined by the ProductId, but omit the implied qualification "at the time the order is made". Its the job of the requirements analyst to find out what the real requirement is.

[And, as MarkC noted, dropping the link to Product is crazy -- particularly as we are anticipating product name changes, so there would be no way to link the record back up again afterwards.]

Ayende's design may well be the right one for certain sets of requirements. Its basically a modified temporal model, where we only retain the historical values of ProductCost for products if there was at least one order using that value of ProductCost, and we physically denormalise that historical record to OrderLine, on the understanding that it is archive data and thus constant and therefore not problematic from an 'update to multiple copies of denormalized data' p-o-v.

But without understanding the requirements, its impossible to tell.

Guys, very exciting discussion here. You are for sure experts in your domains - massive websites are totally different to intranets and ERPs with predictable users loads and uncomparable for read/write ratios, but RDBMs are still the best known thing to data integrity and if someone invents better approach, it will be for at least Turings if not Nobels prize, I think.

@karhgath sed probably full truth and I like whats @Browns sed too. But also @evereq interrests me considering "immutability", as I posted similar question here bit.ly/9nPSXn (no wars please:-), related to "entity instances versioning". I simply dont know if some existing RDBMs supports this approach or at least if some ORMs are aware of this. Could someone reply with some theory and/or products which can do such data records timestamping to generic relations history support? I know only already dead one from MS-DOS (even CP/M era) to which I was almost addicted, and frustrated to develop larger DB apps without it :-)

According to "Customers name" issue, when TYPO occurs, I would like to update already existing (logically immutable) version, but when MARRIAGE occurs, then create new version with the same ID but new TS and appropriate changes. Possible somewhere??

Thanks a lot in advance!

Comment preview