Abstracting the persistence medium isn’t going to let you switch persistence abstractions

This came in as a comment for my post about encapsulating DALs:

Just for the sake of DAL - what if I want to persist my data in another place, different than a relational database? For instance, in an object-oriented database, or just serialize the entities and store them somewhere on the disk... What about then? NHibernate cannot handle this situation, can it? Wouldn't I be entitled to abstract my DAL so that it can support such a case?

The problem here is that the question assumes that such a translation is even possible.

Let us take the typical Posts & Comments scenario. In a relational model, I would model this using two tables. Now, let us say that I have the following DAL operations:

- GetPost(id)

- GetPostWithComments(id)

- GetRecentPostsWithCommentCount(id)

The implementation of this should be pretty obvious on a relational system.
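As a minimal sketch of what that relational implementation might look like, here are the three operations in Python over SQLite. The table and column names are assumptions made for illustration, and since the post does not say what the `id` parameter of GetRecentPostsWithCommentCount means, the sketch takes a hypothetical `limit` instead.

```python
import sqlite3


def create_schema(conn):
    # Two tables, as in the relational model described above (names are assumed).
    conn.executescript("""
        CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, created_at TEXT);
        CREATE TABLE comments (
            id INTEGER PRIMARY KEY,
            post_id INTEGER REFERENCES posts(id),
            body TEXT
        );
    """)


def get_post(conn, post_id):
    row = conn.execute(
        "SELECT id, title FROM posts WHERE id = ?", (post_id,)
    ).fetchone()
    return None if row is None else {"id": row[0], "title": row[1]}


def get_post_with_comments(conn, post_id):
    # One query: the join brings the comments along with the post.
    rows = conn.execute(
        """SELECT p.id, p.title, c.body
           FROM posts p LEFT JOIN comments c ON c.post_id = p.id
           WHERE p.id = ? ORDER BY c.id""",
        (post_id,),
    ).fetchall()
    if not rows:
        return None
    return {
        "id": rows[0][0],
        "title": rows[0][1],
        "comments": [r[2] for r in rows if r[2] is not None],
    }


def get_recent_posts_with_comment_count(conn, limit):
    # One query: the database does the counting.
    rows = conn.execute(
        """SELECT p.id, p.title, COUNT(c.id)
           FROM posts p LEFT JOIN comments c ON c.post_id = p.id
           GROUP BY p.id, p.title
           ORDER BY p.created_at DESC LIMIT ?""",
        (limit,),
    ).fetchall()
    return [{"id": r[0], "title": r[1], "comment_count": r[2]} for r in rows]
```

Each operation maps to a single SQL statement, which is why this API feels natural on a relational store.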

But when you move to a document database, for example, suddenly this “abstract” API becomes an issue.

How do you store comments? As separate documents, or embedded in the post? If they are embedded in the post, GetPost will now return the comments as well, which isn’t what happens with the relational implementation. If they are separate documents, you have complicated the implementation of GetPostWithComments, and GetRecentPostsWithCommentCount now has a SELECT N+1 problem.
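To make the trade-off concrete, here is a rough sketch using a toy in-memory document store that counts round trips. `DocumentStore`, its methods, and the key layout are all hypothetical and do not model any particular document database.

```python
class DocumentStore:
    """Toy in-memory document store that counts round trips (hypothetical)."""

    def __init__(self):
        self.docs = {}
        self.requests = 0

    def put(self, key, doc):
        self.docs[key] = doc

    def load(self, key):
        self.requests += 1
        return self.docs[key]

    def load_by_prefix(self, prefix):
        self.requests += 1
        return [doc for key, doc in sorted(self.docs.items())
                if key.startswith(prefix)]


# Layout A: comments are embedded in the post document.
def recent_with_counts_embedded(store):
    posts = store.load_by_prefix("posts/")  # 1 request, comments come along
    return [(p["title"], len(p["comments"])) for p in posts]


# Layout B: each post's comments live in a separate document.
def recent_with_counts_separate(store):
    posts = store.load_by_prefix("posts/")  # 1 request
    result = []
    for p in posts:
        comments = store.load(f"comments/{p['id']}")  # +1 request per post
        result.append((p["title"], len(comments)))
    return result
```

With N posts, layout B issues N+1 requests to answer the same question that layout A answers in one. The price of layout A is that every load of a post carries its comments, whether the caller wanted them or not.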

Now, to be absolutely clear, you can certainly do that, and there are fairly simple solutions for it, but this isn’t how you would build the system if you started from a document database standpoint.

What you would end up with in a document database design is probably just:

- GetPost(id)

- GetRecentPosts(id)

Methods for eagerly loading stuff just don’t happen there, because if you need to eagerly load something, it is embedded in the document.

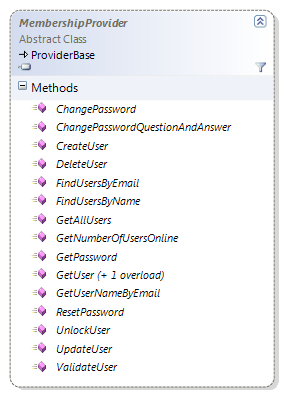

Again, you can make this work, but you end up with a highly procedural API that is very unpleasant to work with. Consider the Membership Provider API as a good example of that. The API is a PITA to work with compared to APIs that don’t need to work to the lowest common denominator.

When talking about data stores, that usually means limiting yourself to key/value store operations, and even then, there is stuff that leaks through. Let me show you an example that you might be familiar with:

The MembershipProvider is actually a good example of an API that tries to fit several different storage systems. It has a huge advantage from the get-go because it comes with two implementations for two different storage systems (RDBMS and ActiveDirectory).

Note the API that you have: not only is it highly procedural, but it also can assume nothing about its implementation. It makes for code that is highly coupled to this API and to this procedural way of working with data. This makes it much harder to work with compared to code using a system that enables automatic change tracking and data store synchronization (pretty much every ORM).

That is one problem with such APIs: they greatly increase the cost of working with the data. Another problem is that stuff still leaks through.

Take a look at the GetNumberOfUsersOnline method. That is a method that can only be answered efficiently on a relational database. The ActiveDirectoryMembershipProvider just didn’t implement this method, because it isn’t possible to answer that question from ActiveDirectory.

By that same token, you can’t implement FindUsersByEmail or FindUsersByName on a key/value store (well, you can maintain a reverse index, of course), nor can you implement GetAllUsers.
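As an illustration of what maintaining a reverse index involves, here is a minimal sketch over a toy key/value store that only supports get, put and delete by key. The classes and key naming are hypothetical, and the sketch ignores concurrency, which is exactly where a hand-rolled index tends to hurt.

```python
class KeyValueStore:
    """Toy store exposing only get/put/delete by key (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value):
        self._data[key] = value

    def delete(self, key):
        self._data.pop(key, None)


class KeyValueUserStore:
    def __init__(self, kv):
        self.kv = kv

    def save_user(self, username, email):
        old = self.kv.get(f"user/{username}")
        if old is not None and old["email"] != email:
            self._unindex(old["email"], username)  # keep the index in sync
        self.kv.put(f"user/{username}", {"username": username, "email": email})
        names = self.kv.get(f"email/{email}") or []
        if username not in names:
            self.kv.put(f"email/{email}", names + [username])

    def _unindex(self, email, username):
        current = self.kv.get(f"email/{email}") or []
        names = [n for n in current if n != username]
        if names:
            self.kv.put(f"email/{email}", names)
        else:
            self.kv.delete(f"email/{email}")

    def find_users_by_email(self, email):
        # Two hops: the index entry first, then one get per matching user.
        names = self.kv.get(f"email/{email}") or []
        return [self.kv.get(f"user/{name}") for name in names]

    def get_all_users(self):
        # A store without key enumeration has no way to answer this.
        raise NotImplementedError("no key enumeration in this store")
```

Every write now touches two or three keys instead of one, and nothing in the store keeps them consistent. The lookup works, but the abstraction has pushed the cost into code that the relational implementation never needed.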

You can’t abstract the nature of the underlying data store that you use; it is going to leak through. The issue isn’t so much whether you can make it work (you usually can), the issue is whether you can make it work efficiently. A typical problem is trying to map concepts that simply do not exist in the underlying data store.

Relations are a good example of something that is very easy to do in SQL but very hard to do using almost anything else. You can still do that, of course, but now your code is full of SELECT N+1 and you can wave goodbye to your performance. If you build an API for a document database and try to move it to a relational one, you are going to get hit with “OMG! It takes 50 queries to save this entity on a database, and 1 request to save it on a DocDb”, leaving aside that you are also going to have problems reading it from a relational database (the API would assume that you give back the full object).
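A rough sketch of where that gap comes from: the same entity is one write to a document store, but one statement per row once it is spread across relational tables. The entity shape, table names, and helper functions here are assumptions made for illustration, and a real ORM may batch some of these statements.

```python
import json


def save_as_document(store, post):
    # The whole aggregate is serialized and written in a single request.
    store[f"posts/{post['id']}"] = json.dumps(post)
    return 1  # number of requests issued


def relational_insert_statements(post):
    # One statement for the post row, then one per child row.
    statements = [
        ("INSERT INTO posts (id, title) VALUES (?, ?)",
         (post["id"], post["title"])),
    ]
    for comment in post["comments"]:
        statements.append(
            ("INSERT INTO comments (post_id, author, body) VALUES (?, ?, ?)",
             (post["id"], comment["author"], comment["body"]))
        )
    for tag in post["tags"]:
        statements.append(
            ("INSERT INTO post_tags (post_id, tag) VALUES (?, ?)",
             (post["id"], tag))
        )
    return statements
```

The statement count grows with the number of children, while the document write stays at one request. Reading has the mirror-image problem: an API designed around documents expects the full object back, so the relational side has to reassemble it on every load.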

In short, abstractions only work as long as you can assume that what you are abstracting is roughly similar. Abstracting the type of the relational database you use is easy, because you are abstracting the same concept. But you can't abstract away different concepts. Don’t even try.

Comments

I presume you mean "can't" in the last sentence?

Dennis,

Yes, fixed.

So, having accepted defeat on the idea of persistence ignorance, the inevitable question for every project will be: what is our persistence mechanism going to be?

Does this spell the beginning of the end for the RDBMS?

Jonty,

That is something that is only useful for RDBMS, with storage like DocDB or KV stores, it is entirely unneeded.

For BigTable storage, it isn't even _possible_.

@Ayende

Man, you really hit me with this article...

Still, we can abstract the way we are going to access a persistent container (RDBMS, OODBMS, XML, etc.), as long as we do not want to switch it somewhere down the development/production line... So we could have all ORM under one framework (as Java Persistence API), all document based API under one framework and so on.

Actually it would be great if someone could actually come up with a solution for this problem. It is very very common for a larger application to have these requirements for various parts of the architecture. And the problem is often that for the "smaller" parts, a custom made architecture is usually used, instead of using some nice infrastructure component.

@Dennis: "a custom made architecture is usually used, instead of using some nice infrastructure component."

because it just can't be just an infrastructural solution...

Good post.

In the Ruby world, there are various adapters for the DataMapper O(R)M which link to non-relational stores, which suggests to people that they can switch between RDBMS and NoSQL persistence by changing a setting. However, for all but the simplest storage requirements, there is such a disconnect between the two paradigms that the classes you write for one are essentially unusable and meaningless for the other.

In my own projects, I now use DataMapper or ActiveRecord for RDBMS mapping, and store-specific mappers like Mongoid for NoSQL backends.

BTW, I've yet to work on a project that has not required a combination of RDBMS and NoSQL.

My last post got spam filtered:

I disagree we shouldn't abstract this. What about a fetching strategy?

repository.Get <ipostwithcomments()

Whilst I agree that IPostWithComments is technically unneeded for DocDB, it immediately tells the consumer of the code about the design decision taken for the storage of that data. This is a good thing to abstract; we are abstracting from a use case (as opposed to technical) point of view. The fact that it works with RDBMS is an added advantage.

Urgh, how do you do angle brackets in the comments?

repository.Get < IPostWithComments > ()

Nice post, nice analyze, just some horrible wrong conclusions, sorry :)

First my reaction was just to not comment (maybe was really good idea :))

But then I decide - I should :)

a) You seems got Joel Spolsky horrible WRONG :)

He never say "don't even try" (at least I cannot find where, point me if I am wrong)

If guys couple of decades ago "don't try", we don't get strings in C++, we don't get now Internet (TCP/IP), we don't have ALL mathematics (as actually it's just leaky abstractions :D) and other brilliant (and not so brilliant) things! Same like if you don't try to develop NHibernate ("mapping" abstraction actually that works well for RDBMS) or Rhino Mocks, .NET developers will not get now both, just because abstractions are leaky!

Ayende, you really think that we don't need to TRY to create abstractions, because most of them (i.e. not trivial) are leaky !!!???? Correct this, PLEASE :) in post so guys near you continue to TRY or correct me if I get it wrong :)

b) I will repeat: Joel Spolsky was absolutely right - abstractions are leaky, the same way like I study 10+ years ago, that all "not trivial" sets of axioms in mathematics are "leaky" ! (read en.wikipedia.org/.../G%C3%B6del%27s_incompleten... if interested).

It's same like all developers probably know (at least professional) that there is no silver bullet etc.. All this "concepts" ("one full solution for all cases", "complete and not leaky abstractions" etc) are same!

The problem here that your readers should know that while abstractions can be leaky - they also can be useful! So TRY to create them, IF needed, even if it's hard sometimes :)

c) Here I want to go to our example with concrete methods, but my "energy' become too low :) So I will be brief:

it's completely possible to implement

GetPost(id)

GetPostWithComments(id)

GetRecentPostsWithCommentCount(id)

in almost ANY type of data storage efficiently!

why you think that it's a problem if both GetPost(id) and GetPostWithComments(id) will return SAME? OK, they return same for document database AND ??? What problem it generate? For regular database you do Joins, for document - you just get it all together :D with embedded comments in one trip to storage! :) Never see that somebody actually complain that he get too many data in one "step" :D Sure traffic increased (and maybe little operation time ), but if we be "pragmatic" and know that even best blog posts have say max like 100 comments I don't see big issue in this :D Ah, and if you want really, really to avoid this additional "traffic" - just create 2 "document" storage's - one that store ONLY posts (without integrated comments) and another that store both and use each one in own method :) I.e. Denormalize!

Regarding GetRecentPostsWithCommentCount(id) first I don't understand what it should do :D I.e. get recent posts with comments qty, but what is 'id'? Anyway, let's think it should return amount of posts with comments (i.e. say ONLY with comments) :) Agree that method here have small issues with "document" / noSQL storage's, BUT it's again possible to implement it efficiently! How you implement it in relational database? Yes, you use "count", "where" and "join" :) I.e. it's anyway "complex" (for database engine, not for developer). Now because "document" / noSQL storage's "thin" (i.e. usually lack features like Joins, Limit and Count) it's a bit more work for us developers! FOR EXAMPLE (only for example as sure algorithm is stupid): we create some key in say "hashtable1" storage, say "PostsWithCommentCount" and store here how many such posts we have that have comments :) So next, when comment created we looks up in another hashtable "PostsWithComments" id of just created post. If it's exists we don't do anything, if it does not exists, we add such key into PostsWithComments hashtable and increase value in "hashtable1" for key PostsWithCommentCount by 1 :) (sure locks etc still issue, but I skip it for now for simplicity).

At the end hashtable "PostsWithComments" will contain all posts that have at least one comment and we will have simple one trip to storage into "hashtable1" to retrieve value for "PostsWithCommentCount" key. So to made it work, we just use actually 2 hashtables (can be actually one sure thing) and use some stupid (really very very stupid algorithm) that give us ability to calculate what we need :) So using a) denormalization b) parallel processing c) efficient hashing and other technologies we almost ALWAYS can implement almost ANY abstract interface efficiently (sure if this interface itself correct). Even such stuff like Paging is possible! Read memcached google group to get a lot of "algorithms" how to work efficiently with "hashtables" and implement here whole a LOT of things having only "key" and "value" in your hands :D

P.S. Please don't blame me on algorithm (I know it's stupid) - I think up it right when I type it after working 10 hours already and by effected by Azure Table Storage where it's really "cheap" to denormalize data and store twice more information, but really "expensive" to made twice more calls to storage engine (i.e. you pay much more for trips to ATS, then for data capacity!) :)

I am sure guys here will think up 1000s times better stuff :)
