Paxos enlightment

Paxos is an algorithm used to reach consensus among a group of machines, which is resilient to failures. For a long time, I had a really hard time understand Paxos. Or, to be rather more exact, I didn’t have an issue with Paxos per-se, I understood the protocol. What I had a trouble with is its application.

My problem always was that I couldn’t figure out what you do with it. That had to do with a basic problem on my part, I failed to understand how to go from a shared consensus on a numeric value to something that is actually useful. After a while, I had enough of feeling stupid, and I started reading all the material that I could on that, including going through the source codes of available Paxos implementations (such as libpaxos). The main problem was that I had a huge misconception in my head.

I kept thinking that Paxos is a consensus algorithm to arrive at a value in a distributed system. It isn’t, and because I kept thinking that it is, I had a really hard time understand what it does and how to apply it.

Leslie Lamport [pdf], the original author of the algorithm, describe the goal of Paxos as follows:

Assume a collection of processes that can propose values. A consensus algorithm ensures that a single one among the proposed values is chosen. If no value is proposed, then no value should be chosen. If a value has been chosen, then processes should be able to learn the chosen value.

This is from the Paxos Made Simple paper, I don’t know about you, but I have a hard time going from proposed value to something useful. What finally made everything click was the Paxos Made Code, which describe the implementation of libpaxos. While reading the paper, I had a light bulb moment.

This is from the Paxos Made Simple paper, I don’t know about you, but I have a hard time going from proposed value to something useful. What finally made everything click was the Paxos Made Code, which describe the implementation of libpaxos. While reading the paper, I had a light bulb moment.

Paxos is an algorithm used to ensure consist ordering semantics over a set of values in a cluster of machines. Why is this important? Because if you have consistent ordering over a set of values, and the values are events (or commands, or states), you can be sure that all machines in the cluster have either the same state or a previous version of the state.

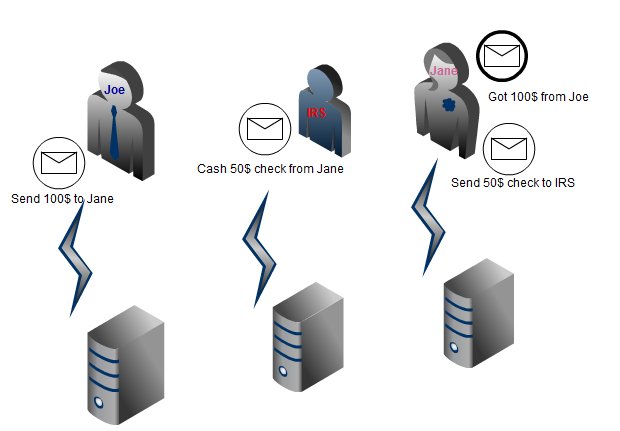

Let us see how this can be useful. We have the following scenario:

- Jane has no money in her back account.

- Joe sends 100$ to Jane

- When Jane is notified that it got the 100$, she send a 50$ check to the IRS

- The IRS cash the check and spend it on something stupid.

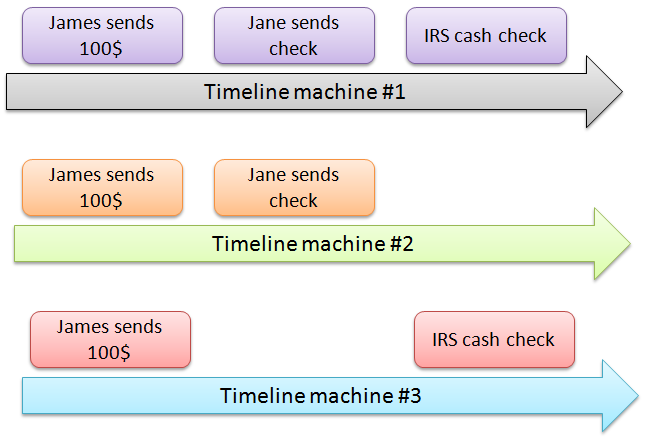

Without a consistent ordering, each machine in the cluster may view the events in any order, which means that the following three timelines are allowed:

As you can imagine, Jane isn’t very happy about that overdraft fee she was just charged with. It is important to note that Paxos sole use here is to ensure that all machines will have a consistent view of events across all machines. That view might not be the same as the order those events showed up. It is possible to give that guarantee as well, on top of Paxos, but that isn’t the topic of this post.

Now that we all (hopefully) understand what Paxos is and what it is used for, let us talk about the algorithm itself.

Paxos has three actor types (Usually, the different actors are all part of the same system, either all together or two of the roles together), we will assume that we have 3 of each in our cluster and that we are interested in events ordered by sequential integer event ids with no gaps:

When you want to make a change in the system, you go to the proposer and tell it that you want to add event SendCheck { To= “IRS”, Amount = 50 } to the system.

The proposer then check what is the latest event id that it knows about, increment it, and then ask all the acceptors in the cluster to reserve that event id for its use. (Please note that I intentionally skip the details of how this is done, I am trying to get a high level description here, you can read the actual algorithm description for all the details).

There are several options here:

- Another proposer is currently trying to reserve that event id.

- Another proposer successfully claimed this event id.

- Another proposer tried to claim this id and then crashed midway.

- Etc… :-)

What Paxos ensures is that in the end, even in the presence of failures of network and machines, the proposer will be able to write the SendCheck { To= “IRS”, Amount = 50 } to an event id in such a way that no other machine will see another value in that location and that eventually all machines in the cluster will see that value in that location.

It is important to understand that even with Paxos, the following timelines are possible:

That is, due to some error, we may not be fully up to date on some machines (as seen on machine #2) or that we have missing events (as seen on machine #3).

What Paxos provides is that there wouldn’t be a scenario in which we have missing events and are not explicitly aware of that. At that point, we can defer processing of cashing the check until we know what event we are missing, explicitly decide to ignore the discontinuity in the time line or something else that fit the business needs.

In order to understand how this all works, I had to write my own implementation of Paxos in C#. There doesn’t seem to be anything like that available to the public, and I (at least) find the code I wrote much easier to understand than libpaxos’ C or Erlang implementations. You can find the implementation here: http://github.com/ayende/Paxos.Demo

Comments

You might want to add that the "classic" two-phase commit is just a special case of paxos commits where the number of coordinators is 1 (general paxos can achieve consensus for 2n+1 coordinators with up to n failures)

Arnon

Hey,

how many hours has your day?

You are blogging, replying to mailing lists, and working on Raven. I'm spending 1/2 a day only for check your news.

:-)

Ty for your knowledge sharing.

A.

What's the learner's role in this story? You say the proposer asks the acceptors the reserve the event id. Is this also the learning part? Then, what is the difference between a learner and an acceptor?

I'm also curious about the practical application of this and where does it fits in the general technology stack?

Is this logic that's present inside message queue implementations or some application-level message bus?

i.e. Do all your services need to be paxos-aware in order to be able to take advantage of this?

Would be good if anyone can provide links to some architecture documentation / case study, etc. of any application where this is proven to be useful.

I'm more curious about which tier where this logic would be applied.

Peter,

Learners are notified by acceptors when a value is accepted.

Demis,

Paxos is important if you want to have global ordering in the face of failure.

When you have global ordering, you can be sure that there are no conflicts.

That means that you can use it to do things like selecting the master between a group of servers, ensure that a write will not be lost, etc.

I see, so it might be something useful in master-master replication scenarios? It does seem useful in detecting and recovering from faults, its just that it looks like it would introduce too much complexity to be included in your normal enterprise app.

Good example though, makes it easier for the rest of us to understand.

Demis,

You wouldn't use it for the main thing, no.

But you would use it as the backend for command pipelining, for example.

In general, I don't think that you need to be aware of it at the actual app level

james, joe, jane??? did you get yourself confused with the names startjng with j?

Awesome !! Any chance to get a Ayende Blog Entry (tm) about Bloom Filters ?

Oren, how about fully ordered messages with delivery guarantees.

Srdjan,

Paxos means that in a system with N machines, as long as more than N/2 machines are operating, you have delivery guarantee

Comment preview