RavenDB Performance Tweaks

Continuing my efforts to make RavenDB faster, I finally settled on loading all the users from Stack Overflow into Raven as a good small benchmark. This is important, because I want something big enough to give me meaningful stats, but small enough that I won't have to wait too long for results.

The users data from Stack Overflow is ~180,000 documents, yet it loads in just a few minutes, which makes it very useful.

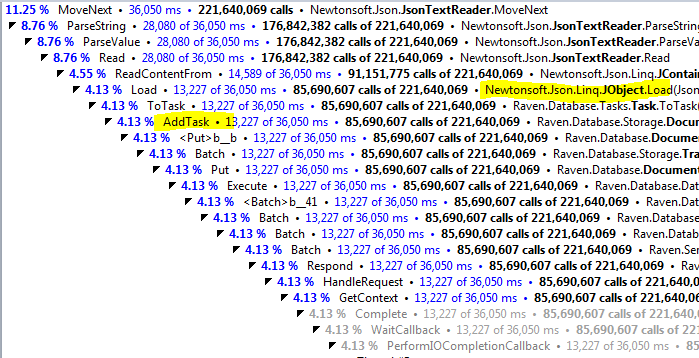

With that, I was able to identify a major bottleneck:

AddTask takes a lot of time, mostly because it needs to update a JSON field, so it has to load the field, update it, and then save it back. In many cases, it is the same value that we keep parsing over and over again. I introduced a JSON cache to save that parsing cost. While introducing it, I was also able to put it to use in a few other places, which should hopefully improve performance there as well.
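To illustrate the idea of avoiding repeated parsing, here is a minimal sketch of a parse cache in Python. It is not RavenDB's implementation; the `JsonCache` class, its `max_entries` limit, and the least-recently-used eviction policy are all assumptions made for illustration.

```python
import copy
import json
from collections import OrderedDict


class JsonCache:
    """Hypothetical LRU cache mapping raw JSON text to its parsed value."""

    def __init__(self, max_entries=1024):
        self.max_entries = max_entries
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def parse(self, text):
        if text in self._entries:
            # Same text seen before: reuse the parsed value instead of re-parsing.
            self.hits += 1
            self._entries.move_to_end(text)
            value = self._entries[text]
        else:
            self.misses += 1
            value = json.loads(text)
            self._entries[text] = value
            if len(self._entries) > self.max_entries:
                # Evict the least recently used entry to bound memory use.
                self._entries.popitem(last=False)
        # Hand out a copy so callers can mutate it without corrupting the cache.
        return copy.deepcopy(value)
```

Returning a copy keeps the cached value safe from the load, update, save cycle described above. Whether copying is actually cheaper than re-parsing depends on the document, so a real cache would need to be measured.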

Current speed, when inserting ~183,000 documents (over HTTP on the local host) in batches of 128 documents, is 128 milliseconds per batch. I consider this pretty good. Note that this is with indexing enabled, but not counting how long it takes for the background indexes to finish processing.
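As a rough sanity check on those numbers, the sketch below splits a document stream into batches of 128 and estimates the total insert time. It assumes the 128 ms figure is the time per batch, which is my reading rather than something stated outright; `batches` and `estimated_seconds` are hypothetical helper names.

```python
from itertools import islice


def batches(items, size=128):
    """Yield consecutive lists of at most `size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def estimated_seconds(total_docs, batch_size=128, ms_per_batch=128):
    """Estimate total insert time, assuming a fixed cost per batch."""
    batch_count = -(-total_docs // batch_size)  # ceiling division
    return batch_count * ms_per_batch / 1000
```

Under that assumption, 183,000 documents make 1,430 batches, and `estimated_seconds(183_000)` comes to roughly 183 seconds, which is in line with the load taking "just a few minutes".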

When measuring that as well, after inserting hundreds of thousands of documents, it took another half a second (but note that my measuring granularity was 0.5 seconds) to finish updating the indexes in the background. I think it is time to throw the entire Stack Overflow data dump at Raven and see how fast it can handle it.

Comments

Yeah, I temporarily added a value cache for my serializer as well, since certain things, like serializing dates, are very expensive. Obviously caching gives you an instant perf boost. Unfortunately, as I was trying to compare benchmarks with other serializers, it was unfair to tailor my serializer to the benchmark data (i.e. the Northwind database in a tight loop), so I ended up taking it out. It also meant I no longer had to worry about running out of memory or cleaning it up.

Would you consider using BSON instead of JSON? BSON is specifically designed for processing performance - having lengths at the start of documents (and strings) makes scanning quite efficient. I know the JSON.NET library made significant performance improvements to their BSON stuff over the last month or so.
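To make the length-prefix point concrete, here is a small Python sketch modeled on how BSON lays out strings: a 4-byte little-endian length, the UTF-8 bytes, and a trailing NUL. It is an illustration only, not a BSON library, and the function names are hypothetical.

```python
import struct


def write_string(text):
    """Encode text as a 4-byte little-endian length, UTF-8 bytes, and a trailing NUL."""
    data = text.encode("utf-8") + b"\x00"
    return struct.pack("<i", len(data)) + data


def skip_string(buffer, offset):
    """Return the offset just past the string at `offset` without inspecting its bytes."""
    (length,) = struct.unpack_from("<i", buffer, offset)
    return offset + 4 + length


def read_string(buffer, offset):
    """Decode the string at `offset`, dropping the trailing NUL."""
    (length,) = struct.unpack_from("<i", buffer, offset)
    start = offset + 4
    return buffer[start:start + length - 1].decode("utf-8")
```

With the length up front, `skip_string` jumps over a value in constant time, whereas a JSON reader has to scan every character of a string looking for the closing quote and handling escapes along the way.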

Karl,

I am not that worried about the parsing cost; I am more worried about the re-parsing cost, which is something different.

@Karl

I also considered using BSON at one point. The problem I had with it was that it is less human readable/writeable, and you can't dump it directly from the data store onto the response stream, which is very useful in Ajax apps.

I find BSON kind of lives in between JSON (slow, human read/writeable, version-able and interoperable) and Protocol Buffers which is very fast, fairly interoperable but more rigid and not human readable.

@Ken Egozi

Hey, thanks for the validation, I hope it's proven useful!

With all the de/serializers out there, I really thought .NET was missing something that was faster, smaller, more readable and more resilient than JSON and XML, which is why I decided to create the hybrid JSON+CSV format (aka JSV). As you've noticed, the CSV-style escaping is both quicker to parse and ends up being more readable.

Basically, values are not just strings or numbers (as they are in JSON); they are simply string-serialized values which are CSV-escaped only if they contain any special chars. As a bonus of controlling the source code, I can ensure that it works just as well cross-platform on Mono.
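For readers unfamiliar with CSV-style escaping, the following Python sketch shows the general technique: a value is quoted only when it contains a special character, and embedded double quotes are doubled. This is not the JSV serializer's code; the `SPECIAL_CHARS` set and all function names are assumptions for illustration.

```python
SPECIAL_CHARS = set('",{}[]:\n\r')  # assumed set of special characters


def escape_value(text):
    """Quote the value only if it contains a special char; double any inner quotes."""
    if not any(ch in SPECIAL_CHARS for ch in text):
        return text  # plain values are written as-is
    return '"' + text.replace('"', '""') + '"'


def unescape_value(text):
    """Reverse escape_value: strip outer quotes and collapse doubled quotes."""
    if len(text) >= 2 and text[0] == '"' and text[-1] == '"':
        return text[1:-1].replace('""', '"')
    return text


def scan_quoted(source, start):
    """Return (value, index after the closing quote) for a quoted value at `start`."""
    if source[start] != '"':
        raise ValueError("expected an opening quote")
    i = start + 1
    while i < len(source):
        if source[i] == '"':
            if i + 1 < len(source) and source[i + 1] == '"':
                i += 2  # doubled quote belongs to the value
                continue
            return source[start + 1:i].replace('""', '"'), i + 1
        i += 1
    raise ValueError("unterminated quoted value")
```

The scanner only ever moves forward through the text and looks one character ahead, so it needs no backslash-escape state, and unquoted values need no decoding at all.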

In my experience, having written Json.NET, BSON is faster to read, JSON is faster to write.

If you do want to improve JSON read performance that perf test pretty much nails it: parsing strings is the slowest part. I've made it as fast as I know how but I'm sure someone could do it faster.

@James Newton-King

I think this is where CSV-style double-quote escaping is proving effective performance-wise. I'm able to parse strings without a 'stack state', so I can parse simply by incrementing through a char array. I also don't need to decode any unescaped values and can use them as-is.

Although having said that, I believe the perf cost in deserialization is also largely due to instantiating and populating objects. I'm sure you're also avoiding any runtime reflection, but you may not be using 'generic static optimizations' to also eliminate any 'Type or cached-delegate lookups' which I've also found to provide a noticeable perf increase.

Feel free to examine the source code to see what I mean. The source code for the TypeSerializer is open source under the liberal new BSD Licence, quite short and hopefully easy to read. It all starts from this class:

code.google.com/.../TypeSerializer.cs

Although for the ultimate tips on perf optimizations I would also follow @Marc Gravell's .NET protocol buffers implementation:

http://code.google.com/p/protobuf-net/

He is notorious for applying every optimization known to .NET-land to squeeze out every ounce of performance possible.
