Rhino Divan DB – Performance

The usual caveats apply: I am running this in process, using a small data size and a small number of documents.

These aren't really benchmarks, but they are good enough to give me a rough indication of where I am heading, and whether or not I am going in completely the wrong direction.

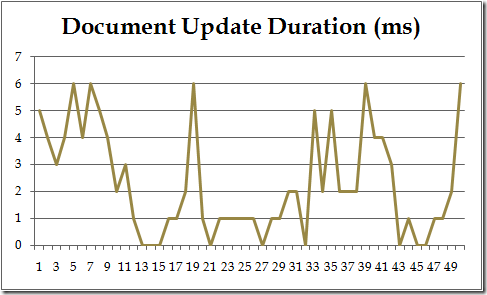

Those two should be obvious:

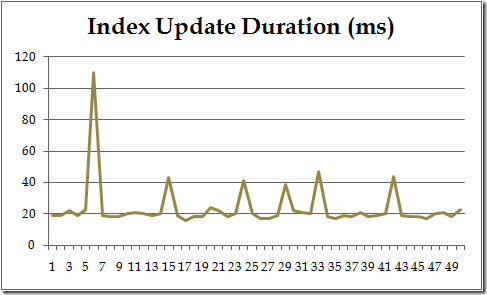

This one is more interesting. RDB doesn't do immediate indexing; I chose to accept higher CUD throughput and make indexing a background process. That means that the index may be inconsistent for a short while, but it greatly reduces the amount of work required to insert/update a document.
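To make the trade-off concrete, here is a minimal sketch of the idea in Python. It is not RDB's actual code: `TinyStore`, the `tag` field, and the in-memory dictionaries are all made up for illustration, and it ignores updates that change a document's tag, deletes, and persistence. The point is only that the write path stores the document and returns, while a background thread brings the index up to date and a query can report whether the index is still behind.

```python
import queue
import threading


class TinyStore:
    """Toy document store: writes return immediately, indexing happens later."""

    def __init__(self):
        self.docs = {}                 # doc_id -> document (the source of truth)
        self.index = {}                # tag -> set of doc_ids (may lag behind docs)
        self._pending = queue.Queue()  # doc_ids waiting to be indexed
        self._unindexed = 0            # writes the index has not seen yet
        self._lock = threading.Lock()
        threading.Thread(target=self._index_loop, daemon=True).start()

    def put(self, doc_id, doc):
        # The write path only stores the document and queues it for indexing.
        with self._lock:
            self.docs[doc_id] = doc
            self._unindexed += 1
        self._pending.put(doc_id)

    def _index_loop(self):
        # Background indexer: drains the queue one document at a time.
        while True:
            doc_id = self._pending.get()
            with self._lock:
                tag = self.docs[doc_id]["tag"]
                self.index.setdefault(tag, set()).add(doc_id)
                self._unindexed -= 1
            self._pending.task_done()

    def query(self, tag):
        # Return the matching ids plus a flag saying whether the index is stale.
        with self._lock:
            ids = sorted(self.index.get(tag, ()))
            return ids, self._unindexed > 0

    def wait_for_indexing(self):
        # Block until the background indexer has caught up (handy in tests).
        self._pending.join()


store = TinyStore()
store.put("docs/1", {"tag": "perf"})
store.wait_for_indexing()
print(store.query("perf"))
```

A query issued right after `put` may come back with the stale flag set and without the new document; once the indexer has drained its queue, the flag clears.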

But how long is that short while in which the index and the documents may be inconsistent? The average time seems to be around 25 ms in my tests, with some spikes toward 100 ms in some of the early results. In general, it looks like things improve the longer the database runs. Trying it out on a 5,000 document set gives me an average update duration of 27 ms, but note that I am testing the absolute worst usage pattern: lots of small documents inserted one at a time, with index requests coming in as well.

Be that as it may, having an inconsistency period measured in a few milliseconds seems acceptable to me, especially since RDB is nice enough to actually tell me if there are any inconsistencies in the results, so I can choose whether to accept them or retry the request.
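On the calling side, "accept or retry" could look roughly like the sketch below. Again, this is an assumption-laden illustration rather than RDB's API: `run_query` stands for any callable that returns a `(results, stale)` pair, and the retry count and the 25 ms pause are arbitrary values loosely based on the numbers above.

```python
import time


def query_with_retry(run_query, max_retries=5, delay_seconds=0.025):
    """Run a query, retrying while the index reports itself as stale.

    run_query is a hypothetical callable returning (results, stale).
    Returns the last (results, stale) pair, so the caller can still decide
    to accept stale results if the retries run out.
    """
    results, stale = run_query()
    attempts = 0
    while stale and attempts < max_retries:
        time.sleep(delay_seconds)       # give the background indexer time to catch up
        results, stale = run_query()
        attempts += 1
    return results, stale
```

A caller that doesn't care about a few milliseconds of lag can skip the helper entirely and use the first answer; one that does care can inspect the returned flag and decide what to do when it is still set.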

Comments

The index update seems high for 5,000 documents.

If you scale it to, let's say, 5 million documents, it will take at least 27 seconds, and that is in the really good case where indexing scales linearly.

As you said this is really a small dataset.

Uriel, probably there is a large constant cost, so you cannot multiply it by 1000.

Uriel,

There is a cost per indexing operation, which is pretty high, but that cost isn't affected by the size of the already indexed documents.

5,000 docs or 5 million, it doesn't matter for the indexing op.

Oops, you are right, I forgot that the cost is per set of modified/added documents.

How many indices are you updating with those 5,000 docs? I guess if you have many indices (for many views) the cost per document will grow too, right?

It might be too soon, but it would be cool to see performance benchmarks comparing RDB to NoSQL databases.

I understand the delay between changing the document and the time the change is reflected by an index is practically constant. Does it mean that no matter how many documents are updated, the index update delay will be more or less the same? What about a 'flood' of changes at maximum possible rate?

What about having several indexes? In many systems I have seen tables having 10-20 indexes for various kinds of search/sorting. What will be the index update delay if there are 20 indexes?

Did you measure the insert/update performance in a multithreaded environment?

Richard,

It is WAY too soon to do real benchmarks for Raven.

I only did this test to prove that this approach is valid.

Rafal,

Indexing time for a single document is practically zero; the cost is actually in writing and flushing to the index.

A flood of changes doesn't really bother me. You can still access all the data, and the index will keep up with the work being done. The only bad effect is that the index will indicate that it is stale, but there is no real helping that here.

2) Each index is handled separately, more or less, so some indexes might take a bit longer, but I don't believe significantly so.

3) Index update is a single threaded operation by nature.

Isn't a large number of small writes pretty hard to optimise with Lucene, as it means you need to drop and reopen any existing index readers?

How are you handling index optimisation?

Ryan,

As I said, this is the absolute worst possible scenario.
