When mini benchmarks are important

"We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil" - Donald Knuth

I have expressed my dislike for micro benchmarks in the past, and in general, I still have this attitude, but sometimes, you really care.

A small note, while a lot of namespaces you are going to see are Google.ProtocolBuffers, this represent my private fork of this library that was customized to fit UberProf’s needs. Some of those things aren’t generally applicable (like string interning at the serialization level), so please don’t try to project from the content of this post to the library itself.

Let me show you what I mean:

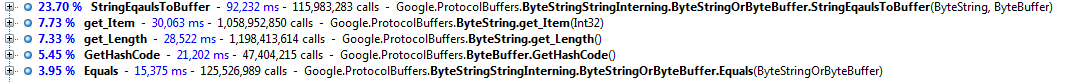

The following is profiling this piece of code:

private static bool StringEqaulsToBuffer(ByteString byteString, ByteBuffer byteBuffer) { if(byteString.Length != byteBuffer.Length) return false; for (int i = 0; i < byteString.Length; i++) { if(byteString[i] != byteBuffer.Buffer[byteBuffer.Offset+i]) return false; } return true; }

This looks pretty simple right?

Now, it is important to understand that this isn’t some fake benchmark that I contrived, this is the profile results from testing a real world scenario. In general, methods such as Equals or GetHashCode, or anything that they call, is likely to be called a lot of times, so paying attention to its performance is something that you should think about.

This are a couple of very easy things that I can do to make this easier, remove the call to the ByteString indexer (which show up as get_Item in the profiler results) to a direct array access and consolidate the calls to the ByteString.Length property.

After applying those two optimizations, we get the following code:

private static bool StringEqaulsToBuffer(ByteString byteString, ByteBuffer byteBuffer) { var strLen = byteString.Length; if(strLen != byteBuffer.Length) return false; for (int i = 0; i < strLen; i++) { if(byteString.bytes[i] != byteBuffer.Buffer[byteBuffer.Offset+i]) return false; } return true; }

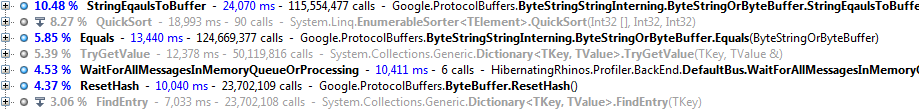

And this profiler result:

You can see that the this simple change resulted in drastic improvement to the StringEqualsToBuffer mehtod. As it stands now, I don’t really see a good way to optimize this any further, so I am going to look at the other stuff that showed up. Let us take a look at ByteBuffer.GetHashCode() now:

public override int GetHashCode() { var ret = 23; for (var i = Offset; i < Offset+Length; i++) { ret = (ret << 8) | Buffer[i]; } return ret; }

The problem is that I don’t really see a way to optimize that, instead, I am going to cache that in a field. There is some problem here with the fact that ByteBuffer is mutable, but I can handle that by forcing all call sites that change it to call a method that will force hash recalculation. Note how different this decision is from the usual encapsulation that I would generally want. Placing additional burdens on call sites is a Bad Thing, but by doing so, I think that I can save quite significantly on the hash code calculation overhead.

Next, let us look at the DoCleanupIfNeeded method and see why it is taking so much time.

private void DoCleanupIfNeeded() { if (strings.Count <= limit) return; // to avoid frequent thrashing, we will remove the bottom 10% of the current pool in one go // that means that we will hit the limit fairly infrequently var list = new List<KeyValuePair<ByteStringOrByteBuffer, Data>>(strings); list.Sort((x, y) => x.Value.Timestamp - y.Value.Timestamp); for (int i = 0; i < limit/10; i++) { strings.Remove(list[i].Key); } }

From the profiler output, we can see that it is an anonymous method that is causing the holdup, that is pretty interesting, since this anonymous method is the sort lambda. I decided to see if the BCL can do better, and changed that to:

private void DoCleanupIfNeeded() { if (strings.Count <= limit) return; // to avoid frequent thrashing, we will remove the bottom 10% of the current pool in one go // that means that we will hit the limit fairly infrequently var toRemove = strings.OrderBy(x=>x.Value.Timestamp).Take(limit/10).ToArray(); foreach (var valuePair in toRemove) { strings.Remove(valuePair.Key); } }

This isn’t really what I want, since I can’t take a dependency on v3.5 on this code base, but it is a good perf test scenario. Let us see what the profiler output is after those two changes:

This is much more interesting, isn’t it?

First, we can see that the call to ByteBuffer.GetHashCode went away, but we have a new one, ByteBuffer.ResetHash. Note, however, that ResetHash only took half as much time as the previous appearance of GetHashCode and that it is called only half as many times. I consider this a net win.

Now, let us consider the second change that we made, where previously we spend 11.1 seconds on sorting, we can see that we now spend 18 seconds, even if the number of calls is so much lower. That is a net lose, so we will revert that.

And now, it is the time for the only test that really matters, is it fast enough? I am doing that by simply running the test scenario outside of the profiler and checking to see if its performance is satisfactory. And so far, I think that it does meet my performance expectation, therefore, I am going to finish with my micro optimizations and move on to more interesting things.

Comments

(Nitpick: Equals is spelled wrong in StringEqualsToBuffer)

With ResetHash, are you recalculating the hash immediately, or simply clearing the hash value, allowing it to be calculated again when needed, lazily?

These posts are interesting, thanks for making them!

Are you sure the first problem with get_Length/get_Item isn't caused by running the code under the profiler (a Heisenbug, in effect)? In optimised code running normally I'd expect to see the property accessor methods inlined by the CLR. Often profilers disable inlining to get more 'accurate' results which can lead to false positives like this when the method call overhead becomes significant; you may want to check that your profiler settings and ensure that it was not disabling method inlining.

In addition, your GetHashCode function doesn't appear to provide a true hash. As you're simply left-shifting all the previous data by 8, you're actually just discarding all but the last four bytes of data. I'd consider replacing the aggregation term with a multiply/xor combination, e.g. "(ret * 23) ^ Buffer[i]", which will give you a true hash.

Micro-optimizations are needed I think:

codebetter.com/.../...need-micro-optimization.aspx

I wrote also concerning optimization on for loop:

codebetter.com/.../...e-net-code-performances.aspx

Btw, you can still have a win on the first example by creating a temporary reference to byteBuffer.Buffer (if it is a reference type):

Some others tips on Micro optimization I found btw:

codebetter.com/.../...to-increase-performance.aspx

Andrews

yes I fond that ot after I did the profiling and dht want to redo it

and I recalc immediately

Greg

I haven't considered the effect of running under the profiler, you are right. But the perf boost was noticable even without the profiler

you are correct about the hash func as well, in my defence, I am matching the behaviour of a third party

If you have to use that stupid hash function, surely you can implement it by looking only at the last 4 characters? That would save that loop which can get expensive for long strings.

In fact, if we're talking x86 assembly, GetHashCode() for Length>=4 can be implemented in a few instructions

// given EBX=Buffer+Offset and ECX=Length

MOV EAX, EBX[ECX-4]

BSWAP EAX // convert little endian to big endian

RET

I don't know if there's a way to make the .NET JIT output something like that, I would try something like

public override int GetHashCode()

{

}

}

Bad hash function will make hashtable operations more costly, so probably its better to fix the function than try to run the bad version faster

The JIT has optimizations for array access in a loop when the loop variable is bound to the array size (no bounds checking). If ByteBuffer.Buffer is actually an array, and if the entire buffer is used commonly (Offset = 0, Length = buffer.Length), you could try:

private static bool StringEqaulsToBuffer(ByteString byteString, ByteBuffer byteBuffer)

{

}

You'd have to make the Offset-using version a special case though, so if you use it often this will be no optimization at all...

I agree with Daniel and Rafal. This hash function is horrible and will cost you. Here's a good, fast and tested hash function - it will give different results on 32-bit and 64-bit machines but that doesn't matter. Hope you don't mind it's unsafe.

public override unsafe int GetHashCode()

{

}

Greg is right, most of the time the JIT compiler is really good at inlining property accessor for a loop.

I also agree with the commenters, C and Assembly programmers are extremely good at bit manipulation so I would study their code to see how they do it. Perhaps do a search on bit masking, bit shifting and bitwise operation will get you to the right place. Something similar to what configurator is doing. Though his is specific so you might want to come up with your own.

Nice you started to care about such benchmarks ;)

For exactly this case the optimization suggested by configurator must be ideal:

Strings are more frequently differ in the end, so better to compare them in reverse order. If you'd take a look at built-in string comparers in .NET, you'll find they use this assumption.

It's really better to use pointers accessing integer values, especially if you're going to use above assumption (JIT compiler does not recognize backward array enumeration + ~ it is anyway a bit faster then byte-by-byte comparison).

Hmm... Just noticed he wrote GetHashCode example - so it's not clear why he uses reverse enumeration in this case. But what I wrote above is still intact for comparison.

Your hash function is really bad because it only includes the last 4 bytes. If that is enough for you you can optimize this method to be a lot faster^^

@Alex: This example is the actual implementation of string.GetHashCode(), so I'm not the person to ask questions about it. I will note though that the enumeration is not reverse; only 'i' is reversed, and that is to prevent accessing the Length property multiple times. The pointer is incremented in each step though, so the enumeration is actually forward.

Note that this is an implementation for a char array. A similar one for bytes (This should be checked before using it, it's notepad code):

public override unsafe int GetHashCode()

{

fixed (byte* str = ((byte*) Buffer))

{

byte* bytePtr = str;

int num = 0x15051505;

int num2 = num;

int* numPtr = (int*) bytePtr;

for (int i = Buffer.Length; i > 0; i -= 8)

{

num = (((num << 5) + num) + (num >> 0x1b)) ^ numPtr[0];

if (i <= 4)

{

break;

}

num2 = (((num2 << 5) + num2) + (num2 >> 0x1b)) ^ numPtr[1];

numPtr += 2;

}

return (num + (num2 * 0x5d588b65));

}

What's changed? 'char' changed to 'byte', 'i -= 4' changed to 'i -= 8' (because a char is two bytes), and '(i <= 2)' changed to '(i <= 4)'. I think that's pretty much it and this should work for byte arrays.

RE: Custom has function, its probably also worth checking how even the distribution is between buckets in hashtables/dictionaries etc.

Perhaps there's a performance problem somewhere because the hashcode is too similar for many strings etc.

private static bool StringEqaulsToBuffer(ByteString byteString, ByteBuffer byteBuffer)

{

var bytestr = byteString.bytes;

var bufferstr = byteBuffer.Buffer;

var offset = byteBuffer.Offset;

}

But how is byteBuffer.Buffer[] implemented? That might allow for further optimization

Greg, Rafal & Daniel,

I was able to fix the hash function on both locations, thanks.

Configurator,

I implemented something similar, but note that I can't do it in StringEqaulsToBuffer, for the simple reason that the byte buffer has an offset that would kill that optimization

Dennis

I ended up doing something very much like yours, and byteBuffer.Buffer is an array of bytes.

Andrew,

Agreed, and it was changed.

In that case you can get it about double as fast with unsafe code (or 5% of total runtime according to your profile):

Dennis,

That is a nice impl, although I 'll admit it took me a moment to understand it.

Wouldn't it be just as fast to do?

[ReliabilityContract(Consistency.WillNotCorruptState, Cer.MayFail)]

private static unsafe bool UnSafeArrayEquals(byte[] strA, byte[] strB)

{

}

Another quick optimization due to your getHashcode optimization (and since they use the same implementation) is to compare those two if they have a current valid precomputed value. But it is only useful if the common case is that they are NOT equal.

I'm not sure how smart the JIT is...

If it is really intelligent, it can use the repl cmpb instruction in this case, which would make it pretty much as fast as your memory is.

But even without that, the 32 byte version will allow the CPU to fill all the ALUs (assuming 4 ALUs with 2op/clock) with useful stuff to do. The 8-version has some overhead in loop accounting.

For my benchmarking the 32byte one is about 20% faster in the TRUE case for 38 values, your luck may vary depending on your input. But I would say that in general for longer strings the 32byte will win.

And the x64 version is alot faster than the x32 version (for obvious reasons, but may also be since the x64 JIT is optimized for performance rather than quick compilation).

Using ints instead of longs on the 32bit is not any faster.

Sorry, but the hashcode should be constant for the live of the object. This is a very basic guideline. Am I missing something?

On a side note Dennis, to avoid dead code, instead of

case 6: YYY

case 7: XXX

default: return false; //dead code

I usually do

case 6: YYY

default:

Debug.Assert(length == 7);

XXX

break;

Jose,

There are reasons to violate this rule, in this case, ByteBuffer is a Fly Weight, which I use to reduce memory consumption

Ayende, May we know which hash function you're using now that you changed it?

Comment preview