Uber Prof analysis pipeline

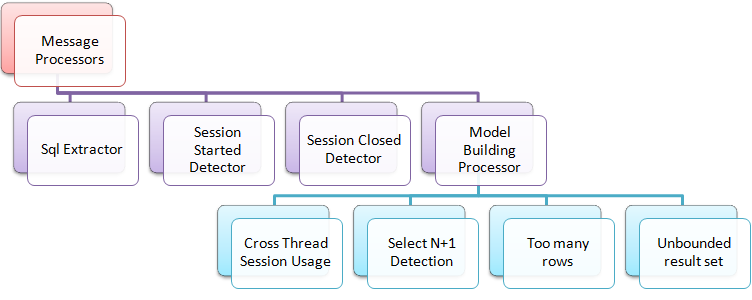

I have a set of message processors, which work off low level event messages, detect patterns in those messages and turn them into high level events. Next, I have the Model Building part, which takes the high level information and handles correlation between the different events. I then have analyzers, which branch out of the model building processor to perform the various analysis actions that I need.
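To make the three stages concrete, here is a minimal sketch of that flow. It is written in Python purely for illustration, and every name in it (`LowLevelEvent`, `SqlStatementProcessor`, `ModelBuilder`, `SelectStarAnalyzer`, `run_pipeline`) is hypothetical and not taken from the actual code base.

```python
from dataclasses import dataclass


@dataclass
class LowLevelEvent:
    """A raw message as it arrives (hypothetical shape)."""
    session_id: str
    text: str


@dataclass
class HighLevelEvent:
    """What a message processor produces once it recognizes a pattern."""
    session_id: str
    kind: str
    detail: str


class SqlStatementProcessor:
    """Message processor: detects one pattern in the low level messages."""

    def process(self, event):
        if event.text.startswith("SQL:"):
            return HighLevelEvent(event.session_id, "statement", event.text[4:].strip())
        return None  # not a pattern this processor cares about


class SelectStarAnalyzer:
    """Analyzer: one single-purpose check hanging off the model builder."""

    def analyze(self, session_id, event, alerts):
        if event.kind == "statement" and "select *" in event.detail.lower():
            alerts.append(f"{session_id}: avoid SELECT *")


class ModelBuilder:
    """Correlates high level events by session, then fans out to the analyzers."""

    def __init__(self, analyzers):
        self.analyzers = analyzers
        self.sessions = {}
        self.alerts = []

    def add(self, event):
        self.sessions.setdefault(event.session_id, []).append(event)
        for analyzer in self.analyzers:
            analyzer.analyze(event.session_id, event, self.alerts)


def run_pipeline(raw_events, processors, model):
    """Push every raw event through every processor and into the model."""
    for raw in raw_events:
        for processor in processors:
            high_level = processor.process(raw)
            if high_level is not None:
                model.add(high_level)
    return model
```

Each stage only knows about the shape of the data it receives, which is what lets new processors or analyzers be added without touching the others.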

The idea is to try, as much as possible, to build the system out of identical parts, and when I need additional functionality in a given location, I create another extension point and plug in more single-purpose classes at that place.

All of this is orchestrated by a container that supplies those dependencies, so creating a new class is the only step required to add a new feature to the system.
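As a rough illustration of "creating the class is the only step", the sketch below uses Python's `__init_subclass__` hook as a stand-in for container auto-registration. This is an assumption about the general technique, not the container actually used here, and `Analyzer`, `Container`, `resolve_all`, and the two sample analyzers are all hypothetical names.

```python
class Analyzer:
    """Extension point: every subclass registers itself when it is defined."""

    registry = []

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        Analyzer.registry.append(cls)

    def analyze(self, event):
        raise NotImplementedError


class Container:
    """Toy container: hands out one instance of each registered implementation."""

    def resolve_all(self, base):
        return [cls() for cls in base.registry]


# Adding a feature means adding a class; nothing else is wired up by hand.
class TooManyRowsAnalyzer(Analyzer):
    def analyze(self, event):
        return "too many rows" if event.get("rows", 0) > 1000 else None


class MissingWhereAnalyzer(Analyzer):
    def analyze(self, event):
        sql = event.get("sql", "").lower()
        return "missing where" if "where" not in sql else None


def run_analyzers(container, event):
    """Ask the container for every analyzer and collect the warnings raised."""
    results = (a.analyze(event) for a in container.resolve_all(Analyzer))
    return [r for r in results if r is not None]
```

Calling `run_analyzers(Container(), {"sql": "select id from users", "rows": 5000})` runs both analyzers even though neither was ever registered explicitly.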
