Emergency fixes & the state of your code base

One of the most horrible things that can happen to a code base is a production bug that needs to be fixed right now. At that stage, developers usually abandon any attempt at writing good code and just try to fix the problem by brute force.

Add to that the fact that very often we don’t know the exact cause of the production bug, and you get an interesting experiment in the scientific method. The devs form a hypothesis, try to build a fix, and “test” it on production. Repeat until something makes the problem go away, and that something isn’t necessarily one of the changes the developers intended to make.

Now consider that this is a highly stressful period, with very little sleep (if any), and you end up with cascading problems. A true mess.

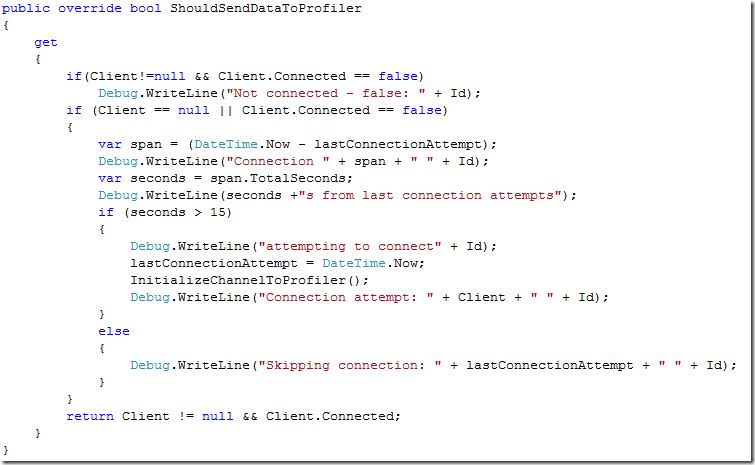

Here is an example from me trying to figure out an error in NH Prof’s connectivity:

This is not how I usually write code, but it was very helpful in narrowing down the problem.

But this post isn’t about the code example; it is about the implications for the code base. It is fine to cut corners in order to resolve a production bug. What you don’t want to do is commit those shortcuts to source control or leave them in production. In one memorable case, while troubleshooting a production issue, I enabled full logging on the production server and then forgot to turn it off once we finally resolved the issue. Fast forward three months, and we had a production crash because the hard disk was full.
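
One way to keep a temporary diagnostic change from outliving the emergency is to give it an expiry date and a hard size limit. The sketch below is only an illustration under assumptions: Python’s standard `logging` module stands in for whatever logging framework the application actually uses, and `TemporaryVerboseLogging` and `make_bounded_file_handler` are hypothetical helpers, not part of any library. The first switches a logger to DEBUG for a fixed window and reverts it when checked after that window; the second uses the standard `RotatingFileHandler` so the log can only consume a bounded amount of disk.

```python
import logging
import logging.handlers
import time


class TemporaryVerboseLogging:
    """Hypothetical helper: switches a logger to DEBUG for a limited time window."""

    def __init__(self, logger, duration_seconds, clock=time.monotonic):
        self.logger = logger
        self.duration_seconds = duration_seconds
        self.clock = clock                  # injectable so the expiry can be tested
        self.normal_level = logger.level    # level to restore when the window ends
        self.expires_at = None

    def enable(self):
        # Turn on full logging and record when it has to be turned off again.
        self.logger.setLevel(logging.DEBUG)
        self.expires_at = self.clock() + self.duration_seconds

    def check_expiry(self):
        # Meant to be called periodically (e.g. from a timer or scheduled job).
        # Restores the normal level once the window has passed, even if nobody
        # remembers to do it by hand. Returns True when it reverted the level.
        if self.expires_at is not None and self.clock() >= self.expires_at:
            self.logger.setLevel(self.normal_level)
            self.expires_at = None
            return True
        return False


def make_bounded_file_handler(path):
    # Roll over at roughly 10 MB and keep 5 old files, so the diagnostic log
    # cannot use more than about 60 MB of disk. delay=True postpones opening
    # the file until the first record is written.
    return logging.handlers.RotatingFileHandler(
        path, maxBytes=10_000_000, backupCount=5, delay=True
    )
```

Either safeguard alone would have prevented the full disk; the expiry handles the "forgot to turn it off" part, while the size cap limits the damage if the expiry check never runs.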

Another important thing is that you should try only one thing at a time when troubleshooting a production error. I often see people try something, and when it doesn’t work, they try something else on top of the already modified code base.

The change you just made may be important, so by all means save it in a branch and come back to it later. But for production emergency fixes, you always want to work from the production code snapshot, not on top of an increasingly frantically changed code base.
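
The "one change at a time, always from the production snapshot" rule can be shown in miniature. In this sketch, which rests on simplifying assumptions, the production state is just a dict, each candidate fix is a function that mutates a state, and `bug_reproduces` is whatever repro check you have; all of these names are hypothetical. The key point is that every fix is applied to a fresh copy of the baseline, so when the bug disappears you know which change made it go away.

```python
import copy


def fixes_that_work_alone(baseline, candidate_fixes, bug_reproduces):
    """Try each candidate fix on its own fresh copy of the production snapshot.

    baseline        -- the production state (a dict standing in for config/code)
    candidate_fixes -- mapping of fix name -> function that mutates a state in place
    bug_reproduces  -- function(state) -> True if the bug still shows up
    Returns the names of the fixes that make the bug go away by themselves.
    """
    working = []
    for name, apply_fix in candidate_fixes.items():
        state = copy.deepcopy(baseline)  # always start from the snapshot, never stack changes
        apply_fix(state)
        if not bug_reproduces(state):
            working.append(name)
    return working


# Hypothetical example: the bug shows up whenever the connection timeout is too short.
production = {"timeout_seconds": 5, "pool_size": 2}
fixes = {
    "bigger_pool": lambda s: s.update(pool_size=20),
    "longer_timeout": lambda s: s.update(timeout_seconds=60),
}
print(fixes_that_work_alone(production, fixes, lambda s: s["timeout_seconds"] < 30))
# prints ['longer_timeout']; `production` itself is left unchanged
```

In a real emergency the "fresh copy" is a branch cut from the production tag rather than a `deepcopy`, but the discipline is the same.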

Comments

Lack of sleep is the #1 contributor to crappy code.

I found it very valuable to have an up-to-date copy of the production database, so I can run experiments that might destroy data.

...and crappy code is the #1 contributor to lack of sleep.

Reminds me of this: blog.hasmanythrough.com/2009/9/3/circle-of-death

For tracing problems in applications, I use "Debugging Tools for Windows" more and more often. Instead of guessing about the problem, I capture a memory dump and analyze it later. It works wonders when exceptions occur only once in a while and no one has any idea how to reproduce them, or when an application uses a lot of CPU for no apparent reason, or hangs. And the list goes on.

I find it really maddening when programmers make speculative changes to make the problem go away rather than identifying, isolating, and fixing it! After the 10th speculative change, the problem vanishes and is deemed "fixed", when in fact it has been moved somewhere else and the programmer has caused three other bugs!

It's especially easy for people to fall into this trap when submitting one line fixes. After all, it's just one line; what could go wrong? :)

Ayende, enabling full logging on a production server is not a bug, not even a programming issue. That's maintenance... If no one noticed in three months that the disk was running out of space, the system wasn't that important anyway.

But I'm digressing. Regarding quick hacks in production, I think many systems are riddled with them. Everyone describes them as quick-and-dirty temporary solutions that will soon be replaced with proper ones, and then they stay there forever (especially once they get sucked into the code repository). Is that bad? I think not too bad: if a hack is still there after a few years, it only means it has caused no problems and no one cares whether it looks nice or not.

On the other hand, quick fixes on production have saved my ass many times. That's why I like scripts so much and love Boo with Rhino.DSL: I can modify business logic on production in no time and go back to the previous version if something goes wrong.

My least favorite fixes are what I call "Panadol" fixes (or "Aspirin" fixes). These are cases involving data or state where the cause of an intermittent bug cannot be determined, but the symptom can, so the fix detects and repairs the result of the bug. Usually I find a way to reproduce the problem, but after consulting with the client it turns out that isn't what they did, which means there's at least one more way to trigger the same buggy result. If I'm running out of time, I build the "Panadol" and make sure it does its job, then fix the bug I know about.
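
A "Panadol" fix of the kind described above might look like the following sketch. The scenario is invented for illustration, since the comment doesn't say what data was affected: orders whose stored total has drifted away from the sum of their lines, with prices kept in integer cents to avoid floating-point comparisons. `repair_stale_totals` is a hypothetical name. The function treats the symptom, and it logs every repair so the unknown root cause can still be hunted down.

```python
import logging

log = logging.getLogger("panadol")


def repair_stale_totals(orders):
    """Hypothetical 'Panadol' fix: treat the symptom, not the cause.

    Each order is a dict with an "id", a "total", and "lines" of price/qty
    (prices in integer cents). Wherever the stored total no longer matches
    its lines, recompute it and log the repair so the root cause can still
    be tracked down later. Returns how many orders were repaired.
    """
    repaired = 0
    for order in orders:
        expected = sum(line["price"] * line["qty"] for line in order["lines"])
        if order["total"] != expected:
            log.warning("order %s: total %s != %s, repairing",
                        order["id"], order["total"], expected)
            order["total"] = expected
            repaired += 1
    return repaired
```

Logging each repair matters: if the count never drops to zero after the known bug is fixed, that is evidence the "at least one more way" to trigger it still exists.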

Rafal,

I fully agree that this is a maintenance/monitoring issue.

Alas, he is human ;) I call this thrashing. It's messy and horrible, but instinctively it feels like the best way to dig yourself out of a hole.

If I've learned one thing in six years of supporting a software product, it's that there are no bugs that need a fix so desperately that you have to drop everything immediately (well, except the small group of bugs that take a corporate website belly up).

That's not to say there aren't severity levels; of course there are. But unless someone dies because of the bug, it's better to take a step back and spend a little extra time coming up with a better way to test what could be wrong.

Also, some customers of software products are simply overreacting drama queens; that's a fact of life. For them, the issue is so severe that everything in the world has to stop and everyone and everything has to shift into gear to fix their problem. There's nothing wrong with that; the issue might indeed be that serious. But unless the person is on the verge of losing his or her job because Big Corp Inc.'s website is showing a yellow screen of death, it's not so serious that you should go in head-first.

You'll see that if you take one or two extra hours and work calmly and consistently, you get the same result: the bug is fixed, without the mess.

For a small ISV, it's hard to hear about a potentially big bug that could affect everyone: it seems such things have to be fixed now, because otherwise everyone who downloads the trial will likely give up with a "this is crap" remark. But that doesn't match reality: these kinds of severe issues are almost always caught by your own testing, so the severity is usually lowered by the fact that the remaining ones are edge cases. That's still not nice for the people who run into them, but it's hardly a reason to drop everything.

Dropping everything to fix an issue immediately not only causes a mess around the bugfix, it also costs you extra time, because you'll likely have to restart the work you were doing (and dropped to fix the bug).

The sloppy reader will now think I believe that people who run into severe bugs shouldn't get support right away. That's not true: every customer is king and should get support as soon as possible. However, every customer should also take into account that the issue might be their own fault, and no one wins with a hasty, head-first 'fix'.

All I can say is, yeah, right there with you. :-)
