Fixing test failures using binary search

With Rhino Queues, I had a very serious problem. While every test passed when run individually, running them in concrete would cause them to fail. That is a pretty common sign of some test affecting the global state of the system (in fact, the problem was one test not releasing resources, so all the other tests failed when they tried to access a locked resource).

The problem is finding out which test it is. Note that I am making a big assumption here, that only one test does so, but for the purposes of this discussion it doesn’t matter; the technique works either way.

At the heart of it, we have the following assumption:

If you have tests that cause failures and you don’t know which ones, start by deleting tests.

In practice, it means:

- Run all unit tests

- If the unit tests failed:

    - Delete a unit test file with all its tests

    - Go back to the first step

- If the unit tests passed:

    - Restore the most recently deleted test file, so that files come back in the reverse order of their deletion

    - Go back to the first step

When restoring a single file brings the failure back, you have pinpointed the problem.
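The procedure above can be sketched in a few lines of Python. This is a minimal sketch, not the tooling used in this post: `find_polluting_file` and the `suite_passes` callback (assumed to run the suite over a list of test files and report whether everything passed) are hypothetical, and deleting and restoring files is simulated by editing a list.

```python
from typing import Callable, List, Optional


def find_polluting_file(
    files: List[str],
    suite_passes: Callable[[List[str]], bool],
) -> Optional[str]:
    """Return the test file whose restoration makes the suite fail again.

    `suite_passes` is a hypothetical callback that runs the suite over the
    given files and returns True if every test passed.
    """
    remaining = list(files)
    deleted: List[str] = []

    # While the suite fails, delete one more test file.
    while not suite_passes(remaining):
        if not remaining:
            return None  # fails even with no files: not a test-isolation problem
        deleted.append(remaining.pop())

    # The suite passes now: restore files, most recently deleted first.
    while deleted:
        candidate = deleted.pop()
        remaining.append(candidate)
        if not suite_passes(remaining):
            return candidate  # bringing this file back brings the failure back
    return None  # the suite never failed, or the failure did not reproduce
```

The returned file is the one whose presence brings the failure back. If the failure needs two files (a polluter and a victim), it may be either of them, so read it before blaming it.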

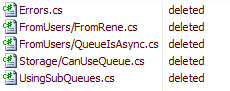

In my case, the unit tests started to pass consistently when I deleted all of those (I took a shortcut and deleted entire directories).

So I re-introduced UsingSubQueues, and… the tests passed.

So I re-introduced Storage/CanUseQueue and… the tests passed.

This time, I re-introduced Errors, because I had a feeling that QueueIsAsync might be the cause, and… the tests FAILED.

We had found the problem. Looking at the Errors file, it was clear that it wasn’t disposing of its resources cleanly, and that was a very simple fix.
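The fix itself is the usual dispose pattern. Here is a Python sketch, where a `with` block plays the role of a C# `using` block. It assumes a hypothetical `ExclusiveResource` that simulates a file only one user can hold at a time. A test that forgets to close it leaks the lock into every later test, while a `with` block releases the lock even if the test body throws.

```python
from typing import Set


class ResourceLockedError(Exception):
    """Raised when a second user tries to open a resource that is still held."""


class ExclusiveResource:
    """Hypothetical stand-in for a file that only one user may open at a time."""

    _held: Set[str] = set()  # process-wide (global) state shared by all tests

    def __init__(self, path: str) -> None:
        if path in ExclusiveResource._held:
            raise ResourceLockedError(f"{path} is locked")
        ExclusiveResource._held.add(path)
        self.path = path
        self.closed = False

    def close(self) -> None:
        if not self.closed:
            ExclusiveResource._held.discard(self.path)
            self.closed = True

    def __enter__(self) -> "ExclusiveResource":
        return self

    def __exit__(self, exc_type, exc, tb) -> None:
        self.close()  # runs even if the body of the `with` block raised


def leaky_test() -> None:
    ExclusiveResource("queue.dat")  # opened but never closed: the lock leaks


def clean_test() -> None:
    with ExclusiveResource("queue.dat"):
        pass  # test body goes here; the lock is released on exit


def later_test() -> None:
    with ExclusiveResource("queue.dat"):
        pass
```

Running `clean_test()` and then `later_test()` works. Running `leaky_test()` first makes `later_test()` raise `ResourceLockedError`, which is the same failure pattern described above.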

The hard part wasn’t the fix itself; it was isolating the problem.

With the fix in place… the tests passed. So I re-introduced all the other tests and ran the full build again.

Everything worked, and I was on my way :-)

Comments

You could make your code less dependent on global state by replacing a static class with a normal class and a single static variable. Production code uses the static instance, but tests use the normal class, creating a new instance for each test.
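Here is a minimal sketch of that suggestion. All names are hypothetical (`SettingsStore`, `DEFAULT_STORE`, `configure_queue`). The state lives in an ordinary class, production code shares one module-level instance, and each test passes in a fresh one.

```python
from typing import Dict, Optional


class SettingsStore:
    """An ordinary class: every instance owns its own state."""

    def __init__(self) -> None:
        self._values: Dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._values[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._values.get(key)


# The single static (module-level) instance that production code shares.
DEFAULT_STORE = SettingsStore()


def configure_queue(name: str, store: Optional[SettingsStore] = None) -> None:
    """Production entry point: uses the shared instance unless given another."""
    target = DEFAULT_STORE if store is None else store
    target.set("queue", name)
```

A test calls `configure_queue("q", SettingsStore())`, so nothing it writes is visible to any other test.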

In my code I usually have conflicting TCP ports. You can let the OS choose the port for a listening socket by binding it to port 0 with Socket.Bind.
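Binding to port 0 asks the OS for any free port, and .NET's `Socket.Bind` treats an endpoint with port 0 the same way. Below is a Python sketch of the idea, using a hypothetical helper `open_listener`. It reads the assigned port back with `getsockname()`.

```python
import socket


def open_listener(host: str = "127.0.0.1") -> socket.socket:
    """Open a TCP listener on a port chosen by the OS (hypothetical helper)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, 0))  # port 0: let the OS pick any free port
    listener.listen()
    return listener


if __name__ == "__main__":
    with open_listener() as server:
        port = server.getsockname()[1]  # the port the OS actually assigned
        print(f"listening on {port}")
```

Each test opens its own listener and passes the reported port to the code under test, so two tests never compete for a hard-coded port.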

Lastly, I think that if you have hard-to-pinpoint failures in your tests, you have tested, and failed, the debuggability of your system. It's time to fire up serious debuggers like WinDbg and change your program so that the problem is easier to determine. If it's hard to debug unit tests, it is surely hard to debug the same program in production, so it pays off to have better debugging procedures in place.

This is a code smell anyway. Any tests that affect the actual outcome of other tests should be looked at and made more isolated!

Bruno,

Tests do things that the real app never does.

In that example, the problem was that one of the tests wasn't releasing a file. That never happens in the app, because the app uses that file throughout its lifetime.

Billy,

Duh!

The problem was a bug in the tests.

Ayende, I have some similar tests (not surprisingly on a similar problem, a transaction file to be specific).

I ended up supporting temp files to avoid this, so that there would be no dependencies between my tests.
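Here is a sketch of the temp-file approach using Python's `unittest`. The test class and the `transactions.log` file name are hypothetical. Each test gets its own directory from `tempfile.TemporaryDirectory`, so no two tests ever touch the same file, and `addCleanup` removes the directory whether the test passes or fails.

```python
import os
import tempfile
import unittest


class TransactionFileTests(unittest.TestCase):
    """Hypothetical tests that each need a transaction file of their own."""

    def setUp(self) -> None:
        # A fresh directory per test, so no two tests share (or lock) a file.
        tmp = tempfile.TemporaryDirectory()
        self.addCleanup(tmp.cleanup)  # deleted after the test, pass or fail
        self.path = os.path.join(tmp.name, "transactions.log")

    def test_append_writes_a_record(self) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write("tx-1\n")
        with open(self.path, encoding="utf-8") as f:
            self.assertEqual(f.read(), "tx-1\n")

    def test_file_starts_absent(self) -> None:
        # Passes regardless of which test ran first.
        self.assertFalse(os.path.exists(self.path))
```

Run it with `python -m unittest`. The tests pass in any order because none of them can see another's file.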

Cheers,

Greg

@Ayende

I think you may have missed an abstraction. Have you thought of removing the direct dependency on the file (by putting it behind an interface) for these tests and the application?
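Here is a sketch of the suggested abstraction, with hypothetical names throughout. The queue depends on a `TransactionStore` protocol. Production wires in a file-backed store, and unit tests use an in-memory one, so there is no file handle to leak between them.

```python
from typing import List, Protocol


class TransactionStore(Protocol):
    """The abstraction the queue depends on, instead of a concrete file."""

    def append(self, record: str) -> None: ...

    def read_all(self) -> List[str]: ...


class FileTransactionStore:
    """Production implementation backed by a file on disk."""

    def __init__(self, path: str) -> None:
        self.path = path

    def append(self, record: str) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(record + "\n")

    def read_all(self) -> List[str]:
        try:
            with open(self.path, encoding="utf-8") as f:
                return f.read().splitlines()
        except FileNotFoundError:
            return []  # nothing written yet


class InMemoryTransactionStore:
    """Test double: no file at all, so nothing can stay locked."""

    def __init__(self) -> None:
        self._records: List[str] = []

    def append(self, record: str) -> None:
        self._records.append(record)

    def read_all(self) -> List[str]:
        return list(self._records)


class QueueService:
    """Hypothetical consumer that only knows about the abstraction."""

    def __init__(self, store: TransactionStore) -> None:
        self._store = store

    def enqueue(self, message: str) -> None:
        self._store.append(message)

    def history(self) -> List[str]:
        return self._store.read_all()
```

This only helps unit tests. Integration tests still have to exercise the real file.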

From the follow-up comments, you stated that the application maintains the file during its lifetime. Don't you want your tests to reflect real-world usage?

To me, dependencies on resources and primitives in tests have the code smell of state-based testing.

I believe that the failing tests were indicating that changes to the code are required. IMHO, fixing the tests seems a pretty anemic solution. It may come back and bite you in the ass.

The last time I had a problem like this, I made a change to the test runner I was using (in this particular case, python's unittest module) to let me binary search my full set of tests. It ended up being worthwhile since this sort of problem can come up often, and having to do the search manually is pretty painful.

I wouldn't be surprised if it was pretty easy to do the same in NUnit (or whatever runner you happen to be using).

I wonder why you didn't spot the problem when you wrote (and ran) the test that didn't release the file. From your description, it seems that this particular test was written some time ago.

Joe,

Those are integration tests.

Gerardo,

Order, that was the problem.

For some reason, that test ran last.

And the other tests that ran after it didn't use the same resource.

It only started failing when I created some tests that did use it, and that was long after that test was written.

I'm not surprised that running your tests in concrete would cause them to fail :)

Sounds like it might be a good feature for test frameworks not to run tests in a predetermined order?

Actually, it is a horrible idea.

The problem is that when you DO have such a problem, you have a really hard time figuring it out.

I thought you said you used binary search? I mean, it's pretty obvious, but some people may find the title confusing... The logic above is linear: you just remove one test after another. Instead, your logic should be "recursively disable half of the tests and see if that helped", right?

zvolkov,

It means disabling a big part of the tests and running the tests.

Then enabling some of them and running the tests again.
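Here is a sketch of the halving approach, under two stated assumptions: you already know one failing test (the hypothetical `victim`), and a single test that runs before it is the polluter. `bisect_polluter` and the `run_passes` callback are hypothetical. Each step keeps whichever half still makes the victim fail, so it needs about log2(n) runs instead of n. This is a common bisection formulation, not necessarily the exact procedure from the post.

```python
from typing import Callable, List, Optional, Sequence


def bisect_polluter(
    candidates: Sequence[str],
    victim: str,
    run_passes: Callable[[List[str]], bool],
) -> Optional[str]:
    """Find the single earlier test that makes `victim` fail, by halving.

    `run_passes` is a hypothetical callback that runs the given tests in
    order and returns True if they all passed.
    """
    suspects = list(candidates)
    if run_passes(suspects + [victim]):
        return None  # the failure does not reproduce with these candidates

    while len(suspects) > 1:
        mid = len(suspects) // 2
        first_half = suspects[:mid]
        if run_passes(first_half + [victim]):
            suspects = suspects[mid:]  # assumed single polluter: other half
        else:
            suspects = first_half  # the victim still fails: polluter is here

    # An empty list means the victim fails on its own, with no polluter.
    return suspects[0] if suspects else None
```

With a single polluter, each run discards half of the remaining suspects. If the assumption does not hold (for example, two tests together cause the failure), the result should be double-checked by running it alone with the victim.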
