The devil is in the details

Quite often, I hear about a new approach or framework that is an interesting concept with an obviously trivial implementation.

In fact, the way I usually learn about a new approach is by writing my own spike to explore how such a thing can work. (As an alternative, I will go and read the code, if it is an OSS project.)

The problem is that obviously trivial implementations tend to ignore a lot of the fine details. Even for trivial things, there are a lot of details to consider before you can call something production ready.

Production ready is a tough concept to define, but it generally means that you have taken into account things like:

- Performance

- Error handling

- Security

And many, many more.

As a simple example, one of the release criteria of Rhino Mocks is the ability to mock IE COM interfaces. That is actually much tougher than it looks (go figure out what RVA is and come back to tell me).

But mocking is a complex issue as it is. Let us take unit testing as an example instead; it is a very simple concept, after all. How hard is it to build? Let us see:

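    using System;
    using System.Reflection;

    public class MyUnitTestingFramework
    {
        public static void Main(string[] args)
        {
            // Each argument is the path of an assembly to scan for tests.
            foreach (string file in args)
            {
                // LoadFrom takes a file path; Assembly.Load expects an assembly name.
                foreach (Type type in Assembly.LoadFrom(file).GetTypes())
                {
                    foreach (MethodInfo method in type.GetMethods())
                    {
                        if (IsTest(method) == false)
                            continue;
                        try
                        {
                            // A fresh fixture instance for every test method.
                            object instance = Activator.CreateInstance(type);
                            method.Invoke(instance, new object[0]);
                        }
                        catch (Exception e)
                        {
                            Console.WriteLine("Test failed {0}, because: {1}", method, e);
                        }
                    }
                }
            }
        }

        // A method counts as a test if it carries an attribute whose type is named "Test".
        public static bool IsTest(MethodInfo method)
        {
            foreach (object att in method.GetCustomAttributes(true))
            {
                if (att.GetType().Name == "Test")
                    return true;
            }
            return false;
        }
    }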

Yeah, I have created Rhino Unit!

Well, not hardly.
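To make the gap a bit more concrete, here is a rough sketch of just a few of the details the runner above glosses over: fixtures that cannot be instantiated, test methods that take parameters, the reflection wrapper around the real exception, and disposable fixtures. The names `CarefulRunner`, `RunTest`, and `SampleTests` are made up for this illustration, and this is nowhere near what a real framework has to handle.

```csharp
using System;
using System.Reflection;

public static class CarefulRunner
{
    // Hypothetical helper: returns null when the test passes,
    // otherwise a short description of why it failed.
    public static string RunTest(Type type, MethodInfo method)
    {
        // Detail 1: abstract types, and types without a public parameterless
        // constructor, cannot be created with Activator.CreateInstance(type).
        if (type.IsAbstract || type.GetConstructor(Type.EmptyTypes) == null)
            return "cannot instantiate " + type.FullName;

        // Detail 2: a method that expects arguments cannot be invoked without them.
        if (method.GetParameters().Length != 0)
            return method.Name + " takes parameters";

        object instance = null;
        try
        {
            instance = Activator.CreateInstance(type);
            method.Invoke(instance, null);
            return null;
        }
        catch (TargetInvocationException e)
        {
            // Detail 3: reflection wraps whatever the test (or the fixture
            // constructor) threw; report the real exception, not the wrapper.
            return (e.InnerException ?? e).Message;
        }
        finally
        {
            // Detail 4: fixtures that hold resources should be cleaned up.
            (instance as IDisposable)?.Dispose();
        }
    }
}

// A tiny fixture used only to exercise the sketch.
public class SampleTests
{
    public void Passes() { }

    public void Fails() { throw new InvalidOperationException("boom"); }
}
```

Calling `CarefulRunner.RunTest(typeof(SampleTests), typeof(SampleTests).GetMethod("Fails"))` reports the message of the exception the test itself threw rather than the reflection wrapper. Setup and teardown, expected exceptions, timeouts, isolation between tests, and reporting are all still missing, which is rather the point.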

I can write an OR/M, an IoC container, and proxies in an hour (each, not all of them). Knowing how to do this is important, but doing so for real is generally a mistake. I am currently using a homegrown, written-in-an-hour IoC container, and I really don't like it. I keep hitting its limitations, and the cost of actually getting a container up to par with standard expectations is huge. I know that I can't use a written-in-an-hour OR/M without sedation.

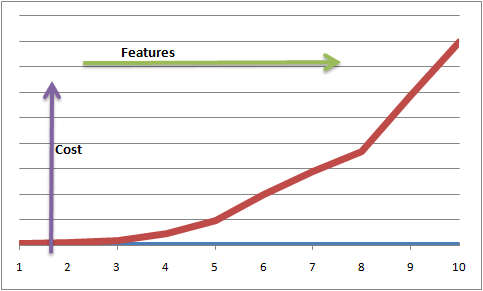

Here is the general outline of the cost to feature ratio for doing this on any of the high end tools:

There really isn't much complexity involved, just a lot of small details that you need to take care of, which is where all the cost goes.

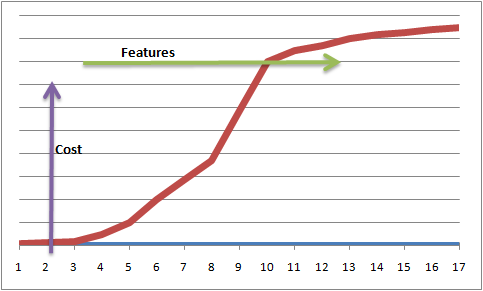

One thing that I am not telling you is that I didn't show the entire graph, here is the same graph, this time with more of the timeline shown. After a while, the complexity tends to go away (if it isn't, the project fails and dies, see previous graph).

Nevertheless, the initial cost of rolling your own like this is significant. This is also the reason why I wince every time someone tells me that they just write their own everything. (Yeah, I know, I am not one who can point fingers. I think I mentioned before that I feel no overwhelming need to be self-consistent.)

My own response to those graphs tends to follow the same path almost invariably:

- Build my own spike code to understand the challenges involved in building something like this.

- Evaluate existing players against my criteria.

- Evaluate compatibility with the rest of my stack.

If it passes my criteria, I'll tend to use that over building my own. If it doesn't, well, at least I have a good reason to do it.

Programming to learn is a good practice, one that is important not to lose. At the same time, it is crucial to remember that you shouldn't take out the toys to play in front of the big boys unless you carry a big stick and are not afraid to use it.

Comments

An ORM in an hour? I'd like to see what you could come up with. You could call it Rhinobernate :D

Thanks for the great post Oren, I really liked this one.

heh.

i like philosophical stuff. and if it is a "development philosophy", even better. so i created a new subfolder in my favorites just now and i wanted to use a single word for it...

... it came out "Devilosophy" ;)
