Start playing with RavenDB 5.0, today

I announced the beta availability of RavenDB 5.0 last week, but I messed up on some of the details on how to enable that. In this post, I’ll give you a detailed guide on how to set up RavenDB 5.0 for your use right now.

For the client libraries, you can use the MyGet link. At this time, you can run:

Install-Package RavenDB.Client -Version 5.0.0-nightly-20200321-0645 -Source https://www.myget.org/F/ravendb/api/v3/index.json

If you want to run RavenDB on your machine, you can download it from the downloads page. Click on the Nightly tab and select the 5.0 version:

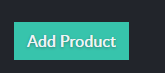

And on the cloud, you can register a (free) account and then add a product:

Create a free instance:

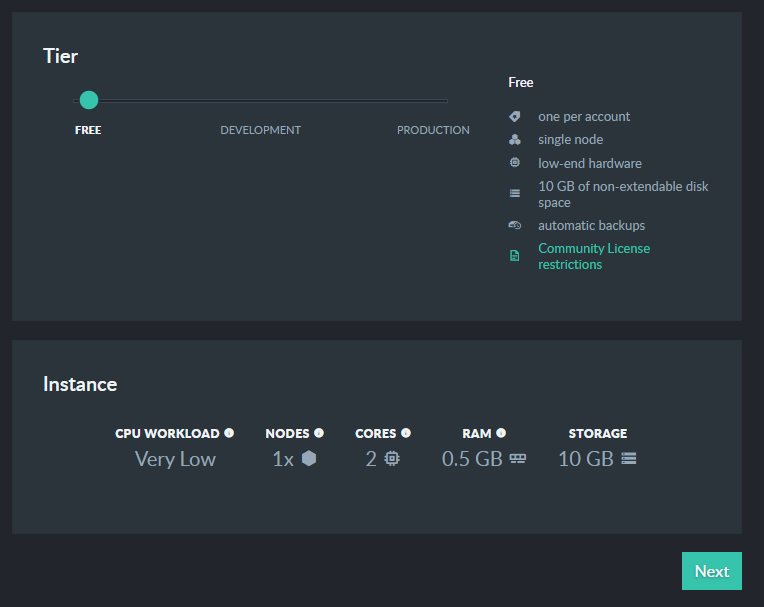

Select the 5.0 release channel:

And then create the RavenDB instance.

Wait a few minutes, and then you can connect to your RavenDB 5.0 instance and start working with the new features.

You can also run it with Docker using:

docker pull ravendb/ravendb-nightly:5.0-ubuntu-latest

Comments

While time series support seems to be the headline new feature, since this is a major release, is there a comprehensive list of all "major" new features?

I would guess that the .NET Core 3.1 upgrade with associated performance benefits would be one...

Self comment: Just checked, yes, the .NET Core 3.1 upgrade is in.

Bernhard,

The major items are:

Any plans for Auto-sharding in this version?

James,

That is planned for the next major release. The design work is here: https://github.com/ravendb/book/blob/v5.0/Sharding/Sharding.md

I would love to hear your scenario and any feedback you have.

Here's what I have. Consider a multi-national bank:

100,000 or more transactions a day, 2 journal entries minimum per transaction.

Each transaction is stored in a Transaction Collection with a type discriminator, every journal entry in a JournalEntries Collection that has a Transaction normalization object because the transactions once posted cannot change.

I need to handle millions of transactions and at least 2 journal entries per transaction (averages about 3.2 per transaction)

Ideally this would work with auto-sharding and auto-scaling within Kubernetes using some form of controller within Kubernetes that could orchestrate the demand for scaling based on load and requirement. I wouldn't have to do anything, and I'd be able to search across all of the shards transparently similar to how map reduce works but automatically in Spark etc.

An added wrinkle is that I need to be able to store data geographically based on geolocation of the account and user/business. That is, because of GDPR, I need all European citizens to be stored in Europe, and all of the American ones in the US etc. but I need to be able to allow transactions between accounts in multiple geolocations. I envision this right now to be some sort of programmatically involved replication between clusters or at least api interaction between each cluster. I also anticipate having to have at least 2 clusters per jurisdiction for up-time guarantees required and those would blind replicate between those clusters.

I'm aware that the geolocation conditional stuff will have to be coded but hoping to be able to make as much of the rest as transparent to code as possible. Hence interest in Cosmos DB, but I'm not keen on an Azure dependency at the moment hence why we're evaluating Raven and Mongo as well.

Does that help?

James,

Thanks for the details, that is very interesting.

The first question to ask, though: why do you think that you are going to want or need sharding?

Let's talk about your scenario. You have 100K tx per day, let's say that you need to deal with 25 years of data. That puts us at about: 912,500,000 transactions and 2,920,000,000 journal entries. I would expect that the total size of this database would be ~15 - 20 TB.
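If you want to check those numbers or plug in your own, here is a minimal sketch of the arithmetic in Python. The function and variable names are mine and purely illustrative. The assumptions are 365 days per year and the 3.2 journal entries per transaction average from your description. The per-document figure at the end is just the ~15 - 20 TB estimate divided by the document count, so treat it as a rough implied average and not a measurement.

```python
# Back-of-the-envelope sizing. All names here are illustrative assumptions.

def estimate_counts(tx_per_day=100_000, years=25, entries_per_tx=3.2,
                    days_per_year=365):
    """Return (transactions, journal_entries) accumulated over the period."""
    transactions = tx_per_day * days_per_year * years
    # round() absorbs floating point noise from the fractional average
    journal_entries = round(transactions * entries_per_tx)
    return transactions, journal_entries


def implied_bytes_per_document(total_terabytes, document_count):
    """Average document size implied by a total size estimate (1 TB = 10**12 bytes)."""
    return total_terabytes * 10**12 / document_count


transactions, journal_entries = estimate_counts()
print(f"{transactions:,} transactions")        # 912,500,000 transactions
print(f"{journal_entries:,} journal entries")  # 2,920,000,000 journal entries

documents = transactions + journal_entries
for size_tb in (15, 20):
    avg = implied_bytes_per_document(size_tb, documents)
    print(f"{size_tb} TB total implies about {avg / 1000:.1f} KB per document")
```

Changing `years` or `entries_per_tx` gives a quick feel for how the totals move before any decision about partitioning the data.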

However, you aren't going to need all of them in a single location, because of data locality issues. So let's say that you have half in each? That is a data size that would be very comfortable to handle in a single database (with replication, HA, and the usual stuff). That would greatly simplify your situation, because you won't need to manage cross partition work.

Note that sharing of some data between EU and US in this case is easy with RavenDB, using the ETL feature. You can "tag" transactions that need to be sent to the other side, and that will just happen.
