Running a RavenDB cluster with two nodes

Recently we got a couple of questions on the mailing list about running a RavenDB 4.x cluster with just two nodes in it. This was a fairly common topology in the RavenDB 3.x days, because each of the nodes was mostly independent, but that independence added a lot of operational complexity to the system. In RavenDB 3.x you had to perform many operational tasks separately on each of the nodes in the system. RavenDB 4.x brought us unified cluster management and greatly simplified a lot of operational tasks. But one of the results of this change is that we now have a cluster rather than just a bunch of nodes.

In particular, to operate correctly, a RavenDB cluster needs a majority of its voting nodes to be accessible. In a typical cluster setup, you are going to have three to five nodes, and you’ll need two or three of them, respectively, to be accessible for the cluster to be healthy.

However, in a cluster of only two nodes, a curious problem arises. To get a majority of the nodes, you need… all the nodes. In other words, if you are running a cluster of just two nodes and one of them is inaccessible, your cluster is not available.
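The arithmetic behind this can be sketched in a few lines of Python. This is only an illustration of strict-majority counting, not RavenDB code; the function names `majority` and `tolerated_failures` are made up for this example.

```python
def majority(voting_nodes: int) -> int:
    """Smallest number of voting nodes that forms a strict majority."""
    return voting_nodes // 2 + 1


def tolerated_failures(voting_nodes: int) -> int:
    """How many voting nodes can be down while a majority is still reachable."""
    return voting_nodes - majority(voting_nodes)


if __name__ == "__main__":
    # Illustrative cluster sizes: note that two nodes tolerate no failures,
    # exactly like a single node.
    for n in (1, 2, 3, 5):
        print(f"{n} voting node(s): majority={majority(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

A two-node cluster needs both nodes for a majority, so it tolerates no more failures than a single node does.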

RavenDB’s distributed nature is built on multiple layers. Even while the cluster itself is not available, you can still load and save documents to the database, perform queries, etc. Most of the normal operations that you would do on a day to day basis will work just fine without the cluster available.

However, management functions require that the cluster be up. These include operations such as adding nodes to or removing nodes from the cluster, creating or deleting a database, creating an index (including creating an auto index), or using advanced features such as ETL or Subscriptions.

In both of the cases that were raised on the mailing list, we had a two-node cluster and one of the nodes was down (the VM was shut down). That led either to the inability to remove the down node from the cluster (we have emergency operations that allow that, but they are not meant for normal use) or to errors during queries that required us to introduce a new index to the system.

It is important to understand that this isn’t actually an error. This is the system operating as designed and is a predictable (and desirable) part of how it is supposed to work in such failure modes.

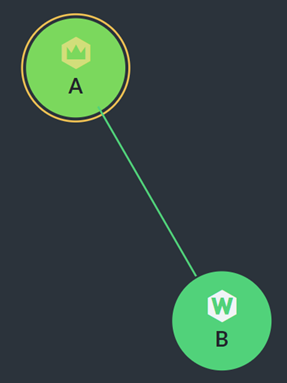

However, that is true only if you are running your cluster with all the nodes as full voting member nodes. There are other alternatives. If you have a two-node cluster, a single node being down will take the whole cluster down. In that situation, you can usually designate one node as the primary and choose a different topology. Look at the cluster image on the right. As you can see, we have the leader A as well as node B. You might notice that node B is marked with a W. Usually a member node in the cluster will be marked with M (for Member). But the W marking stands for Watcher.

In this case, a watcher node is a silent participant in the cluster. It can listen, but doesn’t interfere in the cluster itself. Node A is the sole node in the cluster that can make decisions. So if node B is down, the cluster is still functional. However, if node A is down, node B is going to operate without the cluster. Given that this would be the same situation anyway if you are running with both A and B as full members, that is a net benefit. And from experience, users who want to run a dual node cluster typically already have pretty firm ideas about which of the nodes is the primary.
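To make the comparison concrete, here is a small Python model of the two topologies. It is a toy sketch under the assumption that cluster availability depends only on a strict majority of voting nodes being up; the `Node` class and `cluster_available` function are hypothetical and have nothing to do with RavenDB's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    tag: str
    voting: bool  # True for a full member, False for a watcher


def cluster_available(nodes, down):
    """Return True if a strict majority of the voting nodes is still up.

    `down` is a set of node tags that are currently inaccessible.
    Watchers are ignored when counting votes.
    """
    voters = [n for n in nodes if n.voting]
    up_voters = [n for n in voters if n.tag not in down]
    return len(up_voters) >= len(voters) // 2 + 1


# Topology 1: both nodes are full members.
two_members = [Node("A", voting=True), Node("B", voting=True)]

# Topology 2: A is the sole voting member, B is a watcher.
member_and_watcher = [Node("A", voting=True), Node("B", voting=False)]

if __name__ == "__main__":
    for name, topology in (("two members", two_members),
                           ("member + watcher", member_and_watcher)):
        for failed in ("A", "B"):
            state = "available" if cluster_available(topology, {failed}) else "unavailable"
            print(f"{name}, node {failed} down: cluster {state}")
```

In this model, losing node A makes the cluster unavailable in both topologies, while losing node B only hurts when B is a voting member.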

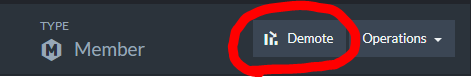

You can demote a node from a full member to a watcher (and vice versa) dynamically, in the cluster management page in the Studio. However, remember that this is an operation that requires a majority of the cluster to be available.

You can also add a node to the cluster as a watcher immediately, which is probably a better idea.

Aside from not being counted for cluster votes, watcher nodes in RavenDB behave in exactly the same manner as other nodes. You can assign them tasks, the cluster manages them as usual, they host databases, and in general they behave just like any other RavenDB node.

The other use case for watcher nodes is in very large clusters. If your cluster grows beyond seven nodes, you’ll typically start adding watcher nodes to the cluster instead of full member nodes. This is to avoid having to get a majority vote from a large number of nodes.

Comments

You could also have two clusters of one node each and set up external replication across the databases. It's a lot more to maintain, but you could achieve the same behavior as a Master-Master relationship as in v3.x.