The perf optimization that cost us

There is a lock deep inside RavenDB that has been bugging me for a while. In essence, this is a lock that prevents a read transaction from opening during a particular section of the commit process of a write transaction. The section under lock does no I/O and only manipulate in memory data structure. Existing read transactions aren’t impacted, it is only the opening of a read transaction that is blocked. It isn’t usually visible in production systems. The only scenario you might expect to see it is if you have a very high degree of writes at the same time as you have a lot of read requests. In that case, RavenDB’s lock contention would rise and you would be able to process only about 5,000 – 10,000 read requests per second at the same time as you can process 30,000 write requests per second. In practice, because of the way we process requests, you’ll very rarely be able to hit that level of concurrency in anything but a benchmark. But it bugged me. It was one of those scenarios that stood up like a sore thumb when we looked at the performance metrics. In any other case, we are orders of magnitudes faster, but here we hit a speed bump.

So I fixed it. I changed the code so there would be no sharing of data between the write and read transactions, which eliminated the need for the lock. The details are fairly simple, I moved the structured being modified to a single class and then atomically replace the pointer to this class. Easy, simple and much much cheaper.

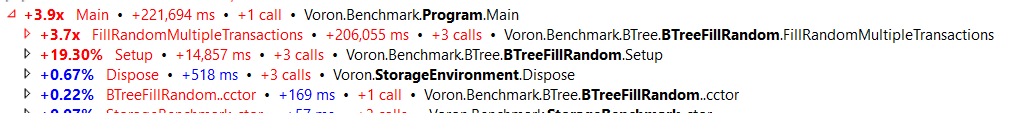

This also had the side affect of slowing us down by a factor of 4. Here are the profiler results after the change:

That… is not a desirable property for a performance optimization.

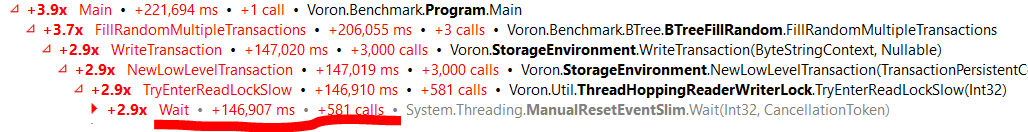

Luckily, I was able figure out from the profiler what was causing the slowdown:

As it turned out, there was another lock there. This one was used to avoid starving a particular portion of the code under high write scenario. Until we removed the first lock, this worked beautifully. But when the first lock was removed, that actually sped us the rest of the system which meant that we’ll acquire the second lock a lot more often. That, in turn, results in a rare occurrence becoming very common and the contention on the lock cause a major slowdown.

We were able to figure out that the lock is now no longer needed, due to some other changes. We just removed this lock entirely, giving us over 10% perf over the original code, without even mentioning the actual scenario we are trying to test.

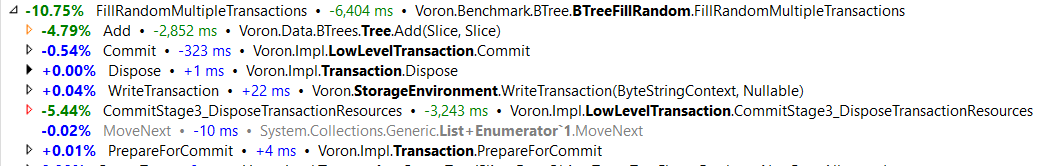

Now, you might have noticed that this benchmark I’m running is for pure writes, right? Let’s see what happens when we have more complex workloads, and are testing things without the profiler:

| Reads | Writes | 2/3 Reads & 1/3 Writes | |

| Original | 385,510 | 85,688 | 91,984 |

| Optimized | 416,468 | 90,380 | 92,323 |

This table shows the number of scenarios and the requests per second on each. We can see just under 10% improvement for reads, over 5% improvement for writes (which are excellent numbers). However, the mixed workload, where we expected to see the most performance boost has a difference that is pretty much a sampling error.

I’m not sure how I feel about that.

Comments

Comment preview