Performance optimization starts at the business process level

I had an interesting discussion today about optimization strategies. This is a fascinating topic, and quite rewarding as well, mostly because it is so easy to see your progress. You have a number, and if it goes in the right direction, you feel awesome.

Part of the discussion was about how a certain technical solution was able to speed up a business process significantly. What really interested me was that I felt there was a lot of performance still left on the table, because the discussion was limited to the technical side of things.

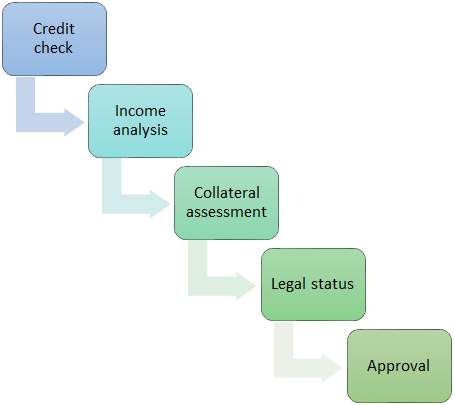

It is easier if we do this with a concrete example. Imagine that we have a business process such as underwriting a loan. You can see how that looks like below:

This process is set up so that the loan must go through a series of checks before approval. The lender wants to speed up the process as much as possible. In the case we discussed, the optimizations were mostly about how quickly we can move the loan application from one stage to the next. The idea is to keep all parts of the system as busy as possible and maximize throughput. The problem is that there is only so much that we can do with a serial process like this.

From the point of view of the people working on the system, it is obvious that you need to run the checks in this order. There is no point in doing anything else. If there is not enough collateral, why should we run the legal status check, for example?
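To make the trade-off concrete, here is a minimal sketch of the serial process as a toy model. The check names, durations (in hours), and pass/fail outcomes are hypothetical, and each check is assumed to take a fixed amount of time. It captures the early-exit logic above: once a check fails, the remaining checks never run.

```python
# A toy model of the serial underwriting process. Check names, durations
# (hours) and outcomes are hypothetical, for illustration only.
Check = tuple[str, float, bool]  # (name, hours, passes)


def serial_outcome(checks: list[Check]) -> tuple[str, float]:
    """Run checks one after another, stopping at the first failure.

    Returns (decision, hours until the decision). Checks after a
    failure never start, so no time or money is spent on them.
    """
    elapsed = 0.0
    for name, hours, passes in checks:
        elapsed += hours
        if not passes:
            return f"denied at {name}", elapsed
    return "approved", elapsed


if __name__ == "__main__":
    approved = [("credit_score", 2.0, True), ("collateral", 8.0, True),
                ("legal_status", 24.0, True)]
    no_collateral = [("credit_score", 2.0, True), ("collateral", 8.0, False),
                     ("legal_status", 24.0, True)]
    print(serial_outcome(approved))       # ('approved', 34.0)
    print(serial_outcome(no_collateral))  # ('denied at collateral', 10.0)
```

When every check passes, the applicant waits for the sum of all the stages. When the collateral check fails, the legal status check is skipped and the decision arrives after 10 hours.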

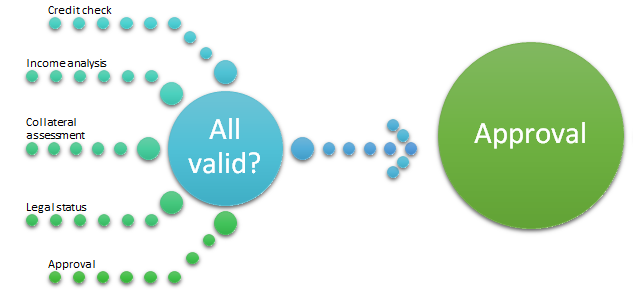

Well, what if we changed things around?

In this mode, we run all the checks concurrently. If most of our applicants are valid, this means that we can significantly speed up loan approval. Even if a significant number of applicants are going to be denied, the question now becomes whether it is worth the trouble (and expense) to run the additional checks.
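Using the same kind of hypothetical checks and durations, a sketch of the concurrent version shows both sides of that question. It assumes that all checks start at the same time, that the loan is denied the moment any check fails, and that checks still running at that point are cancelled. Real checks may not be cancellable, or may cost money as soon as they start, so treat this as a simplified model rather than a description of any actual system.

```python
# A toy model of the concurrent underwriting process. Check names,
# durations (hours) and outcomes are hypothetical, for illustration only.
Check = tuple[str, float, bool]  # (name, hours, passes)


def concurrent_outcome(checks: list[Check]) -> tuple[str, float, float]:
    """Start every check together; deny as soon as any check fails.

    Returns (decision, hours until the decision, hours of work spent).
    Assumes checks still running at decision time are cancelled, so each
    one contributes min(its duration, decision time) hours of work.
    """
    failures = [(hours, name) for name, hours, passes in checks if not passes]
    if failures:
        # The earliest failing check decides the outcome.
        decision_hours, failed = min(failures)
        decision = f"denied at {failed}"
    else:
        # Approval has to wait for the slowest check.
        decision_hours = max(hours for _, hours, _ in checks)
        decision = "approved"
    work = sum(min(hours, decision_hours) for _, hours, _ in checks)
    return decision, decision_hours, work


if __name__ == "__main__":
    approved = [("credit_score", 2.0, True), ("collateral", 8.0, True),
                ("legal_status", 24.0, True)]
    no_collateral = [("credit_score", 2.0, True), ("collateral", 8.0, False),
                     ("legal_status", 24.0, True)]
    print(concurrent_outcome(approved))       # ('approved', 24.0, 34.0)
    print(concurrent_outcome(no_collateral))  # ('denied at collateral', 8.0, 18.0)
```

With these made-up numbers, an approved loan waits only for the slowest check (24 hours) instead of the sum of all checks (34 hours), for the same amount of work. A denial on collateral comes after 8 hours instead of 10, but 18 hours of work were spent instead of 10, because the legal status check was already underway. That extra work is the expense the business has to weigh.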

At this point, it is a business decision, because we are mucking about with the business process itself. Don't get too attached to this example; I chose it because it is simple and the difference between the two business processes is obvious.

The point is that not thinking about this at the business process level completely blocks you from a very powerful optimization. There is only so much you can do within the box, but if you can get a different box…

Comments

How is this going to maximize throughput? IMHO the number of cases handled per day will stay exactly the same, equal to the throughput of the slowest operation in the process. But for sure you're reducing the average processing time by doing some things in parallel.

Rafal, throughput is bounded by the slowest operation, but latency is bounded by how much time you are waiting idle. If the slowest operation is in the middle, it "starves" the rest of the steps in the pipeline. There is also the case that if a fast process fails a loan early, we don't even need to enter the slow part.

I couldn't agree more. I work on automating complex business processes. However, often all we can do is replace human elements with their machine counterparts, without the ability or consent to question whether larger parts of the process make sense. If the process requires, as it often does, lots of human sign-off and approval steps (in business hours only), faster processing of individual parts doesn't really improve the performance of the whole system.

Ayende, you know, I was just nitpicking here: in the first sentences you mentioned optimizing for throughput, later you switched to optimizing latency, and these don't seem to be the same thing. The best highway throughput is achieved at speeds of about 20 MPH; if you increase speed, you lose some throughput.
