Transactions, request processing and convoys, oh my!

You might have noticed that we have been testing, quite heavily, what RavenDB can do. Part of that testing focused on running on barely adequate hardware at ridiculous loads.

Because we set up the production cluster like drunk monkeys, a single high-load database was able to affect all the other databases. To be fair, I explicitly gave the booze to the monkeys and then didn’t look when they made a hash of it, because we wanted to see how RavenDB does when set up by someone who has little interest in setting things up properly and just wants to Get Things Done.

The most interesting part of this experiment was that we had a wide variety of mixed workloads on the system. Some databases are primarily read heavy, some are used infrequently, some are write heavy and some are both write heavy and indexing heavy. Oh, and all the nodes in the cluster were set up identically, with each write and index going to all the nodes.

That was really interesting when we started very heavy indexing loads and then pushed a lot of writes. Remember, we intentionally under-provisioned: these machines have 2 cores and 4GB of RAM, and they were doing heavy indexing, processing a lot of reads and writes, the works.

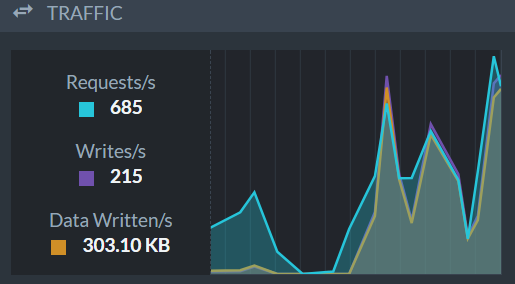

Here is the traffic summary from one of the instances:

A very interesting wrinkle that I didn’t mention is that this setup has a really interesting property that we never tested. It has fast I/O, but the number of tasks that are waiting for the two cores is high enough that they don’t get a lot of CPU time on an individual basis. What that means is that it looks like we have I/O that is faster than the CPU.

A core concept of RavenDB performance is that we can send work to the disk to run and in that timeframe we will be able to complete more operations in memory, then send a batch of them to disk. Rinse, repeat, etc.

This wasn’t the case here. By the time we finished preparing a single operation, the previous one had already completed on disk, so we proceeded with a single operation every time. That killed our performance, and it meant that the transaction merger queue would grow and grow.
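To make the batching effect concrete, here is a toy simulation. It is a sketch under stated assumptions, not RavenDB's actual code: `simulate_merger`, its parameters and the timings are all made up for illustration. A single merger prepares operations in memory one at a time and flushes whatever it has accumulated as soon as the previous flush has finished.

```python
def simulate_merger(op_cpu_ms: int, flush_io_ms: int, total_ops: int) -> list[int]:
    """Return the size of every batch sent to disk (hypothetical toy model).

    op_cpu_ms   -- time the merger effectively needs to prepare one operation
    flush_io_ms -- time the disk needs to complete one flush
    """
    batches = []
    now = 0            # merger clock, in ms
    disk_free_at = 0   # time at which the in-flight flush completes
    pending = 0        # operations prepared but not yet flushed
    done = 0           # operations prepared so far

    while done < total_ops:
        # Prepare one more operation in memory.
        now += op_cpu_ms
        pending += 1
        done += 1

        # Flush if the disk is idle, or if this was the last operation.
        if now >= disk_free_at or done == total_ops:
            start = max(now, disk_free_at)
            batches.append(pending)
            disk_free_at = start + flush_io_ms
            pending = 0

    return batches


# Plenty of CPU per operation relative to I/O: batches fill up.
healthy = simulate_merger(op_cpu_ms=1, flush_io_ms=10, total_ops=100)

# CPU-starved merger, fast disk: every flush carries a single operation.
starved = simulate_merger(op_cpu_ms=5, flush_io_ms=1, total_ops=100)

print(len(healthy), len(starved))
```

In this model the healthy case needs 11 flushes for 100 operations, while the starved case needs 100, one per operation, which is the pattern described above.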

We fixed things so we’ll take into account the load on the system when this happens, and we gave a higher priority to the transaction merging thread over normal request processing or indexing. This is because write requests can’t complete before the transaction has been committed, so obviously we don’t want to process further requests at the expense of processing the current requests.

This problem can only occur when we are competing heavily for CPU time, something that we don’t typically see. We are usually constrained a lot more by network or disk. With enough CPU capacity, there is never an issue of the requests and the transaction merger competing for the same core and interrupting each other, so we didn’t consider this scenario.

Another problem we had was the kind of assumptions we made with regard to processing power. We tested with some ridiculous performance numbers, including hundreds of thousands of writes per second, indexing, mixed read / write load, etc. But we tested that on high-end hardware, which means that requests completed fairly quickly, and that led to a particular pattern of resource utilization. Because we reuse buffers between requests, it is typically better to grab a single big buffer and keep using it, rather than having to enlarge it between requests.

If your requests are short, the number of requests you have in flight is small, so you get great locality of reference and don’t have to allocate memory from the OS so often. But if that isn’t the case…

We had a lot of requests in flight, many of them waiting for the transaction merger to complete its work while it had to fight the incoming requests for some CPU time. So we had a lot of in-flight requests, and each of them intentionally got a bigger buffer than it actually needed (pre-allocating). You can continue down this line of thought, but I’ll give you a hint: it ends with KABOOM.
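A back-of-the-envelope sketch of where that line of thought goes. The request rate, latencies and buffer size below are invented for illustration and are not measurements from this cluster. The estimate uses Little's law: the average number of in-flight requests is the arrival rate multiplied by the time each request spends in the system.

```python
MIB = 1024 * 1024


def in_flight_requests(requests_per_sec: int, latency_ms: int) -> int:
    """Little's law: average in-flight = arrival rate * time in system."""
    return requests_per_sec * latency_ms // 1000


def pinned_buffer_bytes(requests_per_sec: int, latency_ms: int, buffer_bytes: int) -> int:
    """Memory held by pre-allocated buffers if every in-flight request owns one."""
    return in_flight_requests(requests_per_sec, latency_ms) * buffer_bytes


# Hypothetical numbers: 1,000 requests/sec, a 1 MiB pre-allocated buffer each.
fast = pinned_buffer_bytes(1000, latency_ms=5, buffer_bytes=MIB)     # requests finish quickly
slow = pinned_buffer_bytes(1000, latency_ms=2000, buffer_bytes=MIB)  # requests wait on the merger

print(fast // MIB, "MiB vs", slow // MIB, "MiB")
```

With these made-up numbers the same buffer policy goes from 5 MiB to 2,000 MiB once requests start queuing, which is about half of a 4GB machine.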

All in all, I think that it was a very profitable experiment. This is going to be very relevant for users on the low end, especially those running Docker instances, etc. But it should also help if you are running production worthy systems and can benefit from higher utilization of the system resources.

Comments

What about the priority of the write thread? Was that also raised, is it lower than the merger thread priority? I'm thinking if the write thread competes with request threads for CPU it might be stalled.

Pop Catalin, The merger thread priority is higher. The actual writer thread, the one issuing the write, is a standard thread pool thread. The major reason for this is that it isn't just doing a write. It is doing things like diffing and compressing data, potentially high CPU stuff. We do not want that to happen at high priority. Instead, the tx merger monitors the situation. If we see that there is enough work there that we start lagging because of this, we will start aborting outstanding operations and return a timeout to users (which will trigger a failover to another node).

Note that in practical terms, requests don't do a lot more than read from network, then queue an operation to the tx merger, so they don't hold up a thread for too long. On the other hand, the write operation is purely compute bound (memory is hot, etc) for the duration it needs to prepare the write, and then completely I/O bound. That is a really good way to burn through all outstanding requests and then get to the write operation.

And if it is too slow, we need this for back pressure.

From your description, I'm imagining that when the request threads create a lot of work for the merge thread, the merge thread, being the highest priority, will take most of the CPU (the OS gives it priority to finish), which in turn will take CPU from the write thread and can potentially "stall it" (at this point the writes are not happening as fast as the I/O allows, but slower, due to thread stalling). Then at some point the merge thread will notice the back pressure and abort the calling threads, which will reduce pressure on the merger thread, which will reduce stalling on the write thread. However, at this point, the stalling happened anyway. Wouldn't it be better if the write thread were high priority, so that even in high CPU situations the writes are done at I/O speed, instead of the write thread being CPU starved by competing with other threads?

(P.S. Not knowing the exact internals of Raven, this is more a "what if" philosophical discussion with the purpose of better understanding the internals of Raven 4)

Pop Catalin, No, the writes are I/O bound, not CPU bound. There is a component there that is CPU bound (compression, mostly), but it tends to be pretty small compared to the cost of I/O in general. Note that the key part here is that the merge thread, assuming it has a chance to run, is typically stalled for I/O, not compute resources. So it merges a few transactions, then sends them to disk, and while this is happening, it is processing additional requests (but not committing them). The key here is that we need to have the merger thread keep everything moving.

Another issue is that we don't have a dedicated writer thread. Writes tend to be a lot rarer, and it is usually not worth dedicating a full thread to them.

Ayende, I understand that writes are IO bound. However ...

If there is CPU contention, then the write thread can get stalled (delayed) and actually issue the write later than it could have, simply because the scheduler places it in the same queue as the request threads (ignoring priority boost for now).

From MSDN:

This means that if there is CPU contention for some reason and the write thread has the same priority as the request threads, it can get stalled (delayed). By how much, and whether it has any effect, depends on the specifics of the system: the number of threads in the same priority class vs. hardware cores vs. IPC vs. MHz, etc. The stall can range from milliseconds to dozens or hundreds of milliseconds (it might be a non-issue in the end) if all the threads in the queue fully utilize their time slice (10-15 ms). So only 10 request threads fully using their 15 ms time slice can stall the write thread by 150 ms on a single-core machine.

But on a more basic note, if the write thread only issues write requests, and consumes a small amount of CPU (basically not stalling any other threads) then why shouldn't it be a higher priority (not to wait at the end of the request thread queue) in a high CPU usage scenario?

Pop Catalin, The write thread can do a non-trivial amount of CPU work. In particular, diffing and compression (and maybe encryption) are all CPU bound. Because we can do all of that in an async manner, we would rather do that than stall request processing threads too much.
