Handling tens of thousands of requests / sec on t2.nano with Kestrel

This blog post was a very interesting read. It describes the ipify service and how it grew, but what really caught my eye were the performance details.

In particular, it talks about exceeding 30 billion requests per month. The initial implementation used Node and couldn't get past 30 or so requests a second; the current version appears to be written in Go and can handle about 2,000 requests a second.
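For a sense of scale, here is a back-of-the-envelope conversion of the monthly figure into an average per-second rate. It is only a sketch: it assumes a 30-day month and perfectly even traffic, neither of which holds in practice, so peak rates are likely to be noticeably higher than this average.

```go
package main

import "fmt"

// secondsPerMonth assumes a 30-day month (an assumption; real months vary).
const secondsPerMonth = 30 * 24 * 60 * 60 // 2,592,000 seconds

// averageRate converts a monthly request count into average requests/sec,
// assuming the traffic is spread evenly across the whole month.
func averageRate(requestsPerMonth float64) float64 {
	return requestsPerMonth / secondsPerMonth
}

func main() {
	// 30 billion requests per month, the figure quoted for ipify.
	fmt.Printf("%.0f requests/sec on average\n", averageRate(30e9))
}
```

That works out to roughly 11,600 requests a second, averaged over the month.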

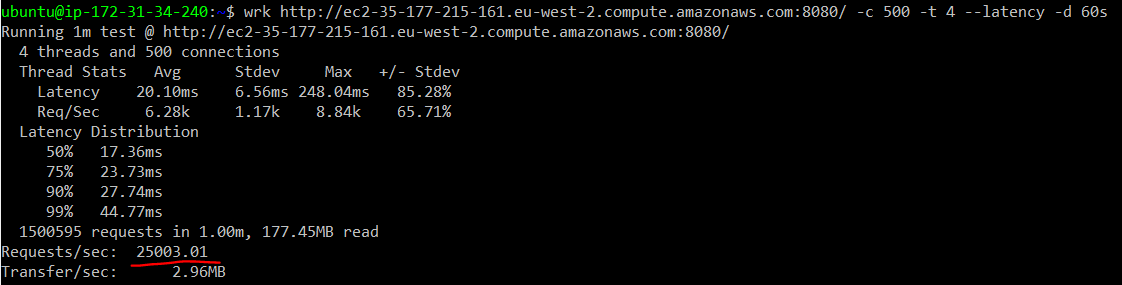

I’m interested in performance, so I decided to see what my results would be. I very quickly wrote the simplest possible implementation using Kestrel and threw it on a t2.nano in AWS. I’m pretty sure that this is the equivalent of the dyno that he is using. I then spun up a t2.small instance and used it to benchmark the system. All I did was run the code in release mode on the t2.nano, and here are the results:

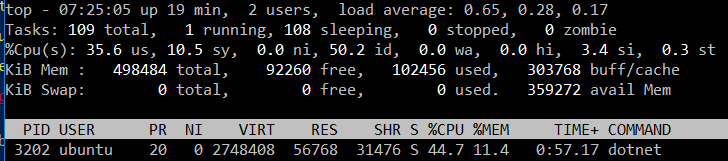

So we get 25,000 requests a second and some pretty awesome latencies under very high load on a 1 CPU / 512 MB machine. For that matter, here is the output of top from midway through the run on the t2.nano machine:

I should say that the code I wrote was quick and dirty in terms of performance. It allocates several objects per request and can likely be improved several times over, but even so, these are nice numbers, primarily because there is so little that actually needs to be done here. To be fair, a t2.nano machine is meant for burst traffic and is not likely to be able to sustain such load over time, but even when throttled by an order of magnitude, it will still be faster than the Go implementation.
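The order-of-magnitude claim is easy to check against the numbers quoted in this post. This sketch models "throttled by an order of magnitude" as a flat 10x reduction; that is a simplification for the arithmetic, not a model of how t2 CPU credits actually behave.

```go
package main

import "fmt"

// throttledRate scales a measured throughput down by a throttling factor.
func throttledRate(measured, factor float64) float64 {
	return measured / factor
}

func main() {
	const measured = 25000.0 // req/sec measured on the t2.nano in this post
	const goFigure = 2000.0  // req/sec quoted for the Go implementation
	// "Throttled by an order of magnitude" modelled as a 10x reduction.
	t := throttledRate(measured, 10)
	fmt.Printf("%.0f req/sec throttled, %.2fx the Go figure\n", t, t/goFigure)
}
```

Even at a tenth of the measured rate, 2,500 requests a second is still 1.25 times the quoted Go figure.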


Comments

It's impressive! But I've always wondered about benchmarks such as this one... I remember seeing numbers quoted for Erlang servers that were in the millions of requests/sec. They seemed ridiculous to me when I couldn't even reach 1% of that in my apps (Delphi w/ Indy sockets at the time).

Eventually, I realized these numbers are just raw TCP-level throughput, achieved by employing all sorts of caching optimizations without doing anything useful or remotely real-world. I understand this might indicate a baseline for deciding on a tech stack (i.e., evaluating the overhead of the stack itself), but the numbers are surely going to drop drastically once we cross into the application layer!

Bottom line, I just don't see any value in a number that doesn't represent at least a basic real-world request - one that users are likely to encounter themselves while using the app. But then again, I don't write marketing material, either. :)

Does the benchmark use HTTP pipelining? If so, the numbers are greatly inflated compared to the real world.

Aleksander, it is quite doable to do a million req/sec, even on commodity hardware. See the TechEmpower benchmarks. This isn't usually useful for most things, until it really is. That said, it most certainly lowers the barrier to entry for a lot of stuff. For example, ASP.NET on the .NET Framework cannot do more than a few thousand requests a second on good hardware. The machines you see doing a lot of req/sec are typically doing I/O with very little computation, and typically use some sort of async model (Erlang, Node). The core parts also tend to be written in C or equivalent, and have very little to do with the actual usage scenario.

Erlang in particular, mind, is very good at networking because it can do almost zero-cost string handling (for the kind of string handling it does) with the use of vectored I/O. Node.js, however, falls off rapidly the moment you do something interesting with it beyond just forwarding calls.

Pop Catalin, no, there is no use of pipelining here. I could probably get to over 100K req/sec on the t2.nano machine if I did that, but there is no real point in doing so.

After reading this, I could not believe that a nano instance could handle 25k requests, especially with .NET. I do realize these are semi-synthetic benchmarks and real-life performance might not be as good. I tried to do an apples-to-apples comparison with a couple of Go web servers (namely Iris and Gin).

These performed about as well as Kestrel did, although Kestrel was a couple of percent faster. On the other hand, both Iris and Gin used only a fraction of the memory that dotnet did. One thing to note: my EC2 nano instance could only do 21,508 requests/second, which suggests that your nano is faster than mine :(

Anyway, if you'd like to see the full results, I've posted them on my blog.

Vik, that is really interesting. Note that the Kestrel code is pretty wasteful of memory, with at least 4 allocations per request that are completely unnecessary. .NET also has a higher minimum amount of memory used, roughly 15 to 20 MB. That said, I'm pretty sure that in this context, Go is able to do escape analysis on the values, stack-allocate them, and avoid any GC costs.

Hi, out of curiosity: your statement "with at least 4 allocations per request" refers to the Kestrel pipeline, not your gist code, right?

Other than the .ToString() (I wonder whether our new Span<T> overlord offers another way around that), I can't see any allocations in your user code (other than maybe hidden Tasks/closures/async state machine thingies).

HannesK, the WriteAsync method will allocate a buffer to convert the string to byte[], and there is also the allocation of the X-Forwarded-For string. Another allocation is the async task, yes. If there is no X-Forwarded-For, we allocate a string for the IP address.

What determines performance is not the AWS classification of the instance, it's the CPU and memory speed. Code this simple doesn't have much in the way of memory requirements, and the CPU is still a top Xeon processor. If you enable t2 unlimited, you can probably use that in production for many cases with no downsides, and the cost will be lower than a higher instance type.

We look at 1 CPU/512 MB RAM and forget that it's ~2.8 billion operations per second with high-speed memory, on top-level server hardware. If you think about it, for what is just a glorified ping, 25,000 is still poor performance compared to what it could do. (This editor sucks on mobile =))
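The commenter's point can be made concrete with a per-request budget. This sketch takes the ~2.8 billion operations per second at face value (it reads like a 2.8 GHz clock rate, and real instructions per cycle vary), and it is an idealised upper bound that ignores the network stack, the kernel, GC, and t2 CPU-credit throttling. The function name opsPerRequest is hypothetical.

```go
package main

import "fmt"

// opsPerRequest (hypothetical name) estimates how many CPU operations are
// available per request if one core doing opsPerSec serves requestsPerSec.
// Idealised: nothing else competes for the core.
func opsPerRequest(opsPerSec, requestsPerSec float64) float64 {
	return opsPerSec / requestsPerSec
}

func main() {
	// ~2.8 billion operations/sec (the commenter's figure) at 25,000 req/sec.
	fmt.Printf("%.0f operations per request\n", opsPerRequest(2.8e9, 25000))
}
```

That is roughly 112,000 operations per request, far more than echoing an IP address back should need.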

Natan, the problem is the limits imposed if you don't have unlimited enabled, yes. And yes, the CPU itself is very fast. As stated, this is not optimized in any shape or form, and the probable limit is the network, not CPU time. I was never able to go over 50% CPU sustained with this.

I agree. It was more an observation on the fact that some commenters are surprised a t2.nano can do real work. I use some t2.nano instances in production for specific tasks with t2 unlimited, and the CPU credit cost is ridiculously low: about a dollar for an entire month to get rid of the CPU limitation.
