The state of RavenDB

A couple of weeks ago we finished the development of RavenDB. All that remains now is to get it actually out the door, and we take that very seriously.

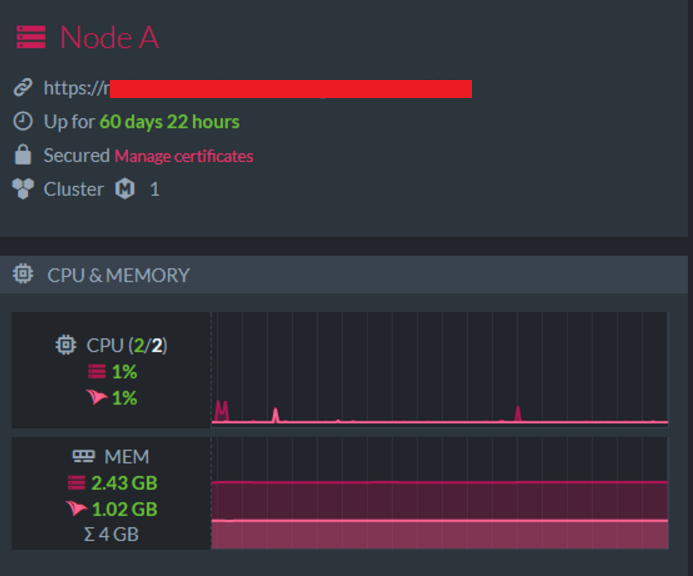

To the right you can see one of our servers, which has been running a longevity test for the past two months in a production environment. We currently have a few teams doing nasty stuff to network and hardware to see how hostile they can make the environment and how RavenDB behaves under these conditions. For the past few days, if I have to go to the bathroom I need to watch out for random network cables strewn all over the place as we create ad hoc networks and break them.

We have been able to test some really interesting scenarios this way and uncover some issues. I might post about a few of these in the future, since some of them are interesting. Another team has been busy seeing what kind of effects you can get when you abuse the network at the firewall level, doing everything from random packet drops to reducing quality of service to almost nothing, and seeing whether we recover properly.

One of the bugs that we uncovered in this manner was an issue that would happen during disposal of a connection that timed out. We would wait for the TCP close in a synchronous fashion, which turned an error that was already handled into a freeze for the server.
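To illustrate the general shape of this kind of bug and one way around it, here is a minimal Python sketch. It is not RavenDB's code or its actual fix; the function name `close_with_timeout` and the one second default are assumptions made for the example. The idea is to start the close, wait for it only for a bounded time, and abort the transport if the peer never completes the shutdown.

```python
import asyncio


async def close_with_timeout(writer, timeout=1.0):
    """Close a stream writer without letting a stalled close block the caller.

    `writer` is expected to behave like asyncio.StreamWriter: it exposes
    close(), wait_closed() and a `transport` attribute with abort().
    Returns True on a clean close, False if the transport had to be aborted.
    (Hypothetical helper, written only for illustration.)
    """
    writer.close()  # begin the close; this call itself does not block
    try:
        # Bound the wait so a dead or unreachable peer cannot hold us forever.
        await asyncio.wait_for(writer.wait_closed(), timeout)
        return True
    except (asyncio.TimeoutError, OSError):
        # The connection was already considered failed, so drop it
        # immediately instead of waiting any longer.
        writer.transport.abort()
        return False
```

Because the wait is awaited rather than performed as a blocking call, other connections keep being served while this one is torn down, and the timeout puts an upper limit on how long a single bad connection can linger.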

Yet another team is working on finishing the documentation and smoothing the setup experience. We care very deeply about the “5 minutes out of the gate” experience, and it takes a lot of work to ensure that it wouldn’t take a lot of work to set up RavenDB properly (and securely).

We are making steady progress and the list of stuff that we are working on grows smaller every day.

We are now in the last portion: running longevity and failure tests and basically taking the measure of the system. One of the things that I’m really happy about is that we are actively abusing our production system, to the point where, if there was a Computer Protective Services, we would probably have CPS take it away. But the overall system is running just fine. For example, this blog has been running on RavenDB 4.0, and the sum total of all issues there after the upgrade was not handling the document id change. The cluster going down, individual machines going crazy or being taken down, network barriers, etc.: it just works.

Comments

Any chance we will see a write-up about the impact of the Meltdown and Spectre OS patches on Raven?

Neat. Have you thought about writing a "chaos monkey" service that randomly injects failures into components? Netflix documented its Chaos Monkey (which randomly terminates EC2 instances in production) and its other "simian army" characters (one now takes down entire availability zones of servers) at https://medium.com/netflix-techblog/the-netflix-simian-army-16e57fbab116

Pop Catalin, We haven't tested a before / after setup yet. We do a lot of calls for the network, so I expect there will be some effect.

Andrew, Yes, that is more or less what we do.