Distributed computing fallacies: There is one administrator

Actually I would state it better as “there is any administrator / administration”.


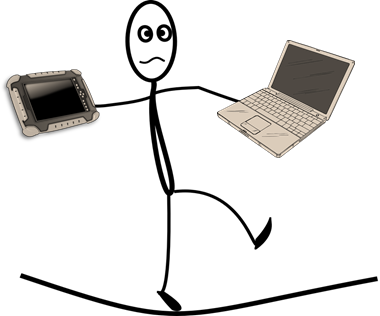

This fallacy is meant to refer to different administrators defining conflicting policies, at least according to Wikipedia. But our experience has shown that the problem is much worse. It isn’t that you have two companies defining policies that end up at odds with one another. It is that even within the same organization, you have very different areas of expertise and responsibility, and troubleshooting any problem in an organization of significant size is hard.

Sure, you have the firewall admin, who is usually the same person as the network admin or at least has that person’s number. But what if the problem is actually in an LDAP proxy making a cross-forest query to a remote domain across DMZ and VPN lines, and the root cause is a DNS change that hasn’t propagated on the internal network because security policies require DNSSEC verification, but the update was made without signing it?

Figuring that one out, when the only error you get is “I can’t authenticate to your software, therefore the blame is yours”, can be a true pain. Especially because the person you are dealing with likely has no relation to the people who have a clue about the problem, and often isn’t even able to run the tools you need to diagnose the issue because they lack the permissions to do so (“no, you may not sniff our production networks and send that data to an outside party” is a security policy that I support, even if it makes my life harder).

So you have an issue, and you need to figure out where it is happening. And in the scenario above, even if you manage to figure out what the actual problem is (which requires hopping across multiple servers and crossing security zones), you’ll realize that you need the key used for DNSSEC, which is in the hands of yet another admin (most probably on vacation right now).

And when you fix that, you’ll find that you now need to flush the DNS caches of multiple DNS proxies and local servers, each of which requires admin privileges held by a different bunch of people.

So no, you can’t assume one (competent) administrator. You have to assume that your software will run in the most brutal of production environments, and that users will actively flip all sorts of switches to see if you break, just because of Murphy.

What you can do, however, is design your software accordingly. That means reducing the number of external dependencies to a minimum and controlling what you are doing. It means that, as part of the design of the software, you consider the failure points and see how much of everything you can either own outright or be sure you’ll have the facilities in place to inspect and validate, with as few parties involved in the process as possible.

A real-world example of such a design decision was to drop any and all authentication schemes in RavenDB except for X509 client certificates. In addition to the high level of security and trust they bring, there is another really important aspect: it is possible to validate them and operate on them locally, without requiring any special privileges on the machine or the network. A certificate can be validated easily, using common tools available on all operating systems.

We ran into scenarios similar to the one above on a pretty regular basis, and I got really tired of trying to debug someone’s Active Directory deployment and all the ways it can cause mishaps.

Comments

This is one of the core principles I have been pushing for during 15 years of my enterprise software career.

Funnily enough, the majority of enterprise software aggressively opposes this principle: all the in-house "frameworks" I've seen don't bat an eyelid at adding a dependency on minute little things like Tibco v1.XYZ or a specific quirk of HTTP handling in a specific server, etc.

Sadly, this enterprise thinking is nowadays spreading to the major open-source hub: NPM. Bizarrely deep and broad dependency trees lead to ever more complex tools (webpack, yarn, angular-cli) that feed back into an insane complexity of interdependencies and brittleness.

Of course, knowing that this whole cycle of complexity is wrong gives you a huge competitive advantage when designing your own software. Your system will survive where others crumble in the most embarrassing ways. Totally appreciate your pride in doing the right thing here! An investment well worth it.

Also look at the Chrome installer on Windows, which hints at the same ideology: they've hijacked some lesser-known OS and .NET Framework installation kinks in order to allow Chrome to be installed BOTH with admin rights and without. Why should the user bother with these junky, irrelevant questions when all they want is feature X?

As a software developer, you've got to deal with complexity as much as you can, withholding it from the end user by any means possible (while keeping the results sane, too!). That applies to security, to UX, to error messages, etc.

The NPM example is good and bad at the same time. Good, because you never know what a simple 'npm install' will download along with the thing you asked for, and sometimes you just raise your eyebrows in disbelief, like 'who the hell needs Python and Ruby here? Isn't this supposed to be a JavaScript framework?'. And bad? Bad because, despite this hidden entanglement of everything with everything else, the thing seems to work OK in most cases, and you reach a point of saturation where you already have 90% of the frequently used libraries downloaded to your system.

And Java/Maven infrastructure is where I don't see the same advantages: there are many libraries and frameworks available, just like in NPM, but the dependencies conflict more often and are harder to resolve, plus the application servers bring their own dependencies/requirements to the table, and as a result you spend countless hours just trying to figure out how to run the bloody thing. And finally, from a developer's perspective: if it's JavaScript, keep it limited to JavaScript. If it's .NET, make sure .NET is all that's needed to run it. But there are some geniuses out there who require you to install Ruby to be able to run some scripts that create files for some Python utility that will run a compiler to build .NET libraries.

I wouldn't call NPM working OK, as I wouldn't call usual enterprise software working OK, despite what your usual corporate citizen says.

The hidden cost (sheer size, risk, maintenance) is quite high. Yes, it's hidden, and it's made to be implicitly accepted, just as it is in the use case in the article. Pick a typical DB and it provides a bunch of authentication options, each coming with quirks and small print like "no fucking way it will ever work on Debian above vXYZ because of that tiny little abcde.so file".

Mind you, there are tools like TypeScript on NPM that sidestep the muddle completely: no dependencies are required AT ALL. Precisely the secret sauce we're talking about here: leave the ignorant people to their own mud, be an exception.
