Unique constraints didn’t make the cut for RavenDB 4.0

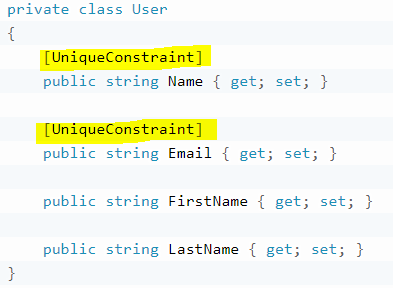

Unique Constraints is a bundle in RavenDB 3.x that allowed you to… well, define unique constraints. Here is the classic example:

It was always somewhat awkward to use (you had to mess around with configuration on both the server and the client side), but it worked pretty well, as long as you were running on a single server.

With RavenDB 4.0, we put a lot more emphasis on running in a cluster, and when the time came to discuss how we were going to handle unique constraints in 4.0, it was obvious that this was going to be neither easy nor performant.

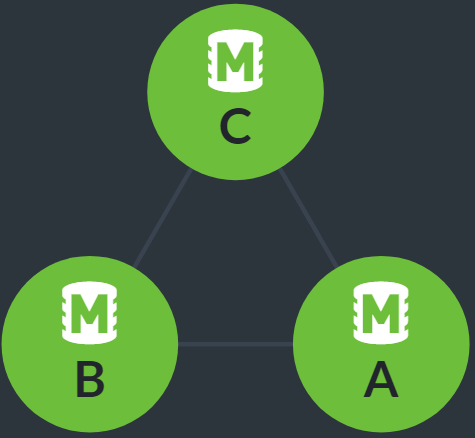

The key here is that in a distributed multi-master database, there is no good way to provide a unique constraint. Imagine the following database topology:

Now, what happens if we create two different User documents with the same username on node C? It is easy for node C to detect and reject this, right?

But what happens if we create one document on node B and the other on node A? This is a much harder problem to deal with, and that is without even getting into the harder aspects of how to deal with failure conditions.
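To make the difference concrete, here is a minimal Python sketch. It assumes a toy model (the `Node` class, `create_user`, and `replicate_from` are hypothetical, not RavenDB APIs) where each node checks uniqueness only against the documents it can see locally and replication happens after the write is acknowledged.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical multi-master node that can only check uniqueness locally."""
    name: str
    users: dict = field(default_factory=dict)  # doc id -> username

    def create_user(self, doc_id: str, username: str) -> bool:
        # Local check only: writes made on other nodes that have not
        # replicated here yet are invisible to this check.
        if username in self.users.values():
            return False  # duplicate detected and rejected on this node
        self.users[doc_id] = username
        return True  # acknowledged to the client

    def replicate_from(self, other: "Node") -> None:
        # Asynchronous replication: copy documents this node has not seen.
        for doc_id, username in other.users.items():
            self.users.setdefault(doc_id, username)


def duplicates(node: Node) -> set:
    """Return usernames that appear on more than one document on this node."""
    seen, dups = set(), set()
    for username in node.users.values():
        if username in seen:
            dups.add(username)
        seen.add(username)
    return dups


a, b, c = Node("A"), Node("B"), Node("C")

# Both writes on node C: the second one is rejected.
print(c.create_user("users/1", "bob"))  # True
print(c.create_user("users/2", "bob"))  # False

# One write on node A, one on node B: each passes its local check.
print(a.create_user("users/3", "alice"))  # True
print(b.create_user("users/4", "alice"))  # True

# The duplicate only shows up after replication, once both writes were accepted.
a.replicate_from(b)
b.replicate_from(a)
print(duplicates(a))  # {'alice'}
```

By the time the duplicate is visible on either node, both clients have already been told their write succeeded.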

The main problem here is that by the time we discover that we have a duplicate, it is very likely that actions were already taken based on that information, which is generally not a good thing.

As a result, we were left in somewhat of a lurch. We didn’t want a feature that would work only on a single node, or one that contained a hidden broken invariant. The way to handle this properly in a distributed environment is to use some form of consensus algorithm. And what do you know, we actually have a consensus algorithm implementation at hand: the Raft protocol that is used to manage the RavenDB cluster.
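Why consensus helps can be sketched in a few lines of Python. This does not implement Raft (no leader election, no replication); the hypothetical `ReplicatedLog` only models the property that a consensus protocol provides: every node applies the same commands in the same order. Given that, a "reserve this value" command can be decided deterministically, and every replica reaches the same answer without coordinating again.

```python
from dataclasses import dataclass, field


@dataclass
class ReplicatedLog:
    """Hypothetical stand-in for a consensus-backed log such as Raft.

    Only the outcome of consensus is modeled: a single, agreed order of entries.
    """
    entries: list = field(default_factory=list)

    def append(self, command: tuple) -> int:
        self.entries.append(command)
        return len(self.entries) - 1  # index of the committed entry


@dataclass
class StateMachine:
    """Each replica applies the log independently and must reach the same state."""
    reservations: dict = field(default_factory=dict)  # unique value -> owner doc id
    results: dict = field(default_factory=dict)       # log index -> accepted?

    def apply(self, log: ReplicatedLog) -> None:
        for index, (op, value, owner) in enumerate(log.entries):
            if index in self.results:
                continue  # already applied
            if op == "reserve":
                # Deterministic rule: the first reservation in log order wins.
                accepted = value not in self.reservations
                if accepted:
                    self.reservations[value] = owner
                self.results[index] = accepted


log = ReplicatedLog()
i_a = log.append(("reserve", "alice", "users/3"))  # request that came in via node A
i_b = log.append(("reserve", "alice", "users/4"))  # request that came in via node B

replica_1, replica_2 = StateMachine(), StateMachine()
replica_1.apply(log)
replica_2.apply(log)

print(replica_1.results[i_a], replica_1.results[i_b])  # True False
print(replica_1.reservations == replica_2.reservations)  # True
```

The cost is that every such check has to go through the cluster's consensus round trip, which is exactly the performance concern raised above.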

That, however, led to a different problem. The process of using a unique constraint would now be broken into two distinct parts. First, we would verify that the value is indeed unique, then we would save the document. This can lead to issues if there is a failure between these two operations, and it puts a heavy burden on the system to always check the unique constraint across the cluster on every update.
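The failure window between the two steps can be illustrated with a minimal Python sketch. The names here (`reserve`, `create_user`, the `crash_between` flag, `SimulatedCrash`) are hypothetical, and the two dictionaries stand in for the cluster-wide reservation and the regular document store. A crash after step 1 but before step 2 leaves a reservation that no document owns, so the value is blocked for everyone.

```python
from typing import Dict


class SimulatedCrash(Exception):
    """Stands in for a process crash or network failure between the two steps."""


reservations: Dict[str, str] = {}  # unique value -> owning doc id (cluster-wide)
documents: Dict[str, dict] = {}    # doc id -> document (regular document store)


def reserve(value: str, owner: str) -> bool:
    # Step 1: cluster-wide uniqueness check and reservation.
    if value in reservations:
        return False
    reservations[value] = owner
    return True


def create_user(doc_id: str, username: str, crash_between: bool = False) -> bool:
    if not reserve(username, doc_id):
        return False
    if crash_between:
        # Step 1 has happened, step 2 never will.
        raise SimulatedCrash()
    # Step 2: save the document itself.
    documents[doc_id] = {"username": username}
    return True


try:
    create_user("users/1", "alice", crash_between=True)
except SimulatedCrash:
    pass

print(reservations)                     # {'alice': 'users/1'}
print("users/1" in documents)           # False
print(create_user("users/2", "alice"))  # False, blocked by the orphaned reservation
```

Recovering from this requires some cleanup or reconciliation process that knows which reservations are orphaned, which is part of why a check-then-save design is fragile.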

The interesting thing about unique constraints is that they rarely change once created. And if they do, they are typically part of a very explicit workflow. That isn’t something that is easy to handle without a lot of context. Therefore, we decided that we couldn’t reliably implement them and dropped the feature.

However… reliable and atomic distributed operations are now on the table, and they allow you to achieve the same thing, and usually in a far better manner. The full details will be in the next post.

Comments

Could unique constraints be implemented manually by saving a document with the unique stuff in the ID (e.g. email) and asking for a replication factor of 3? (in this example) Would that confirm the save if none of the 3 servers had that document and reject it if any of them had it?

(I can't wait for the next post :P)

Mircea, No, not quite. The problem in this case is that you might have conflicts happening at the same time. What would happen if you have two concurrent operations against different servers? Having a replication factor of 3 doesn't mean that you won't get conflicts, just that the document was persisted 3 times. There is a solution for that, though, just not this one.
