Dynamic nodes distribution in RavenDB 4.0

This is another cool feature that is best explained by showing rather than telling.

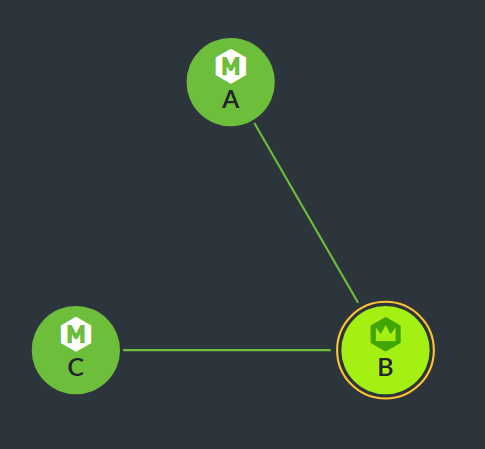

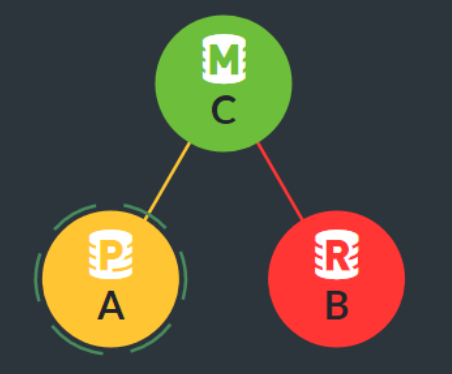

Consider the following cluster, made of three nodes.

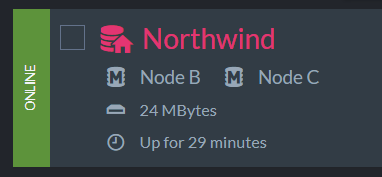

On this cluster, we created the following database:

This database is hosted on nodes B and C, so it has a replication factor of 2. Here is what the database topology looks like:
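To make the idea of a database topology a bit more concrete, here is a minimal Python sketch of a database group as a plain data structure. The class and field names (`DatabaseTopology`, `members`, `promotables`, `rehabs`) are assumptions chosen for illustration; this is a toy model, not the RavenDB client API.

```python
from dataclasses import dataclass, field


@dataclass
class DatabaseTopology:
    """Toy model of a database group (names are illustrative, not RavenDB's API)."""
    members: list[str] = field(default_factory=list)      # fully up-to-date nodes (M)
    promotables: list[str] = field(default_factory=list)  # nodes still catching up
    rehabs: list[str] = field(default_factory=list)       # suspected or failed nodes (R)
    replication_factor: int = 1

    def all_nodes(self) -> list[str]:
        # Every node that currently holds (or is receiving) a copy of the database
        return self.members + self.promotables + self.rehabs


# The database from the example: hosted on nodes B and C, replication factor 2
topology = DatabaseTopology(members=["B", "C"], replication_factor=2)
print(topology.all_nodes())  # ['B', 'C']
```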

And now we are going to kill node B.

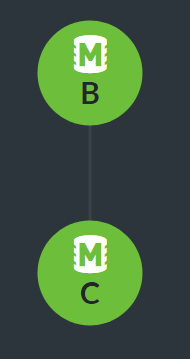

The first thing that happens is that the cluster marks this node as suspect. Here, the M on node C stands for Member, and the R on node B stands for Rehab.

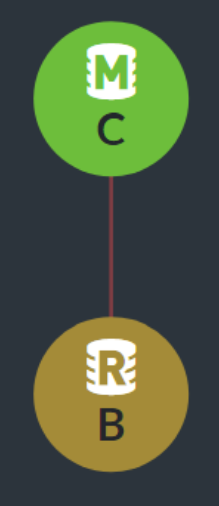

Shortly afterward, we’ll detect that it is actually down:

And this leads to a problem: we were told that this database needs a replication factor of 2, but one of its nodes is down. There is a grace period, of course, but the cluster will eventually decide that it needs to be proactive about it rather than wait around for nodes long past, hoping they'll call, maybe.
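The sequence so far (suspect the node, move it to Rehab, then wait out a grace period before acting) can be sketched as two small functions. This is a toy model under stated assumptions: the names `demote_to_rehab` and `should_add_replacement`, and the idea of passing the grace period as a plain number of seconds, are hypothetical and only meant to show the shape of the decision, not how RavenDB implements it.

```python
from dataclasses import dataclass, field


@dataclass
class DatabaseTopology:
    """Toy model of a database group (hypothetical names)."""
    members: list[str] = field(default_factory=list)
    promotables: list[str] = field(default_factory=list)
    rehabs: list[str] = field(default_factory=list)
    replication_factor: int = 2


def demote_to_rehab(topology: DatabaseTopology, node: str) -> None:
    """Move a suspected node from Member (M) to Rehab (R)."""
    if node in topology.members:
        topology.members.remove(node)
        topology.rehabs.append(node)


def should_add_replacement(topology: DatabaseTopology, down_since: float,
                           now: float, grace_period: float) -> bool:
    """Act only if the group is below its replication factor AND the
    failed node has been down for longer than the (hypothetical) grace period."""
    healthy = len(topology.members) + len(topology.promotables)
    return healthy < topology.replication_factor and now - down_since > grace_period


topology = DatabaseTopology(members=["B", "C"])
demote_to_rehab(topology, "B")
print(topology.members, topology.rehabs)  # ['C'] ['B']
print(should_add_replacement(topology, down_since=0, now=10, grace_period=60))   # False
print(should_add_replacement(topology, down_since=0, now=120, grace_period=60))  # True
```

Counting promotable nodes as healthy here is a design choice of the sketch: once a replacement is already catching up, there is no reason to add yet another one.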

So the cluster takes steps to do something about it:

And it does: it adds node A to the database group. Node A is added as a promotable member, and node C is now responsible for catching it up with all the data in the database.
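A rough sketch of this step, assuming a naive placement rule: pick the first cluster node that is not already part of the database group, add it as promotable, and record which existing member is responsible for catching it up. The function `add_replacement` and the `mentors` map are hypothetical; a real cluster would likely weigh more factors when choosing the new node.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatabaseTopology:
    """Toy model of a database group (hypothetical names)."""
    members: list[str] = field(default_factory=list)
    promotables: list[str] = field(default_factory=list)
    rehabs: list[str] = field(default_factory=list)
    mentors: dict[str, str] = field(default_factory=dict)  # promotable -> member feeding it data


def add_replacement(topology: DatabaseTopology, cluster_nodes: list[str]) -> Optional[str]:
    """Add a cluster node outside the group as a promotable member."""
    in_group = set(topology.members + topology.promotables + topology.rehabs)
    candidates = [n for n in cluster_nodes if n not in in_group]
    if not candidates or not topology.members:
        return None  # no spare node, or no healthy member to copy the data from
    new_node = candidates[0]  # naive choice, for illustration only
    topology.promotables.append(new_node)
    topology.mentors[new_node] = topology.members[0]  # an existing member catches it up
    return new_node


topology = DatabaseTopology(members=["C"], rehabs=["B"])
print(add_replacement(topology, ["A", "B", "C"]))  # A
print(topology.promotables, topology.mentors)      # ['A'] {'A': 'C'}
```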

Once that is done, we can promote node A to be a full member in the database. We'll remove node B from the database entirely, and when it comes back up, we'll delete the data from that node.

On the other hand, if node B were able to get back on its feet, it would now be a race between node A and node B to see which one is the first to become up to date and eligible to become a full member of the database again.

In most cases, that would be the pre-existing node, since it has a head start on the new node.
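The race can be modeled by processing progress reports in the order they arrive: the first candidate whose replicated position reaches the source's position gets promoted. This sketch rests on a few assumptions: each node's progress is reduced to a single etag-like counter, `resolve_race` is a hypothetical name, and the losing candidate is dropped once the replication factor is met (mirroring how node B is removed when node A wins).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatabaseTopology:
    """Toy model of a database group (hypothetical names)."""
    members: list[str] = field(default_factory=list)
    promotables: list[str] = field(default_factory=list)
    rehabs: list[str] = field(default_factory=list)
    replication_factor: int = 2


def resolve_race(topology: DatabaseTopology,
                 reports: list[tuple[str, int]],
                 source_etag: int) -> Optional[str]:
    """Promote the first candidate (in report order) that has caught up."""
    for node, etag in reports:
        candidates = topology.promotables + topology.rehabs
        if node not in candidates or etag < source_etag:
            continue  # not a candidate, or still behind the source
        # The winner becomes a full member
        if node in topology.promotables:
            topology.promotables.remove(node)
        else:
            topology.rehabs.remove(node)
        topology.members.append(node)
        # Assumption: once the replication factor is met, losing candidates are dropped
        if len(topology.members) >= topology.replication_factor:
            topology.promotables.clear()
            topology.rehabs.clear()
        return node
    return None


# Node B comes back with most of the data already, so it catches up first
topology = DatabaseTopology(members=["C"], promotables=["A"], rehabs=["B"])
print(resolve_race(topology, [("A", 40), ("B", 100), ("A", 100)], source_etag=100))  # B
print(topology.members, topology.promotables)  # ['C', 'B'] []
```

The head start of the pre-existing node shows up naturally here: it only needs to replicate what it missed while it was down, so its counter tends to reach the source's position first.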

Clients, by the way, are not really aware that any of this is happening. The cluster manages their topology and lets them know which nodes they need to talk to at any given point.
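One way to picture the client side: the client caches the topology together with a version number and replaces it only when the cluster hands it a newer one. The sketch below is hypothetical (`ClientTopology`, `maybe_update`, and the integer version are assumptions for illustration) and is not how the RavenDB client is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ClientTopology:
    """What a client might cache about a database group (illustrative)."""
    etag: int = 0
    nodes: list[str] = field(default_factory=list)

    def maybe_update(self, server_etag: int, server_nodes: list[str]) -> bool:
        # Only accept a topology that is newer than the one already cached
        if server_etag <= self.etag:
            return False
        self.etag = server_etag
        self.nodes = list(server_nodes)
        return True


client = ClientTopology(etag=1, nodes=["B", "C"])
print(client.maybe_update(2, ["C", "A"]))  # True: the cluster replaced B with A
print(client.maybe_update(1, ["B", "C"]))  # False: stale topology is ignored
print(client.nodes)                        # ['C', 'A']
```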

Oh, and just so you know, these images are screenshots from the Studio. You can actually watch this happen live when we introduce a failure into the system and see how the cluster recovers from it.
