With performance, test, benchmark and be ready to back out

Last week I spoke about our attempt to switch our internal JS engine from Jint to Jurassic. The primary motivation was speed: Jint is an interpreter, while Jurassic compiles to IL and eventually machine code. That is a good thing from a performance standpoint, and the benchmarks we looked at, both external and internal, told us that we could expect anything between twice as fast and ten times as fast. That was enough to convince me to go for it. I have a lot of plans for doing more with javascript, and if it can be fast enough, that would be just gravy.

So we did that. We took all the places in our code where we were doing something with Jint and moved them to Jurassic. Of course, that isn’t nearly as simple as it sounds. We have a lot of places like that, and a lot of already existing code. But we also took the time to do this properly, making sure that there is a single namespace that is aware of JS execution in RavenDB and that hides that functionality from the rest of the code.

Now, one of the things that we do with the scripts we execute is expose to them both functions that they can call and documents to look at and mutate. Consider the following patch script:

this.NumberOfOrders++;

This is on a customer document that may be pretty big, as in, tens of KB or higher. We don’t want to have to serialize the whole document into the JS environment and then serialize it back; that road leads to a lot of allocations and extreme performance costs. No, already with Jint we had implemented a wrapper object that we expose to the JS environment, one that does lazy evaluation of just the properties the script needs and tracks all changes so we can reserialize things more easily.
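To make the idea concrete, here is a minimal sketch in plain javascript. It is not the actual RavenDB wrapper, just an illustration built on a standard `Proxy`; `lazyDocument`, `readProperty` and the sample document are made-up names. It shows the two behaviors described above: a property is only pulled out of the stored document when the script touches it, and every write is recorded separately so only the changes need to be written back.

```javascript
// Hypothetical sketch: lazyDocument and readProperty are illustrative names,
// not RavenDB APIs.
function lazyDocument(readProperty) {
  const materialized = new Map(); // properties the script actually read
  const modified = new Map();     // properties the script wrote
  const proxy = new Proxy({}, {
    get(_target, name) {
      if (typeof name !== 'string') return undefined;
      if (modified.has(name)) return modified.get(name);
      if (!materialized.has(name)) {
        // Pay the conversion cost only for properties that are touched.
        materialized.set(name, readProperty(name));
      }
      return materialized.get(name);
    },
    set(_target, name, value) {
      modified.set(name, value); // track the change instead of mutating storage
      return true;
    },
  });
  return { proxy, materialized, modified };
}

// Stand-in for a large stored document; reads are counted to show laziness.
const stored = { Name: 'ACME', NumberOfOrders: 41, Notes: 'x'.repeat(50000) };
let reads = 0;
const doc = lazyDocument((name) => { reads++; return stored[name]; });

// Run the patch script with `this` bound to the wrapper.
(function () { this.NumberOfOrders++; }).call(doc.proxy);
```

After the script runs, `doc.modified` holds only `NumberOfOrders`, and the large `Notes` property was never read.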

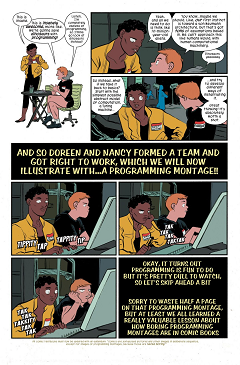

Moving to Jurassic broke all of that, so we had to rewrite it all. The good thing is that we already knew what we wanted and how we wanted to do it; it was just a matter of figuring out how Jurassic allows it. There was an epic coding montage (see image on the right) and we got it all back into working state.

Along the way, we paid down a lot of technical debt around consistency and exposure of operations and made it easier all around to work with JS from inside RavenDB. I hate javascript.

After a lot of time, we had everything stable enough so we could now test RavenDB with the new JS engine. The results were… abysmal. I mean, truly and horribly so.

But that wasn’t right, we specifically sought a fast JS engine, and we did everything right. We cached the generated values, we reused instances, we didn’t serialize / deserialize too much and we had done our homework. We had benchmarks that showed very clearly that Jurassic was the faster engine. Feeling very stupid, we started looking at the before and after profiling results and everything became clear and I hate javascript.

Jurassic is the faster engine, if most of your work is actually done inside the script. But most of the time, the whole point of the script is to do very little itself and to direct the rest of the code in what it is meant to do. That means a lot of calls back and forth between the script and our code, and that is where we actually pay the most costs. And in Jurassic, this is really expensive. Also, I hate javascript.

It was really painful, but after considering this for a while, we decided to switch back to Jint. We also upgraded to the latest release of Jint and got some really nice features in the box. One of the things that I’m excited about is that it has an ES6 parser, even if it doesn’t fully support all the ES6 features. In particular, I really want to look into implementing arrow functions for Jint, because it would be very cool for our use case, but this will be later, because I hate javascript.

Instead of reverting the whole of our work, we decided to take this as a practice run of the refactoring to isolate us from the JS engine. It turned out that it was much easier to switch from Jurassic to Jint than it was the other way around, but it is still not a fun process. There is too much that we depend on. But this is a really great lesson in understanding what we are paying for. We got a great deal of refactoring done, and I’m much happier about both our internal API and the exposed interfaces that we give to users. This is going to be much easier to explain now. Oh, and I hate javascript.

I had to deal with three different javascript engines (two versions of Jint and Jurassic) at a pretty deep level. For example, one of the things we expose in our scripts is the notion of null propagation. So you can write code like this:

return user.Address.City.Street.Number.StopAlready;

And even if you have missing properties along the way, the result will be null, and not an error, as it normally would be. That requires quite deep integration with the engine in question and led to some tough questions about the meaning of the universe and the role of keyboards in our life versus the utility of punching bugs (not a typo).
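For a feel of what null propagation means, here is a rough approximation in standard javascript using a `Proxy`. This is an assumption-laden sketch, not the code we ended up with inside the engine: `nullSafe`, `unwrap` and the `RAW` symbol are hypothetical helpers. It also shows why plain javascript is not enough on its own. A missing property in the middle of the chain has to come back as some object (otherwise the next `.` would throw), so the final value only becomes a real `null` after an explicit unwrap step at the end.

```javascript
// Hypothetical helpers, for illustration only.
const RAW = Symbol('raw'); // key used to get the underlying value back

function nullSafe(value) {
  // Primitives such as strings and numbers are returned as-is; this sketch
  // only covers missing or null properties on objects.
  if (value !== null && value !== undefined && typeof value !== 'object') {
    return value;
  }
  const isMissing = value === null || value === undefined;
  const target = isMissing ? {} : value;
  return new Proxy(target, {
    get(t, name) {
      if (name === RAW) return isMissing ? null : value;
      return nullSafe(t[name]); // keep wrapping so the chain never throws
    },
  });
}

// Turn the result of a chain back into a plain value (or null).
function unwrap(result) {
  if (result === null || typeof result !== 'object') return result;
  const raw = result[RAW];
  return raw === undefined ? result : raw;
}

const user = nullSafe({ Name: 'Oren', Address: { City: null } });
const missing = unwrap(user.Address.City.Street.Number.StopAlready);
const name = unwrap(user.Name);
```

Here `missing` ends up as `null` instead of throwing a `TypeError`, while `name` is still the ordinary string.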

The actual code we ended up with for this feature is pretty small and quite lovely, but getting there was a job and a half, causing me to hate javascript, and I wasn’t that fond of it in the first place.

I’ll have the performance numbers comparing all three editions sometime next week. In the meantime, I’m going to do something that isn’t javascript.

Comments

Ouch, painful to discover that at the end of the process. At least you got a good refactoring out of it.

With regards to the new Jint, ES6 features like arrow functions would be very much welcomed, both for the ease of writing them and the "this" capturing.

Sidenote: I had no idea you could do user.Address.City.Street.Number.StopAlready in patches. I just tried it in 3.5 Studio and sure enough it works, heh. I'm unsure whether to rely on that, though; I've always just used standard JS in my patches.

Judah, Yes, that was painful. I might give arrow functions a try when I have a free weekend, right now I realized that I'm looking into variable name resolution inside JS functions, and that is scary enough.

This is also how our indexes work in C#: you can do the same in the index, and it will not throw. This is really important for a schema-free db. That isn't something you can generally rely on, and many users will likely go with the standard approach, but this gives us better behavior for the common scenario, in particular when you look at the actual query as in the previous post. Try imagining needing to pull a value from two levels down and doing that with null checking.

Have you considered doing your own language, similar to what Elasticsearch does? They have a language called Painless, and it just forwards calls to a whitelisted set of Java APIs. The biggest reason they did that is because a language like JavaScript has a lot of backdoors that can cause a lot of security issues. Also, they are trying to keep control of performance issues like stack overflows. I have thought about creating a .NET version of Painless. I'm curious about your thoughts.

He he. Love the paragraph end statements.

Eric, I haven't checked how that is implemented, but if you don't do an interpreter, it is very hard to make the system secure. One of the things we tried to do with Jurassic is to plug some of that, and we were told that we missed at least three different ways to do that without even realizing it. The other problem is that building a language from scratch is _hard_, if there is an issue with the JS code, a user can take that, put it in a browser, and debug that. That means that I don't have to do anything to get it working. Hell, I can probably offer them a debugger right there in the studio if they need it.

That all sounds like a rough ride! What are your thoughts on switching from JavaScript to C# for scripting, using Roslyn?

@Oren: "if there is an issue with the JS code, a user can take that, put it in a browser, and debug that. That means that I don't have to do anything to get it working. Hell, I can probably offer them a debugger right there in the studio if they need it". Yes, except that even with just the null propagation it is not (strictly speaking) normal JS anymore. How about that?

@Oren: just to be clear, i love the idea of null propagation in a JS environment (even more so in one like this RavenDB usage), it's a really great feature to have.

@ Andrew Davey: I'm not convinced that would help. Roslyn is fairly heavy-weight and Roslyn scripting specifically has some significant performance issues (and wasn't the focus of the Roslyn team recently, so it probably won't improve much in the near future).

Andrew, There is no good way to give a C# based scripting language that isn't also a major security hazard. And given the fact that we need to run this as part of our queries, we cannot allow that.

njy, Actually, no. In that case, what we'll pass to your code would be a Proxy, not a standard object. See here: https://medium.com/@andv/js-alternative-of-rubys-method-missing-78dfe600fe31 This would just work, pretty much.

@Oren oh, I see, that probably makes sense.

@Oren, I know you probably looked into this, but what was the reason you couldn't cache or reuse the instance of the engine ?

Ian, I most certainly did cache and reuse the instance.

@Oren, guess I am misunderstanding the post. I read it as saying that instantiating the engine was much more expensive. I think this part of the post does not make much sense to me.

Ian, Creating the engine (and parsing / generating IL, which we count in engine creation) is expensive. However, what you are doing with the JS matters. In Jurassic, every time you make a call, you do a pretty expensive operation, and we had to do a lot of these. Sending data to Jurassic from the outside was expensive and getting data from Jurassic was expensive. And calling from inside Jurassic was expensive. Now, remember that I'm talking about this in the sense that I'm counting CPU instructions, and that I'm usually looking at this for orchestrating, not actual execution, so the integration cost was major for us.
