RavenDB 4.0: Unbounded results sets

Unbounded result sets are a pet peeve of mine. I have seen them destroy application performance more than once. With RavenDB, I decided to cut that problem at the knees and placed a hard limit on the number of results that you can get from the server. Unless you configured it differently, you couldn’t get more than 1,024 results per query. I was very happy with this decision, and there have been numerous cases where it saved an application from serious issues.

Unfortunately, users hated it. Even though it was configurable, and even though you could effectively turn it off, just the fact that it was there was enough to make people angry.

Don’t get me wrong, I absolutely understand some of the issues raised. In particular, if the data goes over a certain size we suddenly show wrong results or errors, leaving the app in a “we need to fix this NOW” state. It is an easy mistake to make. In fact, in this blog, I noticed a few months back that I couldn’t get entries from 2014 to show up in the archive. The underlying reason was exactly that: I’m getting the number of items per month, and I’ve been blogging for more than 128 months, so the data got truncated.
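To make the archive bug concrete, here is a minimal Python sketch of how a silent cap truncates a per-month listing. It is a toy, not the blog's code or the RavenDB client: `query_capped`, `months_between`, the start and end dates, and the oldest-first ordering are all assumptions made for illustration. Only the figure of 128 comes from the story above.

```python
from datetime import date

# Hypothetical cap, standing in for the old default number of results per query.
DEFAULT_PAGE_SIZE = 128


def query_capped(rows, page_size=DEFAULT_PAGE_SIZE):
    """Return at most page_size rows and say nothing about the rest."""
    return rows[:page_size]


def months_between(start, end):
    """List (year, month) pairs from start to end, inclusive."""
    out = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        out.append((year, month))
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return out


# One archive row per month, oldest first (the dates are made up).
archive = months_between(date(2004, 1, 1), date(2017, 10, 1))
visible = query_capped(archive)

print(len(archive), len(visible))                    # 166 128
print((2014, 12) in archive, (2014, 12) in visible)  # True False
```

Nothing fails here, which is the problem: the query succeeds and the page renders, just without the rows that fell past the cap.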

In RavenDB 4.0 we removed the limit. If you don’t specify a limit in a query, you’ll get every matching result there is in the database. You can ask RavenDB to raise an error if you didn’t specify a limit clause, which is a way for you to verify that you won’t run into this issue in production. That check is off by default, which will probably better match what new users expect.
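The opt-in check can be pictured with a small Python sketch. This is a toy in-memory store, not the RavenDB client: `Store`, `throw_if_limit_not_set`, `take`, and `UnboundedQueryError` are hypothetical names chosen for the example. The idea it shows is simply that a query with no explicit limit is rejected when the flag is on, while any explicit limit, even a huge one, is accepted.

```python
import sys


class UnboundedQueryError(Exception):
    """Raised when a query has no explicit limit and the guard is on."""


class Store:
    """Toy in-memory store; an illustration, not a real database client."""

    def __init__(self, docs, throw_if_limit_not_set=False):
        self._docs = list(docs)
        # Global switch, off by default, mirroring the behavior described above.
        self.throw_if_limit_not_set = throw_if_limit_not_set

    def query(self, predicate=lambda doc: True, take=None):
        if take is None and self.throw_if_limit_not_set:
            raise UnboundedQueryError("query has no explicit limit")
        matches = [doc for doc in self._docs if predicate(doc)]
        return matches if take is None else matches[:take]


relaxed = Store(range(5000))
strict = Store(range(5000), throw_if_limit_not_set=True)

print(len(relaxed.query()))                  # 5000: no limit, no error
print(len(strict.query(take=20)))            # 20: explicit limit
print(len(strict.query(take=sys.maxsize)))   # 5000: explicit, but effectively unbounded

try:
    strict.query()
except UnboundedQueryError as err:
    print("rejected:", err)
```

Passing a very large `take` is the escape hatch in this sketch: the author of the query has stated in code that the unbounded read is deliberate.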

The underlying issue of loading too many results is still there, of course. And we still want to do something about it. What we did was raise alerts.
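As a rough illustration of "alert instead of fail", the Python sketch below returns the full result set and only records a notification when it is large. Everything in it is assumed for the example: `NotificationCenter`, `run_query`, and the `LARGE_RESULT_THRESHOLD` value are hypothetical and say nothing about how RavenDB implements or tunes its alerts.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; the real server's trigger is not specified here.
LARGE_RESULT_THRESHOLD = 2048


@dataclass
class NotificationCenter:
    """Collects alerts for an admin to read later; never interrupts a query."""
    alerts: list = field(default_factory=list)

    def raise_alert(self, message):
        self.alerts.append(message)


def run_query(docs, notifications, take=None):
    """Return the requested results, recording an alert if there are many."""
    results = list(docs if take is None else docs[:take])
    if len(results) > LARGE_RESULT_THRESHOLD:
        notifications.raise_alert(f"query returned {len(results)} results")
    return results


center = NotificationCenter()
docs = list(range(160_000))

everything = run_query(docs, center)        # succeeds, but leaves an alert behind
first_page = run_query(docs, center, take=20)

print(len(everything), len(first_page))     # 160000 20
print(center.alerts)                        # ['query returned 160000 results']
```

The caller gets correct data either way; the cost of the large read shows up as something to review rather than as a failure in the application.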

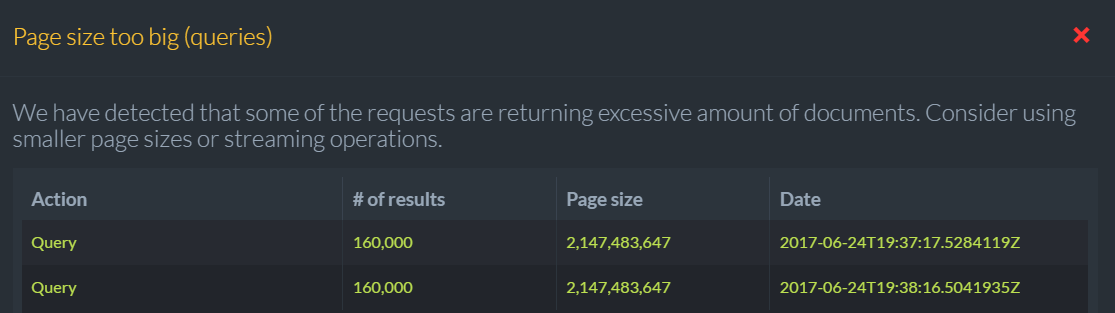

I have made a query on a large set (160,000 results, about 400 MB in all) and the following popped up in the RavenDB Studio:

This tells the admin that there is some information that they need to look at. This is intentionally unobtrusive.

When you click on the notifications, you’ll get the following message.

And if you click on the details, you’ll see the actual details of the operations that triggered this warning.

I actually created an issue so we’ll supply you with more information (such as the index, the query, duration and the total size that it generated over the network).

I think that this gives the admin enough information to act upon, but will not cause hardship to the application. This makes it something that we Should Fix instead of Get the OnCall Guy.

More posts in "RavenDB 4.0" series:

- (30 Oct 2017) automatic conflict resolution

- (05 Oct 2017) The design of the security error flow

- (03 Oct 2017) The indexing threads

- (02 Oct 2017) Indexing related data

- (29 Sep 2017) Map/reduce

- (22 Sep 2017) Field compression

Comments

Seems like you've decided on another way to handle them now, in contrast to what you wrote back in September.

I think this is a good decision. Whenever you choose "fast but possibly wrong" behavior instead of "slow but accurate" it should always be a conscious and deliberate decision that you make. Even if you design for BASE and you allow yourself to be wrong, you have set limits about it.

Yes, this is better I think. The 128 max results in Raven 3.5 has annoyed me a number of times. If there is a limit it should be in KB, not number of results, and then throw an error if the result set is too large. It never made sense to me, especially as when returning a large number of results I will always use projection and only return the few fields I need. I would actually appreciate a max result size of say 1-2MB that is user configurable.

@Ian having a KB limit is even worse than number limit, now you never know when the system will truncate or raise an error. Happy debugging partial results!

@Pop Catalin, I said for it to throw an error if it goes over the limit!

Daniel, I still think that it is the right technical decision. It is just that the overwhelming reaction from users is "don't touch that".

Big change indeed. But, probably the right one from a user's perspective. I know this has been discussed many times, so I won't rehash any of those arguments.

I will say, having used Raven for several years now, it has forced me to always consider unbounded results. Even to the point where if I omitted a .Take(...) clause before a .ToListAsync(), I'd add a comment to the effect of "the results of this query are domain-limited; there will be no more than 20".

Now I never get bit by the unbounded results limit in Raven 3 simply because it's trained me to think about it ahead of time.

Still, this change in Raven 4 is pragmatic. New users won't be surprised by breakage at runtime, but users will still know about their issue via the Studio alert. Good compromise. +1

If you "ask RavenDB to raise an error if you didn’t specify a limit clause" can you still opt in per query for unbounded result sets?

Daniel, No, it is a global setting. If you specify this, it doesn't make sense to "just allow it over here".

@Oren

"Daniel, No, it is a global setting. If you specify this, it doesn't make sense to "just allow it over here"."

Actually, it makes perfect sense to allow bypassing the default behavior per query.

Moti, We accept PRs.

I liked this constraint very much because it taught me to think about "unbound results". Nobody could ignore my PR comment on query().ToList() because with prod data the result will be wrong.

The only problem with it is that it is too easy to introduce a bug, often one that shows up a little bit later, once the users have entered enough data. That's why I welcome the new way of dealing with it!

Is there a way to get this error through client api for logging purposes (for example a health check)?

Thank you for listening!

I don't really see how the result count is relevant? Currently Raven will happily give me 128 1MB results but refuse to give me 2000 1KB results??? Sometimes I just want a list of ids to populate a dictionary or do a hash intersect, but I have to either change a global setting to allow me to do it or use streaming.

@Oren these restrictions are more about controlling the size in KB of the response yes?

Also, I think in its current form Raven should throw an error if I make an unbounded query with no skip or take etc. and the result set is greater than 128. This has thrown me a few times when I first started development with Raven.

Tobias, I'm not sure that I'm following you here. If you set the flag, the exception will be thrown and you can handle it.

Ian, Typically the size of the request matters a lot, yes. We also have created an issue to add the same warning for very large requests. Note that the expected use case is that you'll either have this flag set for dev only or have it for all your queries. Note that with this flag, you can say .Take(int.MaxValue), which won't throw, but won't add a limit.

Hi Oren, I probably didn't explain myself well enough.

If it's configured to just do warnings and allow unbound resultsets, is there a way to query the server to see if there are any warnings? (Not through the raven mgmt studio) This would allow to add the warnings into our custom health check dashboards/monitoring.

Tobias, Yes, of course. Everything in RavenDB is exposed over REST. For example, see: http://4.live-test.ravendb.net/databases/test2/notification-center/watch

Oren,

Hallelujah!

Pure Krome, Streaming gives you streaming access to the data, both on the server and the client. This is a much more efficient system for large requests.

You can ping the notification center endpoint for such things, but nothing in the code, and yes, you can set it globally.
