Reviewing Noise Search Engine: Part III

After the previous two parts, I think that I have a good high-level understanding of what is going on in the Noise codebase. As a reminder, I’m reviewing commit 48d808375e541eca383826ea928272976bce064d.

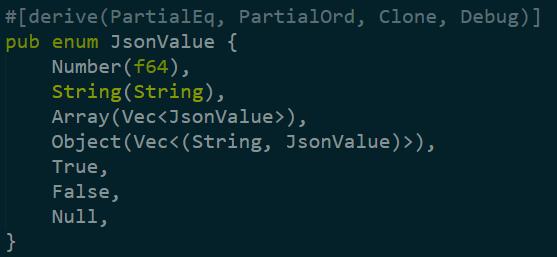

Now I’m reading through the JsonValue file. The first interesting thing here is:

Given all the code I have read so far, I think that this is a really elegant way to handle JSON, both in how you can define such a recursive structure in 7 lines of code and in how this allows you to express relatively complex scenarios quite nicely.
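To make the idea concrete, here is a minimal sketch of what a recursive JSON type of that size can look like in Rust. The type name `Json`, the variant names and the `leaf_count` helper are my own illustration and are not copied from Noise; the point is only that an enum can refer to itself through `Vec`, and that a `match` then walks the whole tree.

```rust
/// A recursive JSON value. Names here are illustrative, not taken from Noise.
#[derive(Debug, Clone, PartialEq)]
pub enum Json {
    Null,
    Bool(bool),
    Number(f64),
    String(String),
    Array(Vec<Json>),
    // Properties kept as an ordered list of (name, value) pairs.
    Object(Vec<(String, Json)>),
}

impl Json {
    /// Counts the scalar (leaf) values, walking nested arrays and objects.
    pub fn leaf_count(&self) -> usize {
        match self {
            Json::Array(items) => items.iter().map(Json::leaf_count).sum(),
            Json::Object(props) => props.iter().map(|(_, v)| v.leaf_count()).sum(),
            _ => 1,
        }
    }
}
```

Any operation over a document (rendering, comparing, counting) ends up with the same shape: one `match`, with the two container variants recursing.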

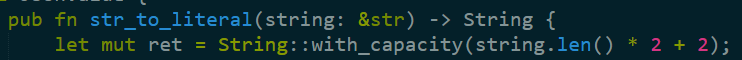

One thing that bugs me is that there are a lot of things like this:

This is done to have enough space for escaping. The problem is that this wastes quite a bit of space. The last few blog posts I had were about 2KB of text each. Imagine doing a batch of a few thousand of them: just these kinds of calls can take up a lot of extra memory. Now, I’m used to managed memory, where this kind of thing hangs around and needs to be collected. This code is in Rust, and it will clean itself up as soon as it is out of scope. But given that we hold on to it for a non-trivial amount of time, I think that it is a concern. It might actually be cheaper to go over the string multiple times to figure out exactly how many escape chars we need.
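Here is a rough sketch of the "go over the string twice" alternative, assuming a simplified escaping scheme that only handles quotes, backslashes and newlines. The function names are hypothetical and this is not the Noise code; it only shows that counting first lets us request the exact size from `String::with_capacity` instead of over-allocating.

```rust
/// Extra bytes needed to escape `c` (only quote, backslash and newline here).
fn extra_bytes(c: char) -> usize {
    match c {
        '"' | '\\' | '\n' => 1,
        _ => 0,
    }
}

/// Two passes: count the escapes first, then allocate once with the exact size.
pub fn escape_exact(input: &str) -> String {
    let extra: usize = input.chars().map(extra_bytes).sum();
    let mut out = String::with_capacity(input.len() + extra);
    for c in input.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            other => out.push(other),
        }
    }
    out
}
```

The trade-off is an extra scan of the input in exchange for a buffer that is no larger than it needs to be. Whether that wins in practice depends on how long the buffers live, so it would need measuring.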

One of the first optimizations we did when we wrote our own JSON parser was to get rid of the costly escape char handling. It took literally more time than anything else, because we kept having to scan the string and break it over and over again.

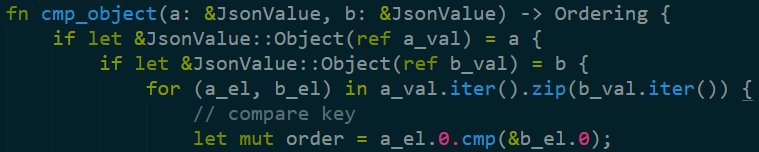

Another issue that popped up is here:

A silent assumption here is that the order of properties in the two objects is the same. Two identical objects that have different property order will sort unequal. That is likely to be a surprise to the user.
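A small sketch of the problem, using a simplified object model (a list of name and integer pairs) rather than the real Noise types. Both function names are hypothetical. The first compares properties in their stored order; the second sorts by property name first, which is one possible way to make the comparison independent of order.

```rust
use std::cmp::Ordering;

/// Simplified object: an ordered list of (property name, value) pairs.
type Props = Vec<(String, i64)>;

/// Compares properties pairwise in stored order, so property order affects the result.
pub fn cmp_in_stored_order(a: &Props, b: &Props) -> Ordering {
    a.cmp(b)
}

/// Sorts copies of both property lists by name before comparing.
pub fn cmp_ignoring_order(a: &Props, b: &Props) -> Ordering {
    let mut a = a.clone();
    let mut b = b.clone();
    a.sort_by(|x, y| x.0.cmp(&y.0));
    b.sort_by(|x, y| x.0.cmp(&y.0));
    a.cmp(&b)
}
```

With `{x: 1, y: 2}` and `{y: 2, x: 1}`, the first function reports a difference and the second reports equality. The sorted version pays for a clone and a sort on every comparison, which is probably why an implementation would be tempted to skip it.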

The next file is parser.rs, which is over 1,300 lines of code (almost 20% of the entire codebase of Noise!). This is pretty typical parsing code: low level, nasty and quite tedious. I skimmed it briefly, but I don’t think that there is anything really important going on there. Its main purpose is to take a query string and produce a filter.

The next file is query.rs and I’m going to assume that this will lead me to also jump to filter.rs, which I previously skipped.

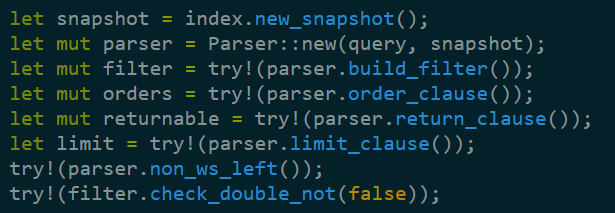

The code here starts using terms that I’m familiar with from Lucene. We’ll start where the money is, in Query::get_matches, which starts by building the query itself:

Basically, create a snapshot of the index, parse the query. We need to pass the snapshot to the parser so it can pass it on to the filters that it builds internally. The order of operations in this code also determines the required order of appearances of the clauses in the Noise query syntax.

The rest of the method goes over the query and builds it, setting up ordering, aggregation and such. There is close cooperation between query.rs, filter.rs and snapshot.rs. The query is the high-level concept of everything that must happen, while the filter is much lower level and just finds the right stuff. On top of that, the query layers boosting, scoring, ordering and aggregation. The filter uses the snapshot to access the stored information.

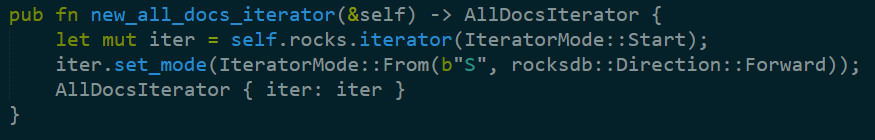

For example, the AllDocsFilter is using the snapshot.new_all_docs_iterator, which is defined as:

Basically, scan all the values with the prefix of S, which I think stands for sequence number.
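The prefix scan idea can be sketched without RocksDB at all. Below I use a `BTreeMap` as a stand-in for the sorted key space, and the key shapes (`S#1`, `K.title` and so on) are made up for the example; I have not checked them against the real Noise key layout. The technique is the usual one: seek to the first key at or after the prefix, then keep reading while the keys still start with it.

```rust
use std::collections::BTreeMap;

/// Returns every (key, value) pair whose key starts with `prefix`, in key order.
/// The BTreeMap stands in for a sorted key-value store such as RocksDB.
pub fn scan_prefix(store: &BTreeMap<String, String>, prefix: &str) -> Vec<(String, String)> {
    store
        // Seek to the first key that is >= the prefix.
        .range(prefix.to_string()..)
        // Stop as soon as a key no longer carries the prefix.
        .take_while(|(k, _)| k.starts_with(prefix))
        .map(|(k, v)| (k.clone(), v.clone()))
        .collect()
}
```

Because the keys are sorted, everything sharing a prefix is contiguous, so the scan touches only the matching entries plus one more.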

More complex stuff can be seen in the Scorer, as a good example. We have terms prefixed with C, which I think stands for Count, and which give the number of times that a particular term appears in a particular position. And K is the number of times a particular property has shown up in general. This seems to be the basis of the tf-idf calculation. Those are merged together using the RocksDB merge command and the custom merge policy that we looked at in index.rs.
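As a rough illustration of what a counter-style merge amounts to, here is a sketch that folds signed deltas into running counts. It uses a plain `HashMap` instead of RocksDB, and both the key names and the assumption that the operands are simple signed deltas are mine, not something I verified in the Noise merge code.

```rust
use std::collections::HashMap;

/// Folds signed count deltas into the running totals, one key at a time.
/// Keys without a stored value start from zero.
pub fn apply_deltas(counts: &mut HashMap<String, i64>, deltas: &[(&str, i64)]) {
    for (key, delta) in deltas {
        *counts.entry((*key).to_string()).or_insert(0) += *delta;
    }
}
```

Since addition is associative, the deltas can be applied in any grouping and still produce the same totals, which is what makes this kind of update a good fit for a merge-style write instead of a read-modify-write cycle.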

At this point I looked at the clock and realized that this is now after midnight and that I got to the most interesting parts that I wanted to see, the rest are pretty familiar details, since a lot of this follows the Lucene construction.

I’m going to have another blog post about my thoughts about this project, which will show up tomorrow.
