A thread per task keeps the headache away

One of the very conscious choices we made in RavenDB 4.0 is to use threads. Not just to run code that is multi-threaded, but to actively use the notion of a dedicated thread per task.

In fact, most of the work in RavenDB (beyond servicing requests) is done by a dedicated thread. Each index, for example, has its own thread, and transactions are merged and pushed using (mostly) a single thread. Replication uses dedicated threads, and so does the cluster communication mechanism.

That runs contrary to advice that I have been given many times: that threads are an expensive resource and that we should not hold up a thread if we can use async operations to give it up as soon as possible. And to a certain extent, I still very much believe it. Certainly all our request processing works in this manner. But as we got faster and faster we observed some really hard issues with using thread pools, since you can’t rely on them executing a particular request in a given time frame, and having a mixed bag of operations in a thread pool is a recipe for slowing the whole thing down.

Dedicated threads give us a lot of control and simplicity, from the ability to control the priority of certain tasks to the ability to debug and blame them properly.

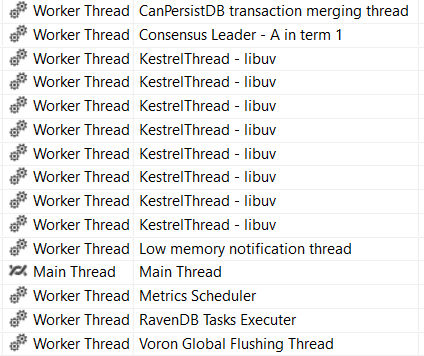

For example, here is how a portion of the threads on a running RavenDB server look like in the debugger:

And one of our standard endpoints can give you thread timing for all the various tasks we do, so if there is an expensive thing going on, you can tell, and you can act accordingly.
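To make the idea of named threads with per-task timing concrete, here is a minimal sketch. It is written in Python for brevity and is not RavenDB's actual .NET code; the `DedicatedTask` class, the `thread_timings` helper, and the task names are all hypothetical. It assumes a platform where `time.thread_time()` is available (Python 3.7+ on Linux and Windows).

```python
import threading
import time


class DedicatedTask:
    """Runs one long-lived job on its own named thread and tracks its CPU time."""

    def __init__(self, name, work, interval=0.01):
        self.name = name
        self.cpu_seconds = 0.0
        self._work = work
        self._interval = interval
        self._stop = threading.Event()
        # The thread name is what shows up in a debugger or a thread dump.
        self._thread = threading.Thread(target=self._run, name=name, daemon=True)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()

    def _run(self):
        while not self._stop.is_set():
            self._work()
            # thread_time() reports CPU time consumed by the calling thread only.
            self.cpu_seconds = time.thread_time()
            self._stop.wait(self._interval)


def thread_timings(tasks):
    """Return {task name: CPU seconds}, roughly what a debug endpoint could expose."""
    return {task.name: task.cpu_seconds for task in tasks}


def demo():
    # Hypothetical task names: one CPU-heavy job and one that is mostly idle.
    tasks = [
        DedicatedTask("Indexing of Orders/Totals", lambda: sum(range(20_000))),
        DedicatedTask("Replication to node B", lambda: None),
    ]
    for task in tasks:
        task.start()
    time.sleep(0.2)
    for task in tasks:
        task.stop()
    return thread_timings(tasks)
```

Calling `demo()` returns a dictionary keyed by thread name, so an expensive task can be blamed by name instead of being an anonymous pool worker.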

All of this has also led to an architecture that is mostly about independent threads doing their jobs in isolation, which means that a lot of the backend code in RavenDB doesn’t have to worry about threading concerns. And debugging it is as simple as stepping through it.

That isn’t true for request processing threads, which are heavily async, but they are mostly doing reads. Even when you are sending a write to RavenDB, what actually happens is that the request thread parses the request, then queues it up for the transaction merging thread to process. That means that there is usually little (if any) contention on locks throughout the system.
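The hand-off described above can be sketched roughly as follows. This is an illustration in Python, not RavenDB's implementation: the `TransactionMerger` class, its `enqueue` method, the in-memory `store` dictionary, and the batch size are all assumptions made for the example. Request threads only enqueue work, and a single dedicated thread applies writes in batches, so the data itself needs no lock.

```python
import queue
import threading
from concurrent.futures import Future


class TransactionMerger:
    """Applies queued writes in batches on a single dedicated thread."""

    def __init__(self, max_batch=64):
        self.store = {}  # touched only by the merger thread while it runs
        self._pending = queue.Queue()
        self._max_batch = max_batch
        self._thread = threading.Thread(
            target=self._run, name="Transaction merger", daemon=True
        )
        self._thread.start()

    def enqueue(self, key, value):
        """Called by request threads: hand the write over and get a Future back."""
        done = Future()
        self._pending.put((key, value, done))
        return done

    def close(self):
        self._pending.put(None)  # sentinel that tells the merger thread to exit
        self._thread.join()

    def _run(self):
        while True:
            item = self._pending.get()  # block until there is work
            if item is None:
                return
            batch, stopping = [item], False
            # Merge whatever else is already waiting into the same batch.
            while len(batch) < self._max_batch:
                try:
                    nxt = self._pending.get_nowait()
                except queue.Empty:
                    break
                if nxt is None:
                    stopping = True
                    break
                batch.append(nxt)
            for key, value, _ in batch:
                self.store[key] = value  # no lock: single writer
            for _, _, done in batch:
                done.set_result(len(batch))  # report the merged batch size
            if stopping:
                return
```

A request handler would call `merger.enqueue("users/1", {...})` and wait on the returned future. The only shared structure is the queue, which is where the low lock contention comes from in this sketch.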

It is a far simpler model, and it has proven itself capable of making a very complex system understandable and maintainable for us.

Comments

One can identify a similar shift in higher level software architecture, especially in the world of containers. Seeing a dedicated process (just like one dedicated thread in your scenario) doing the thing it is supposed to and doing it right, running as a separate Docker container, is very common these days. I do not know if these two things are related though - probably similar forces at play.

I applaud that transition. I feel in the next 5 years a lot of people are going to wake up to some hardship associated with async.

Does that not mean that you had to backport some async code to a sync model?

tobi, Yes, we had to. For example, if a replication thread needs to make an HTTP call, the only API we have in CoreCLR is async. So we need to wrap that properly. For the most part, we are using direct TCP connections, so we are able to use the sync API without too much wrapping.

Thread-per-task certainly makes things easier to keep track of. I've been using that approach for a while in our system because it makes it relatively easy to control the balance of CPU resource between different types of background tasks.

But that only really works if said tasks are CPU-bottlenecked, and these days we spend longer waiting on data over the network from our RDBMS. So I'm now looking to use TPL async to queue up multiple 'threads of execution' on a mini-pool of 1-3 threads (hidden behind a TaskScheduler), which lets us keep a logically-sequential programming model but run X instances on Y threads, and then maintain separate small pools of threads for each type of task (updating indexes, deferred update of DB tables, background data migrations, etc). This also gives us two levers for balancing each job instead of one very big one.

I seem to recall a previous blog post of yours from a few months/years ago talking about a similar setup, but I can't find it right now. I get a lot of ideas from this blog, but rarely have the opportunity to put them into practice immediately! We don't have anything like the throughput requirements of RavenDB but there's always something useful to learn.

Alex, A thread per task is not useful solely for CPU bound tasks. Sure, if you have a LOT of tasks all doing I/O, you should be using async. That is what we are doing for request processing, which uses pure async, and that is how we can handle hundreds of thousands of requests / sec with about 40 request processing threads.

But a lot of long running tasks also do I/O. For example, writing to the disk, or replicating over the network. The key here is that we don't have too many of those, and we want them to block the thread while the I/O operation is running. This is because it is much easier to figure out what is going on (oh, replication to XYZ is stuck) from the thread status than it is from trying to follow the state of async tasks in the air.

Hi Alex, Have you considered using TPL Dataflow ActionBlock to implement your mini-pools / queues ? It sounds as if an ActionBlock using the MaxDegreeOfParallelism could be useful.

@Stig, most of our code in this area actually predates TPL, and migrating to a 'framework-based' approach was rather difficult. Separating the CPU-heavy and IO-heavy parts of each type of job is not feasible in the short term so we're stuck with a mixed workload per 'thread' for the moment. It's something I'll take another look at though, thanks! Could make the refactor quite a bit simpler.

@Oren: agreed. The problem we have is that the workload of our tasks was split fairly evenly between CPU-heavy and IO-heavy work and predated TPL by a couple of years. The CPU-heavy side has 'shrunk' over the years as CPUs get faster and our code gets tighter, so once we're properly async here we can probably get away with a single thread.

Incidentally, debugging async-related issues has been on my mind a lot lately. Memory dumps yield stack traces but those don't tell you much about async waits. But it should be possible to inspect a memory dump and build a graph of all waiting tasks and their continuations. I've not found any existing tools which do this though.

Alex, VS can do some of that, it is tracking tasks, but it is nothing near thread stacks, and I'm not aware of anything that can come close to making sense of pending tasks. That can be critical, because a task that didn't return may hold up a lot of stuff (resources, requests, etc), but there is no easy way to track it.

Indeed not. It seems that most of the 'postmortem' stuff around Tasks relies on being able to trace the running process, or at least execute code in its memory space to get the Task to tell you about its continuations.

My attempt is here. It's just about barely useful, able to list all incomplete live Tasks and a tree of their continuations, but it can't yet properly interpret every type of continuation or link all delegates to lines of code. Unfortunately it depends on .NET Framework class internals (names of members, etc) but I'm hoping that those don't change often. My heapwalking tools are being refined in isolation on another branch at the moment.

Hopefully that will be useful to someone!
