RavenDB 4.0: Working with attachments

In my previous post, I talked about attachments, how they look in the studio and how to work with them from code. In this post, I want to dig a little deeper into how they are actually working.


Attachments are basically blobs that can be attached to a document. A document can have any number of attachments, and the actual contents of each attachment are stored separately from the document itself. One advantage of this separate storage is that it also lets us handle de-duplication.

The trivial example: if you need to attach the same file to a lot of documents, only a single instance of that file will be kept around. There are quite a lot of use cases that call for this (imagine the default profile picture, for example), but this really shines when you start working with revisions. Every time a document changes (which includes modifications to its attachments, of course), a new revision is created. Instead of having each revision clone all of the attachments, or not having attachments tracked by revisions at all, each revision simply references the same attachment data. That way, you get a complete view of the document at any point in time, and can implement auditing, tracking, etc.
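To make the de-duplication idea concrete, here is a minimal sketch of a content-addressed attachment store. It is not RavenDB's implementation: the `AttachmentStore` class, the use of SHA-256 as the content key, and the reference counting are all assumptions made for illustration. Documents and revisions hold only a hash, so identical bytes are stored once, and a revision costs one extra reference rather than a copy.

```python
import base64
import hashlib


def content_hash(data: bytes) -> str:
    # SHA-256 is an assumption here; identical bytes always map to the same key.
    return base64.b64encode(hashlib.sha256(data).digest()).decode("ascii")


class AttachmentStore:
    """Hypothetical content-addressed blob store with reference counting."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}    # hash -> raw bytes, stored once
        self.refcounts: dict[str, int] = {}  # hash -> number of referencing docs/revisions

    def put(self, data: bytes) -> str:
        h = content_hash(data)
        self.blobs.setdefault(h, data)       # only the first copy is kept
        self.add_ref(h)
        return h

    def add_ref(self, h: str) -> None:
        self.refcounts[h] = self.refcounts.get(h, 0) + 1

    def release(self, h: str) -> None:
        self.refcounts[h] -= 1
        if self.refcounts[h] == 0:           # nothing points at it anymore
            del self.refcounts[h]
            del self.blobs[h]


store = AttachmentStore()
default_pic = b"default profile picture bytes"

# Three users attach the same picture: one stored copy, three references.
users = {f"users/{i}": {"profile.png": store.put(default_pic)} for i in (1, 2, 3)}

# A revision of users/1 copies the name -> hash map, not the bytes.
revision = dict(users["users/1"])
for h in revision.values():
    store.add_ref(h)

# users/2 uploads a new picture under the same name: only its reference moves.
store.release(users["users/2"]["profile.png"])
users["users/2"]["profile.png"] = store.put(b"sally's own picture")

print(len(store.blobs))                            # 2
print(store.refcounts[content_hash(default_pic)])  # 3
```

The last step mirrors the Bob and Sally question in the comments: re-uploading under the same name changes which hash `users/2` points at, while the other users and the revision keep referencing the original bytes.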

Another cool aspect of attachments being attached to documents is that they flow in the same manner over replication. So if you modified a document and added an attachment, that modification will be replicated at the same time (and in the same transaction) as that attachment.

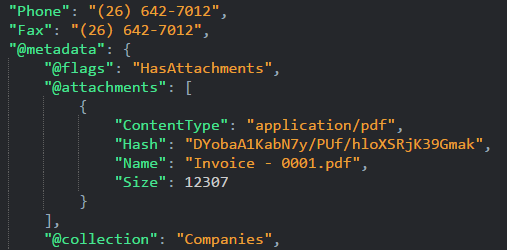

In terms of actually working with the attachments, we keep track of the references between documents and attachments internally, and expose them via the document metadata.

This is done so you don’t need to make any additional server calls to get the attachments of a specific document. You just need to load it, and you have it all there.

Here is what this looks like. Note that you can get all the relevant information about each attachment directly from the document, without having to go elsewhere. This is also how you can compare attachment changes across revisions, and it allows you to write conflict resolution scripts that operate on documents and attachments seamlessly.
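Because the attachment list travels with the document, comparing two revisions needs nothing beyond the loaded JSON. The sketch below assumes the metadata exposes an `@attachments` array whose entries carry `Name`, `Hash`, `ContentType` and `Size`; treat those field names, the helper functions, and the placeholder hash values as assumptions for illustration rather than a documented contract.

```python
def attachments_of(doc: dict) -> dict[str, str]:
    """Map attachment name -> content hash, read straight from the loaded document."""
    entries = doc.get("@metadata", {}).get("@attachments", [])
    return {entry["Name"]: entry["Hash"] for entry in entries}


def diff_attachments(old: dict, new: dict) -> dict[str, list[str]]:
    """Compare two revisions of a document without fetching any attachment bytes."""
    before, after = attachments_of(old), attachments_of(new)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "changed": sorted(n for n in before.keys() & after.keys() if before[n] != after[n]),
    }


# Two revisions of the same document; hash values are placeholders.
rev1 = {
    "Name": "Sally",
    "@metadata": {
        "@collection": "Users",
        "@attachments": [
            {"Name": "profile.png", "Hash": "hash-A", "ContentType": "image/png", "Size": 1024},
        ],
    },
}
rev2 = {
    "Name": "Sally",
    "@metadata": {
        "@collection": "Users",
        "@attachments": [
            {"Name": "profile.png", "Hash": "hash-B", "ContentType": "image/png", "Size": 2048},
            {"Name": "cv.pdf", "Hash": "hash-C", "ContentType": "application/pdf", "Size": 4096},
        ],
    },
}

print(diff_attachments(rev1, rev2))
# {'added': ['cv.pdf'], 'removed': [], 'changed': ['profile.png']}
```

Comparing hashes rather than bytes is what keeps this cheap: a changed attachment shows up as a different hash under the same name.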

Putting the attachment information inside the document metadata was a deliberate design decision: in the vast majority of cases, the number of attachments per document is pretty small, and even in larger cases (dozens or hundreds of attachments) it works very well. If a single document has many thousands or tens of thousands of attachments, that will likely put a very high load on the metadata, and you should consider splitting the attachments across multiple documents (sub-documents built with some domain knowledge will work).

Let us consider a big customer to whom we keep issuing invoices. A good problem to have is that eventually we’ll issue enough invoices to that customer that we start suffering from a very big metadata load just because of the attachment tracking. We can handle that by using documents such as customer/1234/invoices/2017-04 and customer/1234/invoices/2017-05 to hang the attachments on.
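The bucketing scheme from the invoice example can be expressed as a tiny id-generation rule. This is a hypothetical sketch: `invoices_doc_id` and `group_by_bucket` are made-up helpers, and monthly granularity is simply the one implied by the ids above; pick whatever split keeps each document's attachment count bounded in your domain.

```python
from datetime import date


def invoices_doc_id(customer_id: str, issued: date) -> str:
    # One document per customer per month, e.g. "customer/1234/invoices/2017-04".
    return f"{customer_id}/invoices/{issued:%Y-%m}"


def group_by_bucket(customer_id: str, invoices: list[tuple[str, date]]) -> dict[str, list[str]]:
    # Decide which document each invoice attachment should hang on.
    buckets: dict[str, list[str]] = {}
    for attachment_name, issued in invoices:
        buckets.setdefault(invoices_doc_id(customer_id, issued), []).append(attachment_name)
    return buckets


invoices = [
    ("INV-1001.pdf", date(2017, 4, 3)),
    ("INV-1002.pdf", date(2017, 4, 28)),
    ("INV-1003.pdf", date(2017, 5, 2)),
]
for doc_id, names in group_by_bucket("customer/1234", invoices).items():
    print(doc_id, names)
# customer/1234/invoices/2017-04 ['INV-1001.pdf', 'INV-1002.pdf']
# customer/1234/invoices/2017-05 ['INV-1003.pdf']
```

Since the id is derived from the invoice date, no lookup is needed to find the right document, and each month's document only ever accumulates that month's attachments.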

This was done intentionally, because it mimics the way you’d split a file with unbounded growth (keeping all the invoicing data for a big customer in a single document is also not a good idea, and has the same solution).

More posts in "RavenDB 4.0" series:

- (30 Oct 2017) automatic conflict resolution

- (05 Oct 2017) The design of the security error flow

- (03 Oct 2017) The indexing threads

- (02 Oct 2017) Indexing related data

- (29 Sep 2017) Map/reduce

- (22 Sep 2017) Field compression

Comments

Ok, sounds great... a couple of questions:

Let's say I have a User called Bob and I attach a profile picture to Bob. I then use the new 'clone' feature to clone Bob and create a user called Sally. They both have Bob's picture because they point at the same attachment, right? Sally now looks like Bob, but she is not happy with that and uploads a new picture of herself.

Does that also overwrite Bob's picture so he now looks like Sally?

Another question - If I happen to attach the same binary to two completely different documents (without a clone or revision being involved), is this somehow de-duplicated?

Ian, Bob & Sally have the same content, so they point to the same thing. If Sally changes her image (retaining the same name), it points to different data, which doesn't impact Bob.

If you attach the same binary twice independently, we'll still only store a single copy.

Fantastic - good work.

My first review of Raven 4.0 has been very encouraging. We have imported and exported a DB with large documents / indexes in minutes. This would normally take hours if you include the index rebuilding. Really looking forward to the beta and RTM.

Ian Cross, that is awesome to hear. I would really like to hear any feedback you have on it. Now is the time :-)

I noticed the content hash in your example is 32 base64 characters, or 192 bits. That seems plenty for practical collision resistance (2^96), even though cryptographers like to see 2^128 as a minimum security bar in new applications. Is the hash function SHA-256 truncated to 192 bits, in order to take advantage of the SHA2-256 acceleration instructions on modern x64 machines? If not, what was the rationale behind using a different hash function?

Ryan, we are currently using a Metro128 hash as well as XXHash64. This should ensure no duplicates. Blake2b is on the table, to see if it would be viable in terms of performance. That's not decided yet, and in any case it would be an implementation detail.

Oren, I would definitely use a cryptographic hash for duplicate detection. This is what all popular deduplication systems do, even Windows NTFS deduplication, and for good reason.

Neither of the functions you describe is collision resistant to malicious input. Meaning an attacker can craft an input attachment to cause collisions in your deduplication process. At best this means logical corruption and data loss. At worst it means remote code execution to the retriever of the malicious document that collided with a "good" document. Note also this is not a theoretical attack: the SHA-1 collision test documents from Google broke Subversion repositories when committed because of a collision in the SVN deduplication process. Finding a collision in your ad-hoc concatenation of two insecure hash functions is likely trivial compared to finding a SHA-1 collision.

Ryan, Yes, those are all good points. We mostly did it because we had an implementation for that lying around and it was easily done. I'll bump up the priority on replacing it.
