Low level Voron optimizations: Transaction lock handoff

There are some features that, on completion, just make my day/week/month. This is one of them. I’ve only just started recovering from the marathon of building this feature (this is written on Friday, the feature was completed on Sunday, around 11 AM, after about 12 hours or so of work).

Why am I so excited, and why did it merit such efforts? Transaction lock handoff is a more accurate name for our version of early lock release, which is a feature that I have been wanting for over four years.

Let me try to explain why this is important. Voron is a single writer storage engine, which means that there can only be a single write transaction at any given point in time. A lot of that is mitigated by transaction merging, which means that we can do a lot of the preparation work ahead of time, and only send the processed work to be done as part of the transaction. But it does mean that there is only a single write transaction, and under load, it means that we have the following pattern:

The wavy line is # of writes / sec, and you can see that it is going up & down like crazy. The reason for that is that whenever we actually need to commit a transaction, we can’t continue processing requests. They have to wait for the next transaction to start. And that means that the old one has to complete, which requires us to finish writing all the way to the disk.

So basically, the drops in performance happen whenever we have to wait for I/O. But we have to wait for the transaction to complete before we can start the next one, so we are effectively bottlenecked.
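To make the bottleneck concrete, here is a minimal Python sketch of a single-writer loop that merges queued operations and then blocks on the commit. It is an illustration only, not Voron's code: `SerialMerger`, `enqueue`, `run_one_transaction`, and the `flush` callable are all hypothetical names.

```python
import queue


class SerialMerger:
    """Hypothetical single-writer loop: merge queued work, then block on the commit."""

    def __init__(self, flush):
        self.pending = queue.Queue()  # operations prepared by request threads
        self.state = {}               # in-memory state of the store
        self._flush = flush           # assumed callable that blocks until a batch is durable

    def enqueue(self, key, value):
        self.pending.put((key, value))

    def run_one_transaction(self, max_ops=1024):
        """Merge up to max_ops queued operations into one write transaction."""
        changes = []
        while len(changes) < max_ops:
            try:
                key, value = self.pending.get_nowait()
            except queue.Empty:
                break
            self.state[key] = value
            changes.append((key, value))
        if changes:
            # No other request is processed until this call returns,
            # which is where the dips in writes / sec come from.
            self._flush(changes)
        return len(changes)
```

Every call to `run_one_transaction` pays the full I/O wait before the next batch can even start, however much work has queued up in the meantime.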

Early lock release is a technique which alleviates the problem. In effect, instead of waiting for the I/O to complete before starting the next transaction, we start it immediately, in parallel with the I/O work required to commit the previous transaction. The key part here is that we don’t report success on the first transaction until the commit has been successful, and that the 2nd transaction may fail because the first one did (this sounds bad, until you realize that failure to write to disk is pretty much always catastrophic for a database).
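A rough sketch of the general early lock release idea, under stated assumptions: the class, the `flush` callable, and the failure flag are hypothetical, and this is the textbook technique rather than anything taken from Voron. The writer lock is released before the I/O finishes, the caller gets a future to wait on before reporting success, and a failed write poisons every commit that follows it.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class EarlyReleaseStore:
    """Hypothetical store that frees the write lock before the commit I/O ends."""

    def __init__(self, flush):
        self._write_lock = threading.Lock()
        # A single worker keeps journal writes in commit order.
        self._io = ThreadPoolExecutor(max_workers=1)
        self._flush = flush    # assumed callable that makes one batch durable
        self._failed = False   # only touched on the I/O worker thread
        self.state = {}

    def _write(self, changes):
        if self._failed:
            raise OSError("an earlier commit failed, so this one fails too")
        try:
            self._flush(changes)
        except Exception:
            self._failed = True
            raise

    def commit(self, changes):
        with self._write_lock:
            self.state.update(changes)  # visible to the next writer right away
            durable = self._io.submit(self._write, dict(changes))
        # The lock is free again here, while the write may still be in flight.
        return durable
```

A caller would report success to the client only after `durable.result()` returns without raising.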

If you look at the previous post (from Jan 2014!) about this, you’ll see that we actually implemented that at the time, and rolled it back because it wasn’t doing much for us. I’ll have another post to explain what we are doing differently now that allows us to take full advantage of this.

The idea with early lock release is that the transaction will free its lock as soon as it is done, and allow additional transactions to hold that lock while waiting for I/O. This isn’t actually what we have done.

The idea of transaction merging is deeply rooted in the design of RavenDB 4.0, and it isn’t something that we can (or want to) change. About 98% of all write work in RavenDB will always go through the transaction merger. That means that just releasing the lock isn’t really going to do much for us. The transaction merger thread will be busy waiting for the I/O to complete and will then start a new transaction (re-acquiring the lock), so there isn’t actually any benefit here.

Instead, we implemented a different system. When a transaction (let’s call it tx #1) is over, it checks whether there is additional work pending, and if there is, tx #1 generates a new transaction (tx #2). The second transaction has the same in-memory state as tx #1, including all the modifications that tx #1 has made. More crucially, tx #1 also hands off all of the locks that it holds to tx #2, and then triggers the async process of writing tx #1 data to the journal.

In the meantime, tx #2 gets to run and operate (and doesn’t have to compete for any locks). Tx #2 will process work until tx #1 has completed its I/O work. At that point, tx #2 will call back into tx #1, letting it complete its commit process, and then the cycle repeats: if there is even more work pending, tx #2 will generate tx #3, transfer the lock to it and initiate an async process of writing to the journal. Tx #3 will run until tx #2 is done with its I/O, and so forth.
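The handoff cycle can be sketched roughly as follows. This is a simplified Python model under my own assumptions, not the actual implementation: `merge_with_handoff` and `write_journal` are hypothetical names, the lock is modeled as being taken once and inherited by each successor, and the loop stops when the queue is empty instead of waiting for more requests.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def merge_with_handoff(pending, write_journal, max_ops=1024):
    """Drain `pending` (a queue.Queue of (key, value) pairs) using lock handoff.

    `write_journal` is an assumed callable that durably writes one
    transaction's changes. Returns (final_state, batch_sizes).
    """
    write_lock = threading.Lock()
    journal_io = ThreadPoolExecutor(max_workers=1)  # one worker keeps writes ordered
    state = {}        # in-memory state, inherited by each successor transaction
    in_flight = None  # Future for the previous transaction's journal write
    batch_sizes = []

    write_lock.acquire()  # taken once; successors inherit it, nobody re-acquires
    try:
        while True:
            changes = []  # modifications made by the current transaction only
            while len(changes) < max_ops:
                try:
                    key, value = pending.get_nowait()
                except queue.Empty:
                    break
                state[key] = value
                changes.append((key, value))
                if in_flight is not None and in_flight.done():
                    break  # the previous write finished, so stop and hand off
            if in_flight is not None:
                in_flight.result()  # complete the previous commit; re-raises I/O errors
                in_flight = None
            if not changes:
                break  # no pending work, so there is nothing to hand off to
            # Start this transaction's journal write and let its successor run.
            in_flight = journal_io.submit(write_journal, changes)
            batch_sizes.append(len(changes))
    finally:
        write_lock.release()
        journal_io.shutdown(wait=True)
    return state, batch_sizes
```

At most two transactions are alive at any time in this model: the one whose journal write is in flight, and the one absorbing new work.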

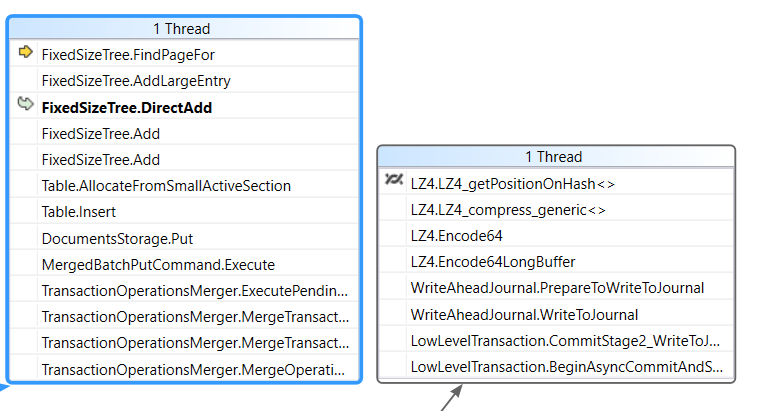

Here is what this looks like:

The thread on the left is the transaction merger, processing incoming write requests. The thread on the right is the one doing the async write process. It is interesting to note that while we call it an async write process, the actual time we spend writing to disk is relatively low; we spend most of our time actually preparing to write. That involves running diffs against the old version, compressing the data, etc.

The end result is that we get several very important properties:

- We split the transaction processing work and the writes.

- We get automatic adjustment of the system based on actual load (if the disk is slow, we’ll try to do more work and have larger merged transactions, for example).

- The transaction merger doesn’t have to compete for the transaction lock.

- We have managed to increase parallelism in a previously highly serial process.
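The automatic adjustment in the second point can be illustrated with a toy simulation. The model is my own simplification, not a measurement: each transaction absorbs every request that arrived while the previous journal write was in flight, and every write takes a fixed `write_ms`. The function name and parameters are hypothetical.

```python
def simulate_handoff(arrival_times, write_ms):
    """Return merged-transaction sizes for sorted request arrival times (in ms)."""
    sizes = []
    i = 0
    now = 0  # time at which the previous journal write finishes
    n = len(arrival_times)
    while i < n:
        if arrival_times[i] > now:
            now = arrival_times[i]  # merger is idle until the next request arrives
        j = i
        while j < n and arrival_times[j] <= now:
            j += 1  # everything that arrived by `now` goes into this transaction
        sizes.append(j - i)
        i = j
        now += write_ms  # this transaction's write overlaps with the next one's work
    return sizes


if __name__ == "__main__":
    arrivals = list(range(20))            # one request per millisecond
    print(simulate_handoff(arrivals, 2))  # faster disk, smaller merged transactions
    print(simulate_handoff(arrivals, 5))  # slower disk, larger merged transactions
```

With one request per millisecond, a 2 ms write settles into batches of 2 and a 5 ms write into batches of 5, with nothing tuning the batch size explicitly.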

The details of the change are gnarly, because we had to make sure that pieces of the code that assumed that we are running in a serial fashion can run concurrently, but the performance boost is over 45% under heavy load, and the behavior will auto adjust to handle the specific circumstances at hand, trying to keep all pieces of the system running at full throttle.

More posts in "Low level Voron optimizations" series:

- (02 Mar 2017) Primitives & abstraction levels

- (28 Feb 2017) Transaction lock handoff

- (20 Feb 2017) Recyclers do it over and over again.

- (14 Feb 2017) High data locality

- (08 Feb 2017) The page size bump

Comments

Even if I don't use Raven, I really admire the journey from its beginning to where it is now.

But I don't understand one thing: if, at the point of commit, TX #1 clones itself into TX #2 and starts writing to the journal, won't TX #2 write the same data again when it completes? I mean the data inherited from TX #1. Or does it know to start writing modifications from after the forking point? Or is this why the callback from TX #2 to TX #1 happens, to establish that TX #1 has committed and the inherited changes in TX #2 don't need to be written?

Rafal, when TX #2 starts, the write to the journal is happening in an async manner. When TX #2 is over, it waits for the previous journal write to complete, then starts its own journal write, after the TX #1 write.

There isn't a callback, it is just that TX #2 will continue working as long as TX #1 is in flight, then call the end method on TX #1, and then start its own I/O, potentially handing off to TX #3.

This transaction hand-off reminds me of smart batching so frequently used in high-performance libraries (Disruptor, Aeron). Do I understand it correctly that you'll have effectively max two ongoing "transactions"? The first that is being written and the second that tx merger appends to till the IO for the first is done?

Schooletz, Yes, that is correct.
