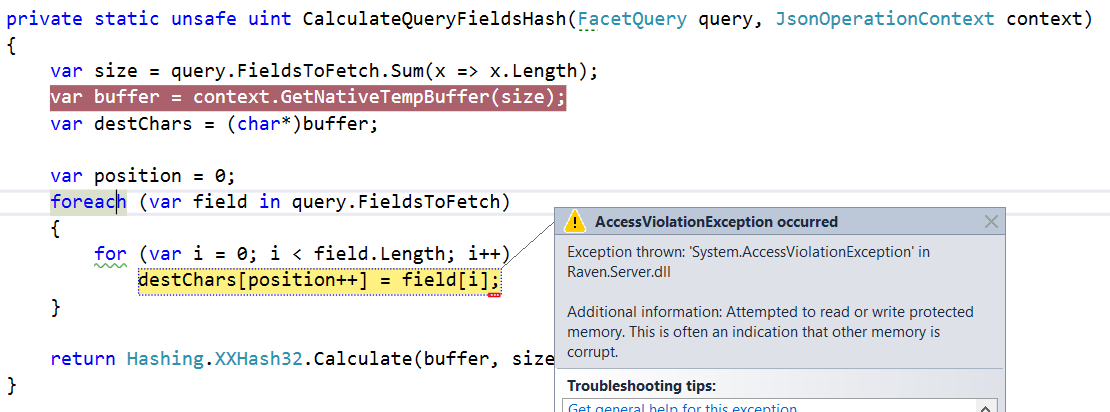

Electric fenced memory results

A few days ago I posted about electric fence memory and its usages. Here is one problem that it found for us.

Do you see the bug? And can you imagine how hard it would be for us to figure this out if we didn’t hotwire the memory?

Comments

Quick guess. The allocation is in bytes, but the indexing is in char.

Encoding issue?

The exception indicates that you somehow overflow the destChars, hence the size is probably wrong. So the only issue I can think of is that there might be a problem with the LINQ Sum: if it's lazy, it might give the wrong calculation, but I'm not sure.

GetNativeTempBuffer allocates less than Size bytes?

Assuming that `query.FieldsToFetch` is a series of `IList<char>`, then: you are assembling the total length of all characters to put them into a single buffer area to hash. The problem is that `char` is two bytes wide. You are counting the number of `char`s, but `context.GetNativeTempBuffer()` is expecting the number of `byte`s, which is twice the number of `char`s. This means you overrun your buffer exactly halfway through loading the string into the buffer.

This would definitely take a while to identify without your electric fence. Nice catch!
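The arithmetic in the comment above can be sketched in a few lines. This is Python rather than C#, used only to show the numbers. The function names and the sample field names are hypothetical, and the only fact carried over from the thread is that a `char` occupies two bytes (one UTF-16 code unit).

```python
UTF16_BYTES_PER_CHAR = 2  # a C# char is one UTF-16 code unit, i.e. two bytes


def char_count(fields):
    """Total number of UTF-16 code units across all fields."""
    return sum(len(f.encode("utf-16-le")) // UTF16_BYTES_PER_CHAR for f in fields)


def required_bytes(fields):
    """Bytes actually needed to copy every field into one buffer."""
    return char_count(fields) * UTF16_BYTES_PER_CHAR


def first_out_of_bounds_char(fields):
    """Index of the first char that no longer fits when only
    char_count(fields) bytes were requested (the mistake described above)."""
    requested_bytes = char_count(fields)  # bug: a char count used as a byte count
    return requested_bytes // UTF16_BYTES_PER_CHAR


# Hypothetical field names, for illustration only.
fields = ["Name", "Address.City"]
print(char_count(fields))                # 16 chars to copy
print(required_bytes(fields))            # 32 bytes needed
print(first_out_of_bounds_char(fields))  # 8: the write goes out of bounds halfway through
```

Requesting `required_bytes(fields)` instead of the raw character count is the kind of fix this comment points to.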

Stuart, Yes, that is indeed the issue. The actual problem is even worse, leaving aside the fact that the code reads correctly. The native buffer works in powers of 2, so most of the time this would be fine, and then we'll have silent heap corruption...
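A toy model of why such a bug can hide for a long time is sketched below, again in Python purely for the arithmetic. It assumes the allocator rounds each request up to a power of two and never hands out less than some minimum size. The thread only says the buffer "works in power of 2", so the minimum capacity and the exact rounding policy are assumptions for illustration, not RavenDB's actual behavior.

```python
def next_power_of_two(n):
    """Smallest power of two that is >= n (1 for n <= 1)."""
    return 1 if n <= 1 else 1 << (n - 1).bit_length()


def classify_write(requested, written, min_capacity=0, electric_fence=False):
    """Describe what happens when `written` bytes go into a buffer
    that was requested with `requested` bytes.

    min_capacity is a hypothetical lower bound on the real allocation size.
    """
    if written <= requested:
        return "ok"
    if electric_fence:
        # A fenced allocation is exact, so any overrun faults immediately.
        return "access violation"
    capacity = max(min_capacity, next_power_of_two(requested))
    if written <= capacity:
        return "fits in slack, bug goes unnoticed"
    return "silent heap corruption"


# Small request: the overrun lands in unused slack and nothing visibly breaks.
print(classify_write(16, 32, min_capacity=4096))
# Larger request: the same bug now writes past the real allocation.
print(classify_write(3000, 6000, min_capacity=4096))
# With the electric fence, the small case fails loudly as well.
print(classify_write(16, 32, min_capacity=4096, electric_fence=True))
```

Under these assumptions the same off-by-a-factor-of-two request is harmless for small inputs and corrupts the heap for large ones, which is why failing on every overrun is so useful while debugging.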

This ought to be a trivial matter for a static code analyzer to find. Aren't there any good ones available for unsafe C# code?

Dennis, I haven't seen any for C#, no.

Nice catch. I would love to see you go more into the BlittableJson stuff. How do you deal with updates to a large document, and will there be a BlittableJsonWriter?

Alois, There is :-)

https://github.com/ravendb/ravendb/blob/v4.0/src/Raven.NewClient/Json/BlittableJsonWriter.cs

Can you explain about large documents?

I have played around with the Blittable classes a bit. The managed heap is basically empty if I deserialize a 200MB json file. That is great. Even when I access the strings as LazyStrings it is still quite fast. But what is the story when you have deserialized the json into a BlittableJsonReaderObject and you want to keep it? You cannot add or change items in it since it is read only. Is this class meant as an intermediary only, to later materialize a normal CLR object, or is it possible to mutate the BlittableJsonReader..... objects? If you still need to copy things into normal objects, you very quickly lose the speed gained while reading. My current test looks like

which uses this definition

Alois,

Yes, blittable is meant to be immutable, which makes both the structure and working with it much simpler. You are not meant to really mutate a blittable; you would typically send it to the user (CLR class, network, etc.) and then get a whole new object back.

While we do have support of applying mutations, they require re-generating the blittable.

Note that stuff like searching inside the blittable like you do would typically be the other way around. Take the CLR string and turn that into a `LazyString`, then compare it directly to the blittable value.

Ahh ok. So this whole Blittable thing is only there to make queries over many small or some large documents cheap for the managed heap to prevent long GC pauses if the next Gen2 or Gen1 from the finalizer due to low memory kicks in? I was hoping to get something faster than Protocol Buffers or JSON.NET which also allows mutation of the read objects. Of course it is possible to come up with hybrid objects which store the modifications in normal objects but that is not something for the generic case. No matter how hard I try I seem to hit a wall at ca. 50-80 MB/s with managed code. The only thing left would be to deserialize at different locations from the stream in parallel. But that would impose severe limitations on the object design.

Alois, No, blittable is how we work with json in 4.0, server & client side. However, we generally don't mutate json objects directly; we either pass them around (from server to client, etc.) or we build them directly and send them over the network.

Alois, Let us go back a few steps. What is it that you are trying to do?

I currently have a large (up to 200 MB) Xml document serialized with DataContracts on disk which needs to be deserialized. I am searching for something significantly faster than plain DataContracts. If I redesign that stuff I want to use the fastest library out there. Blittable Json seems like a good idea if I can easily round trip the data with modifications. I care less about serialization but mostly about deserialization performance. So far it looks like JSON.NET would be significantly faster without requiring many changes to the current object model. While reading Blittable JSON is very fast, I would lose the gained speed when I need to convert the Blittable JSON back into the original object model. Since Blittable JSON is read only I cannot toss the original object model out of the window, since the whole thing needs to be modifiable as well.

Do you need to read & write, or just read? Note that we provide APIs to both read & write, but it is meant for streaming, not ongoing mutation

Hard to tell since there are quite a few types serialized into that container. It will be mostly read only, but for some state machine states I am not so sure they will not change anymore after they have been deserialized back. I mainly edit other people's code where I am seldom 100% sure what exactly it does ;-).
