Stack arena memory allocation context

Controlling memory allocations is something that any performant service need to do. In RavenDB 4.0 we have taken that to heart, and most of our memory usage is manual, instead of relying on the GC.

Now, there are a lot of really good reasons to want to avoid manual memory management. We have decades of usage telling us that this is a hard problem, with some nasty issues associated with it. But when we are talking about performance different of orders of magnitude, I’ll accept that burden.

The first thing that we did when making this decision is to see if we can change the way we approach unmanaged memory management to make it easier to avoid all of the common issues. We made several decisions:

- All native allocations must go through a context.

- A context is a unit of work that is single threaded.

- A context is bounded in scope.

- A context is reusable.

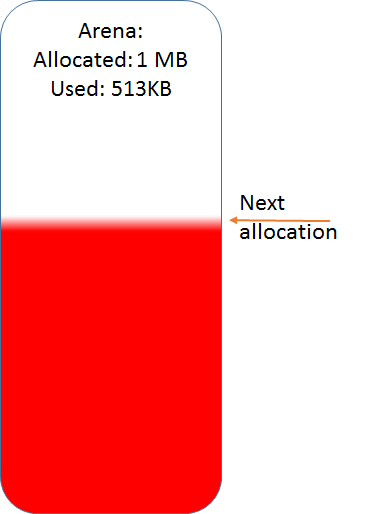

By placing those sort of limits on the native memory usage, we managed to gain a lot of simplicity. To start with, because the context is single threaded, we don’t have to implement an allocation strategy that is thread safe. Instead, we can just allocate a bunch of memory in the context, and parcel the memory from there. This looks like this:

The arena has allocated a certain size from the operation system. Allocating from that point is just a matter of bumping the next allocation pointer and handing that memory to the caller.

The nice thing about is that we don’t have the possibility for memory leaks, when the context scope ends, we can reset the next allocation pointer to the start, and we have “freed” all that memory. And we can start using it again, without having to release it to the OS and get it back again. There are a whole bunch of edge cases (what happens when the arena is completely full, etc, but they are pretty obvious).

Not having to deal with multiple threads also present an interesting optimization. Once we are done with the memory, we can return it to the arena, but instead of maintaining free lists, etc, we do the following. If the returned memory is at the top of the arena (the last thing allocated), then we just bump the next allocation down, and the next allocation can reuse that memory. If that isn’t the case, we’ll do nothing, and rely on the context scope to handle this.

That, in turn, means that if I’m careful to free in the reverse order of allocations, I can get, within a single scope, memory reuse very cheaply. That is important, as we’ll see in a bit.

The context is also scoped to a certain operation, such as processing a request or an index batch. Because of that, even if we need to grow the arena, that is limited to however many operations we have to do inside that scope. And once the scope is over, we aren’t disposing of the context and releasing the memory, instead, we pool them so the next action will be able to use them. So a single OS level allocation is used across many actions, and the memory free cost is just setting a pointer.

But some scope need to do a lot of work, consider an index that is processing a big batch, in order to reduce the amount of memory that is being used, that is where the ability to free the top memory allocation comes in handy, because even inside the same scope, we can get memory reuse.

All in all, the allocation strategy was chosen to play to our strengths, and allow us to avoid doing multi threaded work in the hot paths, it also means that we get quite a lot of memory reuse, both within a scoped context and in different scopes, which reduce the overall amount of memory that we need to allocate. And the really great thing, it doesn’t come with a big pile of time spent in GC.

Comments

This also seems to assume that a context / scope is expected to be (relatively) short lived, i.e. tied to a specific limited time job or task. What approach are you using for allocations not bound to such a short-term scope?

Deallocation in the reverse order of allocations tends to be a rather natural pattern for many tasks. For those tasks where this isn't a natural pattern, and I am not sure if that is (sufficiently) relevant for RavenDB, could you not stack / nest allocators, with different allocation strategies within a context. E.g. obtain a "buddy allocator" instance from a context that can support a different recycling scheme using the context's remaining available memory. When disposing this nested buddy allocator reset the "next allocation pointer", as in:

How do you know/track whether an allocation is at the top? You'd have to either know the length of the buffer being returned or track them somehow unless the arena allocates fixed size buffers.

@alex yes, that is what is called a generalized allocator. We have several different strategies dependent of the use, and we are in the process of generalizing the different allocators to be able to just declare what kind of allocation strategy we want to use. We currently have arena allocators, stack allocators, high throughput and small values allocation, and a few others. The problem is how to avoid the performance impact of among others virtual dispatching and branching in very hot code (as allocators typically are).

It's funny that the more performance constraints and targets are placed on a piece of business software the more it's starts to look like "games programming" than "enterprise software development". This is one of my favorite video about what it takes to squeeze all performance you can and the change of mindset required, from Mike Acton @CppCon 2014 : https://www.youtube.com/watch?v=rX0ItVEVjHc&index=8&list=PLZhGU5qcEqmCR2-YOITSQrlYRiyuwSPyY . Even if's a C++ talk, there's lots to learn.

Ups, didn't mean to share a list, here's the link: https://www.youtube.com/watch?v=rX0ItVEVjHc

Hm, the deallocation optimization can blow up if there is

somewhere. That breaks all deallocations downwards from that point on. That way a constant space workload can grow unboundedly under rare circumstances. If you are relying on deallocation in any way you are now obliged to deallocate everything. It's no longer optional.

The same applies if you break the deallocation reverse order in any way.

alex, That was the original though, then we run into indexing under load :-) In the scenario in question, we process roughly 32 million entries in a single context, and we can't wait until the end to recover.

Reverse de-allocation is pretty common, and was actually relatively easy to add in the couple of places where it didn't already happen.

We already use several types of allocators. We have the stack arena, a slab (all the same size) and another arena allocator that keep track of freed memory. But the heavy use is with the stack arena.

Philippecp, The allocation return the pointer + size from the allocation, and get them back on deallocation, so that is easy.

Pop Catalin, I wouldn't call RavenDB standard business software :-) But yeah, this is very different mindset

tobi, Yes, it can break, but there is the fallback for the context scope to cover us in that case

Comment preview