The tale of the intermittently failing test

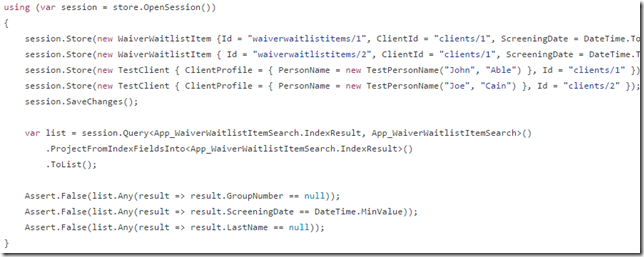

We recently started seeing a failing test in our RavenDB 4.0 test suite. This test was a relatively simple multi-map/reduce test. Here it is:

I checked the history, and this test has been part of our test suite (and never failed us) since 2012. So I was a bit concerned when it started failing. Of course, it would only fail sometimes, which is the worst kind of failures.

After taking a deep breath and diving directly into the map/reduce implementation and figuring out all the parts that were touched by this test, I was stumped. Then I actually sat down and read through the test and tried to figure out what it is doing. This particular test is one that was sent by a user, so there was business logic to penetrate too.

The strange thing is that this test can never pass, it is inherently flawed, on several levels. To start with, it isn’t waiting for non stale results, which was the obvious racy issue. But once we fixed that, the test always failed. The problem is probably a copy/paste error. There supposed to be two lines for clients/1 and two lines for clients/2. But there are three lines for clients/1 and only one for clients/2. So this test should always fail.

But, because we didn’t have WaitForNonStaleResults, it will always return no results (it didn’t have time to finish indexing from the SaveChanges to the index) and the test would pass with empty result set.

This has been the case since 2012(!), mind you.

I fixed the copy/paste issue and the WaitForNonStaleResults, and the test consistently pass now.

The most interesting observation that I have here is that RavenDB is now able to run a full map/reduce cycle in the time it takes the test to move from the SaveChanges line to the query itself. And that is a damn impressive way to find bugs in your tests.

Comments

Similar to doing some SQL operations with isolation level ReadCommitted - received results depend on timing relationship between transactions So this brings a question - if Voron is going to be fully ACID, are you guys going to make session behave in more ACIDic way, and, for example, guarantee query results will not depend on any races? R

Rafal, RavenDB queries are continue to be BASE. Voron isn't actually used to run those queries, Voron indexes are ACID, but we are using a background process for indexing. It is just that we are now much faster in 4.0

I think this highlights very well the value that testing should indeed go red, green, refactor. If you don't ensure a test actually fails when it should, bugs like this (in this case in the test) slip through.

Johannes, You can't really build a red test for performance of this kind. Consider this feature: "Changing the way we store data so we won't need to parse when loading from disk" How do you write a red test for that? Or "ensure that we have zero copy of the data from disk all the way to the network"

The original test wasn't about performance if I recall correctly, improved performance was only the cause that it eventually failed. If it were written using red geen refactor, the user that submitted the test would have failed at the first step and noticed the bug in the test code (i.e. Test always passes, no red).

Testing performance is hard, would absolutely love to see a post on how you approach performance testing, i.e. Is it all one offs or do you have a test suite you run on every major change? How do you deal with variance and significance?

Johannes, Users routinely submit tests that are passing to us. Usually because some sort of issue in the test that we fixed, then added to our suite to make sure that this scenario is covered.

Perf testing is done outside of regular testing. We have a perf team that works based on scenarios. We have a set of scripts that we run nightly, that give us a broad look on our performance across a large number of situations. For example, bulk insert, indexing, etc. That tell us where we are, and allow us to detect regressions early.

Comment preview