Peeking into Lucene indexing

Continuing my trip into the Lucene codebase, now I’m looking into the process indexing are happening. Interestingly enough, that is something that we never really had to look at before.

It is quite clear that Lucene is heavily meant for utilizing additional threads for improving overall indexing speed. You can see it in the number of per thread state that exist all over the indexing code. That is also meant to reduce memory consumption, as far as I can see.

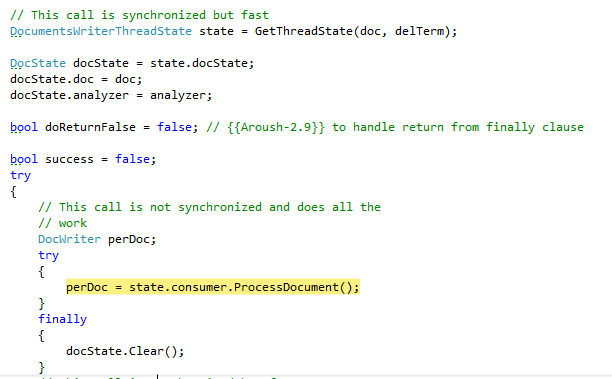

The real stuff happens in the ProcessDocument() where we have a chain of DocFieldConsumerPerThread and TermsHashConsumerPerThread which actually do the work.

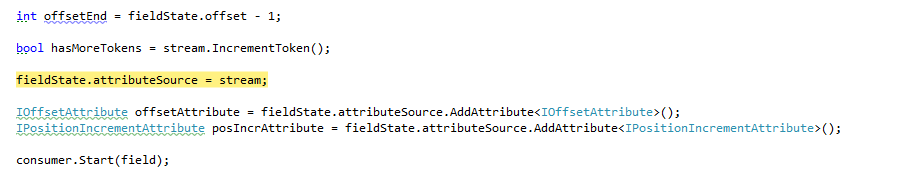

Then the real work is happening on a per field level in DocInverterPerField, where the analyzer is actually called. The process in which the analyzers return values for the text is interesting. There are “attributes” that are added to the stream, per token, and they are used to get the relevant values. I assume that this allows to have different levels of analyzers.

This way, you can have analyzers that don’t deal with offesets or positions, etc. And the actual processing of this is done in a chain that appears to be:

- Freq Prox

- Term Vectors

But that isn’t something that I really care about now. I want to see how Lucene writes the actual terms. This is being written in the TermsInfoWriter, which took some time to find.

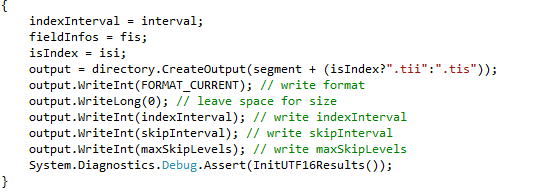

Terms are stored in a prefix compressed mode (sorted, obviously), and there is the actual terms, and an index into the terms, which allows for faster seeking into the file. This is actually done here:

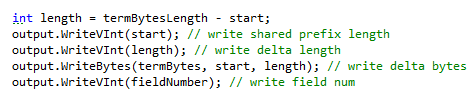

This is a single term written to the file. A lot of the stuff Lucene does (prefixes, VInt, etc) are done in the name of conserving space, and it reminds me greatly of LevelDB’s SST. In fact, the way terms are stored is pretty much an SST, except that this happens to be on multiple files. Pretty much the entire behavior and all the implications are the same.

It also means that searching on this is fast, because the data is sorted, but pretty complex. And it also explains a lot about the actual exposed API that it has. I think that I have a pretty good idea on how things work now. I want to now go back up and look at specific parts of how it works… but that is going to be the next post.

Comments

I see that many posts lately goes about performance, reads writes and databases, so would be interesting if you could review https://github.com/robconery/biggy one day, tell us what you think.

Giedrius, Biggy keeps things in memory, it is about as interesting as a hashtable for this purpose. For large data set (even just a few millions), it is going to be very slow for queries (no indexes), it has poor safety guarantees, etc. It is great for showing very fast reads on very small data sets, and to be fair, there are a lot of sites that have small data sets, but... that isn't really an interesting story from my point of view.

I get, that biggy is not a competitor to RavenDB in any sense, but it would be interesting how it looks for a person that has written own database engine - I mean such person should see possible traps from several quick looks, not something that is limited from the requirement for it to be lightweight, but something that is overlooked and may be fixed without loosing lightweight. Not sure what is status of TekPub TV after PluralSight was acquired, but I think it would be an interesting episode.

Giedrius, The data isn't indexed. The data isn't safe when you are using the file persistence. There are no transactions to speak off. Those are just from reading the initial page. There isn't really a point in going deeper.

Comment preview