Are you tripping on ACID? I think you forgot something…

After going over all the options for handling ACID, I am pretty convinced that the fsync approach isn’t a workable one for high speed transactional writes. It is just too expensive.
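To make the cost concrete, here is a minimal sketch of what a flush-per-commit journal write could look like in .NET. It assumes that "the fsync approach" means a normal buffered write followed by an explicit flush to disk. `FlushPerCommitJournal` and `CommitWithFlush` are hypothetical names used only for illustration, not code from the actual project.

```csharp
using System;
using System.IO;

public static class FlushPerCommitJournal
{
    // Hypothetical helper: appends one transaction's bytes to the journal and
    // forces them to disk before returning. Returns the journal length afterwards.
    public static long CommitWithFlush(string journalPath, byte[] transactionBytes)
    {
        using (var fs = new FileStream(journalPath, FileMode.Append, FileAccess.Write, FileShare.Read))
        {
            // The write itself only needs to reach the OS buffers...
            fs.Write(transactionBytes, 0, transactionBytes.Length);

            // ...but Flush(true) asks the OS to push all intermediate buffers to disk
            // (FlushFileBuffers on Windows), and that is the expensive part.
            fs.Flush(true);
            return fs.Length;
        }
    }
}
```

Every commit pays for a full flush of the file, which is why this gets expensive when there are many small transactions.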

Indeed, when looking at how both SQL Server and Esent handle this, they are using unbuffered, write-through writes. Those options are available to us as well: Windows has the FILE_FLAG_WRITE_THROUGH and FILE_FLAG_NO_BUFFERING flags (I’ll discuss Linux in another post).

Usually FILE_FLAG_NO_BUFFERING is problematic, because it requires you to write with specific memory alignment. However, we are already doing only paged writes, so that isn’t an issue. We can already satisfy exactly what FILE_FLAG_NO_BUFFERING requires.
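As a rough illustration of why paged writes fit, the sketch below checks that a write starts on a page boundary and covers whole pages. The 4096-byte page size is an assumption taken from the test code in this post; a real implementation would need to confirm it is a multiple of the volume's sector size. Unbuffered I/O also places alignment requirements on the buffer's memory address, which this check does not cover. `UnbufferedIo` and its members are hypothetical names.

```csharp
using System;

public static class UnbufferedIo
{
    // Assumed page size for illustration; it must be a multiple of the volume sector size.
    public const int PageSize = 4096;

    // True when both the file offset and the byte count are whole multiples of the page size.
    public static bool IsPageAligned(long fileOffset, int byteCount, int pageSize = PageSize)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException(nameof(pageSize));
        return fileOffset >= 0 && byteCount > 0
            && fileOffset % pageSize == 0
            && byteCount % pageSize == 0;
    }

    // Rounds a byte count up to the next page boundary.
    public static long RoundUpToPage(long byteCount, int pageSize = PageSize)
    {
        return (byteCount + pageSize - 1) / pageSize * pageSize;
    }
}
```

For example, `UnbufferedIo.IsPageAligned(4096 * 2, 4096)` holds for the page writes used in the test later in this post, while a 100-byte write at offset 10 would fail the check.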

That said, using FILE_FLAG_NO_BUFFERING comes with a cost. If you are using unbuffered I/O, you are not using the buffer cache. In fact, in order to test our code on cold start, we do an unbuffered I/O to reset it, and the results are pretty drastic.

However, the only place where we actually need to do all of this is in the journal file. And we only have a single active one at any given point in time. The problem is, of course, that we want to both read & write from the same file. So I decided to run some tests to see how the system will behave.

I wrote the following code:

    using System;
    using System.IO;
    using System.IO.MemoryMappedFiles;

    var file = @"C:\Users\Ayende\Documents\visual studio 11\Projects\ConsoleApplication3\ConsoleApplication3\bin\data\test.ts";

    // Pre-allocate the file
    using (var fs = new FileStream(file, FileMode.Create))
    {
        fs.SetLength(1024 * 1024 * 10); // 10 MB file
    }

    // A single 4 KB page of pseudo-random data
    var page = new byte[4096];
    new Random(123).NextBytes(page);

    // First handle: standard file I/O, used for the writes
    using (var fs = new FileStream(file, FileMode.Open, FileAccess.ReadWrite, FileShare.ReadWrite))
    {
        // Second handle: memory mapped view, used for the reads
        var memoryMappedFile =
            MemoryMappedFile.CreateFromFile(
                new FileStream(file, FileMode.Open, FileAccess.ReadWrite, FileShare.ReadWrite, 4096, FileOptions.None),
                "foo", 1024 * 1024 * 10, MemoryMappedFileAccess.ReadWrite, null,
                HandleInheritability.None, false);
        var memoryMappedViewAccessor = memoryMappedFile.CreateViewAccessor();

        // Write page 2, then read it back through the map
        fs.Position = 4096 * 2;
        fs.Write(page, 0, page.Length);

        memoryMappedViewAccessor.ReadByte(4096 * 2 + 8);

        // Write page 4, then read page 2 again and page 4 for the first time
        fs.Position = 4096 * 4;
        fs.Write(page, 0, page.Length);

        memoryMappedViewAccessor.ReadByte(4096 * 2 + 8);

        memoryMappedViewAccessor.ReadByte(4096 * 4 + 8);
    }

As you can see, what we are doing is actually writing to a file using standard File I/O, and reading via memory mapped file. I’m pre-allocating the data, and I am using two handles. Nothing strange happening here.

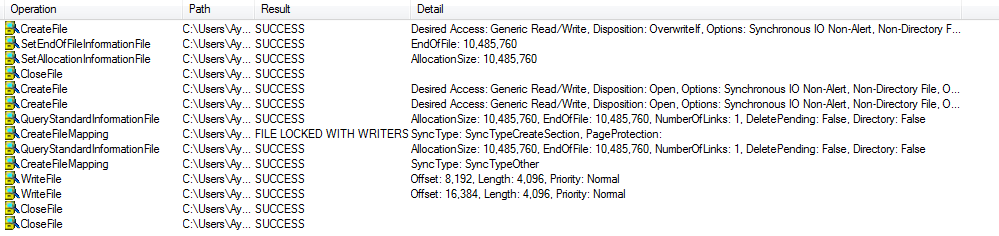

And here is the system behavior below. Note that we don’t have any ReadFile calls. The answer to the memory mapped reads were done directly from the file system buffers, no need to touch the disk.

Note that this is my baseline test. I want to start adding write through & no buffering and see how it works.

I changed the fs constructor to be:

    using (var fs = new FileStream(file, FileMode.Open, FileAccess.ReadWrite, FileShare.ReadWrite, 4096, FileOptions.WriteThrough))

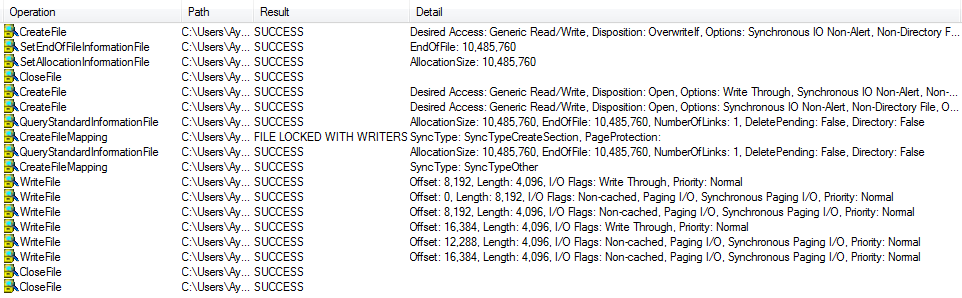

Which gave us the following:

I am not really sure about this behavior, but I am guessing that we are seeing several levels of calls (probably an unbuffered write followed by a memory map write?). Our request to write a single page ended up writing a bit more, but that is fine.

Next, we want to see what is going on with no buffering & write through, which means that I need to write the following:

    const FileOptions fileFlagNoBuffering = (FileOptions)0x20000000;
    using (var fs = new FileStream(file, FileMode.Open, FileAccess.ReadWrite, FileShare.ReadWrite, 4096, FileOptions.WriteThrough | fileFlagNoBuffering))

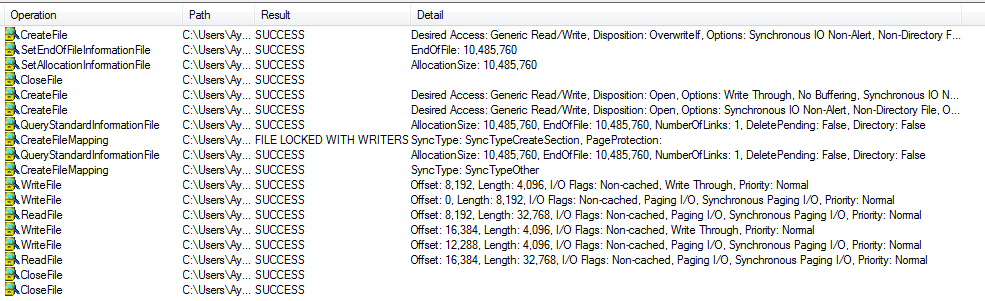

And we get the following behavior:

And now we can actually see the behavior that I was afraid of. After making the write to the file, we lose that part of the buffer, so we need to read it again from the file.

However, it is smart enough to know that the data haven’t changed, so subsequent reads (even if there have been writes to other parts of the file) can still use the buffered data.

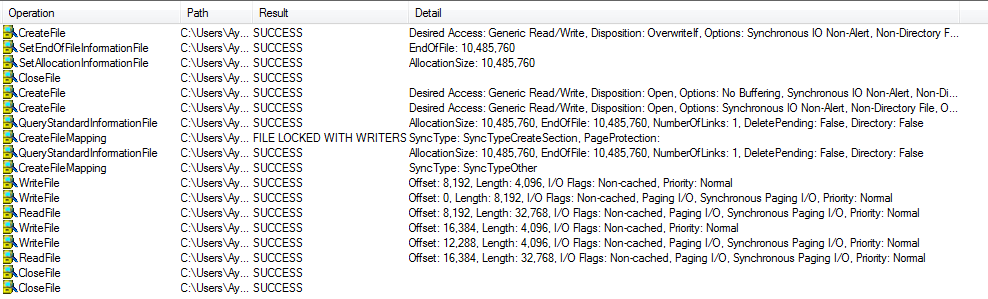

Finally, we have the final try, with just NoBuffering and no WriteThrough:

According to this blog post, NoBuffering without WriteThrough has a significant performance benefit. However, I don’t really see this, and both observation through Process Monitor and the documentation suggest that Esent and SQL Server are each using both flags.

In fact:

All versions of SQL Server open the log and data files using the Win32 CreateFile function. The dwFlagsAndAttributes member includes the FILE_FLAG_WRITE_THROUGH option when opened by SQL Server.

FILE_FLAG_WRITE_THROUGH

This option instructs the system to write through any intermediate cache and go directly to disk. The system can still cache write operations, but cannot lazily flush them.

The FILE_FLAG_WRITE_THROUGH option ensures that when a write operation returns successful completion the data is correctly stored in stable storage. This aligns with the Write Ahead Logging (WAL) protocol specification to ensure the data.

So I think that this is where we will go for now. There is still an issue here, regarding current transaction memory, but I’ll address it in my next post.

Comments

You can look at the stacks of IO events to find out who issued them. You can also turn on "Enable Advanced Output" to see what raw IOs the kernel issues to drivers.

Tobi, How do I see that?

For stacks you configure symbols and look at the properties of any event. "Enable Advanced Output" is just a checkbox that formats the output differently. It shows what the kernel asks the file system drivers to do (which is different from what the app asks the OS to do).

Tobi, Where is the "enabled advanced output" checkbox? In the Process Monitor?

Yeah, Process Monitor -> Filter -> Enable Advanced Output. The output is hard to interpret sometimes without knowledge of how the kernel works.

Ayende, unfortunately using FileStream you can't specify FILE_FLAG_NO_BUFFERING, which is what makes the difference. Process Monitor is the wrong tool to see metadata updates in the $Mft "file". Running xperfview.exe from the Windows Performance Toolkit can show you actual disk arm movements. Perfview (http://www.microsoft.com/en-us/download/details.aspx?id=28567, don't be confused by the name similarity) is another tool to see real IO operations. Again, the only difference is whether metadata (last write time, file length, etc.) is updated on every call or not. This is what makes your access pattern truly append-only or not. And don't forget to defragment your drive before testing.

Ayende, please disregard my previous comment - I missed your trick with FileOptions.

Ayende, removing FileOptions.WriteThrough makes a difference if the file is not preallocated (https://gist.github.com/DmitryNaumov/7637216). Sometimes MongoDb decides not to preallocate, which is another issue. And keep in mind that the number of bytes you're writing should be no less than the bufferSize specified in the FileStream ctor; otherwise, no matter what the flags are, FileStream.Write will copy the bytes to its internal buffer without actually writing them to disk.

Dmitry, We always preallocate the files. And we are always writing full pages.
