Deleting ain’t easy

We started getting test failures in Voron because the tests were unable to clean up the data directory, which was a major cause for concern. We thought that we had a handle leak, or something like that.

As it turned out, we could reproduce this issue with the following code:

```csharp
using System;
using System.IO;

class Program
{
    static void Main(string[] args)
    {
        var name = Guid.NewGuid().ToString();
        for (int i = 0; i < 1000; i++)
        {
            Console.WriteLine(i);
            Directory.CreateDirectory(name);

            // Fill the directory with a few small files.
            for (int j = 0; j < 10; j++)
            {
                File.WriteAllText(Path.Combine(name, "test-" + j), "test");
            }

            // Delete the directory and everything in it, then loop and recreate it.
            if (Directory.Exists(name))
                Directory.Delete(name, true);
        }
    }
}
```

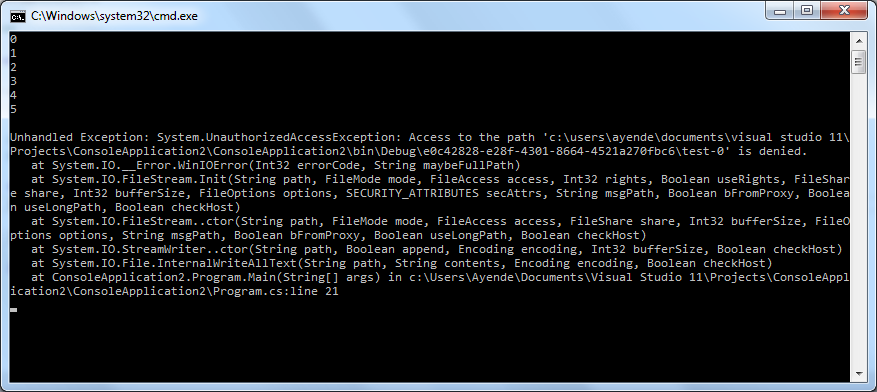

If you run this code, in 6 out of 10 runs, it would fail with something like this:

I am pretty sure that the underlying cause is some low-level “thang” somewhere on my system: FS indexing, AV, etc. But it means that directory delete is not atomic, and that I should just suck it up and write a robust delete routine that can handle failure and retry until it is successful.
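A minimal sketch of what such a retrying delete might look like. The class name `DirectoryCleanup`, the method `DeleteWithRetry`, and the defaults of 10 attempts with a 100 ms pause are all assumptions made for illustration; this is not the routine that ended up in Voron.

```csharp
using System;
using System.IO;
using System.Threading;

public static class DirectoryCleanup
{
    // Hypothetical helper: deletes a directory tree, retrying on the kind of
    // transient failure described above. The limits are arbitrary defaults.
    public static void DeleteWithRetry(string path, int maxAttempts = 10, int delayMs = 100)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                if (Directory.Exists(path))
                    Directory.Delete(path, recursive: true);
                return;
            }
            catch (DirectoryNotFoundException)
            {
                // Someone else finished the job; nothing left to delete.
                return;
            }
            catch (Exception e) when (
                (e is IOException || e is UnauthorizedAccessException) &&
                attempt < maxAttempts)
            {
                // Probably an indexer, AV scanner, or similar still holding on.
                // Wait a little and try again.
                Thread.Sleep(delayMs);
            }
            // On the last attempt the filter is false, so the original
            // exception propagates to the caller as a real error.
        }
    }
}
```

Bounding the number of attempts means a genuine problem (a real handle leak, say) still surfaces as an exception instead of hanging the test run forever.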

Comments

It reminds me of a problem in Ripple from FubuMVC. The very same error was found by that team.

I have hit this issue as well. Make the loop a Parallel.For and the issue is even easier to reproduce.

Forgive me, for I have sinned. I had the same problem, but just added a Thread.Sleep(100) after the delete.

I've made a SafeDelete() method before that attempts the delete and retries a few times with 100ms sleep in between until success.

"write a robust delete routine that can handle failure and retry until it is successful"

I wonder how many times you should try? I wonder if you should just queue the files to be deleted, and do the "Delete" runs in a separate process, maybe?

Why delete the whole data directory?

Could you create exclusive locks on your files so that no other process can come near?

That way you could do anything with your files. What am I missing here?

Petar, I am running a test, and I want to delete the files to leave clean ground for the next test.

Fire-and-forget a delete of the previous directory (or of all of them, matched by some naming convention) contained inside the system temp folder.

Then create new dir for new test.
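A rough sketch of that idea, assuming a made-up naming convention (the `voron-test-` prefix) and a hypothetical `TestDirectories.CreateFresh` helper. Note that it would also try to remove directories belonging to other test runs that are still in progress, so it only fits a setup where tests run one at a time.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class TestDirectories
{
    // Assumed naming convention for this sketch only.
    private const string Prefix = "voron-test-";

    // Creates a new, uniquely named directory for the current test and kicks
    // off a best-effort background cleanup of directories left by earlier tests.
    public static string CreateFresh()
    {
        string root = Path.GetTempPath();
        string fresh = Path.Combine(root, Prefix + Guid.NewGuid().ToString("N"));
        Directory.CreateDirectory(fresh);

        // Fire-and-forget: the result is deliberately never awaited.
        Task.Run(() =>
        {
            foreach (string old in Directory.GetDirectories(root, Prefix + "*"))
            {
                if (string.Equals(old, fresh, StringComparison.OrdinalIgnoreCase))
                    continue; // never touch the directory we just created

                try { Directory.Delete(old, true); }
                catch (IOException) { /* still busy; a later run will retry */ }
                catch (UnauthorizedAccessException) { /* same */ }
            }
        });

        return fresh;
    }
}
```

Because every test gets a new name, a failed delete no longer blocks the next test; leftovers are simply picked up by a later cleanup pass.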

Don't worry, everything is OK. The file system is eventually consistent ;) You just need to wait a little before doing anything funny.

Yes, unfortunately the "atomic" file operations are not always that atomic (same goes for move/rename). A retry/check loop is required where also the check may sometimes fail even though the operation succeeded (e.g. move file -> succeeds, check if moved file exists -> fails, check again -> succeeds). As @Rafal indicated: kinda sometimes eventually consistent and kinda sometimes not.

In addition, and specific to memmapping: Just because you have disposed the map and renamed/deleted the file, does not seem to automatically imply that your app won't read from the file anymore. If e.g. you have some pointers left in an array that is GC'd shortly after the map was closed and the file was deleted, you may observe that the OS starts reading from the file again. Also I think I have observed similar behavior when re-opening (with a new memmap over it) such a file shortly after it was closed.

@Alex actually file rename/move is atomic, and this is explicitly stated in the MSDN documentation. This can be used to perform other file operations in a kinda-atomic way. For example, rename the directory to some random/unique name first and then delete it without worrying about duplicate paths.

That is, I hope directory rename is atomic too...
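The rename-then-delete idea could be sketched like this. `RenameThenDelete.Delete` and the `deleted-` prefix are hypothetical names, and the sketch relies on the same hope that the directory rename behaves atomically; the `Directory.Move` call itself may still fail if another process has something open inside the directory.

```csharp
using System;
using System.IO;

public static class RenameThenDelete
{
    // Moves the directory to a unique sibling name, then deletes that.
    // Returns true if the renamed directory was fully removed, false if
    // it was left behind for a later cleanup.
    public static bool Delete(string path)
    {
        string full = Path.GetFullPath(path)
            .TrimEnd(Path.DirectorySeparatorChar, Path.AltDirectorySeparatorChar);
        string parent = Path.GetDirectoryName(full)
            ?? throw new ArgumentException("Cannot delete a root directory.", nameof(path));

        // Once the move succeeds, the original path is free to be recreated,
        // even if the delete below does not complete right away.
        string doomed = Path.Combine(parent, "deleted-" + Guid.NewGuid().ToString("N"));
        Directory.Move(full, doomed);

        try
        {
            Directory.Delete(doomed, true);
            return true;
        }
        catch (IOException) { return false; }
        catch (UnauthorizedAccessException) { return false; }
    }
}
```

A caller that gets `false` back can carry on with the original name and leave the `deleted-*` leftovers for a background sweep.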

I see this issue a lot when issuing a del -Force -Recurse to clear up a build directory before doing a clean rebuild. It seems to have replaced the earlier "directory is not empty" failures that happened when doing the same sort of operation in older versions of Windows.

Updated test with "Robust delete" haha

public static void RobustDelete() { var name = Guid.NewGuid().ToString();

@Steve Gilham We experience this issue a lot in our TFS (2010) builds, also when doing the cleanup before a new build.

Upgraded to TFS 2012 this weekend, but I don't think it will fix this issue.

@Peter

Using goto statements for retry operations is, IMO, a perfectly fine usage of goto; it is much cleaner than writing odd loops to do it. I do recommend adding a counter to prevent an infinite loop, so that it will bust out and return a real error at some point.

The classic Windows problem of two processes or threads accessing the same directory or file simultaneously => deleting may ALWAYS fail at any point.

If, for example, you are using version-control tools on Windows, you may encounter this whenever you try to delete something, because they continuously scan the disk in the background, etc...

You can use Sysinternals handle.exe to see everything that's being accessed on the disk...

Linux/Unix has a different disk-access model, so this will not be a problem there...
