The curse of resource utilization

I got a request from a customer to look at the resource utilization of RavenDB under load. In particular, the customer loaded the entire FreeDB data set into RavenDB, then set up SQL Replication and a set of pretty expensive indexes (map-reduce, full-text searches, etc.).

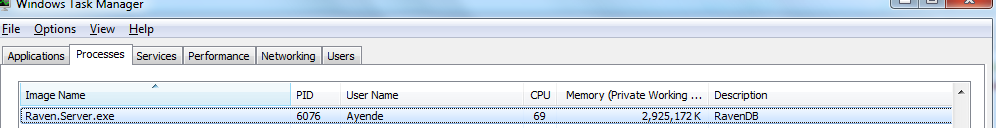

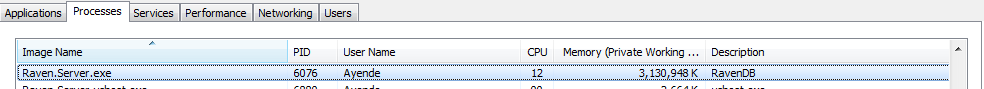

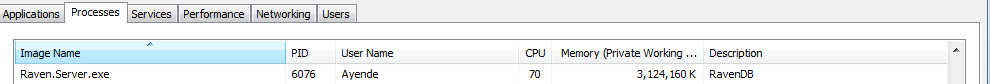

This was a great scenario to look at, and we did find interesting things that we can improve on. But during the testing, we had the following recording taken.

in fact, all of them were taken within a minute of each other:

The interesting thing about them is that they don’t indicate a problem. They indicate a server that is making full use of the resources available to it to handle its workload.

While I am writing this, there is about 1GB of free RAM that we’ve left for the rest of the system. But basically, if you have a big server and you give it a lot of work to do, it is pretty much a given that you’ll want to get your money’s worth from all of that hardware. The problem in the customer’s case was that while the server was very busy, it didn’t get things done properly, but that is a side note.

What I want to talk about is the assumption that if the server is using a lot of resources, that is bad. In fact, that isn’t true, assuming that it is using them properly. For example, if you have 32GB of RAM, there is little point in trying to conserve memory. And there is every reason in the world to prepare answers to upcoming queries ahead of time, so we might be using CPU even in idle moments. The key here is how you detect when you are over using the system resources.

If you are under memory pressure, it is best to let go of cached data. And if you are busy handling requests, it is probably better to reduce the load of background tasks. We’ve been doing this sort of auto-tuning in RavenDB for a while; a lot of the changes between 1.0 and 2.0 were made in that area.
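The two rules above (release cache under memory pressure, throttle background work while busy with requests) can be sketched as a small policy function. This is a minimal illustration, not RavenDB's actual auto-tuning code: the names `ServerState`, `TuningDecision` and `tune`, the thresholds, and the idea of expressing background load as a batch size are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ServerState:
    free_memory_mb: int   # physical RAM currently free on the machine
    active_requests: int  # requests being handled right now


@dataclass
class TuningDecision:
    release_cache: bool
    background_batch_size: int


# Hypothetical thresholds, chosen only for illustration.
LOW_MEMORY_MB = 1024
BUSY_REQUESTS = 50
MAX_BATCH = 1024
MIN_BATCH = 64


def tune(state: ServerState) -> TuningDecision:
    # Under memory pressure, let go of cached data.
    release_cache = state.free_memory_mb < LOW_MEMORY_MB

    # While busy with requests, shrink background work (e.g. indexing batches).
    # When idle, use the spare capacity to prepare answers ahead of time.
    if state.active_requests >= BUSY_REQUESTS:
        batch = MIN_BATCH
    else:
        # Scale linearly from MAX_BATCH (idle) down toward MIN_BATCH (busy).
        idle_fraction = 1 - state.active_requests / BUSY_REQUESTS
        batch = max(MIN_BATCH, int(MAX_BATCH * idle_fraction))

    return TuningDecision(release_cache, batch)
```

Linear scaling is only one possible choice; a step function or a feedback loop based on observed request latency would express the same idea.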

Comments

So when you gauge available resources for RavenDB, do you only take into account the resources on the server, or do you factor in the other applications / services running on that machine?

Khalid, Nope. What we do is use as many free resources as we can, with some buffer for the unexpected.

Ah OK, so it's like a family dinner. You grab what's left on the plate :) If my app snoozes, it loses. Makes sense. Thanks.

Khalid, Great analogy!

"The key here is how you detect when you are over using the system resources."

We are now left with the question of how one goes about doing that. I feel there's no easy answer, and it probably differs between applications. Will this be the subject of tomorrow's post?

Great,

Where is a good starting point for RavenDB?

Dan, The way we do it is to look at the available free RAM and make decisions based on that. We also look at time and I/O, but those are not as important. We'll try to take as much RAM as we need, until we hit the buffer we leave for other stuff. If we are taking too much, we scale back our work to free resources.
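The memory rule described in that reply (take as much RAM as needed, stop at a buffer reserved for the rest of the system, and scale back when over it) can be sketched as follows. This is an illustrative assumption, not RavenDB's code: the function name `memory_adjustment` is hypothetical, the 1GB default buffer simply borrows the figure mentioned in the post, and the time and I/O signals from the reply are left out.

```python
GB = 1024 ** 3


def memory_adjustment(free_bytes: int, wanted_bytes: int, reserved_bytes: int = GB) -> int:
    """Return how many bytes we may grow by (positive) or must release (negative).

    free_bytes:     physical RAM currently free on the machine.
    wanted_bytes:   how much more memory our work would like to use.
    reserved_bytes: buffer left for other processes and the unexpected.
    """
    headroom = free_bytes - reserved_bytes
    if headroom < 0:
        # We have eaten into the reserved buffer: scale back by the shortfall.
        return headroom
    # Take what we need, but never more than the headroom above the buffer.
    return min(wanted_bytes, headroom)
```

For example, with 2GB free and a 1GB buffer, a request for 5GB more is capped at 1GB; with only 512MB free, the caller is told to release 512MB.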

Ehsan, The RavenDB website, ravendb.net
