Voron’s Log

I was thinking, which is a bit dangerous, about Voron’s backup story, and things sort of started to roll down the hill from there.

Nitpicker note: None of the things that I am discussing here is actually novel in any way, I am well aware of that. I am articulating my thought process about a problem and how it went down from an initial small feature to something much bigger. Yes, I am aware of previous research in the area. I know that Xyz is also doing that. Thank you in advance.

Voron’s backup story is a thing of beauty, and I can say that because I didn’t write it. I took it all from LMDB. Basically, just copy the entire file in a safe and transactional manner. However, it cannot do incremental backups. So I started thinking about what would be needed to happen to get that, and I found that it is actually a pretty easy proposition.

Whenever we commit a transaction, we have a list of modified pages that we write back to the data file. An incremental backup strategy could be just:

Do a full backup – copy the full data file and put it somewhere. Keep track of all the modified pages in a log file. Incremental backup would just be copying the change log elsewhere are restarting the change log.

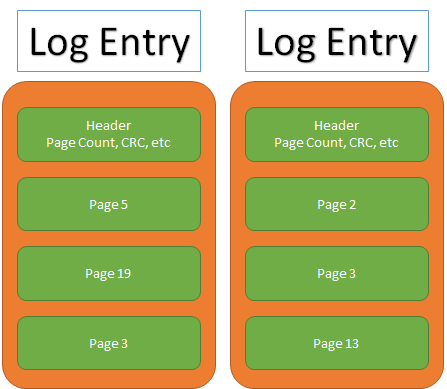

Restoring would happen by taking the full data file and applying the changes to it. This looks hard, but not when you consider that we always work in pages, so we can just have a change log format like this:

The process for applying the change log then becomes something like this:

foreach(LogEntry entry in GetLogEntryFiles()) { foreach(Page page in entry.Pages) { WritePageToDataFile(page.PageNumber, page.Base); } }

And voila, we have incremental backups.

Of course, this means that we will have to write the data twice, once for the incremental log file, and once for the actual data file. But that led me to another series of thoughts. Once of the areas where we can certainly do better is random writes. This is an area where we have a particular problem with. I already discussed this here. But if we already have a log file (which we need to support incremental backups), and if we already write twice… why not take advantage of that.

Instead of having a change log, we can have a write ahead log. That turn all of our writes into sequential writes. Leading to much faster performance overall.

The downside of write ahead logging is that you are going to need to do a few important things. To start with, there is a point where you need to spill the write ahead log into the actual file. That leads to doubling the costs of writes, in comparison to LMDB’s usual manner of only a single write. It also means that you now have to implement some form of recovery, since we need to read the log at startup.

The benefits however are pretty good, I think. There is a reason why most databases have some form of Write Ahead Log to make sure that their writes are sequential. And the nice thing about it is that we can now flush only very clearly defined section of the file, and only do fsyncs on the actual log file.

The idea now becomes:

In the data file header we add record to indicate what is the last flushed log file, and up to where in the log file we flushed. We pre-allocate a log file with 64MB, and start write to it using mem map.

We also write to the end of of the mapped range, and we can safely state what section of the file needs to flush & fsynced, and it is always at the (logical) end of the file. Once every X (where X is time or size or just idleness), we take all the records from the log file from the last position we flushed the log file to the current location, then update the data file header accordingly. The fun part about this is that this is absolutely safe in the case of failure. If we failed midway through for any reason, we can just re-apply the process from the beginning and there can be no corruption. There is also a good chance for being able to do additional optimizations here, because we can merge a lot of writes into a single operation and then make sure that we access the disk in a predictable and optimal fashion. All of this means that this can be an async process that is allowed to fail, which is very good from our perspective, because it means we can just kick it off with low priority to avoid having impacting regular write performance.

Until we get the data flushed to the data file, we maintain an in memory map of pages. In the image above, we will have a map for pages 2,3,13,19 and when they are requested, we will give out the address of the memory mapped log file entry at the appropriate location.

One thing that I want to avoid is stalls, like what happen to leveldb under heavy sustained writes. So when the log file is full, we just create a new log file and write to that. It is possible that under very heavy load you’ll have the “flush to data file” process take long enough that the new log file will also be full before the process is done. Unlike leveldb, we are going to just go ahead and create a new log file. So when the “flush to disk file” process resumes, it may need to process multiple log files. That is fine with us, and likely to be even better, because free space reuse will mean that we can probably skip writing pages that were overwritten by newer transactions (for example, above, we only need to write page 3 once).

There are a bunch of “details” that still need to be done:

- What happens if you have a single transaction that is bigger than the size of the log file?

- We can reject that transaction, 64MB per transaction is a lot.

- Probably it means that we need to have a log file dedicated to that transaction, but how do we detect / decide that? That doesn’t seems workable.

- Probably better would be to keep the 64MB size but say that this is a transaction start only, and that we need to process transaction end before we consider this committed. This is very similar to the way the leveldb log works.

- Need to think about way to reduce the impact of flushing the logs:

- Maybe provide a way for the user to “suppress” this for a period of 1 minute. We can use that during things like bulk insert, to get very high speed. And as long as the bulk insert goes, we will keep asking for an extension. (I don’t want to have on/off switches, because that means we have to be very careful about turning it on again).

- Maybe we can detect the rate of writes and adjust accordingly? But what happens when we are running multiple databases at the same time?

- Transaction logs should be named 0000000001.txlog, 0000000002.txlog, etc. Because I keep getting send them as “database log” during debug scenarios.

- Need to make sure that this works properly for large values. So values that takes more than a single page are supported.

- To make things complicated, what happen if we are the last 3 items in the log file, but we need to write a value that is 5 pages long? We need to mark the transaction as “tx started”, move to the next log file, and continue the transaction.

- For that matter, what if we have a single value that is more than the size of the log file? Is that something that we want to support? We can do the same as “tx started”, marker, but maybe also indicate that we a “tx large value start”, “tx large value mid”, “tx large value end”.

- For both “tx started” and “tx large value started” we need to be sure that we consider the transaction aborted unless we have the “tx end” part.

- On startup, we need to read the log file(s) from the last flushed log location and upward and build the map of the pages contained in the log file. This introduce a “recovery” step of a sort, but I think that this should be a pretty small one overall.

- We need to make sure to delete old log files (unless users want them to incremental backup purposes). And we need to make sure that the memory map for read transactions is kept alive as long there are any transactions that may be reading from it.

Overall, I think that this is a pretty radical departure in our internal process compare to how LMDB works, but I have high hopes for this. Even though it starts with “do we really need to support incremental backups?”

My expectation is that we will see much higher writes, and about the same numbers for reads. Especially for our scenarios.

Comments

Mmm. You're going down a direction that we specifically ran way from, in BDB. Sysadmins were forever having problems with the logfiles accumulating and taking up exorbitant amounts of space. And recovery tended to fail, which was even worse.

You will find that you need to add a lot of features to make this work well, and every one of those features will require options and configuration knobs. (E.g., configure path to logfiles, so you can place them on a separate spindle from the data files, so log and data writes can be concurrent. Size of logfile buffers, size of individual logfiles, frequency of flushes, all of these need to be tailored to different workloads.) Again, we explicitly ran away from this complexity after having dealt with it for over a decade.

For very large objects the common practice is to write them directly to the data file, and not to the log. If they are large, you're getting a sequential write out of it anyway.

The approach you're considering here would make WRITEMAP about equally complex as non-WRITEMAP, negating some of its write performance advantage. It will also slow down read performance since all reads will have to go through a page mapping lookup to see whether to read from the logfile page or from the datafile page. In LMDB only write transactions pay that mapping cost; your approach is shifting the balance away from read-optimized and simple to write-optimized and complex. It's a tradeoff everyone else has already made. Sounds like ultimately you want to be using one of those other DB engines. ;)

Howard, You are correct that this is explicitly not what you are doing. And you are also correct that this require more complexity and more configuration. However, there are good reasons why you want to do that. The ability to have logs & data files on separate spindles is an important one for increasing I/O rate.

Our needs is a bit different than what OpenLDAP needs, because for the most part, we are dealing with a lot of values that would overflow (typical values for us are 10s - 100s KB, and it is common to have a few MB as well. LMDB currently doesn't do a good job in reclaiming space under those conditions, and random writes, which is a common scenario that we need to deal with show a particularly poor perf.

Yes, we still consider ourselves to be mostly read database, but there are many cases in which we need to deal with a lot of data coming in. Daily import jobs are the most common scenarios, and that impacts our users heavily. And one of the most common micro benchmarks that people do is test the speed of putting the data into the db. Yes, that is usually a silly benchmark, and it doesn't mean much for real world operations. But it does impact the perception of the database, and that is quite important.

I am actually going to be moving away from WRITEMAP option, because it is too unpredictable for our purposes with regards to how the system behaves. We can't control the order in which things happen, and we need to do that. So we move to doing most of our IO using file i/o, instead of mem map ops on the data file.

Read perf in this case is going to have to deal with O(1) cost to seek a page, so I don't expect that to be a big hit, but we will pay it if needed. Currently we see read rates of 1M+ / sec without trying very hard, I can give up a lot of that and still be in a better condition than where we are now with Esent.

Interesting, I had not thought of it from the perspective of having incremental backups. The predictable constant throughput write performance had been my main concern (combined with an easy recovery story), but this is indeed a nice added benefit.

As mentioned in my comment on the "With malice ..." post,I am currently writing to journal and data file at roughly the same time (i.e. on transaction commit), fsyncing the log file but not the data file, which is done periodically in a background task.

If I understood correctly, you are doing a type of "lazy background writing" for the data file and using the pages in the journal chunks as part of the B+Tree until it has caught up. Any specific reason for not letting the OS lazy writer deal with this?

I guess there could be an advantage if it were to be combined with making freed pages reclaimable (i.e. moving them from the list of transaction id bound "recyclable page ranges" to the list of "free page ranges") at that time, since that too is essentially a task that can be done "lazily" and is much more efficient in "bulk".

Another somewhat random thought: If you were to include a second type of "transaction commit checkpoint marker" for the journal it would become relatively easy to go to 2-phase commit scenarios / paxos style replication for the storage engine. This should work pretty well in combination with the "lazy chasing writer" strategy.

The problem with writing to the data file as well is that you are never sure when the OS will actually write and in what pattern. That means that I can't trust it, therefor, I want to write in predictable manner using File I/O, and only when I flush the journal. We have a better solution for free space than the current one, I think. We'll see how it works soon

Alex, I am not seeing how the tx commit checkpoint is helpful, can you explain the usage.

The checkpoint was a not very well thought through idea that popped into my head related to the "journal position" (prepare / commit checkpoint positions in the journal). I will need to think about that some more to determine if it made any sense, sorry for that.

For freespace handling, a strategy I am currently pursuing relates to the idea that you don't really want to reuse any pages until a sufficient amount of these pages is available as a sequential region (e.g. 32 or 64). This means you do not have to track individual pages, but for instance blocks of 32 (requiring 5 bits / block). When a page is freed that falls in the block (which could be done by the lazy writer), increase the block's counter by 1. If a block or 2 adjacent blocks are full, they can be used to reclaim pages from, and only at that time is it needed to track either individual pages, or again use a 5 or 6 bit counter that indicates how many of them have already been reclaimed (since this is supposed to occur in sequential order). If combined with shadow paging of this freelist structure this seems to work pretty well.

Alex, We have tried that approach, but that means that you actually going to need a LOT of tracking around. See my previous post about finding runs of free space. What we are doing now is having a bit per page, and using bit sets to find free space.

Ayende, perhaps I did not explain the approach very well, or maybe I don't quire understand your reply.

The approach I outlined, only requires around 5 bits per 32 pages or 6 bits per 64 pages, depending on the size of blocks you track (well need to round that up a little bit, due to some overhead but that is essentially it).

So that is basically a factor 6-10 more compact than using 1 bit per page, because no individual pages require tracking until a whole block is eligible for reclaiming. There is no need to do any searching, you can "div and mod 5 or 6 bits" to reach a block and get its number of free pages. You increment the 5/6 bit count when a page in the block has become free, and if this makes the block "full" (i.e. count had reached its max of 31 or 63 when trying to increment the count), the count is reset to 0 and the block is appended/inserted to the root freelist page that holds the list of blocks being used to reclaim freed pages from.

In this way around 210,000 - 350,000 pages can be tracked in a single (4096 bytes) memory page (using 32 or 64 page blocks respectively).

A second memory page (the free list's "root page"), is used to track pages being reclaimed/recycled by incrementing a counter for sequentially reclaimed pages in a block. Depending on how you pack the information on this page, it is unlikely that it will ever need to grow larger than 1 memory page. Tracking is easy, you use the first block on this page within the context of a transaction for handing out free pages and remove it when all pages have been handed out (i.e. counter has reached max).

Alex, Let us imagine a database who is 64 pages in total. We currently start with the whole database busy. We free 5 pages (1, 5, 18, 56, 43).

Where do we record that information? Because what I think you are saying is that we'll only keep track on the number of free pages in a particular 32/64 block range. But using your method, until the block isn't fully available (all pages in it are free),we can't allocate anything from it, because we don't know what pages are free or not. It is entirely possible to have a set of operations that are going to generate a lot of free space, but no full 64 pages blocks that are all free. That means that we can't use any of that free space. That is fragmentation issue that would have to be resolved. And it make it harder to deal with allocating very large docs. For example, 64 pages gives us about 256K, but how do we allocate space for a document requiring 300K ? That isn't easy, and require special coding.

Sure, we are using a lot more space, but for a database that is 100GB in size (*26,214,400 pages) we are going to need 26,214,400 bits, which are 3,276,800 bytes which are under 4 MB of space.That is an entirely acceptable overhead, as far as I am concerned.

Also, can you stop using the nospam address? I usually send updates when I am posting a comments, and it is easier in general.

I've been looking into using bit vectors for our IDLs but so far it has increased the code complexity and slowed things down.

One thing to consider - one of the things that slows down LevelDB and BerkeleyDB is the need to delete/create/open/close the logfiles on a continual basis. You might do better simply cycling through the same files again over and over. (If you want, rename the old file to a new serial number, just to make things obvious.) Every filesystem op will cost you a lot of performance, every op you can shave will pay off big.

Howard, The major reason we want to use bit vectors here is that we routinely expect to have to use overflow. A lot of our values are > 2KB, in fact, it is commonly in the 10s - 1000s of KB that we usually deal with. The LMDB method doesn't do a good job in allowing to concat adjacent free spaces that were freed by different transactions, that led us to need an alternative that would allow us better utilization fo that. We can't rename the files (we run on Windows, and that isn't going to work there), but we can pre-allocate those files ahead of time, or re-use the files, yes.

Ayende, agreed, using block sizes of 32 or 64 pages may be a bit too large. It appears though that fragmentation even with block sizes this large primarily occurs if you have mostly / many sequential inserts, and that otherwise the amount of fragmentation over an extended period of use is quite limited.

If you want to track individual page usage without frequent individual bit counting / scanning (i.e. optimize queries for ranges of free pages), a type of Fenwick tree might be useful. It can be used to track individual page usage for n pages in a little below (2 x n) bits, and allows O(log n) queries for ranges.

If e.g. used to track a block of 32 pages, 63 bits are needed. This leaves a single bit free that could be used as a marker, which would allow it to be used in e.g. a "packed" sequential page usage tracking list that contains the following types of entries. E.g.

I added a simple example implementation of such a Fenwick tree for a block of 32 pages in a gist https://gist.github.com/anonymous/6998576

Alex, The problem here is that we have two common requirements. We need multiple pages, for the actual data. And we need one page, for the tree structure. That means that whatever we use will require to handle both efficiently. Also, since we require less than 4 MB to handle 100GB db, I don't really see the urgent need to heavily optimize this.

Comment preview