Implementation details: RavenDB Bulk Inserts

With RavenDB Bulk Inserts, we significantly cut the time it takes to insert a boatload of documents into RavenDB: by over an order of magnitude, in fact.

How did we do that? By doing a whole bunch of things, but mostly by being smart about how we use the resources on both the client and the server.

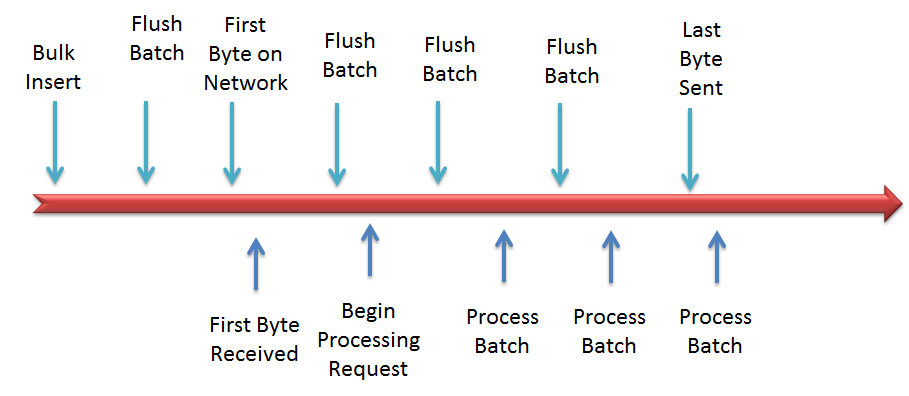

Here is how a standard request timeline looks like:

As you can see, several factors hold us up here. We need to prepare all of the data to send in memory. On the server side, we wait until we have the entire dataset before we can start processing it. That aspect alone costs us a lot.

And because there is a finite amount of memory we can use, we have to split things into batches, and each batch is a separate request that repeats the same song and dance on the network layer.
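As a rough illustration of the standard path described above, here is a minimal Python sketch (not the RavenDB client API): every batch is serialized completely in memory and then sent as its own request. `send_request` is a hypothetical stand-in for an HTTP round trip, and the batch size of 1,024 documents is an arbitrary assumption.

```python
import json
from typing import Iterable, Iterator, List


def batches(docs: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Group documents into lists of at most batch_size items."""
    batch: List[dict] = []
    for doc in docs:
        batch.append(doc)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def send_request(body: bytes) -> int:
    """Hypothetical stand-in for one HTTP round trip; returns the body size."""
    return len(body)


def insert_with_batched_requests(docs: Iterable[dict], batch_size: int) -> int:
    """Standard path: serialize a whole batch in memory, then send it as its
    own request. Returns the number of requests made."""
    requests = 0
    for batch in batches(docs, batch_size):
        body = json.dumps(batch).encode("utf-8")  # entire batch buffered in memory
        send_request(body)  # in this model, the server waits for the full body
        requests += 1
    return requests


docs = [{"id": f"users/{i}", "name": f"user {i}"} for i in range(2500)]
print(insert_with_batched_requests(docs, batch_size=1024))  # 3
```

Every one of those requests pays the full network and server-side setup cost, which is exactly the overhead bulk insert tries to avoid.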

That doesn’t count what we have to do on the server end once we actually have the data: read it, process it, flush it to disk, register it for indexing, call fsync, etc. That isn’t too much, unless you are trying to shove as much data as you can, as fast as you can.

In contrast, this is what a bulk insert looks like on the network:

We stream the results to the server directly, so while the client is still sending results, we are already flushing them to disk.
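The difference can be modeled in a single process, assuming a Python generator stands in for the streamed request body. In this hypothetical sketch (`client_stream` and `server_consume` are illustrative names, not RavenDB code), the server flushes each batch before the client has even produced the next one, and the shared log makes that interleaving visible.

```python
from typing import Iterator, List


def client_stream(count: int, batch_size: int, log: List[str]) -> Iterator[List[dict]]:
    """Client side: yield batches one at a time instead of building the
    whole payload up front."""
    batch: List[dict] = []
    for i in range(count):
        batch.append({"id": f"users/{i}"})
        if len(batch) == batch_size:
            log.append(f"client sent {len(batch)}")
            yield batch
            batch = []
    if batch:
        log.append(f"client sent {len(batch)}")
        yield batch


def server_consume(stream: Iterator[List[dict]], log: List[str]) -> int:
    """Server side: handle each batch as soon as it arrives, while the
    client is still producing the rest. Returns the number of docs stored."""
    stored = 0
    for batch in stream:
        stored += len(batch)  # stand-in for flushing the batch to disk
        log.append(f"server flushed {len(batch)}")
    return stored


log: List[str] = []
total = server_consume(client_stream(5, batch_size=2, log=log), log)
print(total)  # 5
print(log)
```

The log alternates between "client sent" and "server flushed" entries; in the standard path, all of the client work would have to finish before any server work could start.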

To make things even more interesting, we aren’t using standard GZip compression over the whole request. Instead, each batch is compressed independently, which means we don’t depend on the compression routine’s internal buffering, etc. It also means that we get each batch much faster, because a batch doesn’t sit in the compressor’s buffers waiting for more data.
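Here is a minimal sketch of per-batch compression, assuming a simple framing made up for illustration: a 4-byte big-endian length prefix followed by one gzip member per batch. This is not RavenDB's actual wire format. Because every frame is self-contained, the reader can decompress a batch as soon as its bytes arrive, with no state carried over from earlier batches.

```python
import gzip
import io
import json
import struct
from typing import BinaryIO, Iterator, List

HEADER = struct.Struct(">I")  # assumed framing: 4-byte big-endian payload length


def encode_batch(batch: List[dict]) -> bytes:
    """Compress one batch on its own and prefix it with its compressed length."""
    payload = gzip.compress(json.dumps(batch).encode("utf-8"))
    return HEADER.pack(len(payload)) + payload


def decode_stream(stream: BinaryIO) -> Iterator[List[dict]]:
    """Yield each batch as soon as its frame has been read; no cross-batch state."""
    while True:
        header = stream.read(HEADER.size)
        if not header:  # clean end of stream
            return
        (length,) = HEADER.unpack(header)
        payload = stream.read(length)
        yield json.loads(gzip.decompress(payload))


outgoing = [[{"id": "users/1"}], [{"id": "users/2"}, {"id": "users/3"}]]
wire = io.BytesIO(b"".join(encode_batch(b) for b in outgoing))
for batch in decode_stream(wire):
    print(len(batch), "document(s) received")
```

The trade-off is a somewhat worse compression ratio than one long GZip stream, since each batch starts with an empty compression dictionary.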

There are, of course, built-in rate limits to protect us from flooding the buffers, but for the most part, you will have a hard time hitting them.
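One common way to implement such a limit is backpressure through a bounded buffer. The sketch below assumes that approach (RavenDB's actual limits may work differently): a `queue.Queue` with a hypothetical capacity of two batches makes the client block whenever the server falls behind, instead of letting buffered data grow without bound.

```python
import queue
import threading
from typing import List, Optional

MAX_PENDING_BATCHES = 2  # hypothetical limit on batches buffered server-side


def bulk_insert_with_backpressure(batches: List[List[dict]]) -> int:
    """Client (caller) produces batches; a server thread drains them.
    Returns the number of documents stored."""
    pending: "queue.Queue[Optional[List[dict]]]" = queue.Queue(maxsize=MAX_PENDING_BATCHES)
    stored = 0

    def server() -> None:
        nonlocal stored
        while True:
            batch = pending.get()
            if batch is None:  # end-of-stream marker
                return
            stored += len(batch)  # stand-in for writing the batch to disk

    worker = threading.Thread(target=server)
    worker.start()
    for batch in batches:
        pending.put(batch)  # blocks while the buffer is full, throttling the client
    pending.put(None)
    worker.join()
    return stored


print(bulk_insert_with_backpressure([[{"id": f"users/{i}"}] for i in range(10)]))  # 10
```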

In addition, because we are in the context of a single request, we can apply further optimizations. For example, we can do lazy flushes (we don’t have to wait for a full fsync for each batch), because we do the final fsync at the end of the request.
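The durability trade-off can be sketched with a plain file, assuming a simple append-only layout (RavenDB's storage engine is far more involved, and `write_batches` is a hypothetical name). `flush()` only hands the bytes to the operating system, while `os.fsync` waits for them to reach the disk, so the bulk path issues one fsync per request instead of one per batch.

```python
import os
import tempfile
from typing import Callable, List


def write_batches(path: str, batches: List[bytes], sync_every_batch: bool,
                  fsync: Callable[[int], None] = os.fsync) -> None:
    """Append batches to a file; sync per batch (normal path) or once (bulk path)."""
    with open(path, "wb") as f:
        for batch in batches:
            f.write(batch)
            f.flush()  # lazy flush: hand the bytes to the OS, don't wait for the disk
            if sync_every_batch:
                fsync(f.fileno())  # wait for the disk after every batch
        if not sync_every_batch:
            fsync(f.fileno())  # a single durability barrier at the end of the request


def count_fsyncs(batches: List[bytes], sync_every_batch: bool) -> int:
    """Run write_batches against a temporary file and count the fsync calls."""
    calls: List[int] = []

    def counting_fsync(fd: int) -> None:
        calls.append(fd)
        os.fsync(fd)

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "docs.bin")
        write_batches(path, batches, sync_every_batch, fsync=counting_fsync)
        with open(path, "rb") as f:
            assert f.read() == b"".join(batches)  # same bytes either way
    return len(calls)


data = [b"batch-%d;" % i for i in range(100)]
print(count_fsyncs(data, sync_every_batch=True))   # 100
print(count_fsyncs(data, sync_every_batch=False))  # 1
```

Both variants end with the same bytes on disk; the bulk variant simply accepts that nothing is guaranteed durable until the request completes.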

Finally, we created an optimized code path that skips a lot of the things we do in the normal path. For example, by default we assume you are only inserting new documents (this saves a check against the disk, and the insert will throw if the assumption doesn’t hold), and we don’t populate the indexing prefetching queue, etc. All in all, this gave us more than an order of magnitude improvement.
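The insert-only assumption can be illustrated with `sqlite3` standing in for the document store (an assumption for illustration, not how RavenDB stores documents). The normal path reads first to decide between insert and update, and feeds a hypothetical prefetch queue; the bulk path skips both and relies on the `PRIMARY KEY` constraint to raise `sqlite3.IntegrityError` if a document already exists.

```python
import sqlite3
from collections import deque
from typing import Deque, List, Tuple


def open_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE docs (id TEXT PRIMARY KEY, body TEXT NOT NULL)")
    return conn


def normal_put(conn: sqlite3.Connection, doc_id: str, body: str,
               prefetch: Deque[str]) -> None:
    # Normal path: look the document up first to decide between insert and update...
    exists = conn.execute("SELECT 1 FROM docs WHERE id = ?", (doc_id,)).fetchone()
    if exists:
        conn.execute("UPDATE docs SET body = ? WHERE id = ?", (body, doc_id))
    else:
        conn.execute("INSERT INTO docs (id, body) VALUES (?, ?)", (doc_id, body))
    prefetch.append(doc_id)  # ...and hand it to the (hypothetical) indexing prefetch queue


def bulk_insert(conn: sqlite3.Connection, docs: List[Tuple[str, str]]) -> None:
    # Bulk path: assume every document is new, so skip the lookup and the
    # prefetch queue. The PRIMARY KEY constraint raises IntegrityError if not.
    conn.executemany("INSERT INTO docs (id, body) VALUES (?, ?)", docs)


conn = open_store()
prefetch: Deque[str] = deque()
normal_put(conn, "users/1", '{"name": "a"}', prefetch)
bulk_insert(conn, [("users/2", "{}"), ("users/3", "{}")])

rejected = False
try:
    bulk_insert(conn, [("users/1", "{}")])  # violates the insert-only assumption
except sqlite3.IntegrityError:
    rejected = True
print(rejected)  # True
```

The bulk path never pays for the lookup; the cost of a wrong assumption is an exception rather than a silent overwrite.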

Comments

Can you think of actual real-world scenarios where batch insert performance makes any difference at all? I mean, who needs that except for a first-time migration from a different database like SQL Server, in which case it doesn't matter if it takes 1 minute or 10 minutes...

Daniel, there are many cases: you might have an import process running nightly, a customer may upload a file, etc. But don't discount the one-time costs. Sure, it is going to be one time in production, but that ISN'T what happens in dev. Besides, I got tired of hearing "XyzDB does Y inserts, and you do less than that."

Well, I guess that makes sense, although I've never come across the need to increase batch performance, even when importing many thousands of documents, because those operations are usually not time-sensitive. Thanks for the explanation. I especially like that we can now do better in those silly DB comparisons that you mentioned.

I find it helpful, personally. The sooner I can run real queries against real data the better.

Don't discount import jobs that are really large, e.g. importing a planet dump of OpenStreetMap data ... being able to do that within 24 hours instead of a week is quite important.
