Introducing Rhino.Events

After talking so often about how much I consider OSS work to be indicative of passion, I got bummed when I realized that I hadn't actually done any OSS work for a while, if you exclude RavenDB.

I was recently at lunch at a client, when something he said triggered a bunch of ideas in my head. I am afraid that I made for poor lunch conversation, because all I could see in my head was code and IO blocks moving around in interesting ways.

At any rate, I sat down at the end of the day and wrote a few spikes, then I decided to write the actual thing in a way that would actually be useful.

What is Rhino Events?

It is a small .NET library that gives you an embeddable event store. Also, it is freakishly fast.

How fast is that?

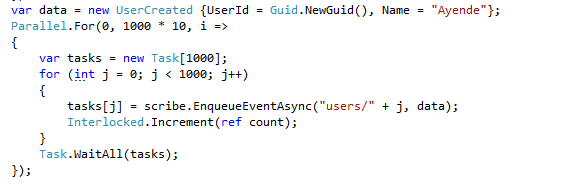

Well, this code writes a fairly non-trivial event to disk 10,000,000 times (that is, ten million times).

It does this at a rate of about 60,000 events per second. And that includes the full life cycle (serializing the data, flushing to disk, etc).

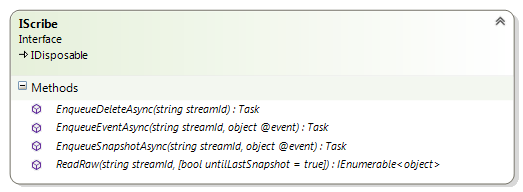

Rhino.Events has the following external API:

As you can see, we have several ways of writing events to disk, always associating them with a stream, or just writing the latest snapshot.

Note that the write methods actually return a Task. You can ignore that Task, if you wish, but this is part of how Rhino Events gets to be so fast.

When you call EnqueueEventAsync, we register the value in a queue and have a background process write all of the pending events to disk. This means that only one thread is actually doing writes, so we can batch all of those writes and get really nice performance out of it.

We can also reduce the number of times that we have to actually flush to disk (fsync), so we only do that when we run out of things to write or at a predefined time (usually after a full 200 ms of non-stop writes). Only after the information has been fully flushed to disk do we set the task status to completed.
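
To make the idea concrete, here is a minimal sketch of that write path, in Python rather than C#. It is not the Rhino.Events code: the names `GroupCommitWriter`, `enqueue` and `flush_count` are made up for illustration, the length-prefixed record format is an assumption, and the 200 ms budget is simply taken from the description above.

```python
import os
import queue
import tempfile
import threading
import time
from concurrent.futures import Future


class GroupCommitWriter:
    """One background thread batches queued events and fsyncs once per batch."""

    def __init__(self, path, max_batch_seconds=0.2):
        self._file = open(path, "ab")
        self._pending = queue.Queue()
        self._max_batch_seconds = max_batch_seconds
        self.flush_count = 0  # exposed only so the batching effect can be observed
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def enqueue(self, payload: bytes) -> Future:
        """Queue one event; the returned Future completes once it has been fsynced."""
        done = Future()
        self._pending.put((payload, done))
        return done

    def close(self):
        self._pending.put(None)  # sentinel: write what is left, then stop
        self._thread.join()
        self._file.close()

    def _run(self):
        stopping = False
        while not stopping:
            item = self._pending.get()  # block until there is work
            if item is None:
                break
            batch = [item]
            deadline = time.monotonic() + self._max_batch_seconds
            # Keep taking events until the queue is empty or the time budget is spent.
            while time.monotonic() < deadline:
                try:
                    item = self._pending.get_nowait()
                except queue.Empty:
                    break
                if item is None:
                    stopping = True
                    break
                batch.append(item)
            try:
                for payload, _ in batch:
                    self._file.write(len(payload).to_bytes(4, "little") + payload)
                self._file.flush()
                os.fsync(self._file.fileno())
            except OSError as error:
                for _, done in batch:
                    done.set_exception(error)
                continue
            self.flush_count += 1
            # Only now, with the data on disk, are the callers told that it worked.
            for _, done in batch:
                done.set_result(None)


def demo():
    path = os.path.join(tempfile.mkdtemp(), "events.log")
    writer = GroupCommitWriter(path)
    futures = [writer.enqueue(b"event-%d" % i) for i in range(1000)]
    for future in futures:
        future.result(timeout=5)  # wait for durability; skip this to fire and forget
    writer.close()
    print(writer.flush_count, "fsync calls for", len(futures), "events")


if __name__ == "__main__":
    demo()
```

The number of fsync calls depends on timing, but it is normally far smaller than the number of events, which is where the throughput comes from.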

This is actually a really interesting approach from my point of view, and it makes the entire thing transactional, in the sense that you can wait to be sure that the event has been persisted to disk (and yes, Rhino Events is fully ACID) or you can fire & forget it, and move on with your life.

A few words before I let you go off and play with the bits.

This is a Rhino project, which means that it is a fully OSS one. You can take the code and do pretty much whatever you want with it. But neither I nor Hibernating Rhinos will be providing support for it.

You can get the bits here: https://github.com/ayende/Rhino.Events

Comments

In the PersistedEventsStorage ctor, creating a thread via Task.Factory.StartNew is not a very good idea, as it will "steal" one from the thread pool, which is subject to constraints and thread injection policies that assume short-lived tasks. It would be better to use the LongRunning option when creating the Task, or to just create a thread explicitly.

Ha! Next time we have dinner, Oren, if I see glazed, wandering eyes, I'll have to find out what new project you're thinking up. :-)

Nice work with Rhino Events.

Isn't PersistedEventsStorage.WriteToDisk() a spinwait?

I would not recommend anyone using this code as it stands for anything more than a sample app or something that doesn't have high requirements. There are quite a few issues in it.

I have always said an event store is a fun project because you can go anywhere from an afternoon to years on an implementation.

I think there is a misunderstanding of how people normally use an event stream for event sourcing. They read from it. Then they write to it. They expect optimistic concurrency in case another thread has read from and then written to the same stream in the meantime. This is currently not handled. It could be handled as simply as checking the expected previous event, but this wouldn't work because the file could be scavenged in between. The way this is generally worked around is a monotonically increasing sequence that gets assigned to each event. This would be relatively trivial to add (just another attribute).
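
As a rough illustration of the sequence-number approach, here is an in-memory sketch in Python. Nothing in it comes from Rhino.Events: `VersionedStreams`, `ConcurrencyError`, `expected_sequence` and `compact` are hypothetical names, and a real store would keep the sequence on disk next to each event.

```python
import threading


class ConcurrencyError(Exception):
    """Raised when a stream changed between the caller's read and its write."""


class VersionedStreams:
    def __init__(self):
        self._events = {}         # stream id -> list of (sequence, payload)
        self._last_sequence = {}  # stream id -> last assigned sequence (0 = empty)
        self._lock = threading.Lock()

    def read(self, stream_id):
        """Return (events, last_sequence); pass last_sequence back to append()."""
        with self._lock:
            events = list(self._events.get(stream_id, []))
            return events, self._last_sequence.get(stream_id, 0)

    def append(self, stream_id, payload, expected_sequence):
        """Append only if nobody has written since expected_sequence was read."""
        with self._lock:
            current = self._last_sequence.get(stream_id, 0)
            if current != expected_sequence:
                raise ConcurrencyError(
                    f"{stream_id}: expected {expected_sequence}, found {current}"
                )
            sequence = current + 1
            self._events.setdefault(stream_id, []).append((sequence, payload))
            self._last_sequence[stream_id] = sequence
            return sequence

    def compact(self, stream_id, keep_from_sequence):
        """Drop old events. The counter is untouched, so later checks still work."""
        with self._lock:
            kept = [e for e in self._events.get(stream_id, [])
                    if e[0] >= keep_from_sequence]
            self._events[stream_id] = kept
```

Because the check compares sequence numbers rather than the previous event itself, it keeps working after old events have been scavenged away.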

The next issue is that I can only read the stream from the beginning to the end or vice versa. If I have a stream with 20m records in it, I have read 14m of them, and the power goes out, then when I come back up I want to start from 14m (stream.Position = previous; is a Seek(), and 14m can be very expensive if you happen to be working with files the OS has not cached for you). This is a hugely expensive operation to redo, and the position I could have saved won't help me, as the file could get compacted in between. Allowing arbitrary access to the stream is a bit more difficult. The naive way would be to use something like a SortedDictionary or a dictionary of lists as an index, but you will very quickly run out of memory. B+Trees/LSM are quite useful here.
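
For a feel of what resuming by sequence number rather than by file position could look like, here is a small Python sketch. It uses a sparse in-memory index, which is a simpler technique than the B+Tree or LSM structures mentioned above, and it assumes the index is rebuilt whenever the file is compacted (not shown). `SparseIndexedLog`, `every` and the record layout are all hypothetical, and a `bytearray` stands in for the file on disk.

```python
import bisect
import struct

HEADER = struct.Struct("<QI")  # sequence number (8 bytes), payload length (4 bytes)


class SparseIndexedLog:
    def __init__(self, every=1000):
        self._log = bytearray()     # stands in for the on-disk file
        self._every = every         # index one record out of this many
        self._index_sequences = []  # sorted sequence numbers of indexed records
        self._index_offsets = []    # byte offset of each indexed record
        self._next_sequence = 1

    def append(self, payload: bytes) -> int:
        sequence = self._next_sequence
        if (sequence - 1) % self._every == 0:
            self._index_sequences.append(sequence)
            self._index_offsets.append(len(self._log))
        self._log += HEADER.pack(sequence, len(payload)) + payload
        self._next_sequence += 1
        return sequence

    def read_from(self, start_sequence: int):
        """Yield (sequence, payload) for every record at or after start_sequence."""
        if not self._index_offsets:
            return
        # Find the last indexed record at or before the requested sequence.
        slot = bisect.bisect_right(self._index_sequences, start_sequence) - 1
        position = self._index_offsets[max(slot, 0)]
        while position < len(self._log):
            sequence, length = HEADER.unpack_from(self._log, position)
            position += HEADER.size
            if sequence >= start_sequence:
                yield sequence, bytes(self._log[position:position + length])
            position += length
```

With `every=1000`, resuming at record 14,000,000 scans at most 999 records before the first useful one, and the index holds one entry per thousand events instead of one per event.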

Even with the current index (stream name to current position) there is a fairly large problem as it grows. With 5+m streams you will start seeing long pauses from serializing out the Dictionary. At around 50m your process will blow up due to the 1gb object size limit in the CLR.

Similar to the index issue is that with a dictionary of all keys being stored in memory and taking large numbers of writes per second it is quite likely you will run out of memory if people are using small streams (say I have 10000 sensors and I do a stream every 5 seconds for their data to partition). Performance will also drastically decrease as you use more memory due to GC.

A more sinister problem is the scavenge / compaction. It stops the writer. When I have 100mb of events this may be a short pause. When I have 50gb of events this pause may very well turn into minutes.

There is also the problem of needing N * N/? disk space in order to do a scavenge (you need both files on disk). With write speeds of 10MB/second it obviously wouldn't take long to make these kinds of huge files especially in a day where we consider a few TB to be small. The general way of handling this is the file gets broken into chunks then each chunk can be scavenged independently (while still allowing reads off it). Chunks can for instance be combined as well as they get smaller (or empty).

Another point to bring up is someone wanting to write N events together in a transactional fashion to a stream. This sounds like a trivial addition, but it's less than trivial to implement (especially with some of the other things discussed here). As was mentioned in a previous thread, a transaction starts by definition when there is more than one thing to do.

There are decades' worth of prior art in this space. It might be worth spending some time looking through it. LSM trees are a good starting point, as is some of the older material on various ways of implementing transaction logs.

Greg

Interesting bits of code. It's fun to play around with it and see how you implemented the different pieces. However, I wonder in which type of scenarios you would consider using it?

+1 Greg.

Last week I also raised concerns and suggested looking at how current implementations of LSM's are built because they make some very important design decisions that aren't implemented here.

Haha, "poor lunch conversation".

Thanks for the library, want to go benchmark it in my dev environment and see what kind of stats I can squeeze out of it :P
