Code crimes, because even the Law needs to be broken

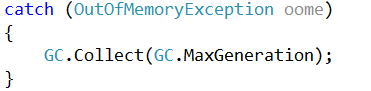

In 99.9% of the cases, if you see this, it is a mistake:

But I recently ran into a case where this is actually a viable solution to a problem. The issue occurs deep inside RavenDB, and it boils down to a managed application not really having any way of assessing how much memory it is using.

What I would really like to be able to do is to specify a heap (using HeapCreate) and then say: “The following objects should be created on this heap”, which would allow me to control exactly how much memory I am using for a particular task. In practice, that isn’t really possible (if you know how, please let me know).

What we do instead is to use as much memory as we can, based on the workload that we have. At a certain point, we may hit an Out of Memory condition, which we take to mean not that there is no more memory, but that the system is telling us to calm down.

By the time we reach the exception handler, we have already lost all of the current workload, which means that there is now a LOT of garbage around for the GC. Before, when it tried to clean up, we were actually holding on to a lot of that memory, but not anymore.

Once an OOME has happened, we can adjust our own behavior: we know that we are consuming too much memory and should be more conservative about our operations. We basically reset the clock and become twice as conservative about using more memory. And if we get another OOME? We become twice as conservative again, and so on.

Eventually, even under high workloads and a small amount of memory, we complete the task, and we can be sure that we make effective use of the system resources available to us.
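As a rough illustration of the back-off described above, here is a minimal sketch in Python. It is not RavenDB's actual code: the names `MemoryBudget`, `back_off`, and `process_all` are hypothetical, "twice as conservative" is assumed to mean halving a batch size, and Python's `MemoryError` stands in for .NET's `OutOfMemoryException`. The lower bound on the batch size is also an assumption of the sketch.

```python
class MemoryBudget:
    """Hypothetical helper: tracks how many items we dare to process at once."""

    def __init__(self, initial_batch_size, min_batch_size):
        self.batch_size = initial_batch_size
        self.min_batch_size = min_batch_size

    def back_off(self):
        # Become twice as conservative, but never go below the floor.
        self.batch_size = max(self.min_batch_size, self.batch_size // 2)


def process_all(items, process_batch, budget):
    """Process items in batches, retrying a failed batch with a smaller size."""
    position = 0
    while position < len(items):
        batch = items[position:position + budget.batch_size]
        try:
            process_batch(batch)
        except MemoryError:
            if budget.batch_size <= budget.min_batch_size:
                raise  # already at the floor, so this failure is real
            budget.back_off()
            continue  # retry from the same position with a smaller batch
        position += len(batch)
```

The failed batch is simply retried at the smaller size, so the work that was lost when the exception was thrown is redone rather than skipped.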

Comments

Do you try to scale the memory consumption up again? If not, couldn't you run into what could be considered memory starvation? Say I once in a while run a longish-running, very memory-consuming task on my RavenDB server. If that coincides with RavenDB doing this optimization, couldn't RavenDB's memory usage be scaled back too much? Or do you have a bare minimum you use to ensure reasonable performance?

Simon, Yes, there are minimum boundaries under which we will not go down.

Generally speaking recovering from asynchronous exceptions in .NET is not recommended - depending on where they happen they can corrupt the app domain or process. This is discussed here: http://msdn.microsoft.com/en-us/magazine/cc163298.aspx

The better approach (also discussed in the article) is to use the MemoryFailPoint class (http://msdn.microsoft.com/en-us/library/system.runtime.memoryfailpoint.aspx) to predict if an allocation will fail. This is not guaranteed because of race conditions etc, but is better than just running up to the limit and then failing.

"In 99.9% of the cases, if you see this, it is a mistake"

There are all kinds of "best practices" and "never use" guidelines on the internet simply because people fail to explain "the why", "the how" or "the what" and simply limit themselves to saying "just don't do that".

Catching OOM exceptions is indeed dangerous if not done right; it's one of the more dangerous things to do if not done properly. But like many other things considered dangerous, it can be very helpful in certain situations.

The point: I really hate mindless guidelines and rules that simply state "don't do that, it's bad".

Have you tried custom CLR hosting for your preferred (HeapCreate etc.) solution?

Paul, I would love to be able to do that, but I don't know in advance how much memory I'll be using.

Cynic, I thought about this, but this gets complex REALLY fast, and considering that we want to be able to run on IIS, Mono, etc., I don't think it is worth it.

Ayende,

Interesting point; how do you not know how much memory you need, at least within an order of magnitude? You can make multiple calls to MemoryFailPoint and reading the MSDN topic it is about throttling the amount of memory you allocate.

I clearly don't understand the context of your reason for doing this, but have bad experience of doing what you are doing and the process becoming corrupt, so maybe that reflects my dislike of the construct.

Paul, Simple scenario, I need to index some number of documents. I don't know what their size is (I don't know how many, for that matter), and I need to execute a user supplied function over them, and index the results. I don't know what the results of the user supplied function would be, or what would be the cost of indexing things (is it a single term, analyzed, etc).

So you could know the document size when they request the document to index?

You could also build up a heuristic of the amount of memory required for their function by comparing the amount of memory consumed before/after for a given document size.

I agree it wouldn't be a perfect measure, but as an order of magnitude it could work.

You can try to use a MemoryFailPoint, estimating the amount of memory that you require for the task. It will fail with an InsufficientMemoryException if you try to allocate more than expected during the lifetime of the MemoryFailPoint object. http://msdn.microsoft.com/en-us/library/system.runtime.memoryfailpoint.aspx

Paul, Not really. I can tell how much a document is on disk, but I don't know how much it is in memory (not the same), and I don't have any way of measuring the amount of data that the UDF is using, nor how much mem is consumed in the actual indexing.

I remember a presentation from the Chief Architect of Myspace (a couple of years back) where they ran into memory issues in their caching solution. So they built a custom memory management system where they allocated a number of huge binary arrays and then just serialized/deserialized the objects to and from those huge arrays.

It sounded crazy at the time, but they were on a crazy scale of course.

Ayende, so do these UDFs run in the main application memory or in a separate AppDomain?

Jesse, Same AppDomain, the cost of marshaling across app domains is too expensive.

Hmmm.. I wonder what Ricky Leeks would think about this. :) http://www.dotnetrocks.com/default.aspx?ShowNum=760

How do you make sure that you handle OOM exceptions in all RavenDB threads? Do you wrap everything in try {} catch(OOM)?

Rafal, No, just the places that I know are likely to cause the issue. Other than that, it is wrapped in standard exception handling and that can take care of that.

This is a very interesting problem. I wonder if it would be useful to have a community standardized API (akin to CommonServiceLocator) with which to query the resource consumption of the process, with OS-specific implementations? Regarding your current solution, it does seem like a time-bomb as any allocation may throw (as in a method call that needs to allocate memory in which to JIT code).

I do not buy that you do not know how much memory you can allocate. Under 64 bit there is virtually no limit until the page file is full. But you do not want to page out the managed heap, because each GC needs nearly all pages again. For 32 bit there is a limit of around 1.1-1.3 GB of private bytes. You should set a MemoryFailPoint in this region to stay clear of bad surprises. Your current approach assumes that you are faster if you can consume more memory. But this assumption breaks down as soon as the system is spending large amounts of time paging data into and out of the page file to complete a full GC.

It could be as easy as checking Memory: Page Faults/sec to see whether the system is already swapping too much. At that point I would throttle my activity and not continue to consume more memory. This will serve you much better than exhausting the complete page file. You can then treat an OOM as a really bad error and stop working. You could continue working if the OOM happens on a pure indexing thread and you know which data is corrupt. On any other thread you should terminate your app.

So, why can't you create a managed C++ helper DLL to call HeapCreate() [& other low level functions] and manage what you need from C++? If I'm not mistaken, you can link up managed C++ DLLs in C#.

Brian, Oh, I can certainly do that, no need for C++, you can do that with PInvoke. Problem is that I can't force .NET to allocate on that heap.
