In the previous post I outlined the requirements we have for FastPFor in RavenDB. Now I want to dig into the actual implementation. Here is the shape of the class in question:

The process starts when we start encoding the items. Each call to encode is going to process a different posting list, and it is expected that a single instance will process many posting lists during a single indexing run.

Here is the internal state of this class:

Here is how we start the process:

As you can see, there isn’t much here. We initialize the state of exceptions and exceptionsStarts (I’ll discuss these in more detail later in this post) and then ensure that we have a big enough buffer to encode the data. Note that we retain a pointer to the original entries that we were passed; that will be important later as well.

Here is the code of the encoding process:

I create a couple of vector variables, one to hold the first item in the list and the other to hold uint.MaxValue. Then I start processing the buffers in blocks of 256 items.

A good resource for using vectors and SIMD in C# can be found here.

The classic FastPFor uses vectors of 128 bits (although there are implementations of up to 512 bits). I chose to use Vector256 for the implementation. Today, pretty much any x64 machine is going to have native support for AVX2 (shipping since 2013!). On ARM, Vector256 operations are implemented using Vector128, so they are still vectorized there as well.

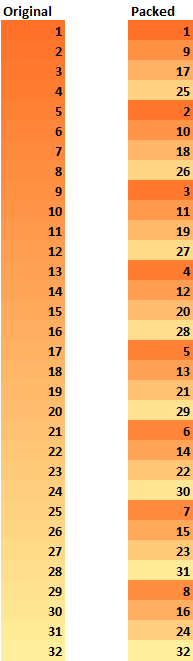

What this means is that we can process 256 numbers as a block. But what are we doing with them? Look at the inner loop, where you can see some interesting details. We are using shuffle instructions as well as masking to shift the current value and add the previous one.

In other words, let’s assume that we have the following input array: 1,2,3,4,5,6,7,8 – using longs, so Vector256 will contain 4 elements.

On line 5, we create the prev vector and assign it to have 4 copies of the first item in the array:

prev = [1,1,1,1]

On line 13, we load the 4 elements from the array into cur, giving us:

cur = [1,2,3,4]

On line 14, we shuffle both the prev and the cur values, here are intermediate states:

curShuffled = [0,1,2,3] – note that we have 0 at the start because of the mask

prevShuffled = [1,0,0,0] – the last three items are zero because of the mask.

We then OR those two intermediates together, giving us:

mixed = [1,1,2,3]

We subtract that from cur to get the delta. The idea is that we massively reduce the number of operations that we have to perform to compute the delta of the values.
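The walkthrough above can be sketched in a few lines. This is a minimal illustration, not the RavenDB code: the class and variable names are hypothetical, it assumes .NET 7 or later (for the cross-platform `Vector256.Shuffle`), and it assumes the input length is a non-zero multiple of 4.

```csharp
using System;
using System.Runtime.Intrinsics;

public static class DeltaSketch
{
    // Hypothetical helper, not the RavenDB code: computes running deltas
    // four longs at a time. Assumes input.Length is a non-zero multiple of 4.
    public static long[] RunningDeltas(long[] input)
    {
        var output = new long[input.Length];
        // Seed prev with copies of the first item, so the first delta is 0.
        var prev = Vector256.Create(input[0]);
        var keepFirst = Vector256.Create(-1L, 0L, 0L, 0L);
        var dropFirst = Vector256.Create(0L, -1L, -1L, -1L);
        var shiftRight = Vector256.Create(0L, 0L, 1L, 2L);
        var lastToAll = Vector256.Create(3L, 3L, 3L, 3L);

        for (int i = 0; i < input.Length; i += 4)
        {
            var cur = Vector256.Create(input, i);
            // [c0,c1,c2,c3] -> [0,c0,c1,c2]
            var curShuffled = Vector256.Shuffle(cur, shiftRight) & dropFirst;
            // [p0,p1,p2,p3] -> [p3,0,0,0]
            var prevShuffled = Vector256.Shuffle(prev, lastToAll) & keepFirst;
            var mixed = curShuffled | prevShuffled;
            (cur - mixed).CopyTo(output, i);
            prev = cur;
        }
        return output;
    }
}
```

Calling `DeltaSketch.RunningDeltas(new long[] { 1, 2, 3, 4, 5, 6, 7, 8 })` goes through exactly the intermediate states listed above for the first four items, with a single subtraction producing four deltas at once.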

On lines 19..22 we check if any of the deltas is greater than uint.MaxValue, we’ll dig into that in a bit.

The last part of the loop is when we deal with deltaInts, where we are doing some interesting things. We are pulling the low bits from all four elements in the vector to the start of the vector. Then we write the deltas to the output entries buffer.

However, we write 8 values but increment the position by only 4, so the next loop iteration overwrites the last 4 of them. The output buffer is sized based on the input entries buffer, so we are guaranteed to have enough space, and the double writes give us better performance.
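To make the overlapping writes concrete, here is a rough sketch under stated assumptions: the names are hypothetical, the deltas are assumed to already fit in 32 bits, the length is a multiple of 4, and the machine is little-endian (so the low half of each long comes first when the vector is viewed as ints). It requires .NET 7 or later.

```csharp
using System;
using System.Runtime.Intrinsics;

public static class NarrowSketch
{
    // Hypothetical helper: narrows deltas that fit in 32 bits from long to int.
    // Assumes deltas.Length is a multiple of 4 and a little-endian machine.
    public static int[] Narrow(long[] deltas)
    {
        // Four slots of padding absorb the final 8-wide store.
        var buffer = new int[deltas.Length + 4];
        // Viewed as ints, the low half of long k sits at int index 2 * k.
        var lowHalves = Vector256.Create(0, 2, 4, 6, 0, 0, 0, 0);
        int pos = 0;
        for (int i = 0; i < deltas.Length; i += 4)
        {
            var asInts = Vector256.Create(deltas, i).AsInt32();
            // Store 8 ints, of which only the first 4 are meaningful...
            Vector256.Shuffle(asInts, lowHalves).CopyTo(buffer, pos);
            // ...so advance by 4 and let the next store overwrite the rest.
            pos += 4;
        }
        return buffer.AsSpan(0, deltas.Length).ToArray();
    }
}
```

The trade-off is a little slack at the end of the buffer in exchange for always issuing full-width stores, with no special case for half-filled vectors.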

The end result of the inner loop is that we compute a running delta over the items, converting them from longs to ints. Then we can call the ProcessBlock() method to actually run the core of the FastPFor algorithm:

I already explained how this works in this post.

It is important to understand that we have multiple data channels that we operate on here:

- The entries output, where we write the packed bits

- Metadata, where we describe each block

- Exceptions, where we store the exception data

The encoding process runs through all of them, but isn’t actually going to be writing them anywhere; that happens later.

We process the entries in the array in blocks of 256 numbers each, but what happens when we get to the end? The number of entries may not be a multiple of 256, after all. For the leftover items, we use simple delta encoding plus variable-sized integers. Nothing really fancy is needed at this point, since that remainder is very small.
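As an illustration of that tail handling, here is a minimal sketch of delta encoding followed by 7-bit variable-sized integers. The helper name and signature are hypothetical, and it assumes the values are sorted in ascending order, so every delta is non-negative.

```csharp
using System;
using System.Collections.Generic;

public static class TailSketch
{
    // Hypothetical helper: delta-encodes the leftover items (fewer than 256)
    // against the last value of the previous block, as 7-bit varints.
    public static byte[] EncodeTail(long baseline, ReadOnlySpan<long> tail)
    {
        var output = new List<byte>();
        long prev = baseline;
        foreach (long value in tail)
        {
            // Assumption: input is sorted, so the delta is never negative.
            ulong delta = (ulong)(value - prev);
            prev = value;
            while (delta >= 0x80)
            {
                output.Add((byte)(delta | 0x80)); // low 7 bits + continuation flag
                delta >>= 7;
            }
            output.Add((byte)delta);
        }
        return output.ToArray();
    }
}
```

With a baseline of 10 and a tail of 11 and 310, the deltas are 1 and 299, which encode as one byte and two bytes respectively.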

Finally, we run over all the exceptions data that we created during the encoding process and compute their size. The final result we have is the total size we would need to store all the encoded data as a single buffer.

What about handling numbers whose delta is greater than uint.MaxValue?

You can see that we expect this to be rare, so we handle that to be on the safe side, but aren’t trying to be too optimal about it. We simply take the high portion of the current delta and write it to the metadata. That is wasteful in terms of space, but it is simple and doesn’t require complex code. We’ll see how that is being used during the decoding process in the next post.
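The idea of carrying the high portion separately can be sketched as follows. This only shows the split and the matching join; the names are hypothetical, and how the value is actually flagged in the metadata is not shown here.

```csharp
public static class LargeDeltaSketch
{
    // Hypothetical helper: splits a delta into the low 32 bits (which go
    // through the normal path) and the high 32 bits (stored on the side).
    public static (uint Low, uint High) Split(long delta)
    {
        return ((uint)delta, (uint)((ulong)delta >> 32));
    }

    // The decoder's view: glue the two halves back together.
    public static long Join(uint low, uint high)
    {
        return (long)(((ulong)high << 32) | low);
    }
}
```

For a delta of `uint.MaxValue + 5L`, the low part is 4 and the high part is 1, and `Join(4, 1)` gives the original value back.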

Finally, it is now time to actually write things out to the buffer. We have to be careful here, since we need to support partial writes. Let’s see how that works:

We define a header for the encoded data, which looks like this:

The header contains the initial value of the encoded data, the exceptions bitmap (discussed here) and the exception and metadata offsets. Because we always limit the size of the buffer to a maximum of 8KB, I used ushort here.

The first value we set in the header is the baseline, which requires a bit of explanation. When we encode the data, we start from the very first item in the list and go forward. The problem with doing things in this manner is that when we need to split the encoded data, the second part must be decoded relative to the last value that was encoded before the split. For that reason, the baseline is either the first item in the entries array or the item from the end of the previous block that we consumed.
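A tiny scalar sketch shows why the baseline matters when the deltas are split across two writes. The decoder here is hypothetical and only meant to demonstrate the arithmetic.

```csharp
using System;

public static class BaselineSketch
{
    // Hypothetical helper: rebuilds values from deltas, starting at a baseline.
    public static long[] Decode(long baseline, ReadOnlySpan<int> deltas)
    {
        var values = new long[deltas.Length];
        long current = baseline;
        for (int i = 0; i < deltas.Length; i++)
        {
            current += deltas[i];
            values[i] = current;
        }
        return values;
    }
}
```

Take the entries 10, 12, 15, 21, whose deltas are 0, 2, 3, 6. If the first write holds the deltas 0, 2 with a baseline of 10 (the first item), the second write must carry a baseline of 12 (the last item consumed) for the deltas 3, 6 to decode back to 15 and 21.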

Next, we need to deal with the exceptions. As a reminder, we have a set of exceptions that are shared across blocks. When we do a partial write, we need to keep track of how many exceptions we consumed from each of the exceptions arrays.

The most interesting part comes when we start iterating over the metadata values, which tell us how to deal with the data:

Here we start with the exceptional cases: a metadata value that marks a delta greater than the max delta, or the varint batch marker (which occurs at the end).

You can see that there isn’t much there, except that we verify that we have enough space for the written buffers. For fun, I’m also using a goto here. It is needed to break out of the loop, since a break statement inside a switch case only exits the switch.
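For readers who haven’t run into this C# quirk, here is a small standalone example, unrelated to the actual encoder, showing a goto used to leave a loop from inside a switch:

```csharp
public static class GotoSketch
{
    // Hypothetical loop: sums markers until a stop marker (0) is seen.
    public static int SumUntilStop(int[] markers)
    {
        int sum = 0;
        foreach (int marker in markers)
        {
            switch (marker)
            {
                case 0:
                    // A plain `break` here would only leave the switch,
                    // and the foreach would carry on with the next marker.
                    goto AfterLoop;
                default:
                    sum += marker;
                    break;
            }
        }
    AfterLoop:
        return sum;
    }
}
```

The alternatives are a boolean flag checked after the switch or extracting the loop into its own method and using return; the goto is simply the most direct option.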

The more interesting aspect happens when we process full blocks of compressed 256 numbers:

This looks like a lot of code, but what it is mostly doing is checking that we have enough space in the buffer for the block we are about to write. Of particular interest to us here is that we may have enough space to write the block, but we also need to ensure that we have space for the metadata and exceptions data that is associated with the current and all previous blocks.
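The shape of that check can be sketched like this. The parameter names and the exact accounting are assumptions for illustration; the only hard fact used is that 256 items packed at a given bit width take `bitsPerItem * 256 / 8` bytes.

```csharp
public static class SpaceCheckSketch
{
    // Hypothetical check: does one more 256-item block fit, given that the
    // metadata and exceptions for every block written so far must fit as well?
    public static bool CanFitBlock(int outputSize, int bytesWritten, int bitsPerItem,
                                   int pendingMetadataBytes, int pendingExceptionBytes)
    {
        int blockBytes = bitsPerItem * 256 / 8; // 256 items, bit packed
        int required = bytesWritten + blockBytes
                     + pendingMetadataBytes + pendingExceptionBytes;
        return required <= outputSize;
    }
}
```

With an 8KB output, 8,000 bytes already written and 50 bytes of pending metadata and exceptions, a 4-bit block (128 bytes) still fits, while an 8-bit block (256 bytes) does not.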

If we have enough space, we continue to write more blocks to the output, or we exit if we are about to run out of room. After the loop, we have the header and compressed integers data in the output buffer, but we still need to write the metadata and exceptions data. This is handled here:

We compute the bitmap of all the exceptions, writing them out as packed segments (basically, 256 blocks of packed bits). Note that we have to account for how many of them we actually write out to the output, since we may have stopped in the middle.

Then we can simply write out the metadata buffer and return the total size we took.

I find it very amusing that we use the same bit packing routines to store the numbers and the exceptions data as well.
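To show why one routine can serve both purposes, here is a deliberately simple scalar bit packer. It is a sketch with hypothetical names, not the vectorized routine the encoder uses, and the bit layout (least significant bit first, values back to back) is an assumption chosen for clarity.

```csharp
public static class BitPackSketch
{
    // Hypothetical scalar packer: stores the low `bits` bits of each value
    // back to back, least significant bit first. Assumes 1 <= bits <= 32.
    public static byte[] Pack(uint[] values, int bits)
    {
        var output = new byte[(values.Length * bits + 7) / 8];
        int bitPos = 0;
        foreach (uint value in values)
        {
            for (int b = 0; b < bits; b++, bitPos++)
            {
                if (((value >> b) & 1) != 0)
                    output[bitPos / 8] |= (byte)(1 << (bitPos % 8));
            }
        }
        return output;
    }
}
```

The packer doesn’t care what the numbers mean. Hand it the deltas of a block with that block’s bit width, or hand it a run of exception values with their own bit width, and it does the same job.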

Overall, most of the code isn’t particularly interesting, but there are a few highlights that I would like to point out:

- We compute the deltas and the narrowing from int64 to int32 on the buffer during the Encode()

- We do the actual bit packing during the Write()

There is an argument to be made that we could do the bit packing during Encode() as well, but I believe it is unlikely to matter.

In the next post, I’m going to be diving into how we implement decoding for FastPFor. That is actually of far more interest to me, since that happens a lot more often. It’s going to be interesting…